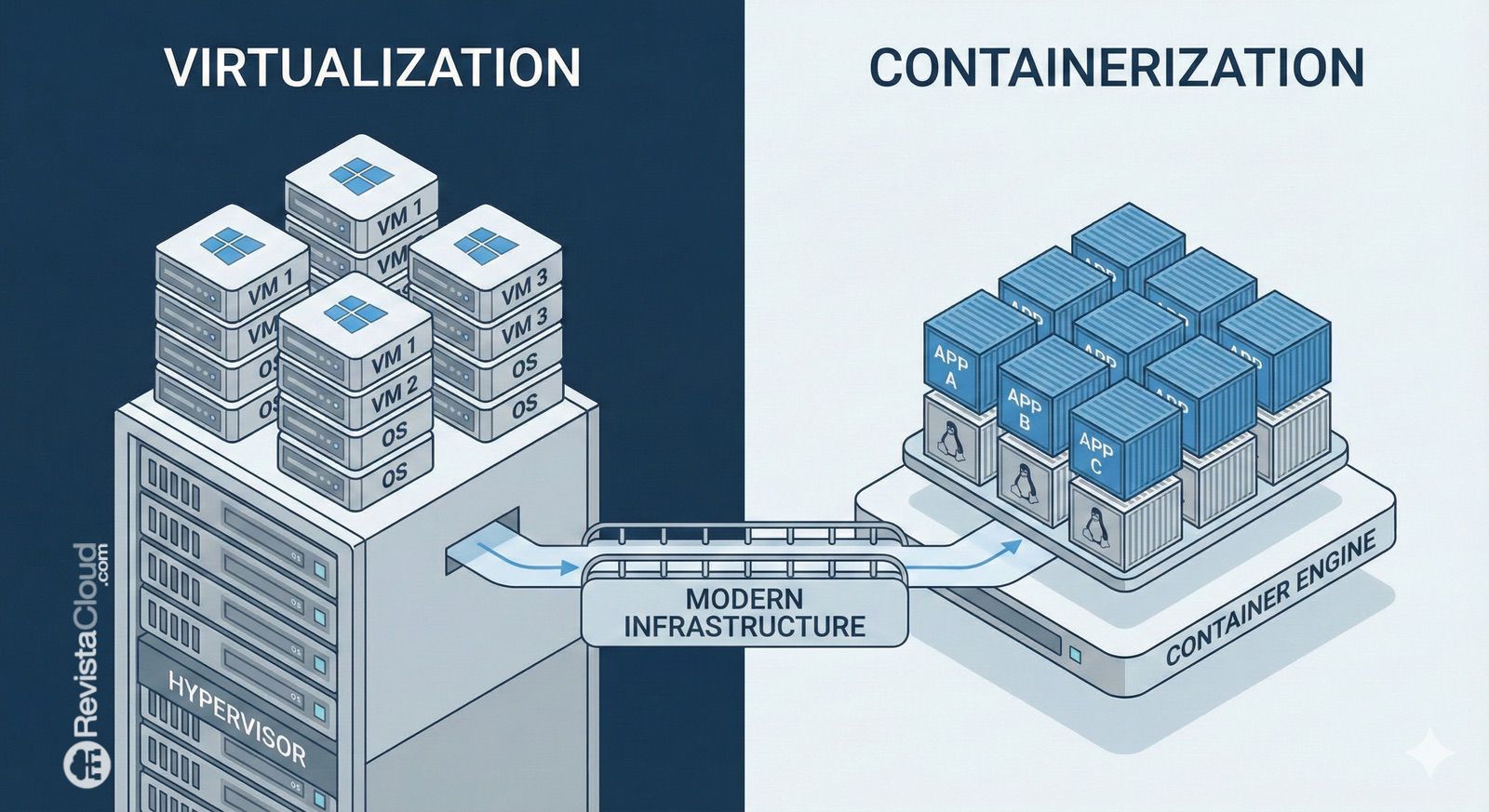

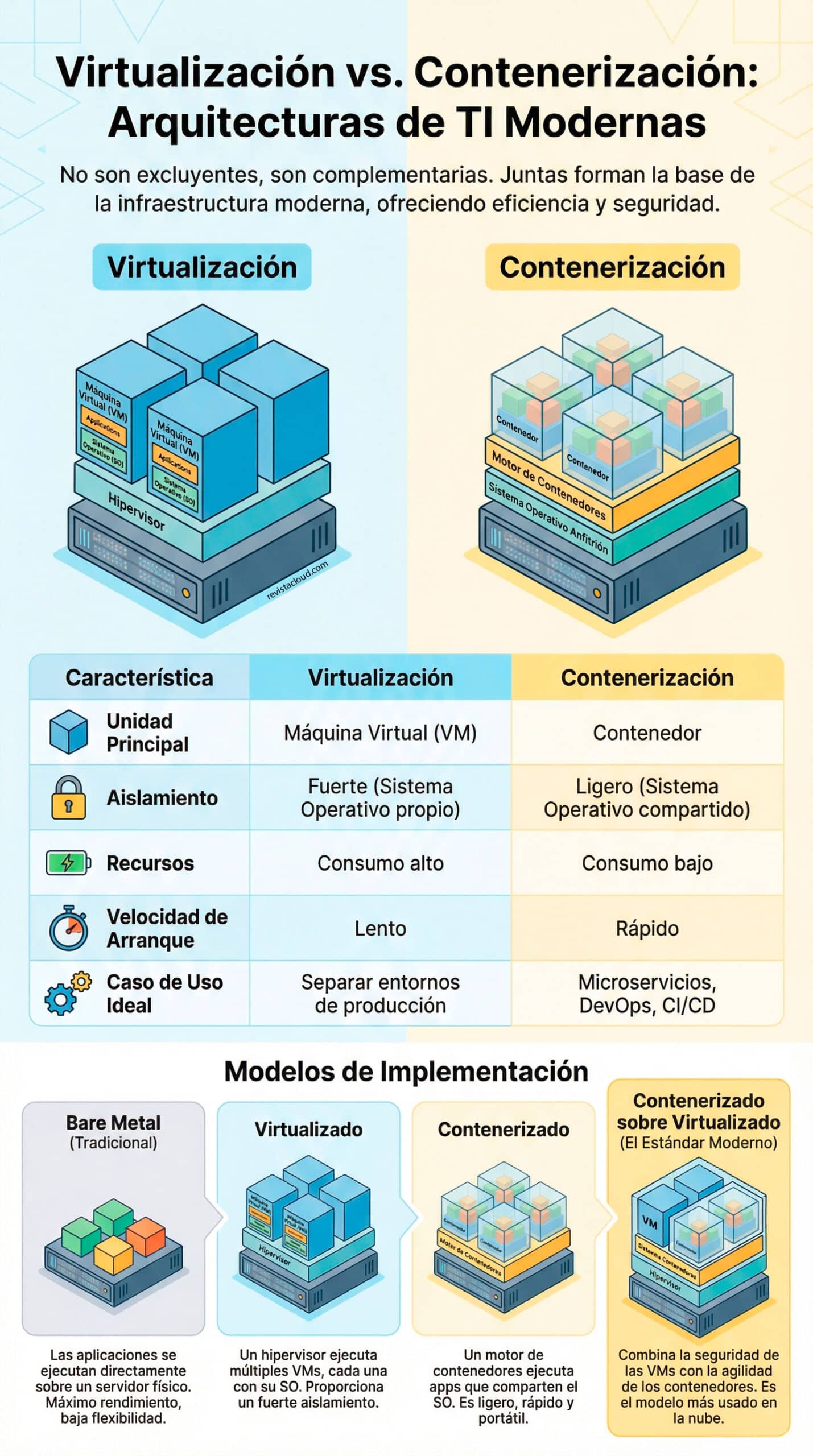

For years, conversations in data centers and IT teams revolved around two extremes: dedicated physical servers for “the important” tasks and, on the other end, virtual machines to consolidate workloads and gain flexibility. Today, however, the landscape is richer. Virtualization and containerization coexist—and in many environments, they are stacked one on top of the other—to respond to the same pressure: doing more with less, deploying faster, and maintaining control over security, costs, and operations.

This comparison is not new, but the context is. The acceleration of hybrid cloud, the rise of Kubernetes, the obsession with automation, and advances in AI have turned these two approaches into an essential kind of “grammar” for understanding modern architectures. Although they are often presented as alternatives, in practice the real question is usually: which combination strikes the right balance for each workload?

Bare Metal: Pure Performance… with Operational Toll

Bare metal (a physical server with the operating system and applications running directly on the hardware) remains the benchmark for performance without intermediate layers. It is the “traditional” approach and is still used for workloads that demand minimal latency, direct hardware access, or highly specific configurations (such as certain databases, high-performance computing, or specialized network and storage needs).

The value is clear: maximum performance and often more predictable behavior. But its limits are just as evident: each server tends to become an island, with uneven resource utilization, slower scaling, and less operational flexibility. In a world where teams need to move workloads, test new versions, and automate deployments quickly, pure bare metal is usually reserved for cases where its benefits justify the management overhead.

Virtualization: Strong Isolation and Consolidation, at the Cost of “Weight”

Virtualization changed the game by allowing a single physical server to host multiple virtual machines (VMs) via a hypervisor. Each VM includes its own guest operating system, providing robust isolation between environments. This has been key at the enterprise level: separating applications, departments, or clients into more secure compartments, with independence at the system level.

Virtualization shines especially in scenarios where you need:

- Clear separation of workloads (production vs testing, different clients, regulated environments).

- Compatibility with heterogeneous operating systems (e.g., coexistence of Linux and Windows).

- Advanced tools for high availability, snapshots, live migration, and centralized management (depending on the platform).

The known trade-off is that each VM carries the cost of its own operating system. This means more CPU and memory consumption, more patches, a larger management surface, and in some cases, slightly lower performance compared to direct execution (depending on workload and configuration).
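To put rough numbers on that overhead, the sketch below compares how much memory goes to operating systems when N workloads each get their own VM versus when they run as containers on one shared kernel. The function names and every figure (1 GiB per guest OS, 0.05 GiB per container, 2 GiB for the host) are hypothetical placeholders chosen for illustration, not measurements; real footprints vary widely by OS, hypervisor, and runtime.

```python
# Back-of-the-envelope comparison of memory spent on operating systems when
# N workloads run as VMs (one guest OS each) versus as containers sharing a
# single host kernel. All figures used below are hypothetical placeholders.


def vm_os_overhead_gib(workloads: int, guest_os_gib: float, host_os_gib: float) -> float:
    """Memory consumed by operating systems when each workload gets its own VM."""
    # The hypervisor host keeps its own footprint, plus one guest OS per VM.
    return host_os_gib + workloads * guest_os_gib


def container_os_overhead_gib(workloads: int, per_container_gib: float, host_os_gib: float) -> float:
    """Memory consumed when workloads run as containers on one shared kernel."""
    # One host OS, plus a small assumed per-container cost (runtime shim, base processes).
    return host_os_gib + workloads * per_container_gib


if __name__ == "__main__":
    n = 20
    vms = vm_os_overhead_gib(n, guest_os_gib=1.0, host_os_gib=2.0)
    containers = container_os_overhead_gib(n, per_container_gib=0.05, host_os_gib=2.0)
    print(f"VMs: {vms:.1f} GiB, containers: {containers:.1f} GiB")
```

With these assumed values, 20 workloads cost about 22 GiB of OS memory as VMs versus about 3 GiB as containers. The point is the shape of the formula (one guest OS per workload versus one shared host), not the specific numbers.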

Still, the balance remains highly attractive for thousands of organizations. It’s no coincidence that VMware, Hyper-V, Proxmox, and other KVM-based platforms are staples of so much modern infrastructure.

Containerization: Speed & Efficiency with a Different Kind of Isolation

Containerization popularized a lighter approach: instead of encapsulating a full operating system per application, containers share the host OS kernel and package the application with its dependencies. The result is a much more portable unit, faster to start, and easier to replicate.

This is where engines and runtimes come into play: Docker, historically the most popular choice in development environments, and runtimes such as containerd and CRI-O, which are widely used in production, especially in Kubernetes clusters.

Their value proposition can be summarized in three ideas:

- Efficiency: less overhead than traditional VMs.

- Speed: deployments and scaling are much faster.

- Portability: “it works the same” across different environments, as long as the underlying OS and runtime are compatible.

That’s why containerization has become the natural language for microservices, CI/CD pipelines, DevOps teams, and cloud-native platforms.
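The portability caveat (the underlying OS and runtime must be compatible) shows up in how multi-architecture images are published: an OCI image index lists one manifest per platform, each labeled with an `os` and an `architecture`, and the runtime pulls the entry that matches the host. The following is a minimal sketch of that matching step, assuming a simplified index structure and a hand-written architecture alias table; `host_platform` and `pick_manifest` are hypothetical helpers, not part of any container tool's API, and the digests are placeholders.

```python
from __future__ import annotations

import platform

# Hypothetical mapping from platform.machine() values to the architecture
# names used in OCI image indexes. Only a few common cases are covered.
_ARCH_ALIASES = {
    "x86_64": "amd64",
    "amd64": "amd64",
    "aarch64": "arm64",
    "arm64": "arm64",
}


def host_platform() -> tuple[str, str]:
    """Return (os, architecture) for the current host in OCI-style names."""
    system = platform.system().lower()  # e.g. "linux", "windows", "darwin"
    machine = platform.machine().lower()
    return system, _ARCH_ALIASES.get(machine, machine)


def pick_manifest(image_index: dict, os_name: str, arch: str) -> dict | None:
    """Return the first manifest whose platform matches the host, or None."""
    for manifest in image_index.get("manifests", []):
        plat = manifest.get("platform", {})
        if plat.get("os") == os_name and plat.get("architecture") == arch:
            return manifest
    return None


if __name__ == "__main__":
    # A trimmed, hypothetical image index with placeholder digests.
    index = {
        "manifests": [
            {"digest": "sha256:aaa", "platform": {"os": "linux", "architecture": "amd64"}},
            {"digest": "sha256:bbb", "platform": {"os": "linux", "architecture": "arm64"}},
        ]
    }
    os_name, arch = host_platform()
    match = pick_manifest(index, os_name, arch)
    print(f"host={os_name}/{arch} ->", match["digest"] if match else "no compatible image")
```

On a macOS or Windows host this reports `darwin` or `windows`, so a Linux image would not match directly; tools such as Docker Desktop run Linux containers inside a lightweight Linux VM, which is one more case of containers and virtualization stacked together.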

However, it’s important to highlight a frequently debated limitation: isolation. Containers do isolate processes, networking, and the filesystem in very useful ways, but they do not offer the kernel-level separation of VMs: every container on a host shares that host’s kernel. This doesn’t make them “insecure” by default, but it does demand discipline: security policies, permission controls, host hardening, image management, vulnerability scanning, and, when necessary, tools such as seccomp, AppArmor/SELinux, or other restrictive profiles.
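As one example of that discipline, the sketch below builds a Kubernetes Pod manifest (as a Python dict) that applies several of the restrictions mentioned above: the runtime's default seccomp profile, a non-root requirement, no privilege escalation, a read-only root filesystem, and all Linux capabilities dropped. The field names follow the Kubernetes Pod API; the `hardened_pod` helper, the pod name, and the image reference are hypothetical. AppArmor and SELinux settings are left out because their availability depends on the node OS and cluster version.

```python
import json


def hardened_pod(name: str, image: str) -> dict:
    """Build a minimal Pod manifest with restrictive security settings.

    Field names follow the Kubernetes Pod API; this helper, the pod name,
    and the image are illustrative placeholders.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Pod-level defaults: refuse to run as root and apply the
            # container runtime's default seccomp syscall filter.
            "securityContext": {
                "runAsNonRoot": True,
                "seccompProfile": {"type": "RuntimeDefault"},
            },
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Container-level restrictions on top of the pod defaults.
                    "securityContext": {
                        "allowPrivilegeEscalation": False,
                        "readOnlyRootFilesystem": True,
                        "capabilities": {"drop": ["ALL"]},
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # kubectl also accepts JSON manifests, e.g. piped into `kubectl apply -f -`.
    print(json.dumps(hardened_pod("web", "registry.example.com/web:1.0"), indent=2))
```

Because `runAsNonRoot` only verifies the user the container would run as, the image must define a non-root user (or the manifest must set `runAsUser`); otherwise the kubelet refuses to start the container.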

Containers on Virtualization: The Most Common Combination

In practice, the most common model in companies and cloud providers is the hybrid one: containers running inside virtual machines. That is, virtualization first, to isolate environments and simplify operations, and then containerization, to gain agility and efficiency within each domain.

The logic is straightforward:

- VMs provide a stronger perimeter of isolation and a well-known operational framework.

- Containers enable rapid application deployment and scaling, standardized packaging, and automation.

This approach is especially prevalent with Kubernetes, where a cluster typically runs on virtualized (or physical) nodes. For many organizations, this VM layer offers peace of mind: segmentation, control over node OS lifecycle, backup/snapshot capabilities, and a clear boundary between teams or clients.

The result is a “best of both worlds” setup that, despite some extra overhead, fits the operating model the market has embraced: multi-tenant platforms, hybrid environments, staged deployments, and a constant need for automation.
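The idea of the VM as the boundary between teams or clients can be made concrete with a toy scheduler: each VM belongs to one tenant, and a container may only land on a VM owned by its own tenant, even when another tenant's VM has spare capacity. This is a simplified sketch with assumed names (`VM`, `place`, the tenant labels and capacities are all hypothetical); in a real Kubernetes cluster the same effect is typically approached with dedicated node pools plus taints, tolerations, or node affinity rather than custom code.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class VM:
    """A virtual machine acting as a container host, dedicated to one tenant."""
    name: str
    tenant: str
    cpu_capacity: float  # vCPUs available for containers
    containers: list[str] = field(default_factory=list)
    cpu_used: float = 0.0

    def fits(self, cpu: float) -> bool:
        return self.cpu_used + cpu <= self.cpu_capacity


def place(vms: list[VM], container: str, tenant: str, cpu: float) -> VM | None:
    """Place a container on the least-loaded VM that belongs to its tenant.

    The VM is the isolation boundary: a container is never scheduled onto
    another tenant's VM, even if that VM has spare capacity.
    """
    candidates = [vm for vm in vms if vm.tenant == tenant and vm.fits(cpu)]
    if not candidates:
        return None
    # Ties go to the first candidate in list order.
    target = min(candidates, key=lambda vm: vm.cpu_used / vm.cpu_capacity)
    target.containers.append(container)
    target.cpu_used += cpu
    return target


if __name__ == "__main__":
    pool = [
        VM("vm-a1", tenant="client-a", cpu_capacity=4),
        VM("vm-a2", tenant="client-a", cpu_capacity=4),
        VM("vm-b1", tenant="client-b", cpu_capacity=8),
    ]
    for i in range(5):
        vm = place(pool, f"api-{i}", tenant="client-a", cpu=1.5)
        print(f"api-{i} ->", vm.name if vm else "unschedulable")
```

With two 4-vCPU VMs for `client-a`, the fifth 1.5-vCPU container is reported as unschedulable even though `vm-b1` is idle: the tenant boundary takes precedence over utilization, which is the trade-off the VM layer buys.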

So, what should you choose?

There’s no universal answer. The decision usually depends on four variables:

- Performance and latency: if hardware performance is critical, bare metal or highly optimized virtualization.

- Isolation and compliance: if separation is paramount, virtualization remains the de facto standard.

- Agility and scaling: if deployments are frequent and scaling automation-driven, containerization is hard to ignore.

- Operations and tools: what the team is proficient in tends to win, as long as it doesn’t compromise business requirements.
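The four variables can be turned into a rough first-pass heuristic. The sketch below is one possible encoding, with hypothetical flag names and an ordering (performance first, then isolation, then agility and team skills) chosen purely for illustration; real decisions also weigh cost, licensing, and workload specifics that a few booleans cannot capture.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical requirement flags for one workload (illustrative only)."""
    latency_critical: bool = False       # needs direct hardware access or minimal latency
    strict_isolation: bool = False       # compliance or multi-tenant separation
    frequent_deploys: bool = False       # many releases, automation-driven scaling
    team_knows_containers: bool = True   # operational familiarity with containers


def suggest_platform(w: Workload) -> str:
    """Map the four variables to a starting point, not a verdict."""
    # 1. Performance and latency: skip the hypervisor unless isolation is also required,
    #    in which case fall back to performance-tuned virtualization.
    if w.latency_critical and not w.strict_isolation:
        host = "bare metal"
    elif w.latency_critical:
        host = "performance-tuned virtual machines"
    # 2. Isolation and compliance, and the common default: a VM boundary.
    else:
        host = "virtual machines"
    # 3 + 4. Agility and team skills: add containers only if both point that way.
    if w.frequent_deploys and w.team_knows_containers:
        return f"containers on {host}"
    return host


if __name__ == "__main__":
    print(suggest_platform(Workload(frequent_deploys=True)))
```

For instance, a frequently deployed service with no special latency needs comes out as `containers on virtual machines`, matching the hybrid model described earlier.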

The conclusion is not that one replaces the other, but that both have become complementary layers for building more efficient, scalable, and automated infrastructures.

Frequently Asked Questions

What’s the difference between a virtual machine and a container in terms of isolation?

A virtual machine includes its own guest operating system and is isolated at the hypervisor level, whereas a container shares the host OS kernel and isolates processes and resources through kernel features (on Linux, mainly namespaces and cgroups).

When is bare metal preferable over virtualization or containers?

It’s usually suitable for workloads with very strict performance, latency, or direct hardware access requirements (for example, certain databases, high-speed networks, or advanced configurations).

Why do many companies run Kubernetes on virtual machines?

Because VMs add an extra layer of isolation and let teams keep operating with familiar tools (segmentation, snapshots, backup policies, environment or client separation), while Kubernetes provides automation and container scaling.

Does containerization replace VMware, Hyper-V, or Proxmox?

Not necessarily. In many environments, containerization leverages virtualization to reinforce isolation and organization. These technologies often coexist and complement each other rather than compete directly.