Some infographics capture a powerful idea without getting into technical detail: if the world is building data centers as if they were factories, there are a handful of components without which that machinery cannot operate. And in 2026, those components are called memory and storage.

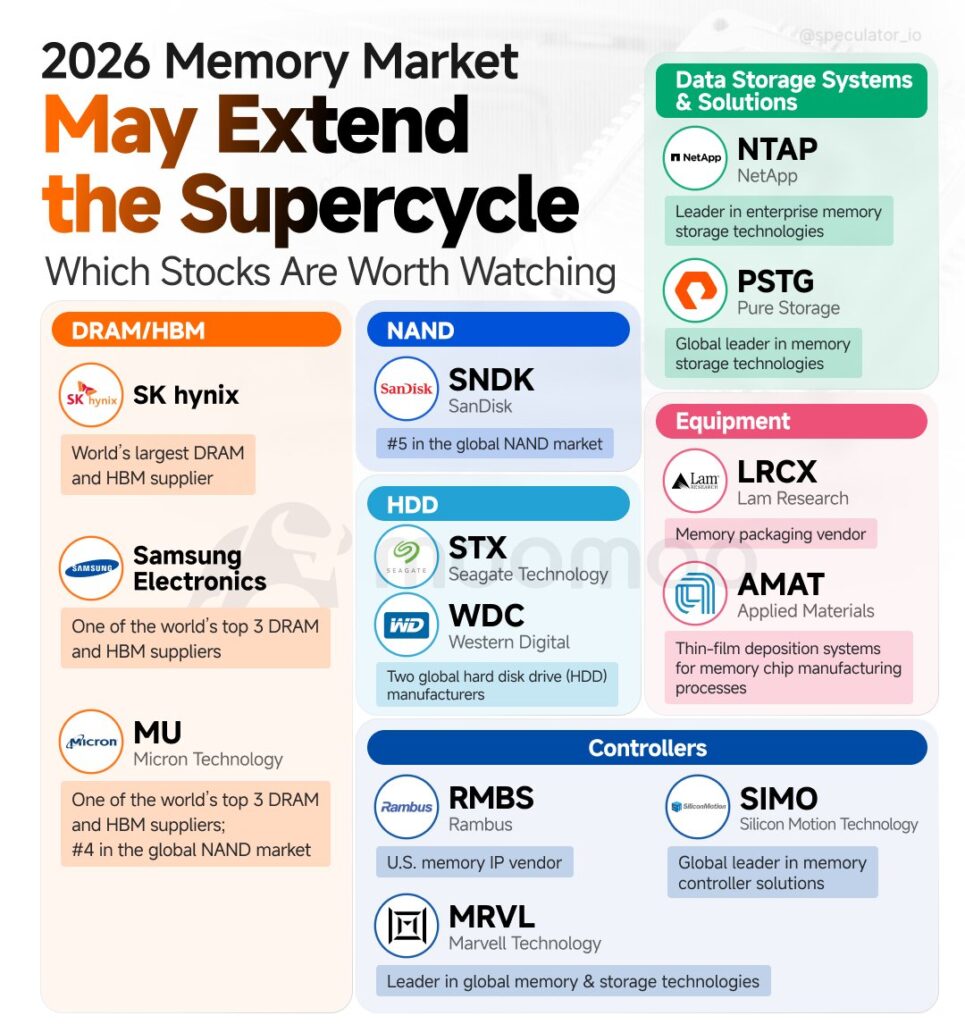

The image circulating these days about “Memory Market 2026” summarizes this with a clear list of categories and companies. It’s not intended as a stock recommendation but rather as a mental map: which players to watch if the memory upcycle continues. The core thesis is simple: the rise of AI and the expansion of cloud computing are not sustained solely by GPUs. They also require HBM, DRAM, NAND, disks, controllers, enterprise systems… and, on top of that, the machines to manufacture and package all of it.

The main reason: AI doesn’t just consume GPUs, it devours bandwidth

Generative AI and reasoning models have spotlighted graphics cards and accelerators. But the industrial reality is less glamorous: an AI cluster can get “stuck” due to a memory bottleneck.

This is where HBM (High Bandwidth Memory) comes in: it is essentially the ultra-fast memory that feeds many training GPUs. The more ambitious the model, the greater the need for bandwidth, parallelism, and continuous data feeding. The infographic highlights SK Hynix, Samsung Electronics, and Micron Technology (MU) as key players, given their role in DRAM/HBM production.

If the pace of AI data center construction continues, it’s logical to expect tailwinds for high-speed memory: without HBM, the real performance of a GPU farm doesn’t scale as advertised.
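The bandwidth argument can be illustrated with a roofline-style estimate, a common back-of-the-envelope model rather than anything taken from the infographic. The sketch below caps attainable throughput at the lower of peak compute and memory bandwidth times arithmetic intensity. The function name and every number in it are hypothetical placeholders chosen for illustration, not specifications of any real GPU or HBM product.

```python
def attainable_tflops(peak_tflops: float, mem_bw_tbps: float, flops_per_byte: float) -> float:
    """Roofline estimate: throughput is limited by compute or by memory traffic.

    peak_tflops    -- peak compute of the accelerator, in TFLOP/s (assumed value)
    mem_bw_tbps    -- memory bandwidth, in TB/s (assumed value)
    flops_per_byte -- arithmetic intensity of the workload, in FLOP per byte moved
    """
    memory_bound_tflops = mem_bw_tbps * flops_per_byte  # TB/s * FLOP/B = TFLOP/s
    return min(peak_tflops, memory_bound_tflops)


# Hypothetical accelerator: 1000 TFLOP/s peak, 3 TB/s of memory bandwidth.
PEAK = 1000.0
BANDWIDTH = 3.0

for intensity in (50.0, 500.0):
    achieved = attainable_tflops(PEAK, BANDWIDTH, intensity)
    limit = "memory-bound" if achieved < PEAK else "compute-bound"
    print(f"{intensity:>6.0f} FLOP/byte -> {achieved:7.1f} TFLOP/s ({limit})")

# Doubling bandwidth doubles throughput for the memory-bound workload only.
print(attainable_tflops(PEAK, 2 * BANDWIDTH, 50.0))
```

Under these assumed numbers, the low-intensity workload reaches only a fraction of the advertised peak, and adding bandwidth (not compute) is what moves it, which is the sense in which a cluster can be limited by memory.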

DRAM: the “invisible” memory supporting the cloud

Beyond HBM, DRAM remains the silent muscle of mainstream servers. Each compute node—in databases, virtualization, analytics, or inference—requires large amounts of RAM to be effective. And when nodes multiply into the thousands (or tens of thousands), DRAM ceases to be just a component and becomes a strategic factor.

This is key because the memory market often moves in cycles: when demand spikes and supply takes time to adjust (due to manufacturing capacity, yields, inventory, or technological transitions), supply tightens and prices tend to rise. This is the terrain where the word “supercycle” appears, not as magic but as a perfect storm of sustained demand and limited capacity.

NAND and SSDs: storage is no longer just “disks,” it’s operational speed

The second major segment on the map is NAND, the technology behind SSDs. The infographic mentions SanDisk (SNDK) as a reference in this segment. The argument here is equally straightforward: modern data centers depend on rapidly moving data. And this means solid-state storage isn’t a luxury but a way to maintain low latency and stable services.

AI intensifies this point. Training, retraining, versioning datasets, logging, storing embeddings, and managing “lakes” of operational data require a faster, and often more expensive, storage stack. In this context, SSDs are not just capacity: they are cluster productivity.

HDDs: the old hard drives remain the kings of cost per terabyte

At the same time, the world has not shifted entirely to SSDs. For cold data, massive backups, archiving, compliance, and historical copies, HDDs remain unbeatable on cost per capacity. That’s why the infographic places Seagate Technology (STX) and Western Digital (WDC) in the HDD segment.

In a data center supercycle, HDDs often reemerge strongly for a reason that’s seldom discussed: even if the computing is “new,” data grows faster than the capacity to manage it. And when the goal is to store large amounts of data, not necessarily to access it at ultra-high speed, the traditional disk still plays a key role.
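The cost-per-capacity argument comes down to simple arithmetic, sketched below as a blended cost for a two-tier setup. The function name, the hot-data fraction, and the relative prices are all hypothetical placeholders in arbitrary units; they are not market figures and do not come from the infographic.

```python
def blended_cost_per_tb(hot_fraction: float, ssd_cost_per_tb: float, hdd_cost_per_tb: float) -> float:
    """Average cost per TB when hot data sits on SSD and the rest on HDD.

    hot_fraction -- share of data kept on SSD, between 0.0 and 1.0 (assumed value)
    *_cost_per_tb -- cost per terabyte in arbitrary relative units (assumed values)
    """
    if not 0.0 <= hot_fraction <= 1.0:
        raise ValueError("hot_fraction must be between 0.0 and 1.0")
    return hot_fraction * ssd_cost_per_tb + (1.0 - hot_fraction) * hdd_cost_per_tb


# Placeholder ratio: SSD capacity assumed to cost 4x HDD capacity per TB.
SSD_COST = 4.0
HDD_COST = 1.0

all_flash = blended_cost_per_tb(1.0, SSD_COST, HDD_COST)
tiered = blended_cost_per_tb(0.25, SSD_COST, HDD_COST)
print(f"all-flash: {all_flash:.2f} units/TB, tiered (25% hot): {tiered:.2f} units/TB")
```

With these assumed inputs, keeping only a quarter of the data on flash cuts the blended cost to less than half of an all-flash layout, which is why cold data tends to stay on disk as total volume grows.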

Enterprise systems: NetApp and Pure Storage, the “final product” companies buy

There’s a significant leap between manufacturing memory chips and solving real business problems. That’s where enterprise storage systems come in, with NetApp (NTAP) and Pure Storage (PSTG) mentioned in the infographic.

This segment benefits when spending shifts from “testing AI” to “operating AI.” In production, concepts less glamorous than model parameters matter more: availability, snapshots, replication, consistent performance, disaster recovery, and operational costs. And that often pushes toward integrated platforms rather than individual components.

Equipment: if there’s a supercycle, those selling “picks and shovels” also benefit

The map includes a block for “Equipment,” featuring Lam Research (LRCX) and Applied Materials (AMAT). The classic tech principle applies here: if the industry decides to expand manufacturing capacity or accelerate technology transitions, suppliers of tools and industrial processes are usually first in line to benefit.

In memory manufacturing, this is especially relevant due to process complexity: deposition, etching, packaging… along with constant pressure to improve performance, density, and energy efficiency. If demand drives investment upward, equipment companies become leading indicators.

Controllers and IP: the “brain” ensuring storage and memory work smoothly

Finally, the infographic groups Rambus (RMBS), Marvell Technology (MRVL), and Silicon Motion (SIMO) under “Controllers.” It’s a category the general public often overlooks, but it is critical: without controllers, protocols, IP, and connectivity, memory and storage can’t deliver their full potential.

In practice, the controller determines how performance is managed, how parallel access and error correction are handled, how the device behaves under load, and, in SSDs, part of the reliability. In data centers, this detail translates directly into cost per operation and service stability.

The conclusion: a new data center is, in essence, a memory factory

The final message from the infographic is clear: the data center boom isn’t just boosting GPU makers. It drives the entire chain that turns electricity into useful computing: fast memory for AI, RAM for servers, SSDs for operations, HDDs for storage, enterprise platforms to manage it, tools to produce it, and controllers to keep everything running.

Whether the “supercycle” extends or not depends on macro factors (capex, energy, interest rates, actual business demand, model efficiency), but as a snapshot of the moment, this map helps make sense of the noise: AI is not just software. In 2026, more than ever, it’s also memory.

Via: X Speculator IO