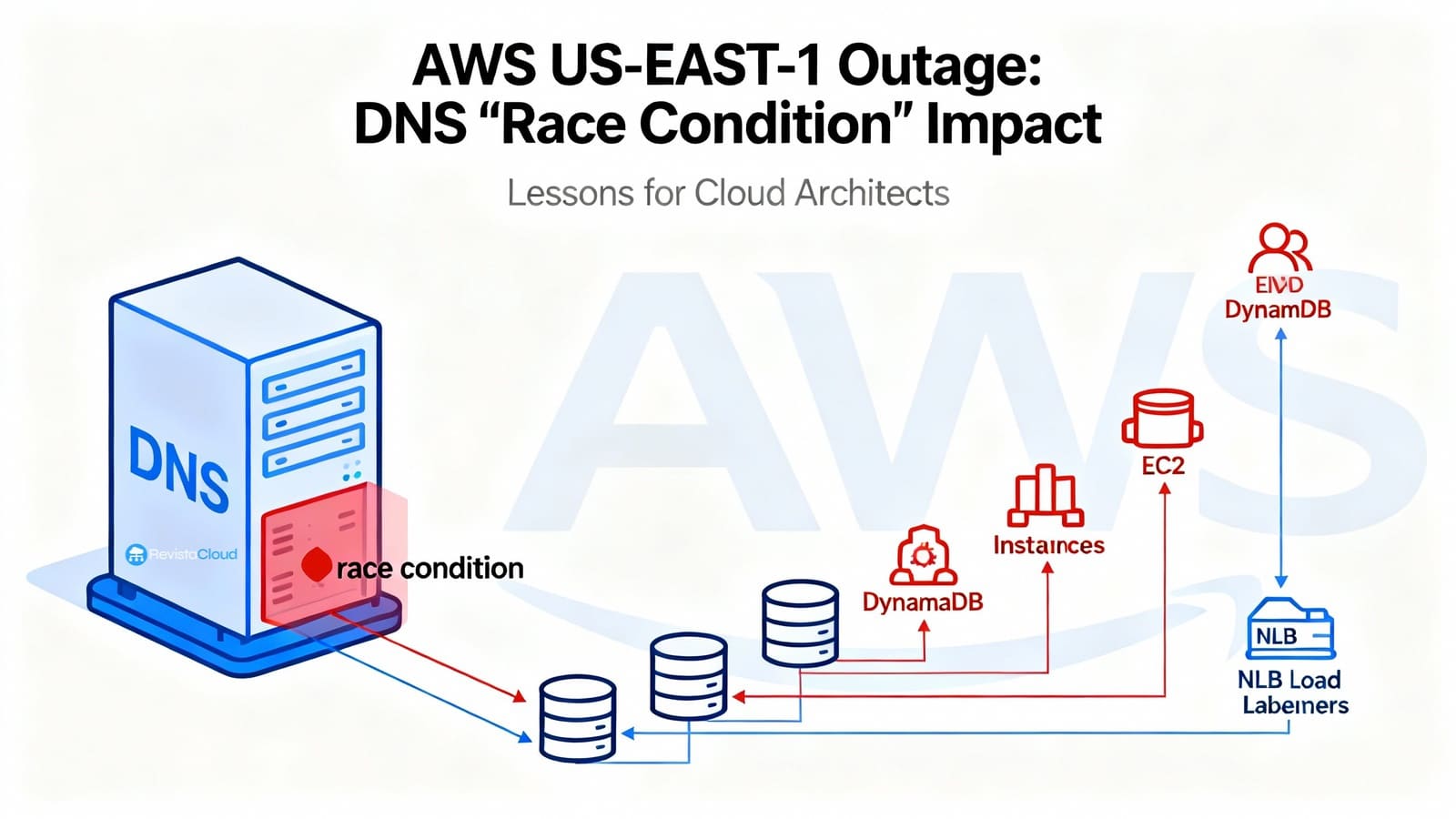

AWS has published its post-mortem report on the outage that took the N. Virginia (us-east-1) region offline on October 19 and 20 and impacted dozens of services. The trigger was as subtle as it was devastating: a race condition in the internal automation that manages Amazon DynamoDB’s DNS. The bug caused an empty DNS plan to be applied to the regional endpoint dynamodb.us-east-1.amazonaws.com, so the name stopped resolving. Without the “catalog” that coordinates much of AWS’s control plane, the domino effect followed quickly: IAM, STS, EC2, Lambda, NLB, ECS/EKS/Fargate, Redshift, and other services returned errors for hours.

The company admits it had to stop the involved automation globally, manually restore the DNS state for DynamoDB, and then progressively unlock subsystems with selective restarts and temporary request throttling to recover the region in a phased manner. The official document details exactly what happened, why it happened, and what changes will be made to prevent recurrence.

Outline of a collapse (overview)

AWS identifies three main impact windows (local time on the US West Coast, PDT):

- 10/19, 23:48 – 10/20, 02:40: DynamoDB experiences increased errors on APIs. Clients and internal AWS services relying on DynamoDB cannot establish new connections because the regional endpoint stops resolving.

- 10/20, 02:25 – 10:36: Launching new EC2 instances fails for hours; when launches resume, some newly created instances lack connectivity due to delays in network state propagation.

- 10/20, 05:30 – 14:09: Network Load Balancer (NLB) logs connection errors across parts of the fleet due to spurious health check failures: capacity is brought online before its network state has fully propagated, health checks fluctuate, and the orchestration layer removes nodes from DNS and adds them back, degrading the data plane.

Simultaneously, services like Lambda (invocations and event sources), ECS/EKS/Fargate (container startup and scaling), Amazon Connect (call/chat handling), STS (short-lived credential issuance), and even Redshift (cluster queries and modifications) were impacted due to dependencies on DynamoDB, new EC2 launches, or proper NLB behavior.

Root cause: Planner, Enactor… and a DNS void

DynamoDB manages hundreds of thousands of DNS records per region to direct traffic to a heterogeneous pool of load balancers (including variants like public endpoints, FIPS, IPv6, account-specific endpoints, etc.). This complexity is controlled by two modules:

- DNS Planner: periodically computes a DNS plan for each endpoint (list of load balancers and weights) based on capacity and health.

- DNS Enactor: applies these plans to Amazon Route 53 via atomic transactions. For resilience, three Enactors operate in parallel—one in each AZ—acting independently.

The bug occurs when a delayed Enactor tries to finalize the application of an old plan at the same time that another up-to-date Enactor executes a new plan and initiates its cleanup of obsolete plans. The improbable but possible sequence of steps was:

- The late Enactor overwrites the regional endpoint plan with an old plan (its earlier check that the plan was newer than the one in place had gone stale by the time it wrote, because of the accumulated delay).

- The up-to-date Enactor performs the cleanup and removes the old plan as “too old”.

- Result: the regional endpoint is without addresses, and the system is in an inconsistent state that blocks further automatic corrections.

- Solution: manual intervention to restore proper state in Route 53 and unlock the cycle.

From that moment, everything depending on DynamoDB resolution started to fail.
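The interleaving above can be replayed in a few lines. This is a toy model written for this article, not AWS's implementation: `DnsState`, `apply_plan`, `cleanup`, the plan numbers, and the IP addresses are all hypothetical, and the real system works with Route 53 transactions rather than an in-memory dictionary.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class DnsState:
    """Toy stand-in for the stored plans plus the plan the endpoint currently uses."""
    plans: Dict[int, List[str]] = field(default_factory=dict)  # plan id -> load balancer IPs
    active_plan: Optional[int] = None

    def resolve(self) -> List[str]:
        # The endpoint serves the active plan; if that plan was deleted, nothing is served.
        return self.plans.get(self.active_plan, [])


def apply_plan(state: DnsState, plan_id: int, seen_active: Optional[int]) -> None:
    """Apply a plan based on a freshness check that may have been made long ago."""
    if seen_active is None or plan_id > seen_active:
        state.active_plan = plan_id


def cleanup(state: DnsState, keep_from: int) -> None:
    """Delete every plan older than keep_from, without checking whether it is active."""
    for plan_id in [p for p in state.plans if p < keep_from]:
        del state.plans[plan_id]


def replay_race() -> List[str]:
    state = DnsState(plans={1: ["10.0.0.1"], 2: ["10.0.0.2"], 3: ["10.0.0.3"]}, active_plan=1)
    stale_view = state.active_plan            # slow Enactor checks: plan 2 is newer than plan 1
    apply_plan(state, 3, state.active_plan)   # up-to-date Enactor applies plan 3
    apply_plan(state, 2, stale_view)          # slow Enactor finishes late and overwrites 3 with 2
    cleanup(state, keep_from=3)               # up-to-date Enactor deletes plans older than 3
    return state.resolve()


print(replay_race())  # the endpoint is left with no addresses
```

In this model, either re-checking freshness at write time or refusing to delete the active plan would break the sequence. Those are illustrative guards only; the post-mortem does not describe the fix at that level of detail.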

EC2: why new instances couldn’t be launched even as “Dynamo was recovering”

Once DynamoDB’s DNS was restored, the next step was to recover the control plane for EC2. Two internal pieces are involved:

- DropletWorkflow Manager (DWFM): manages the physical servers (droplets) hosting EC2 instances and maintains an active “lease” for each droplet.

- Network Manager: propagates the network state (routes, rules, attachments) to instances and appliances.

During DynamoDB’s outage, the periodic checks from DWFM to its droplets failed and leases gradually expired. With DynamoDB back, DWFM attempted to re-establish millions of leases simultaneously; the volume was so large that it caused congestive collapse: queued work timed out before it completed and was retried, and leases expired again. The recovery followed classic SRE practice: throttling incoming work and selectively restarting DWFM hosts to clear queues and reduce latency. Once leases were restored, launches resumed.
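Why throttling helps can be seen in a deliberately simplified queue model. It is not how DWFM works internally: the function name, the tick-based clock, and every number below are assumptions chosen only to show the mechanism, in which work that waits longer than its timeout is wasted and re-queued.

```python
from collections import deque
from typing import Tuple


def reestablish_leases(droplets: int, per_tick: int, timeout: int,
                       admit_per_tick: int, max_ticks: int = 10_000) -> Tuple[int, int]:
    """Return (leases established, ticks used) for a toy lease-recovery queue."""
    waiting = droplets   # droplets not yet admitted to the queue
    queue = deque()      # enqueue tick of each pending request, FIFO
    done = 0
    for tick in range(max_ticks):
        # Admission control: only admit_per_tick new requests may enter per tick.
        admitted = min(waiting, admit_per_tick)
        queue.extend([tick] * admitted)
        waiting -= admitted
        # The service can process per_tick requests in each tick.
        for _ in range(min(per_tick, len(queue))):
            enqueued_at = queue.popleft()
            if tick - enqueued_at > timeout:
                queue.append(tick)   # timed out while queued: the work is wasted and retried
            else:
                done += 1
        if done == droplets:
            return done, tick + 1
    return done, max_ticks


# Everything admitted at once: most requests time out in the queue and keep retrying.
print(reestablish_leases(1000, per_tick=10, timeout=5, admit_per_tick=1000, max_ticks=1000))
# Intake throttled to the processing rate: every request completes.
print(reestablish_leases(1000, per_tick=10, timeout=5, admit_per_tick=10, max_ticks=1000))
```

With these numbers the unthrottled run stalls at 60 leases, while the throttled run finishes all 1,000 in 100 ticks. The processing capacity is identical in both runs; only the intake differs.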

The remaining component was the Network Manager: it needed to propagate the network state to all new instances and those terminated during the event. The backlog was large enough that propagation lagged: newly launched instances started without connectivity until their configuration arrived. This delay also confused the NLB health check subsystem, whose results flapped between healthy and unhealthy, so capacity was aggressively removed from DNS and added back, causing connection errors.

To stop the bleeding, AWS temporarily disabled the automatic failover triggered by NLB health checks, increased capacity in the service, and later re-enabled failover once EC2 and the network had stabilized.

Other relevant impacts

- Lambda: initial failures due to DynamoDB endpoint issues (function creation and updates, SQS/Kinesis triggers). Hours later, with EC2 still degraded, AWS prioritized synchronous invocations and limited event source mappings and asynchronous invocations to prevent cascades.

- STS and IAM: authentication errors and latencies (due to indirect dependency on DynamoDB, and then on NLB).

- Amazon Connect: errors in calls, chats, and dashboards caused by a combination of NLB and Lambda issues.

- Redshift: query disruptions and cluster operation delays; some clusters remained “modifying” because they couldn’t replace EC2 hosts for hours. An additional defect affected queries using IAM credentials in all regions during an interval in which an IAM API in us-east-1 was used to resolve groups. Clusters with local users were unaffected.

What AWS will change

The post-mortem lays out concrete measures:

- Disabling DynamoDB DNS automation (Planner/Enactor) worldwide until the race condition is fixed and additional safeguards are introduced to prevent incorrect plans.

- NLB: a rate-limiting control that restricts how much capacity a single NLB can withdraw when health check failures trigger AZ failover.

- EC2: new testing suite that exercises large-scale DWFM recovery flows and improvements in throttling within data propagation systems, tuning pace based on queue size to protect the service.
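The NLB measure in the list above amounts to a withdrawal budget. A minimal sketch of the idea follows, assuming a hypothetical `nodes_to_withdraw` helper and a 20% default cap; neither the name nor the number comes from the post-mortem.

```python
from typing import List


def nodes_to_withdraw(unhealthy: List[str], fleet_size: int,
                      already_withdrawn: int, max_percent: int = 20) -> List[str]:
    """Pick which failing nodes may leave DNS without exceeding the withdrawal budget."""
    # Total nodes allowed out of service at once, minus those already removed.
    budget = fleet_size * max_percent // 100 - already_withdrawn
    # Nodes beyond the budget stay in DNS even though their checks are failing.
    return unhealthy[:max(budget, 0)]


# Ten nodes, one already out, four failing checks, cap of 30%: only two more may leave.
print(nodes_to_withdraw(["a", "b", "c", "d"], fleet_size=10, already_withdrawn=1, max_percent=30))
```

The trade-off is deliberate: during a mass false alarm, keeping some possibly unhealthy nodes in rotation does less harm than withdrawing most of the fleet.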

Lessons for architects and SREs: designing to lose the “catalog”

- Multi-AZ is not enough; consider multi-region (and multi-cloud if relevant).

If your business cannot tolerate hours of outage, active-active or pilot light in another region is the way to go. For DynamoDB data, Global Tables help… but remember that traffic to a down regional endpoint won’t switch automatically: your app must know to use replicas when a region is unresponsive (and accept lag temporarily).

- Differentiate between data plane and control plane in your design.

Your app can keep serving traffic on a stable data plane even if the control plane (launching instances, updating configs, issuing credentials) degrades. Do you have hot capacity or instance pools to withstand this without autoscaling? Are you capable of degrading functions that require new resources?

- DNS: sensible caching, avoiding extremes.

A reasonable TTL buffers transient resolution failures. Avoid “TTL 0” by default, but don’t set it too long either: when the provider fixes the endpoint, you don’t want to wait hours for old caches to expire. For critical clients, consider using retries with backoff and not pinning IPs of managed services.

- Patience in health checks (avoid flapping).

Set thresholds and grace periods to prevent removing capacity due to transient check failures while network propagation is still in progress. Aggressive failover can multiply the problem: it removes healthy nodes whose networking isn’t ready yet, worsening congestion.

- Circuit breakers and downgrade routes.

If you can’t write to DynamoDB, can you temporarily switch to read-only? If creating new queues or instances isn’t possible, can you buffer work locally or pause non-critical components?

- Credentials and sessions.

Avoid simultaneous expiration of tokens. When STS/IAM face latency, you don’t want all your services to try renewing at once. Stagger renewals and extend safety margins prudently during events.

- Runbooks and drills for “us-east-1 down”.

Document who does what and in what order: activate limits, disable parts of the pipeline, switch DNS/region, prioritize queues, etc. Practice these playbooks.
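The credentials lesson above is easy to turn into code. The sketch below spreads renewals across a window of each token's lifetime so that a fleet does not hit STS at the same instant. The function name and the 50% to 80% window are assumptions for illustration, not AWS guidance.

```python
import random


def next_refresh_delay(ttl_seconds: float, rng: random.Random,
                       earliest: float = 0.5, latest: float = 0.8) -> float:
    """Seconds to wait before renewing a credential that lives for ttl_seconds."""
    # Each caller draws its own point in the window, so renewals are staggered
    # and there is still a safety margin before the credential actually expires.
    return ttl_seconds * rng.uniform(earliest, latest)


rng = random.Random()
delays = [next_refresh_delay(3600, rng) for _ in range(5)]
print([round(d) for d in delays])  # five different renewal times for one-hour tokens
```

During an incident the same window can be widened (renewing earlier) so that a slow STS call still finishes before the old token expires.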

Is Virginia the “canary” for AWS?

In technical forums, the idea is recurring: us-east-1 is the largest and oldest region, and many services treat it as their primary production home; therefore, it’s more exposed to complex incidents and systemic effects. Some even consider it AWS’s “canary”. Beyond the nickname, the practical lesson for businesses is clear: don’t concentrate all critical dependencies in that region, especially control plane and single coordination points. Design assuming that a region can be degraded for hours… or become inaccessible for reasons outside your architecture’s control.

What to communicate now to business (and customers)

- What happened in your platform (dates, affected services, duration).

- What contingencies were applied, and what residual risks remain (reprocessing, backlogs, delayed data).

- What you will do in the short/medium term: backlog of changes (multi-region, DNS TTLs, health checks, throttling, pre-allocated capacity) with dates and responsible teams.

- How improvements will be measured: indicators of continuity, RTO/RPO, and resilience goals.

Conclusion

The us-east-1 incident was not a “perfect storm” out of nowhere: it was the manifestation of a latent race condition in a critical, automated system (the DNS management for a central service) that, when triggered, emptied an endpoint and blocked self-healing. From there, the cascade followed the cross-dependencies and congestion typical of any hyperscale platform. The good news is that the post-mortem outlines concrete actions; the less good news is that there’s no magic: resilience isn’t bestowed by the provider “by decree,” it’s designed. And that design begins by accepting that a region, even the most famous in the world, can fail when it’s least convenient.

Frequently Asked Questions

How can I reduce the impact of an AWS regional outage on an e-commerce or digital media platform?

Implement a multi-AZ and multi-region architecture for the frontend and critical services (cache/CDN, authentication, catalog). Use DynamoDB Global Tables or managed replicas where applicable, and ensure your app can switch to replicas when an endpoint is unresponsive. Maintain hot capacity to serve without relying solely on autoscaling.
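Switching to a replica has to be coded in the client. A minimal sketch of that logic, kept independent of any SDK: each region is represented by a zero-argument read function that the caller supplies (for example, a closure around an SDK client for that region). The function name, the region order, and the choice of `OSError` as the default retryable error are assumptions. SDK-specific exceptions would need to be added to `retryable`.

```python
from typing import Callable, Sequence, Tuple, Type, TypeVar

T = TypeVar("T")


def read_with_regional_fallback(
    readers: Sequence[Tuple[str, Callable[[], T]]],
    retryable: Tuple[Type[BaseException], ...] = (OSError,),
) -> Tuple[str, T]:
    """Try each (region, read function) in order and return the first success."""
    errors = []
    for region, read in readers:
        try:
            return region, read()
        except retryable as exc:
            # Connection and DNS failures are OSError subclasses; move to the next region.
            errors.append(f"{region}: {exc!r}")
    raise ConnectionError("all regions failed: " + "; ".join(errors))


def primary_down() -> dict:
    raise ConnectionError("endpoint does not resolve")


region, item = read_with_regional_fallback([
    ("us-east-1", primary_down),
    ("us-west-2", lambda: {"sku": "A-1", "stock": 3}),  # stand-in for a replica read
])
print(region, item)
```

A replica read may return slightly stale data, which is the lag the answer above says you have to accept while the primary region is down.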

Why did the Network Load Balancer fail if only DynamoDB was affected?

Because after restoring DynamoDB DNS, EC2 took time to reclaim leases and propagate network state to new instances. NLB added nodes before connectivity was ready; health checks fluctuated, and the failover logic removed healthy capacity. Adjust thresholds and grace periods to avoid flapping during mass deployments.
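Thresholds and grace periods can be expressed as a small state machine. The sketch below is an assumption-laden toy, not NLB's health check logic: the class name, field names, and default values are hypothetical, although most load balancers expose comparable settings under their own names.

```python
from dataclasses import dataclass


@dataclass
class TargetHealth:
    """Health state with hysteresis and a startup grace period (toy model)."""
    grace_checks: int = 5         # failed checks ignored right after registration
    unhealthy_threshold: int = 3  # consecutive failures needed to remove the target
    healthy_threshold: int = 2    # consecutive successes needed to add it back
    in_service: bool = True
    checks_seen: int = 0
    streak: int = 0               # consecutive results that contradict the current state

    def observe(self, check_passed: bool) -> bool:
        """Record one health check result and return whether the target is in service."""
        self.checks_seen += 1
        if check_passed == self.in_service:
            self.streak = 0                 # result agrees with the current state
            return self.in_service
        if self.in_service and self.checks_seen <= self.grace_checks:
            return self.in_service          # inside the grace period: ignore the failure
        self.streak += 1
        needed = self.unhealthy_threshold if self.in_service else self.healthy_threshold
        if self.streak >= needed:
            self.in_service = not self.in_service
            self.streak = 0
        return self.in_service


target = TargetHealth(grace_checks=0)
# Alternating results never reach three consecutive failures, so the target stays in.
print([target.observe(ok) for ok in [False, True, False, True, False, True]])
```

A single failed check no longer flips the state; only a sustained run of failures outside the grace period removes the target.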

Should I lower DNS TTLs so that provider fixes reach me faster?

A moderate TTL buffers transient resolution issues. Avoid “TTL 0” by default, but don’t make it too long, as waiting hours for caches to expire can delay recovery. Use retries with backoff and avoid pinning IPs for managed services in critical clients.
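Retries with backoff around name resolution might look like the following sketch. It uses only the standard library; `resolve_with_backoff`, its defaults, and the injectable `resolver` and `sleep` parameters are illustrative choices, and the jitter strategy (a random sleep up to a capped exponential delay) is one common option among several.

```python
import random
import socket
import time
from typing import Callable, List


def lookup(host: str) -> List[str]:
    """Resolve a hostname to its IP addresses with the system resolver."""
    infos = socket.getaddrinfo(host, 443, proto=socket.IPPROTO_TCP)
    return sorted({info[4][0] for info in infos})


def resolve_with_backoff(host: str, attempts: int = 5, base_delay: float = 0.2,
                         max_delay: float = 5.0,
                         resolver: Callable[[str], List[str]] = lookup,
                         sleep: Callable[[float], None] = time.sleep) -> List[str]:
    """Resolve host, retrying failed lookups with capped exponential backoff and jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return resolver(host)
        except socket.gaierror:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the resolution error to the caller
            # Sleep a random time up to the capped exponential delay so that
            # many clients do not retry in lockstep.
            sleep(random.uniform(0, min(max_delay, base_delay * 2 ** attempt)))
    raise AssertionError("unreachable")
```

Because the address is looked up again on every attempt rather than cached in the application, the client picks up the provider's fix as soon as resolver caches expire.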

Does it make sense to avoid us-east-1?

More than avoiding, diversify. It’s a key region due to latency and service breadth, but don’t make it the only home of critical points like authentication, queues, and catalogs. Design for controlled degradation and regional failover.

Sources: AWS Post-Event Summary on the DynamoDB outage and its impacts on EC2, NLB, and other services in N. Virginia (us-east-1), along with related AWS technical documentation on the incident and announced measures.