Graphics card memory technology is on the verge of a significant leap with the introduction of HBM4, which promises to double the available bandwidth for GPUs dedicated to artificial intelligence. Every previous HBM generation used a 1,024-bit interface per stack; HBM4 will feature a 2,048-bit interface, opening up new possibilities in terms of performance and capacity.

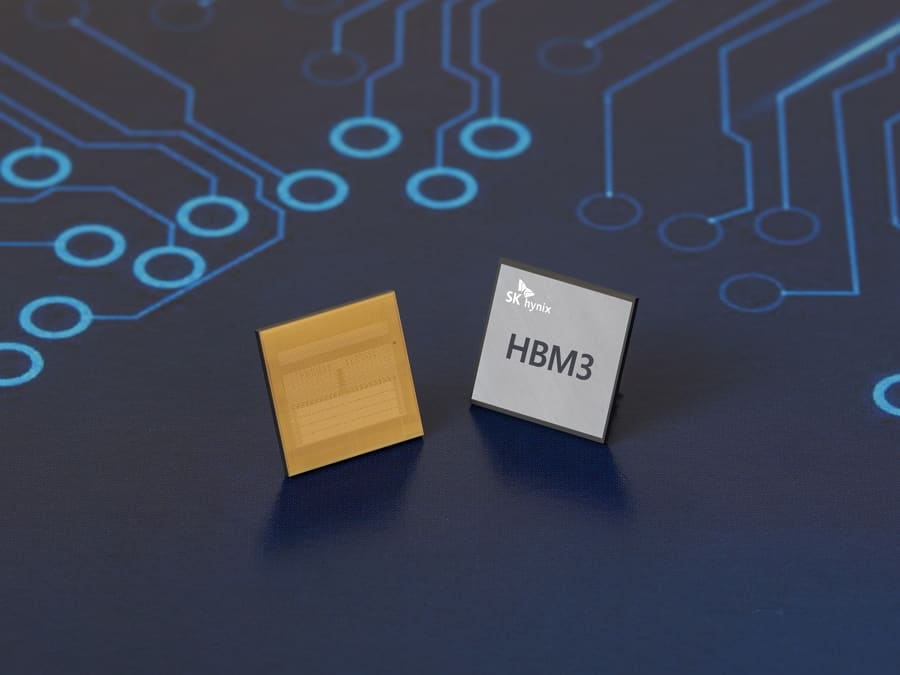

Since its debut in 2015 with the AMD Radeon R9 Fury X, High-Bandwidth Memory (HBM) has been a transformative technology for graphics cards. The first generation was limited in capacity and speed, but each successive generation has raised both, culminating in HBM3E, which reaches up to 1.2 TB/s per stack. HBM4 aims not only to continue this trend but also to set new standards of efficiency and performance.
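Per-stack bandwidth figures like these follow from a simple relation: peak bandwidth equals interface width times per-pin data rate, divided by 8 to convert bits to bytes. The minimal sketch below assumes an illustrative pin rate of 9.6 Gb/s for HBM3E (an assumption; actual parts vary by vendor) and reuses that same rate for a 2,048-bit stack purely to show the effect of doubling the interface width. The HBM4 number it prints is not a published specification.

```python
def peak_bandwidth_gb_s(interface_bits: int, gbps_per_pin: float) -> float:
    """Theoretical peak bandwidth of one HBM stack, in GB/s.

    Each of the interface_bits data pins transfers gbps_per_pin gigabits
    per second; dividing by 8 converts gigabits to gigabytes.
    """
    if interface_bits <= 0 or gbps_per_pin <= 0:
        raise ValueError("width and pin rate must be positive")
    return interface_bits * gbps_per_pin / 8


# Illustrative per-pin rate (assumption, not a vendor figure).
PIN_RATE_GBPS = 9.6

hbm3e = peak_bandwidth_gb_s(1024, PIN_RATE_GBPS)
# Hypothetical: a 2,048-bit stack at the same pin rate, to isolate the width change.
hbm4_same_rate = peak_bandwidth_gb_s(2048, PIN_RATE_GBPS)

print(f"1,024-bit stack: {hbm3e:.1f} GB/s")           # 1228.8 GB/s, about 1.2 TB/s
print(f"2,048-bit stack: {hbm4_same_rate:.1f} GB/s")  # 2457.6 GB/s
```

Holding the pin rate fixed makes the point that widening the interface alone doubles per-stack bandwidth; real HBM4 parts may run at different pin rates.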

Innovations and improvements of HBM4

HBM4 stands out not only for its bandwidth but also because it could reduce the number of memory stacks a GPU needs. Since each HBM4 stack has a 2,048-bit interface, twice the width of an HBM3 stack, a GPU could reach the same total interface width with half as many stacks. This could mean lower power consumption and more efficient chip packaging.
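The stack arithmetic can be made concrete with a short sketch. The target of six HBM3 stacks (6,144 bits in total) is a hypothetical example chosen for illustration, not a figure from this article; the function simply rounds up the number of stacks needed to reach a given combined interface width.

```python
HBM3_BITS_PER_STACK = 1024
HBM4_BITS_PER_STACK = 2048


def stacks_needed(total_interface_bits: int, bits_per_stack: int) -> int:
    """Smallest number of stacks whose combined interface reaches the target width."""
    if total_interface_bits <= 0 or bits_per_stack <= 0:
        raise ValueError("widths must be positive")
    # Integer ceiling division: a partial stack still counts as a whole stack.
    return (total_interface_bits + bits_per_stack - 1) // bits_per_stack


# Hypothetical target: the combined width of six HBM3 stacks.
target_bits = 6 * HBM3_BITS_PER_STACK  # 6,144 bits

hbm3_count = stacks_needed(target_bits, HBM3_BITS_PER_STACK)
hbm4_count = stacks_needed(target_bits, HBM4_BITS_PER_STACK)

print("HBM3 stacks:", hbm3_count)  # 6
print("HBM4 stacks:", hbm4_count)  # 3
```

Integer ceiling division avoids floating-point rounding and correctly handles targets that are not an exact multiple of the per-stack width.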

This development is crucial for artificial intelligence applications, where memory bandwidth and processing capacity are decisive. With HBM4, GPUs should be able to move large volumes of data more quickly, which matters both for training larger, more complex AI models and for real-time inference.
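One way to see why bandwidth matters for inference: if a workload must read a large block of data from memory, such as a model's weights, bandwidth sets a floor on how long one full pass can take. The sketch below uses a hypothetical 140 GB of data and two hypothetical total GPU bandwidths (8,000 and 16,000 GB/s); these numbers are assumptions for illustration, and the estimate ignores caching, compute time, and overlap, so real timings would be higher.

```python
def min_read_time_ms(data_gb: float, bandwidth_gb_s: float) -> float:
    """Lower bound on the time (ms) to read data_gb gigabytes once at bandwidth_gb_s.

    Assumes the memory system sustains its peak bandwidth for the whole read,
    which real hardware does not quite achieve.
    """
    if bandwidth_gb_s <= 0:
        raise ValueError("bandwidth must be positive")
    return data_gb * 1000 / bandwidth_gb_s


# Hypothetical workload and bandwidths, chosen only for illustration.
DATA_GB = 140.0
baseline_ms = min_read_time_ms(DATA_GB, 8000.0)
doubled_ms = min_read_time_ms(DATA_GB, 16000.0)

print(f"At 8,000 GB/s:  {baseline_ms:.2f} ms per full pass")  # 17.50 ms
print(f"At 16,000 GB/s: {doubled_ms:.2f} ms per full pass")   # 8.75 ms
```

Doubling bandwidth halves this floor, which is why a wider interface is attractive for bandwidth-bound workloads.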

Strategic collaborations and the future of HBM4

Leading companies such as NVIDIA and SK Hynix are collaborating on integrating HBM4 directly onto the GPU, stacking the memory on top of the processor rather than placing it alongside. The approach resembles AMD's 3D V-Cache, which stacks extra cache on top of a processor die, and aims to minimize latency by placing memory as close as possible to the GPU's processing cores. Such integration would improve performance and would mark a significant advance in hardware design for data-intensive applications.

Market impact and future challenges

HBM3E was only unveiled in 2023, and HBM4 is not expected to reach the market for some time, yet the industry is already following its development closely. The implications of faster, more efficient memory are enormous, not only for hardware design but also for the advancement of emerging technologies such as artificial intelligence and deep learning.

However, the challenges are considerable, particularly integration complexity and production costs. Widespread adoption of HBM4 will depend on how these challenges are addressed in the coming years and on the industry's ability to keep pace with ever-increasing data-processing demands.

In summary, HBM4 is set to be a game-changer for AI GPUs, offering significant improvements in bandwidth and efficiency. With its launch on the horizon, it could well mark the beginning of a new era in the design and capabilities of graphics cards.