The relentless advance of artificial intelligence (AI) and new generations of HBM (High Bandwidth Memory) are pushing the power consumption of AI accelerator GPUs to new extremes. That is the finding of a report by the TERA lab at the Korea Advanced Institute of Science and Technology (KAIST), which anticipates an unprecedented escalation in the energy needs of high-performance computing systems.

HBM: The Race Towards Ultra-Fast, High-Capacity Memory

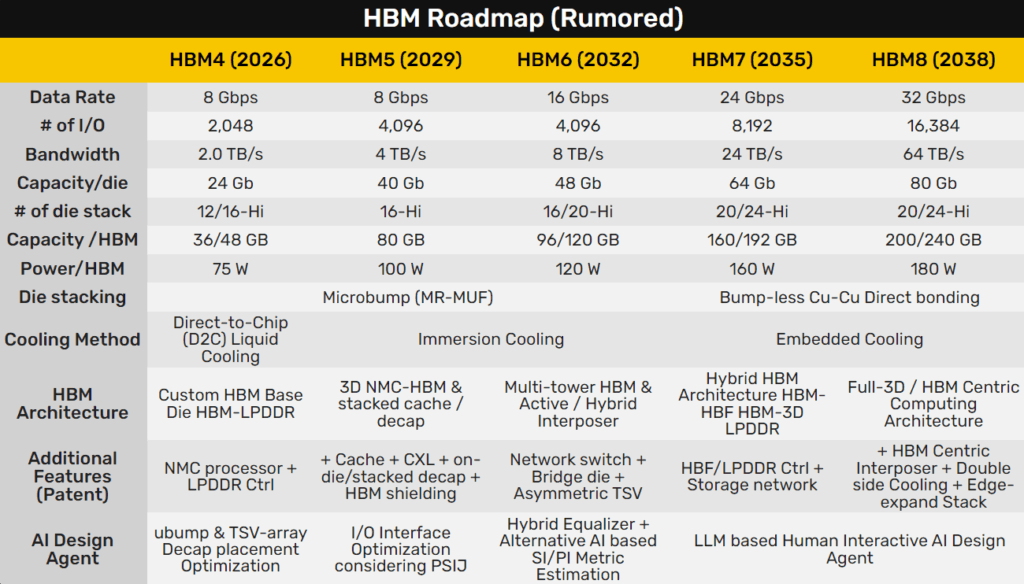

HBM3E currently allows accelerators such as the NVIDIA B300 and the AMD MI350 to carry up to 288 GB of high-bandwidth memory. The next generation, HBM4, will raise that ceiling to 384 GB for NVIDIA (Rubin series) and up to 432 GB for AMD (MI400 series), with both expected to launch next year.

The bigger leap will come with HBM5, HBM6, and HBM7, whose projected maximum capacities per accelerator are far larger: up to 500 GB for HBM5, between 1.5 and 1.9 TB for HBM6, and up to 6 TB for HBM7. Capacities of that order would be a qualitative leap for generative AI, big data, and scientific simulation.
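To put these projections in perspective, the short Python sketch below expresses each quoted maximum capacity as a multiple of today's 288 GB HBM3E ceiling. It assumes 1 TB = 1,000 GB and uses the upper end of each quoted range; the names `BASELINE_GB`, `PROJECTED_MAX_GB`, and `growth_vs_baseline` are illustrative, not from the report.

```python
# Maximum per-accelerator HBM capacities quoted in the article, in GB.
# Assumption: "TB" figures are converted as 1 TB = 1,000 GB, and the upper
# end of each quoted range is used.
BASELINE_GB = 288  # HBM3E today (NVIDIA B300, AMD MI350)

PROJECTED_MAX_GB = {
    "HBM4 (AMD MI400)": 432,
    "HBM5": 500,
    "HBM6": 1_900,
    "HBM7": 6_000,
}


def growth_vs_baseline(capacities: dict[str, float], baseline: float) -> dict[str, float]:
    """Express each capacity as a multiple of the baseline, rounded to one decimal."""
    return {name: round(gb / baseline, 1) for name, gb in capacities.items()}


if __name__ == "__main__":
    for name, factor in growth_vs_baseline(PROJECTED_MAX_GB, BASELINE_GB).items():
        print(f"{name}: {factor}x HBM3E capacity")
```

With these inputs, HBM7 would offer roughly 21 times the memory available with HBM3E, while HBM4 adds about 50%.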

Next-Generation GPUs: Heading Toward 15,000 W per Card

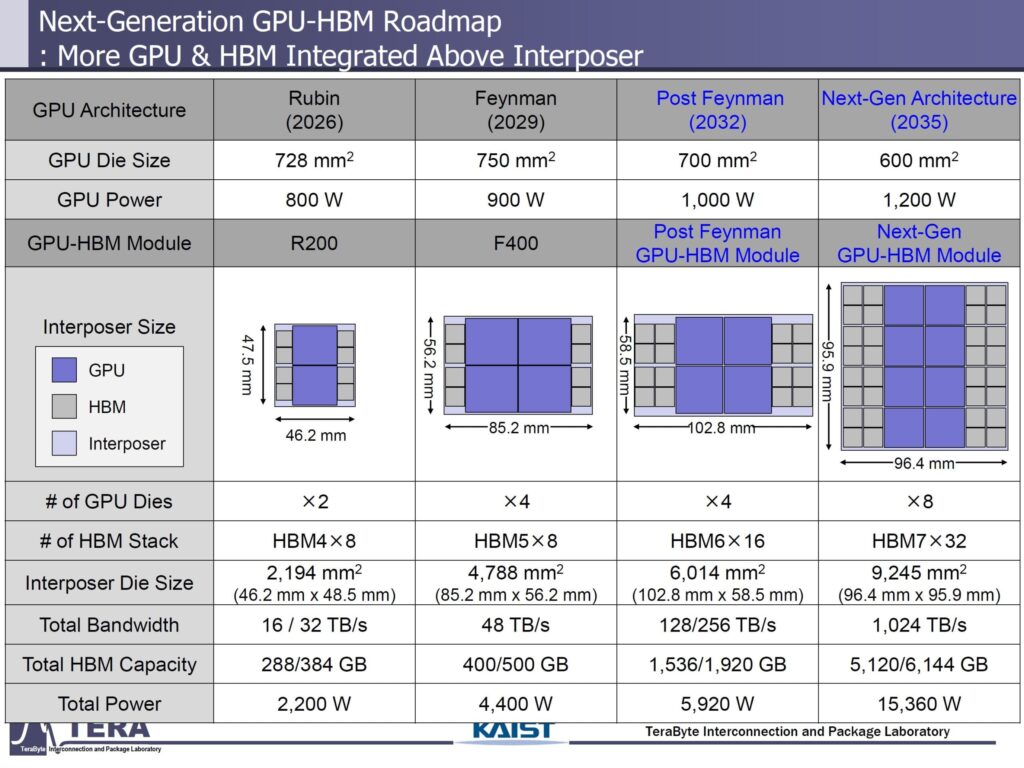

The report details a dramatic rise in the power consumption of AI GPUs. NVIDIA's upcoming Rubin GPU, due in 2026, is projected to have a chip power of 800 W, while the complete card, with two integrated chips and HBM4, will reach 2,200 W. By comparison, the current AMD MI350 already approaches 1,400 W in liquid-cooled systems.

In the medium term, NVIDIA's Feynman GPU, expected in 2029, is projected to rise to 900 W of chip power and 4,400 W for the complete card, which would combine four chips with HBM5 to deliver 48 TB/s of memory bandwidth.

Projections for the next decade go even higher: by 2032, a four-chip accelerator with HBM6 could approach 6,000 W, and by 2035, a hypothetical eight-chip card with 32 HBM7 modules and memory bandwidth on the order of 1 PB/s could exceed 15,000 W.
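One way to quantify how steep this trajectory is: compute the compound annual growth rate (CAGR) implied by the complete-card figures quoted above. The sketch below treats the 2032 ("could approach") and 2035 ("could exceed") values as exact, which is a simplifying assumption; `cagr` and `interval_growth` are illustrative helper names.

```python
# Complete-card power projections from the article (year -> watts).
# Assumption: the 2032 ("could approach") and 2035 ("could exceed") figures
# are taken as exact values for this back-of-the-envelope estimate.
CARD_POWER_W = {2026: 2_200, 2029: 4_400, 2032: 6_000, 2035: 15_000}


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years` years."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


def interval_growth(series: dict[int, float]) -> list[tuple[int, int, float]]:
    """Annualized growth rate between each pair of consecutive years in `series`."""
    years = sorted(series)
    return [
        (y0, y1, cagr(series[y0], series[y1], y1 - y0))
        for y0, y1 in zip(years, years[1:])
    ]


if __name__ == "__main__":
    for y0, y1, rate in interval_growth(CARD_POWER_W):
        print(f"{y0}-{y1}: {rate:.1%} per year")
    overall = cagr(CARD_POWER_W[2026], CARD_POWER_W[2035], 2035 - 2026)
    print(f"2026-2035 overall: {overall:.1%} per year")
```

Under these inputs, card power grows about 26% per year from 2026 to 2029, slows to about 11% per year through 2032, then jumps to about 36% per year through 2035, for an overall average of roughly 24% per year.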

An Energy Challenge for Data Centers

The exponential growth in the power draw of AI GPUs poses a serious challenge for data centers, which may need their own energy infrastructure, such as dedicated nuclear or renewable power plants, to supply these systems. Running for just a few hours, a single 15,000 W card would use as much electricity as an average Spanish household does over several days.
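Comparing a card with a household means converting power (watts) into energy (kilowatt-hours), which requires a running time. The sketch below does that conversion. The household figure of 9 kWh per day is a hypothetical placeholder chosen for illustration, not a number from the report, and `energy_kwh` and `household_days` are illustrative names.

```python
# Hypothetical average daily electricity use of a household, in kWh.
# This is an illustrative assumption, not a figure from the report;
# replace it with an official statistic for a real comparison.
HOUSEHOLD_KWH_PER_DAY = 9.0


def energy_kwh(power_w: float, hours: float) -> float:
    """Energy in kWh drawn by a constant load of `power_w` watts over `hours` hours."""
    return power_w * hours / 1_000


def household_days(power_w: float, hours: float,
                   kwh_per_day: float = HOUSEHOLD_KWH_PER_DAY) -> float:
    """How many days of household consumption that energy corresponds to."""
    return energy_kwh(power_w, hours) / kwh_per_day


if __name__ == "__main__":
    for hours in (1, 4, 24):
        print(f"{hours:>2} h at 15,000 W: {energy_kwh(15_000, hours):.0f} kWh "
              f"~ {household_days(15_000, hours):.1f} household-days")
```

With the assumed 9 kWh per day, four hours at full load (60 kWh) already match several days of household use, and a full day of operation (360 kWh) matches roughly 40.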

According to experts, if the trend is not moderated through innovations in efficiency and architecture, the current growth model could be unsustainable, both environmentally and economically.

Is This Future Viable?

The report concludes with a reflection: “If technological evolution continues down this path, every data center will need its own power plant.” The industry must now balance the raw computing power that AI demands against energy efficiency and sustainability, both key factors for the digital future.

Source: MyDrivers, KAIST, TERA Lab, Fast Technology, NVIDIA, AMD