Tesla has made the onboard computer the core of its driver-assistance and autonomy strategy. What began in 2014 with a Mobileye module has evolved through successive generations (NVIDIA Drive PX2 and, since 2019, chips designed by Tesla itself) that run neural networks at ever-higher speeds under a clear philosophy: fewer “traditional” sensors, more vision, and more computing.

In less than a decade, the company has gone from a 40 nm EyeQ3 drawing just a few watts to computers housing two Tesla-designed chips on 7 nm, with 20-core CPUs and up to 160 W TDP. It openly discusses new iterations (AI 5 / Hardware 5 and AI 6 / Hardware 6) with an even larger thermal budget. Meanwhile, it has reconfigured its sensor suite, removing radar and ultrasound sensors from much of its lineup to push Tesla Vision (cameras and deep learning). Later, an HD radar was reintroduced in Model S/X with HW4, and a bumper camera was added for 2024. The official goal: achieve “Full Self-Driving (FSD)” functions that depend less on sensors and more on AI that understands the environment.

Next, we review the hardware and sensor evolution across generations, and what the latest changes imply for the system’s future.

From Mobileye to NVIDIA: the foundations (Hardware 1 → Hardware 2/2.5)

Hardware 1 (HW1, 2014) used the well-known Mobileye EyeQ3 platform, an STMicro-built 40 nm chip running at 500 MHz and drawing about 2.5 W. This was the era of traditional ADAS (emergency braking, lane centering, adaptive cruise control) and “everyday” sensors: a front radar and 12 ultrasound sensors.

Hardware 2 (HW2, 2016) marked the transition to NVIDIA Drive PX2: a Tegra X2 (“Parker”) processor (16 nm) with 2 Denver 2 cores + 4 Cortex-A57 cores and Pascal GPU. Tesla expanded the camera array (initially with RCCC filters) and added side cameras facing forward and backward mounted on the pillars. Hardware 2.5 (HW2.5, 2017) was a refinement with increased computing power and redundancies; it used a setup with 12 Cortex-A72 cores at 2.2 GHz (14 nm, Samsung) and ~100 W TDP in the driving computer. This cycle laid the groundwork for larger neural networks and a move towards a completely Tesla-designed computer.

Hardware 3 (2019): Tesla’s first “FSD Computer” of its own

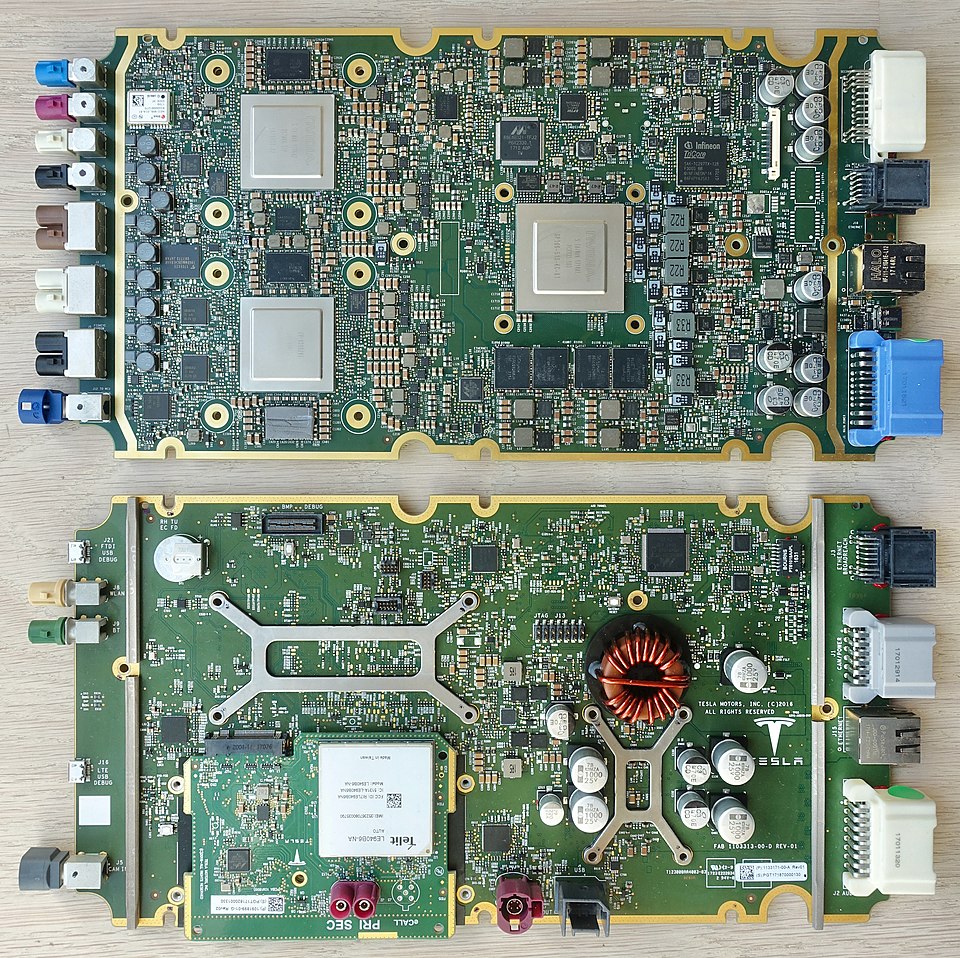

In April 2019, Tesla introduced Hardware 3 (HW3), its first FSD computer, built around two Tesla-designed chips manufactured by Samsung. Each board houses two identical SoCs (active redundancy), known in documentation as “FSD 1 Chip”, each featuring a 12-core CPU, duplicated safety pathways, and dedicated accelerators for neural network inference. With HW3, Tesla announced that all new cars came equipped with “full autonomous driving hardware” and started deploying FSD Beta.

In terms of sensors, the HW3 era saw the major shift to “Tesla Vision”: between 2021 and 2022, the company announced the removal of the front radar and began relying on cameras + AI for Autopilot and FSD functions. Over the same period, ultrasound sensors were phased out in Model 3/Y. From then on, the lineup split: S/X retained a front radar longer, while 3/Y moved to pure vision.

Hardware 4 (2023): More cameras, higher resolution, and “FSD 2 Chip” (7 nm, 20 cores, 160 W)

Hardware 4 (HW4) marked another step up in integration. In January 2023, Tesla started installing its second-generation FSD computer, built on the “FSD 2 Chip”: again two redundant Tesla-designed SoCs, manufactured by Samsung on 7 nm. The chip features 20 cores at around 3.0 GHz, with a total TDP of roughly 160 W.

The camera suite was upgraded, with RCCB filters and 5 Mpx sensors on the sides, along with larger, more sensitive image sensors and better optics. In Model S/X with HW4, Tesla reintroduced an HD radar with a range of approximately 300 meters, while Model 3/Y with HW4 has no radar. In March 2024, the company confirmed a bumper camera for S/X to improve frontal visibility at low angles (no official retrofit for earlier models).

The design philosophy was solidified: computing redundancy with two SoCs, vision as primary sensor, and a radar system that, when present, helps improve detection in adverse conditions (rain, fog) in higher-end models.

On the horizon: AI 5 (HW5) and AI 6 (HW6)

Roadmap notes place AI 5 / Hardware 5 around June 2024, with an estimated thermal budget of about 800 W. AI 6 / Hardware 6 is targeted for July 2025, again with Samsung as manufacturing partner. Tesla has not publicly revealed detailed specs or architecture, but the numbers suggest several things:

- Additional dedicated inference accelerators and substantially higher bandwidth for larger, multimodal models.

- Enhanced redundancy and potentially more high-resolution sensors (e.g., cameras with wider dynamic range and lower latency).

- A possible shift from “onboard computer” to a “compute node,” with power and cooling designed to support dense neural networks (trained externally) and multiple tasks (perception, planning, mapping, occupancy networks).

The increase in TDP aligns with the qualitative jump in neural networks — transitioning from 2D camera-based networks to 3D, multi-camera models, fusing HD radar when available, and end-to-end planning — all aimed at reducing human intervention in complex maneuvers.
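To put the thermal budgets quoted in this article side by side, the short Python sketch below computes the step-to-step ratio between generations. It uses only the approximate figures cited here (about 2.5 W for HW1, about 100 W for HW2.5, about 160 W for HW4, and the estimated 800 W for AI 5); the dictionary and function names are illustrative, and the AI 5 value is a roadmap estimate rather than a confirmed spec.

```python
# Approximate TDP figures as quoted in this article (watts).
# The AI 5 value is a roadmap estimate, not a confirmed specification.
TDP_WATTS = {
    "HW1": 2.5,
    "HW2.5": 100.0,
    "HW4": 160.0,
    "AI 5 (est.)": 800.0,
}


def step_ratios(tdp):
    """Return (previous, current, ratio) for each consecutive pair of generations."""
    names = list(tdp)  # dicts preserve insertion order
    return [
        (prev, cur, tdp[cur] / tdp[prev])
        for prev, cur in zip(names, names[1:])
    ]


for prev, cur, ratio in step_ratios(TDP_WATTS):
    print(f"{prev} -> {cur}: x{ratio:.1f}")
```

On these numbers, the estimated jump from HW4 to AI 5 (about 5x) is far larger than the step from HW2.5 to HW4 (about 1.6x), which is why the roadmap figure stands out.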

Evolution table (hardware and sensors)

Note: Data summarized from public documentation and technical sheets; some capabilities depend on version and model.

| Generation | Initial availability | Platform / SoC | Process / CPU / Approx. TDP | Front radar | Ultrasound sensors | Front cameras (FOV/range)** | Side (front/back) | Notes |
|---|---|---|---|---|---|---|---|---|
| HW1 | Nov 2014 | Mobileye EyeQ3 | 40 nm, 500 MHz, ~2.5 W | Yes, ~160 m | 12, ~5 m | Model S: mono camera; later Main 50° 150 m, Wide 120° 60 m | — | Basic ADAS (AEB, ACC, LKA) |
| HW2 | Oct 2016 | NVIDIA Drive PX2 (Tegra X2) | 16 nm; 2× Denver2 + 4× A57; Pascal GPU | Yes, ~170 m | 12, ~8 m | Main 50° 150 m, Wide 120° 60 m | Front: 90° ~80 m; Rear: ~100 m | RCCC filters; more cameras |
| HW2.5 | Aug 2017 | Drive PX2 (rev) + Tesla ECU | 14 nm (Samsung); 12× A72 @2.2 GHz; ~100 W | Yes | 12 | Similar to HW2 | Similar to HW2 | More compute and redundancy |
| HW3 | Apr 2019 | FSD Computer 1 (Tesla) ×2 | 12-core CPU; 2 redundant SoCs | Switch to “Tesla Vision” (gradual removal) | Ultrasounds retired in 3/Y | Enhanced camera suite | Side: 5 Mpx | Focus on pure vision (2021–2022) |
| HW4 | Jan 2023 | FSD Computer 2 (Tesla) ×2 | 7 nm (Samsung); 20 cores @3.0 GHz; ~160 W | S/X: HD radar ~300 m; 3/Y: none | No | Upgraded cameras with RCCB filters and higher resolution; bumper camera in S/X (2024) | Side: 5 Mpx | Better optics and filters |
| HW5 (AI 5) | Jun 2024* | — | —; ~800 W (compute platform) | To be confirmed | To be confirmed | To be confirmed | To be confirmed | “AI 5” computing platform |
| HW6 (AI 6) | Jul 2025* | — | Samsung (manufacturing) | To be confirmed | To be confirmed | To be confirmed | To be confirmed | New generation computer |

\* Dates and features of AI 5 / AI 6 are based on reported industry schedules; detailed public specs are not available.

\*\* Camera ranges are approximate and depend on model/optics.

What does all this mean for the driver?

- More “software-defined cars”: each hardware leap has enabled more functions via software (raising questions about nomenclature and regulation). Tesla has shown that it can upgrade capabilities post-sale, but the onboard computer sets the limit: HW4 opens the door to denser neural networks, and HW5/6 points toward even larger models.

- Vision as primary sensor: the removal of radar and most ultrasound sensors forces cameras and AI models to handle fine-grained tasks (distances, cut-ins, small obstacles). The reintroduction of an HD radar in S/X with HW4 indicates that Tesla sees complementary value in it under adverse conditions.

- Redundancy and safety: deploying two SoCs per board isn’t just a design choice; it fulfills safety requirements (if one fails, the other maintains control). As more actuators are integrated under AI control, this redundancy will become even more critical.

- Product cycle considerations: not every hardware update is available as a retrofit. Though Tesla has offered hardware upgrades before, universal upgrades aren’t guaranteed (e.g., no retrofit for the bumper camera in S/X). Choosing a model often means accepting the hardware/software package of its specific generation.
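The redundancy point in the list above (if one SoC fails, the other maintains control) can be illustrated with a deliberately simplified failover sketch. This is not Tesla's arbitration logic, which is not described in the sources used here; `SocOutput`, its `healthy` flag, and `select_command` are hypothetical names introduced only to show the general idea of choosing between two redundant compute units.

```python
from dataclasses import dataclass


@dataclass
class SocOutput:
    healthy: bool    # hypothetical self-check result reported by one SoC
    steering: float  # hypothetical steering command, in degrees


def select_command(primary: SocOutput, secondary: SocOutput) -> float:
    """Pick a steering command from two redundant compute units.

    Prefer the primary unit; fall back to the secondary if the primary
    reports a fault; raise if neither unit is usable.
    """
    if primary.healthy:
        return primary.steering
    if secondary.healthy:
        return secondary.steering
    raise RuntimeError("both compute units failed; hand control back to the driver")


# Primary reports a fault, so the secondary unit's command is used.
print(select_command(SocOutput(False, 0.0), SocOutput(True, 1.5)))
```

A production system would more likely cross-check both outputs continuously rather than simply prefer one unit; the sketch only captures the failover case mentioned in the text.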

Technical keys on the camera suite: RCCC vs. RCCB, 5 Mpx, and “bumper cam”

- RCCC/RCCB filters: instead of the classic RGGB, Tesla has used RCCC (three clear pixels plus one red) and RCCB (red and blue kept, with clear pixels in place of the two greens) to boost sensitivity and night perception. The RCCB filter in HW4 offers more dynamic range and richer color in challenging conditions.

- Resolution: the 5 Mpx side cameras help in reading lane lines, curbs, and traffic in secondary lanes.

- Bumper camera (S/X): for low obstacles and close-range maneuvers at the front of the car, it provides angles that the windshield camera cannot capture.
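The filter layouts from the first bullet can be made concrete with a small Python sketch that writes each color filter array as a 2×2 repeating tile and counts the share of pixels per channel. The tiles are the generic RGGB, RCCC, and RCCB patterns, not a confirmed layout of any specific Tesla sensor, and `PATTERNS` and `channel_share` are illustrative names.

```python
# 2x2 repeating tiles: R = red, G = green, B = blue, C = clear (unfiltered).
# These are the generic patterns, not a confirmed Tesla sensor layout.
PATTERNS = {
    "RGGB": ("RG", "GB"),
    "RCCC": ("RC", "CC"),
    "RCCB": ("RC", "CB"),
}


def channel_share(tile):
    """Return the fraction of pixels assigned to each channel in one 2x2 tile."""
    pixels = "".join(tile)
    return {ch: pixels.count(ch) / len(pixels) for ch in sorted(set(pixels))}


for name, tile in PATTERNS.items():
    print(name, channel_share(tile))
```

Clear pixels pass more light than color-filtered ones, which is the basis for the sensitivity argument: RCCC devotes three quarters of its pixels to clear, while RCCB gives up one clear pixel to keep a blue channel for color reconstruction.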

Looking toward 2026: what to expect from HW5/HW6

If AI 5 (HW5) truly scales TDP to around 800 W, we can expect:

- Multiple inference accelerators and significantly higher bandwidth for 3D vision transformers, occupancy flow, and end-to-end planning.

- More aggressive thermal policies and/or improved cooling, especially in warm climates.

- Higher-fidelity sensor integrations (better dynamic range, SNR, and lenses, perhaps higher frame rates).

AI 6 (HW6), being the next step, should refine that leap with more advanced processes and design optimizations in collaboration with Samsung. Although detailed public specs are unavailable, the pattern is clear: more AI at the edge, less reliance on auxiliary sensors, and software that manages environmental complexity.

Frequently Asked Questions

What is the practical difference between HW3 and HW4?

HW4 features Tesla’s FSD 2 Chip (dual 7 nm SoCs) with 20-core CPUs and around 160 W TDP. It includes higher-resolution cameras and, in Model S/X, an HD radar and a bumper camera. Compared to HW3 (FSD 1, 12 cores), HW4 enables larger AI models, enhanced perception, and increased redundancy.

Why did Tesla remove radar and ultrasound sensors in some models?

To simplify the sensor suite and focus on vision + AI (Tesla Vision). The company asserts that cameras, combined with suitable neural networks, can estimate distances and classify obstacles with less latency and better consistency. In S/X with HW4, an HD radar was reintroduced as support in adverse conditions.

Can I upgrade from HW3 to HW4 in my vehicle?

Tesla has retrofitted computers under specific programs, but there is no universal upgrade policy to HW4. Also, some physical changes (like the bumper camera in S/X) have no official retrofit option. It is advisable to base a purchase decision on the hardware the car actually ships with.

What do “RCCC” and “RCCB” filters mean in the cameras?

They are alternative color filter arrays to the standard RGGB. RCCC (three clear pixels plus one red) and RCCB (red and blue kept, with clear pixels in place of the two greens) improve sensitivity and dynamic range, which is especially beneficial for night vision and high-contrast scenarios. HW4 adopted RCCB across its camera suite.

Sources: Wikichip (Tesla FSD / Autopilot hardware and sensors), Tesla communications and support pages regarding the transition to Tesla Vision (radar/ultrasound removal) and the bumper camera on S/X, and technical coverage of Hardware 4 (HD radar in S/X).