The surge in AI demand is not only transforming data centers: it’s also pushing computing to “leave” the core and move closer to the user. In this battle for latency and data sovereignty, every meter of fiber counts. That’s why the initiative announced from Barcelona by Submer, an AI infrastructure provider, is more than a corporate headline: it is an attempt to slot in a piece of the puzzle that many players have struggled to force into place.

Submer has announced it will acquire Radian Arc Operations Pty Ltd, a provider of infrastructure-as-a-service (IaaS) platforms aimed at deploying sovereign GPU cloud services “embedded” within telecommunications networks. The transaction, announced on February 10, 2026, pursues a clear goal: to offer a complete “stack” that connects AI factories in data centers with accelerated computing capacity at the edge, within the operator’s own network.

From GPU in the data center… to GPU in the carrier

An uncomfortable reality is emerging in the market: AI has become an infrastructure question, and infrastructure increasingly depends on location. For low-latency inference, cloud gaming, smart video, real-time analytics, or immediate-response assistants, the round trip to a distant data center can be the difference between a usable service and an exasperating one.
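To put the distance argument in rough numbers, here is a minimal Python sketch of the physical floor on round-trip time over optical fiber. It assumes a refractive index of about 1.47 (so signals travel at roughly two-thirds the speed of light) and a straight fiber path; the site labels and distances are illustrative assumptions, not figures from the announcement.

```python
# Sketch: minimum round-trip propagation delay over optical fiber.
# Assumption: signals travel through silica fiber at roughly c / 1.47.
# Real networks add routing, queuing, and processing delay on top of this floor.

SPEED_OF_LIGHT_KM_S = 299_792.458
FIBER_REFRACTIVE_INDEX = 1.47  # typical value for single-mode fiber (assumption)


def fiber_rtt_ms(distance_km: float) -> float:
    """Return the minimum round-trip time, in milliseconds, over a fiber path."""
    speed_km_s = SPEED_OF_LIGHT_KM_S / FIBER_REFRACTIVE_INDEX
    one_way_s = distance_km / speed_km_s
    return 2 * one_way_s * 1000


# Illustrative (hypothetical) distances from the user to the compute site.
for label, km in [("metro edge site", 20), ("national data center", 500), ("distant region", 2000)]:
    print(f"{label:>22}: {fiber_rtt_ms(km):6.2f} ms minimum RTT")
```

Under these assumptions, a site 20 km away adds about 0.2 ms of round-trip propagation, 500 km about 4.9 ms, and 2,000 km about 19.6 ms, before any routing, queuing, or GPU processing time. Real fiber routes are longer than straight lines, so actual figures are higher.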

Radian Arc approaches the problem from that angle. According to the announcement, its platform is used within telco networks to support workloads such as cloud gaming and AI inference, where performance and latency are crucial. There is also a sovereignty argument gaining ground in Europe and many other countries: processing data “within the country,” on local infrastructure integrated with operator systems (including billing and data management).

In other words: this isn’t just about renting GPUs; it’s about transforming the operator’s network into a distributed computing fabric.

A “two-plane” stack: core and edge, with sovereignty as the message

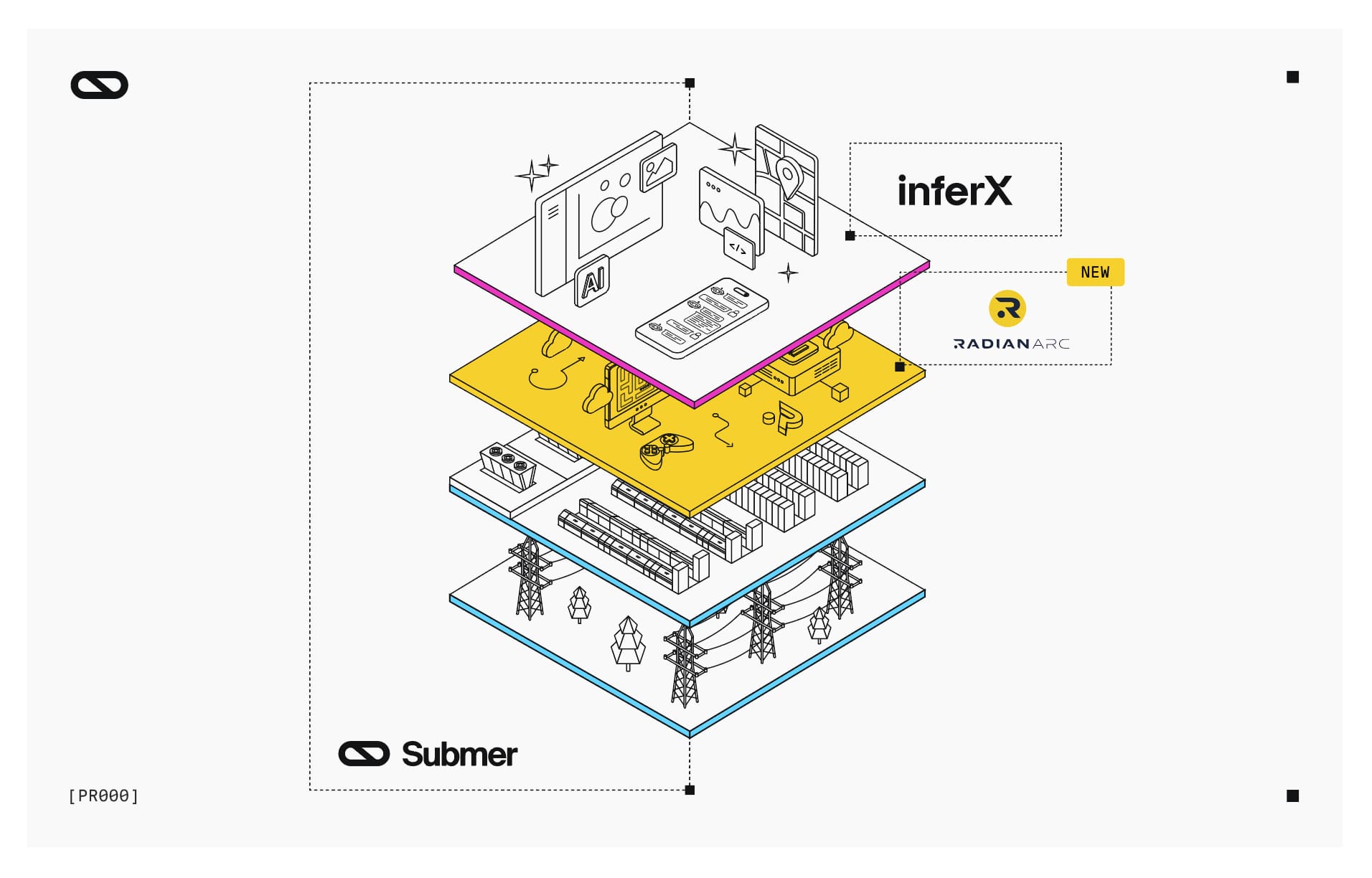

The acquisition aligns with the narrative Submer wants to build around its platform. The company says the deal complements its “full-stack” offering by integrating InferX, its NVIDIA Cloud Partner platform, with Radian Arc’s GPU edge layer.

The result, as presented by Submer, is a “dual-layer” proposition:

- Data center plane (core) for high-density AI deployments.

- Edge plane (telco-embedded) to run services close to the end-user, with lower latency and local data control.

Radian Arc also brings operational momentum: the announcement mentions deployments with more than 70 telco and edge customers worldwide, with thousands of GPUs already in operation. Submer, for its part, reinforces its pitch as a “single partner” able to respond “from chip to operation,” leveraging its track record in liquid cooling and in designing and deploying modular infrastructure.

What does this mean for telcos and companies: less theory, more product

Beyond the slogans, the deal reflects a pattern repeating in 2026: operators want to monetize their reach (and their investments in 5G and fiber) with value-added services, but they don’t want to become perpetual integrators of incompatible parts. Unifying platform, operation, and delivery shortens “time to revenue.”

Practically, this combination could be especially relevant in four scenarios:

- Sovereign AI services for the public sector and regulated industries. When the requirement is “data doesn’t leave the country,” distributed infrastructure within telco networks can become an operational shortcut.

- Low-latency inference and interactive workloads. Cloud gaming, industrial co-pilots, streaming analytics, and real-time smart video are highly sensitive to latency and jitter, which grow with distance to the compute site.

- Hybrid deployment models. Training or fine-tuning models in the core and serving inference at the edge is an increasingly common pattern. Here the value is not only technical but also financial, because placement determines where you pay for transport, energy, and data egress.

- Rapid scaling “as-a-service.” Submer emphasizes that the entire setup is geared to accelerate deployments without large upfront investments, especially where demand is unpredictable.

Strategic perspective: the edge regains its prominence

For years, “edge” was a vague promise. AI is turning it into a necessity. While massive training pushes toward large clusters, ubiquitous inference pushes toward proximity to the user. Sovereignty—understood as territorial, regulatory, and operational control—has become a buying argument, not just a political debate.

Submer also adds a scalability element: the announcement mentions access to a pipeline of land and energy resources exceeding 5 GW, through partner consortia in the UK, US, India, and the Middle East. In a market where the bottlenecks are often not just GPUs but also permits, power, and cooling, this detail signals a clear intention to secure the physical capacity needed to scale.

Quick overview: what each piece of the “stack” contributes

| Layer | Contribution | Use case |
|---|---|---|
| Submer (infrastructure) | Design, build, and operate high-density AI infrastructure | “AI factories” and large-scale deployments |
| InferX (platform) | Operation and delivery layer for GPU cloud (NVIDIA Cloud Partner) | Offering GPU capacity as a governed service |
| Radian Arc (telco edge) | Embedded GPU IaaS platform within operator networks | Low-latency AI and cloud gaming with local sovereignty |

Frequently Asked Questions

What does “sovereign GPU cloud” mean in practice?

It means that data can be processed and stored within a country (or jurisdiction) under local operational control, which is crucial for public administrations and regulated sectors.

Why is a telco interested in “hosting GPUs” within its network?

Because it allows the operator to offer low-latency services (real-time AI, gaming, analytics) and monetize its network reach, while reducing dependence on remote data centers.

Does this replace or compete with hyperscalers?

It usually complements them: the core can coexist with public or private cloud, while the edge covers cases where latency, transport costs, or data sovereignty are critical.

What types of workloads benefit most from edge GPU?

Interactive inference, cloud gaming, real-time computer vision, video processing, immediate-response assistants, and event analytics with strict latency requirements.