The race to advance AI computing has reached a new frontier: Earth orbit. Starcloud, a startup in the NVIDIA Inception program, is preparing to launch Starcloud-1 in November. The 60 kg satellite, roughly the size of a small refrigerator, will mark the debut of an NVIDIA H100 GPU in space. The mission aims to demonstrate that inference and data pre-processing can be performed directly where the data is generated, without routing through terrestrial data centers. The company also argues that space-based data centers could cut energy costs by 10× and ease the load on Earth’s electrical grid.

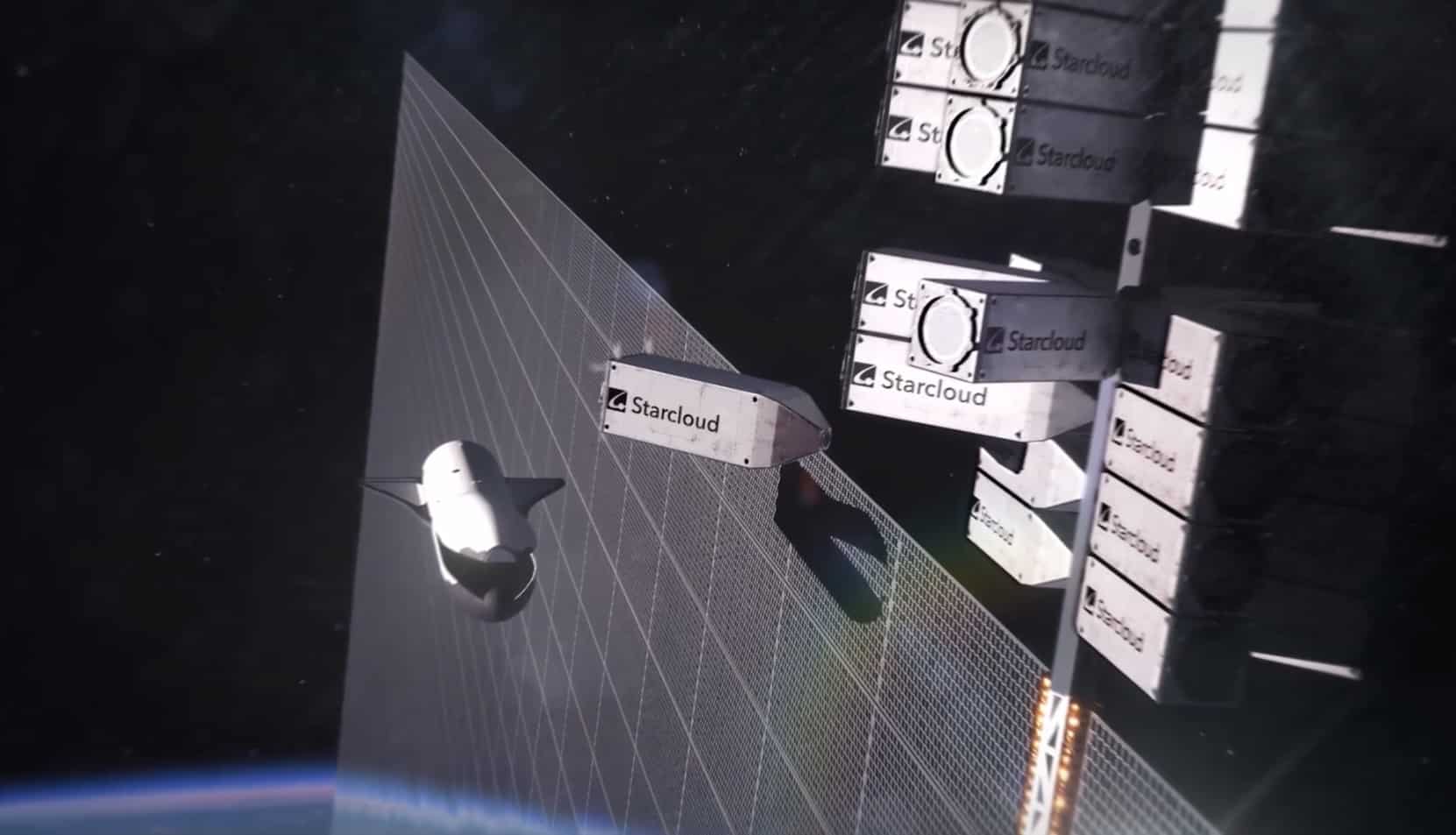

The plan is as ambitious as it is clear: a 5 gigawatt orbital data center featuring solar panels and radiators, covering approximately 4 kilometers on each side to harness continuous sunlight and use space’s vacuum as an infinite thermal sink, without evaporation towers or fresh water. “The only significant environmental footprint is in the launch; over the lifecycle of the data center, CO₂ savings compared to a terrestrial facility would be an order of magnitude,” the company states.
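
As a rough sanity check on those figures, the sketch below estimates how much sunlight a 4 km × 4 km surface intercepts and what net conversion efficiency a 5 GW output would imply. It assumes the entire square is a Sun-facing solar array (the plan describes the area as holding both panels and radiators) and uses the standard solar constant of about 1361 W/m². The function names are illustrative and do not come from Starcloud.

```python
SOLAR_CONSTANT_W_PER_M2 = 1361.0  # mean solar irradiance near Earth


def incident_power_gw(side_km: float) -> float:
    """Sunlight intercepted by a square, Sun-facing surface, in gigawatts."""
    area_m2 = (side_km * 1_000.0) ** 2
    return SOLAR_CONSTANT_W_PER_M2 * area_m2 / 1e9


def required_efficiency(target_gw: float, side_km: float) -> float:
    """Net sunlight-to-electricity efficiency needed to reach target_gw."""
    return target_gw / incident_power_gw(side_km)


# ASSUMPTION: the full 4 km x 4 km footprint is solar array facing the Sun.
incident_gw = incident_power_gw(4.0)
efficiency = required_efficiency(5.0, 4.0)

print(f"Incident sunlight: {incident_gw:.1f} GW")
print(f"Efficiency needed for 5 GW: {efficiency:.1%}")
```

Under these assumptions the array intercepts roughly 22 GW, so a 5 GW output would need a net efficiency of about 23%. If part of the footprint is radiator rather than panel, the required efficiency rises accordingly.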

H100 in Orbit and 100× More Computing Power Than Previous Missions

Starcloud-1 will be, according to the company, the first satellite equipped with a data center-class NVIDIA H100 GPU in operation. Its technical goal is to multiply the GPU computational capacity by 100 compared to previous space-based efforts, validating that AI workflows — from computer vision to compression and filtering — can run on-site with latencies of minutes rather than hours.

The mission architecture covers capture, pre-processing, and selective transmission. Where raw data was previously sent to Earth, it is now filtered and summarized in orbit. This is useful, for example, for early fire detection, emergency response, or synthetic-aperture radar (SAR) mapping, a domain that generates vast amounts of data (up to 10 GB/s) and benefits from edge inference to decide what to downlink and when.
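
To see why filtering in orbit matters, the following back-of-the-envelope sketch compares downlink times for raw and filtered SAR data. Only the 10 GB/s sensor rate comes from the article; the capture duration, downlink speed, and fraction of data kept are hypothetical values chosen for illustration.

```python
SAR_RATE_GB_PER_S = 10.0  # from the article: SAR can produce up to ~10 GB/s


def downlink_seconds(volume_gb: float, link_gbit_per_s: float) -> float:
    """Time to send volume_gb (gigabytes) over a link rated in gigabits/s."""
    return volume_gb * 8.0 / link_gbit_per_s


capture_s = 60.0        # ASSUMPTION: one minute of SAR acquisition
link_gbit_per_s = 1.0   # ASSUMPTION: hypothetical 1 Gbit/s downlink
keep_fraction = 0.01    # ASSUMPTION: onboard inference keeps 1% of the data

raw_gb = SAR_RATE_GB_PER_S * capture_s
filtered_gb = raw_gb * keep_fraction

raw_time_s = downlink_seconds(raw_gb, link_gbit_per_s)
filtered_time_s = downlink_seconds(filtered_gb, link_gbit_per_s)

print(f"Raw: {raw_gb:.0f} GB, about {raw_time_s / 60:.0f} min to downlink")
print(f"Filtered: {filtered_gb:.0f} GB, about {filtered_time_s:.0f} s to downlink")
```

With these illustrative numbers, one minute of raw capture would occupy the link for over an hour, while the filtered product fits in under a minute. Real figures depend on the ground-station pass, the link budget, and how aggressive the onboard filter is.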

Energy and Cooling: Why Space Is Tempting for AI

Starcloud’s energy story revolves around two physical facts: a nearly continuous Sun in certain orbits and the vacuum as an “infinite” thermal sink. The company claims that radiating heat via infrared to space avoids the use of water cooling — a critical point for many terrestrial data centers — and that energy costs in orbit could be 10× lower than on Earth, even accounting for launch costs. The consistent solar exposure also reduces dependencies on batteries and traditional backup systems.
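
The radiative cooling claim can be illustrated with the Stefan-Boltzmann law, which gives the power a surface radiates as emissivity × σ × area × T⁴. The sketch below is an idealized estimate, not Starcloud's thermal design: it assumes all 5 GW ends up as heat, picks a hypothetical radiator temperature and emissivity, and ignores heat absorbed from the Sun and Earth.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4)


def radiator_area_m2(heat_w: float, temp_k: float,
                     emissivity: float, sides: int = 1) -> float:
    """Ideal radiator area needed to reject heat_w by thermal radiation.

    Ignores absorbed sunlight and Earth infrared, so real radiators
    would need to be larger than this estimate.
    """
    flux_w_per_m2 = emissivity * STEFAN_BOLTZMANN * temp_k ** 4
    return heat_w / (flux_w_per_m2 * sides)


heat_w = 5e9       # ASSUMPTION: all 5 GW of electrical power becomes heat
temp_k = 300.0     # ASSUMPTION: hypothetical radiator surface temperature
emissivity = 0.9   # ASSUMPTION: hypothetical high-emissivity coating

one_sided = radiator_area_m2(heat_w, temp_k, emissivity, sides=1)
two_sided = radiator_area_m2(heat_w, temp_k, emissivity, sides=2)

print(f"One-sided radiator: {one_sided / 1e6:.1f} km^2")
print(f"Two-sided radiator: {two_sided / 1e6:.1f} km^2")
```

Because radiated power scales with the fourth power of temperature, running the radiators hotter shrinks the required area quickly, which is one reason the assumed temperature dominates this kind of estimate.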

Furthermore, the roadmap includes operations near the “terminator line” (the boundary between day and night on the planet), an orbit well suited to managing thermal loads and optimizing photovoltaic collection without impeding the mission.

Use Cases: From Earth Observation to “Sovereign” AI in Orbit

The primary domain where space computing offers advantages is Earth observation:

- Crop detection and local weather prediction using optical, hyperspectral, and SAR.

- Real-time (or near-real-time) analysis for wildfire alerts or emergencies.

- Filtering, compression, and prioritization to reduce downlink bandwidth and accelerate delivery of intelligence to ground users.
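
The prioritization step in the last item can be sketched as a simple greedy selection: rank onboard products by urgency and fill the available downlink budget from the top. Everything here (the `Product` type, the priority scores, the sizes, and the budget) is hypothetical and only illustrates the idea; it is not Starcloud's scheduler.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    name: str
    size_mb: float
    priority: float  # hypothetical urgency score, higher is more urgent


def select_for_downlink(products: list[Product],
                        budget_mb: float) -> list[Product]:
    """Greedy pick: highest priority first, skipping items that do not fit."""
    chosen: list[Product] = []
    remaining = budget_mb
    for product in sorted(products, key=lambda p: p.priority, reverse=True):
        if product.size_mb <= remaining:
            chosen.append(product)
            remaining -= product.size_mb
    return chosen


# Hypothetical onboard queue after inference and compression.
queue = [
    Product("wildfire_alert", 2.0, 0.95),
    Product("crop_map_tile", 400.0, 0.40),
    Product("raw_sar_strip", 50_000.0, 0.10),
    Product("flood_extent_mask", 30.0, 0.80),
]

selected = select_for_downlink(queue, budget_mb=500.0)
print([p.name for p in selected])
```

In this example the small, urgent alert and mask go first, the crop tile fills most of the remaining budget, and the bulky raw strip waits for a later pass. A greedy rule is easy to reason about but not optimal in general; a real system might weigh priority per megabyte or deadlines instead.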

Latency drops significantly when processing in orbit and sending only results or relevant chips of data. Privacy and data sovereignty also come into play: part of the content stays on the satellite until necessary, minimizing exposure of sensitive raw data.

Why NVIDIA and Why Now

Starcloud says it chose NVIDIA GPUs because training, fine-tuning, and inference in orbit must be competitive with terrestrial data centers for the orbital model to be viable. Launching an H100 into space aims to close that gap and bring top-tier GPU performance into an environment where weight, energy, and heat dissipation are critical. Membership in NVIDIA Inception provides technical support and access to a mature software ecosystem.

The Future: From Demonstrator to 5 GW Data Center

Starcloud-1 is just the first step. The company’s vision involves a modular 5 GW data center in orbit, scalable through solar panels and radiators, with laser interconnection for backhaul and distributed processing. Several complex questions remain to be solved, including launch logistics and costs, orbital maintenance and servicing, space debris mitigation, international regulations, space cybersecurity, and, not least, latency to surface users for interactive workloads. Nonetheless, the company argues that the energy and water savings, together with the scalability benefits, justify the venture if these issues are addressed with proper design and regulation.

A Trend Indicator: AI Moving to the Edge… Outside Earth Too

Starcloud’s proposal is part of a broader trend: bringing AI to the edge (edge computing). On Earth, “edge” might mean a factory, robot, mobile device, or vehicle; in space, the orbital edge adds a layer where sensors and actuators operate with greater autonomy. In this context, the arrival of data center-class GPUs in space signals a turning point: it is no longer just about capturing and sending data; it is about understanding and deciding where the data is captured.

Frequently Asked Questions

What exactly is Starcloud-1, and why is it relevant to AI?

It’s a 60 kg satellite equipped with an NVIDIA H100 GPU, designed to multiply space-based GPU compute by 100 and enable inference in space. It allows for filtering, summarizing, and prioritizing data before downlink, reducing latency and bandwidth requirements.

Where does the “10×” energy cost savings in orbital data centers come from?

From the constant sunlight in orbit and the vacuum acting as an “infinite” thermal sink, which eliminates water cooling. With large-area solar panels and radiators, Starcloud projects energy costs 10× lower than those of terrestrial data centers, even after accounting for launch costs.

Which applications benefit most from processing in space versus on Earth?

Earth observation (optical, hyperspectral, SAR), fire detection, emergency signals, and mapping. Real-time inference on-site turns hours into minutes and reduces raw data flows (SAR, for example, can generate ~10 GB/s) by sending only results or extracts.

What challenges remain for a 5 GW space data center?

Logistics and costs of transporting tons of hardware, orbit maintenance and replacement, regulations and international coordination, space debris mitigation, cybersecurity, and latency to ground users for interactive workloads. The company believes that the energy and water savings, along with scalability, justify taking on these challenges, provided the system is well designed and regulated.

What role does NVIDIA play in this project?

Beyond NVIDIA Inception (technical support), NVIDIA supplies the H100, the data center-class GPU selected for Starcloud-1, chosen for its performance in training, fine-tuning, and inference, plus a mature software ecosystem that facilitates transitioning terrestrial workflows to orbit.

Note: This article is based on NVIDIA’s public information about Starcloud and its Starcloud-1 mission, as well as the technical and strategic descriptions shared by the company.

via: blogs.nvidia and StarCloud