China has made another move in the global race for artificial intelligence. The Institute of Automation of the Chinese Academy of Sciences (CASIA) has just unveiled SpikingBrain-1.0, a model that is not only a significant technical breakthrough but also a geopolitical blow: for the first time, a frontier model has been trained without relying on NVIDIA chips or the CUDA ecosystem, and with an architecture different from the one that dominates the industry.

The project introduces two clear breaks from the status quo:

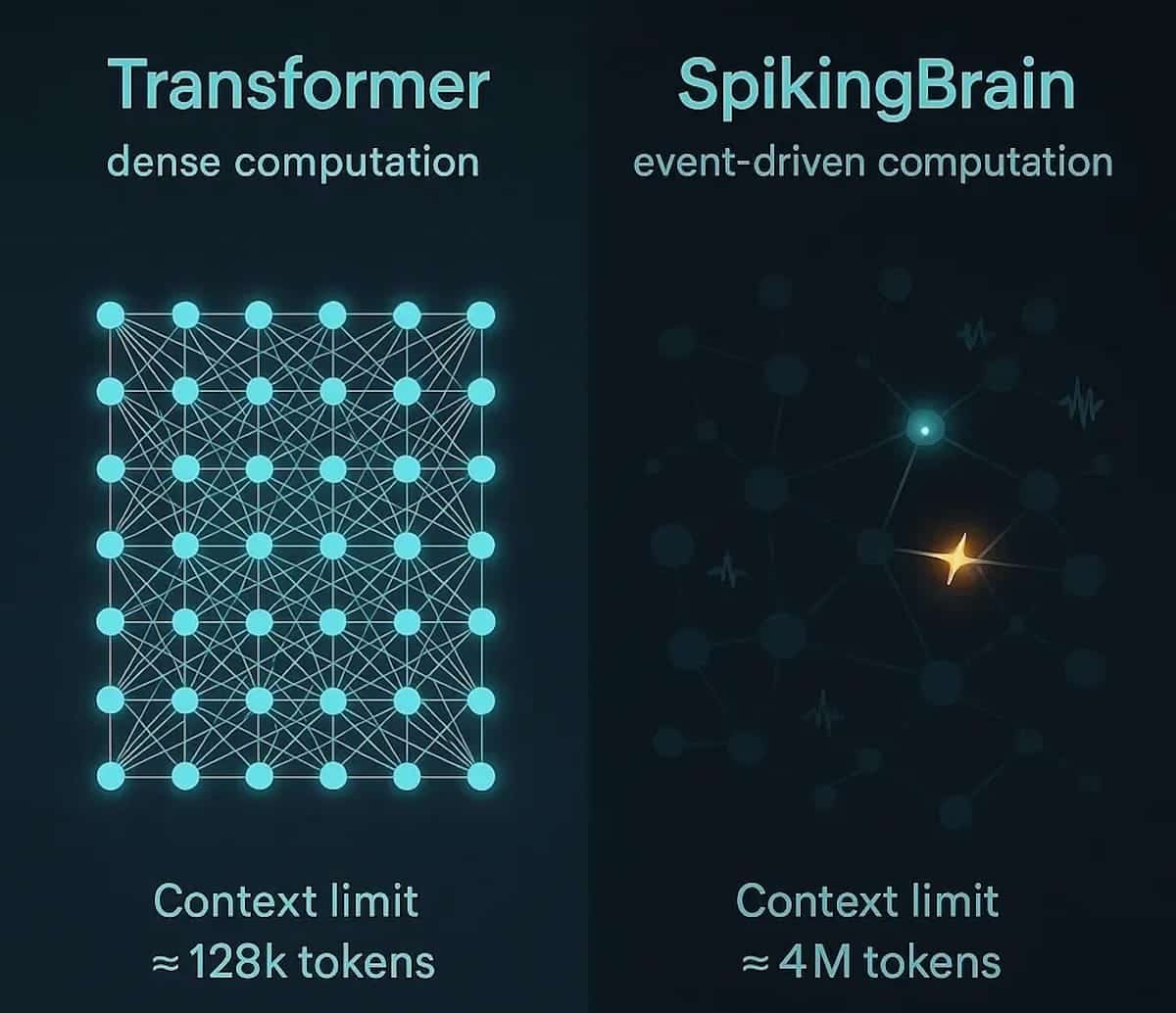

- Innovative architecture: instead of transformers, it uses spiking neural networks (SNNs) inspired by the human brain.

- Technological independence: training was conducted exclusively on MetaX C550 GPUs, manufactured in China.

Spiking Neural Networks: Brain-Inspired Efficiency

SNNs operate differently from transformers. Instead of producing continuous activations, their neurons emit discrete pulses (spikes) when their accumulated input reaches a threshold, much like neurons in the biological nervous system. Because work is triggered only when a spike occurs, this event-driven and highly sparse computing model reduces energy consumption and speeds up the processing of long contexts.
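To make the threshold-and-fire idea concrete, here is a minimal sketch of a textbook leaky integrate-and-fire (LIF) neuron. It is a generic illustration, not SpikingBrain-1.0's actual neuron model; the leak factor, threshold, and input values are arbitrary assumptions chosen for readability.

```python
from typing import List


def lif_neuron(inputs: List[float], threshold: float = 1.0, leak: float = 0.9) -> List[int]:
    """Simulate one generic leaky integrate-and-fire (LIF) neuron.

    Each step, the membrane potential decays by `leak`, adds the new input,
    and emits a spike (1) once it reaches `threshold`, then resets to 0.
    The parameter values are illustrative assumptions, not SpikingBrain's.
    """
    potential = 0.0
    spikes: List[int] = []
    for x in inputs:
        potential = leak * potential + x  # leak, then integrate the input
        if potential >= threshold:
            spikes.append(1)  # fire a discrete pulse
            potential = 0.0   # hard reset after firing
        else:
            spikes.append(0)  # silent step: nothing is sent downstream
    return spikes


def sparsity(spikes: List[int]) -> float:
    """Fraction of time steps with no spike, i.e. steps that trigger no work."""
    return 1.0 - sum(spikes) / len(spikes)


if __name__ == "__main__":
    inputs = [0.3, 0.3, 0.3, 0.3, 0.0, 0.0, 0.9, 0.2, 0.0, 0.0]
    spikes = lif_neuron(inputs)
    print(spikes)                             # [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
    print(f"sparsity: {sparsity(spikes):.0%}")  # sparsity: 80%
```

Small inputs accumulate until the potential crosses the threshold, so ten input steps produce only two spikes. In an event-driven implementation, the eight silent steps would cost little or nothing downstream, which is the intuition behind the efficiency claim above.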

According to the team's technical report, SpikingBrain-1.0 can process sequences of up to 4 million tokens, a leap forward compared with the 128,000 to 1 million tokens typical of conventional LLMs. Furthermore, the 7-billion-parameter version achieves:

- 26 times faster inference at 1 million tokens.

- 100 times faster inference at 4 million tokens.
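The jump from 26× to 100× is roughly what a simple scaling argument would predict. The back-of-the-envelope sketch below assumes, purely for illustration, that the baseline's cost is dominated by quadratic self-attention and that the alternative scales linearly with sequence length; the helper name `growth` is hypothetical, and this is arithmetic, not a model of SpikingBrain-1.0's actual runtime.

```python
def growth(n_short: int, n_long: int, exponent: int) -> float:
    """Factor by which cost grows from n_short to n_long tokens,
    assuming cost is proportional to n ** exponent (idealized assumption)."""
    return (n_long / n_short) ** exponent


SHORT, LONG = 1_000_000, 4_000_000

quadratic_growth = growth(SHORT, LONG, 2)  # full self-attention: O(n^2)
linear_growth = growth(SHORT, LONG, 1)     # linear-time sequence mixing: O(n)

# If the speedup over a quadratic baseline is 26x at 1M tokens, an idealized
# quadratic-vs-linear model predicts it grows by quadratic_growth / linear_growth.
speedup_1m = 26.0
predicted_speedup_4m = speedup_1m * quadratic_growth / linear_growth

print(f"quadratic cost grows {quadratic_growth:.0f}x, linear cost grows {linear_growth:.0f}x")
print(f"predicted speedup at 4M tokens: {predicted_speedup_4m:.0f}x")
```

Quadrupling the context multiplies a quadratic cost by 16 but a linear cost only by 4, so the idealized speedup itself grows fourfold, from 26× to about 104×. That is close to the reported 100×, which is consistent with, but does not prove, a linear-versus-quadratic explanation; real speedups also depend on memory bandwidth, kernels, and hardware.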

Training Without NVIDIA: The MetaX C550 Hardware Choice

The second pillar of this breakthrough is hardware. CASIA trained SpikingBrain-1.0 on hundreds of MetaX C550 GPUs, accelerators developed in China as a domestic alternative to NVIDIA's A100 and H100.

This represents a strategic decoupling from the US-dominated technological infrastructure and shows that Beijing can advance frontier models despite export restrictions. It is not only a technical achievement but also a political message, pointing towards the creation of a sovereign AI stack with independent hardware and software.

Comparison: SpikingBrain-1.0 Versus Current Leaders

To put the model's scope in perspective, it helps to compare it with the leading LLMs on the market:

| Model | Architecture | Training Hardware | Max Context (tokens) | Speed in Long Contexts | NVIDIA Dependency |
|---|---|---|---|---|---|
| SpikingBrain-1.0 | Spiking Neural Networks (SNNs) | MetaX C550 GPUs (China) | 4,000,000 | 26× faster (1M), 100× faster (4M) | No |
| GPT-4.1 | Transformer | NVIDIA H100 GPUs + CUDA | 1,000,000 | High, but energy-intensive | Yes |
| Claude Sonnet (Anthropic) | Transformer | NVIDIA A100/H100 GPUs | 200,000 – 1,000,000 | Reasonably efficient in reasoning, but limited in long sequences | Yes |
| Gemini 1.5 (Google DeepMind) | Transformer | TPUv5 + NVIDIA GPUs | 1,000,000 | Optimized for multimodal tasks, not ultra-long contexts | Partial |
| Llama 3.1 (Meta) | Transformer (open source) | NVIDIA A100/H100 GPUs | 128,000 | Standard inference is adequate, not designed for extended contexts | Yes |

A Global-Scale Breakthrough

The release of SpikingBrain-1.0 transcends purely technical boundaries. It marks the beginning of an alternative path outside Silicon Valley’s model, characterized by:

- Architectural diversification: it demonstrates that AI does not have to depend solely on transformers.

- Energy efficiency: SNNs could drastically cut data center energy consumption.

- Technological sovereignty: China proves it can train frontier models without relying on the US.

- AI geopolitics: reinforces the idea of a “bifurcated world” in artificial intelligence.

A First Step Toward Neuromorphic AI

Although SpikingBrain-1.0 does not yet match giants like GPT-4.1 in overall capabilities, its value lies in demonstrating an alternative direction. Neuromorphic computing, inspired by the brain, promises to surpass current scalability and sustainability limits, potentially redefining the next generation of AI.

Today it is an ambitious proof of concept; tomorrow it might set a new standard.

Conclusion

The debut of SpikingBrain-1.0 is much more than an academic experiment: it's a warning to the world. China not only aims to compete in AI but wants to do so with its own technological and political model. While the model still has a way to go before matching leading LLMs, the focus on neuromorphic architectures and domestic hardware could give the country an advantage in the coming decade.

In short: fewer transformers, smarter neurons, and no NVIDIA on the horizon.

Frequently Asked Questions

What advantages does SpikingBrain-1.0 have over models like GPT-4?

It offers much longer contexts (up to 4 million tokens) and inference speeds up to 100 times faster in long sequences, with lower energy consumption.

What hardware was used to train the model?

It was trained on MetaX C550 GPUs, manufactured in China, without dependence on NVIDIA or CUDA.

Is SpikingBrain-1.0 already a rival to GPT-4 or Claude?

Not yet. While it doesn't match their overall performance, it opens a promising alternative path toward more efficient and sustainable architectures.

What does this advance mean geopolitically?

It shows China can develop frontier models without US hardware, reinforcing its technological sovereignty and accelerating the decoupling between Eastern and Western AI ecosystems.

via: arXiv