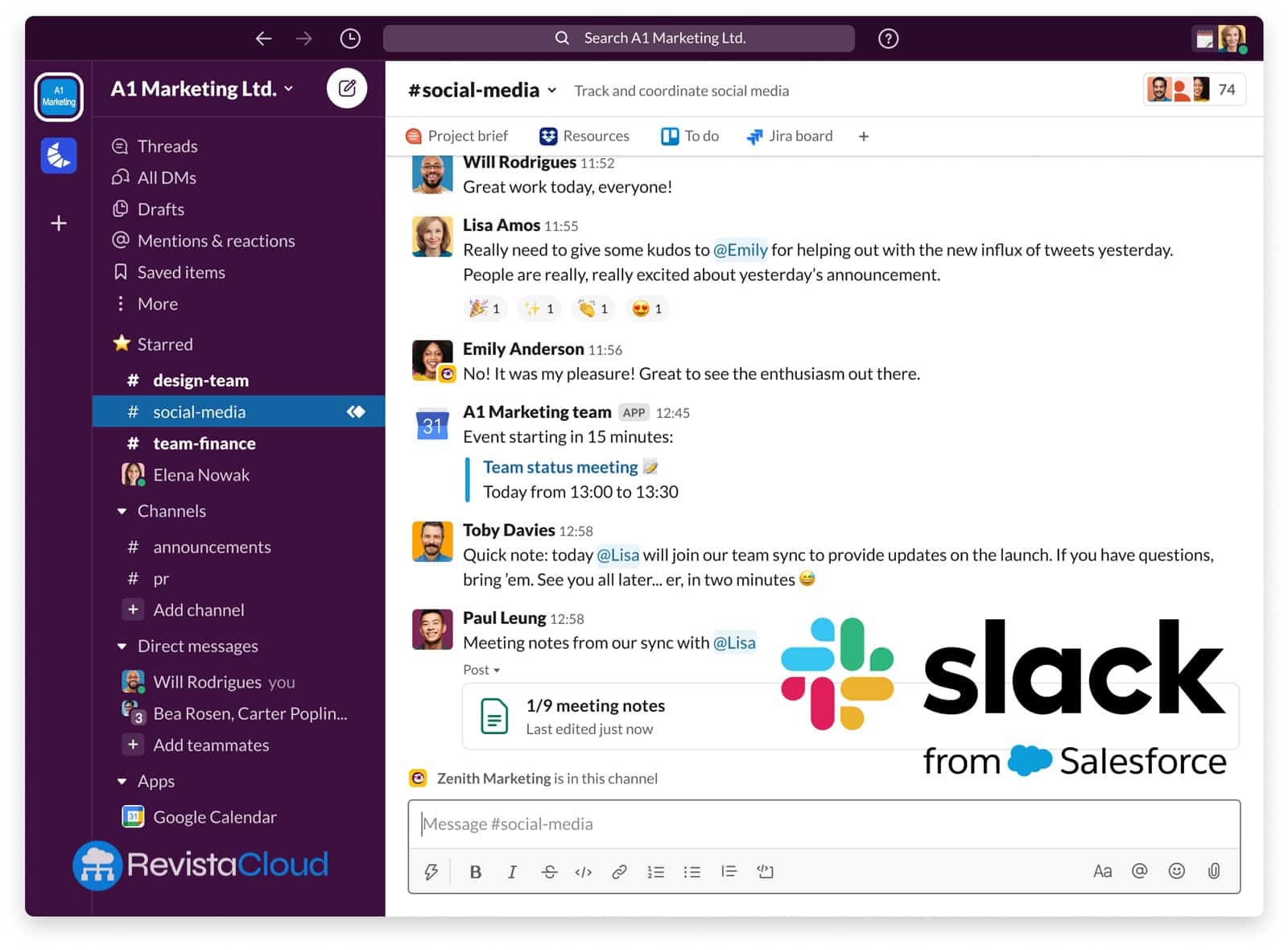

Slack, Salesforce’s workplace messaging platform, aims to become the work layer where AI agents live and are genuinely useful. In pursuit of that vision, the company has introduced two technical components: a Real-Time Search (RTS) API and a Model Context Protocol (MCP) server. Together, they promise something that had previously been elusive: letting AI applications access the context already in Slack (messages, channels, files) with fine-grained permissions and without copying data, and use it to respond, decide, and act within the workflow itself.

The move isn’t trivial. In the agent economy, conversational data is gold: meetings, decisions, agreements, questions, to-dos… almost everything originates or concludes in a thread. But this corpus is often disorganized and locked away. Slack wants to open it up in a controlled way, so that AI stops giving generic answers and starts responding precisely to questions like “what did my team say yesterday at 11:03 in #sales-emea?”

Beyond the two flagship components, the platform adds Slack Work Objects (a standard way to embed third-party app data in conversations) and new developer tools: AI best practices, prebuilt Block Kit Tables, an updated CLI for Bolt apps, and lifecycle improvements. All of this hinges on one key promise: enterprise-grade security and full respect for user- and channel-level permissions.

Why now: too many apps, little usage, and up to 40% of productivity lost between tabs

Slack frames the announcement around a familiar and persistent problem: fragmentation. According to Slack’s figures, there are more than 200,000 SaaS companies worldwide, and a large enterprise uses over 1,000 applications. The result is constant switching between tools, copying and pasting, and context switching that, the company claims, can drain up to 40% of productivity. Nearly half of SaaS licenses end up as shelfware.

Slack’s bet is to bring AI and data into the conversation, where employees already are, rather than push staff into yet another tab. As Slack CEO Denise Dresser puts it: “The future of work is agentic, and AI’s success depends on its seamless integration into human flows.” Given that only 2% of IT budgets go to Slack, the company says, the goal is for that 2% to maximize the performance of the remaining 98%.

RTS API: conversation as a knowledge base, without “vacuuming up” data

The Real-Time Search API is the conduit. It lets an app or agent query Slack in real time for relevant messages, files, and channels, subject to the caller’s permissions. Instead of copying data to another service or running bulk downloads, the agent asks only for what it needs (“bring me the last five messages about ‘Q4 Budget Europe’ in channels I have access to”), receives only that, and acts within Slack.
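To make the idea concrete, here is a minimal in-memory sketch of permission-aware, on-demand retrieval. It is not the RTS API: the `Message` type, the `search_messages` function, and the channel names are hypothetical, and real ranking is surely more sophisticated than keyword matching plus recency. What it illustrates is the order of operations described above: restrict to what the caller may read, match the query, and return only the few freshest hits.

```python
from dataclasses import dataclass
from datetime import datetime

# Hypothetical message model for illustration; not the RTS API's schema.
@dataclass(frozen=True)
class Message:
    channel: str
    author: str
    text: str
    ts: datetime

def search_messages(query: str, messages: list[Message],
                    allowed_channels: set[str], limit: int = 5) -> list[Message]:
    """Return up to `limit` messages containing every query term, newest first,
    drawn only from channels the caller is allowed to read."""
    terms = [t.lower() for t in query.split()]
    # 1. Permission filter: nothing outside the caller's channels is considered.
    visible = [m for m in messages if m.channel in allowed_channels]
    # 2. Relevance: naive case-insensitive substring match on every term.
    hits = [m for m in visible if all(t in m.text.lower() for t in terms)]
    # 3. Freshness and minimalism: newest first, truncated to `limit`.
    hits.sort(key=lambda m: m.ts, reverse=True)
    return hits[:limit]

msgs = [
    Message("#finance-eu", "ana", "Q4 Budget Europe draft is ready",
            datetime(2025, 10, 1, 9, 0)),
    Message("#finance-eu", "li", "Updated Q4 Budget Europe numbers",
            datetime(2025, 10, 2, 11, 3)),
    Message("#exec-private", "cfo", "Q4 Budget Europe cuts (confidential)",
            datetime(2025, 10, 3, 8, 0)),
    Message("#random", "max", "Lunch at noon?", datetime(2025, 10, 3, 12, 0)),
]

# The caller is not a member of #exec-private, so the CFO's message never surfaces.
for m in search_messages("Q4 Budget Europe", msgs, {"#finance-eu", "#random"}):
    print(m.channel, m.author, m.text)
```

Applying the permission filter before matching, rather than after, means restricted content never even enters the candidate set, which is the property the governance point below relies on.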

The value proposition:

- Fresh context: responses based on the most recent real conversation, not an outdated mirror.

- Fewer hallucinations: the agent anchors its responses in actual messages/files, reducing the tendency to “make things up.”

- Governance: RTS respects permissions and boundaries; it doesn’t expose DMs or private channels to users who aren’t authorized to see them.

Slack claims that customers who tap their conversational knowledge save an average of 97 minutes per week, make decisions 37% faster, and respond to their own customers 36% faster. These are Slack’s internal figures, but they anchor its overall value proposition.

MCP server: a common language for LLMs to discover context and perform tasks

The Model Context Protocol (MCP) is the other piece. Think of it as a bus connecting LLMs, apps, and Slack: a unified protocol that standardizes how a model discovers contextual information and executes tasks on the user’s behalf, without the developer having to integrate each service individually or define every possible action in advance.

With MCP, an app declares which data it can expose and which actions it supports (“create ticket,” “mark task as completed,” “attach this file”), and the LLM discovers them through a consistent schema. Instead of a jumble of webhooks and endpoints, there is a single route that respects permissions.
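MCP itself is an open specification built on JSON-RPC 2.0, in which a server advertises tools through a `tools/list` method and executes them through `tools/call`, each tool described by a name, a description, and a JSON Schema for its input. The sketch below is a toy, transport-free dispatcher in that shape, assuming two hypothetical tools (`create_ticket`, `mark_task_completed`) and a hypothetical task store. It is not Slack’s MCP server, whose tool names and schemas may differ, and it omits the per-user permission checks a real server would apply.

```python
import json
from typing import Any, Callable

# Hypothetical app state: a tiny task store the tools operate on.
TASKS: dict[str, str] = {"T-1": "open"}

def create_ticket(title: str) -> str:
    ticket_id = f"T-{len(TASKS) + 1}"
    TASKS[ticket_id] = "open"
    return f"created {ticket_id}: {title}"

def mark_task_completed(task_id: str) -> str:
    if task_id not in TASKS:
        raise KeyError(f"unknown task {task_id}")
    TASKS[task_id] = "completed"
    return f"{task_id} marked completed"

# Tool registry: name -> (description, JSON Schema for arguments, handler).
TOOLS: dict[str, tuple[str, dict[str, Any], Callable[..., str]]] = {
    "create_ticket": (
        "Create a support ticket",
        {"type": "object", "properties": {"title": {"type": "string"}},
         "required": ["title"]},
        create_ticket,
    ),
    "mark_task_completed": (
        "Mark an existing task as completed",
        {"type": "object", "properties": {"task_id": {"type": "string"}},
         "required": ["task_id"]},
        mark_task_completed,
    ),
}

def handle(request: dict[str, Any]) -> dict[str, Any]:
    """Answer one JSON-RPC 2.0 request using MCP's tools/list and tools/call."""
    req_id = request.get("id")

    def reply(**body: Any) -> dict[str, Any]:
        return {"jsonrpc": "2.0", "id": req_id, **body}

    method = request.get("method")
    if method == "tools/list":
        # Discovery: the model learns each tool's name, purpose, and input shape.
        tools = [{"name": name, "description": desc, "inputSchema": schema}
                 for name, (desc, schema, _) in TOOLS.items()]
        return reply(result={"tools": tools})
    if method == "tools/call":
        params = request.get("params", {})
        entry = TOOLS.get(params.get("name", ""))
        if entry is None:
            return reply(error={"code": -32602, "message": "Unknown tool"})
        handler = entry[2]
        try:
            text = handler(**params.get("arguments", {}))
            return reply(result={"content": [{"type": "text", "text": text}],
                                 "isError": False})
        except (KeyError, TypeError) as exc:
            # Tool-level failures are reported in the result, not as protocol errors.
            return reply(result={"content": [{"type": "text", "text": str(exc)}],
                                 "isError": True})
    return reply(error={"code": -32601, "message": "Method not found"})

listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
print([t["name"] for t in listing["result"]["tools"]])
call = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
               "params": {"name": "mark_task_completed",
                          "arguments": {"task_id": "T-1"}}})
print(json.dumps(call["result"]))
```

The point of the design is that the model needs no prior knowledge of the app: it calls `tools/list`, reads the schemas, and then issues `tools/call` with arguments that match them.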

Work Objects and developer tools: third-party data you can “see and touch” in the thread

Slack Work Objects add a visual and interactive layer. Developers can connect data from their app (descriptions, images, status, documents) to the conversation as rich previews. The idea is that the user sees the work item (the Asana ticket, the CRM case, the marketing plan) and acts on it (for example, marking it as completed) without leaving Slack.

For developers, Slack introduces ready-to-use Block Kit Tables, AI best-practice guides, and an updated Bolt CLI with supporting resources. The promise: less time on infrastructure, more focus on the end-user experience.

Who’s jumping on board: Anthropic, Google, Perplexity, Writer, Dropbox, Notion…

Slack affirms that major players are already using RTS and/or MCP:

- Claude (Anthropic): users can reach the assistant in Slack via DM, the side panel, or an @mention. With RTS, it searches channels, messages, and files (within the user’s permissions) and returns answers grounded in the team’s actual work.

- Google Agentspace: a platform for building and managing agents at scale. With RTS, it brings Slack context into Agentspace and, in the other direction, surfaces Agentspace insights inside Slack.

- Perplexity Enterprise: combines web results with team conversations to give contextual answers, whether the user asks from Slack or from Perplexity.

- Dropbox Dash: uses RTS to provide real-time access to content from Slack and Dropbox, respecting permissions.

- Notion AI: lets users ask about threads or conversations they missed; RTS enables search across public channels, private channels and, where permissions allow, DMs, so that Notion can reflect what’s happening in Slack.

The rest of the ecosystem—Writer, Cognition Labs, Vercel, Cursor—follows the same path: bring intelligence into the workflow without breaking security or copying data outside.

Security, compliance, and marketplace: what reassures IT

Slack emphasizes its “enterprise-grade security approach”: granular permissions, privacy, and controls for third parties. The implicit message: there’s no need to “export” conversations for AI to understand them; AI accesses conversations under the same rules as a user.

All these apps are available in the Slack Marketplace, which serves as a one-stop shop for distribution and control. It’s not just about connecting tools; it’s about creating a unified human-AI collaboration ecosystem where agents are easier to discover, approve, and manage.

Availability and roadmap

- RTS API and MCP server: closed beta; general availability expected in early 2026.

- Slack Work Objects: GA for all developers by late October.

- Developer tools for agentic apps: already available.

- Third-party agents using these capabilities: already in Slack Marketplace.

Slack notes that this information should not be used to make purchasing decisions; timelines and features may change at Salesforce’s discretion.

What it means for companies and developers (beyond the “hype”)

For companies, the promise is twofold: less friction (AI “lives” where employees work) and greater trust (permissions respected, no bulk exports). If a bot needs to see something, it asks for it; if it doesn’t need it, it doesn’t see it. Governance isn’t a separate spreadsheet: it’s the same permission model Slack already uses.

For developers, the entry point shifts from a generic connector to a contextual agent. With RTS + MCP, an LLM discovers what it can do and requests exactly the piece of context it needs, which makes the agent more accurate, reliable, and trustworthy. Just as important, it becomes distributable: the marketplace removes one of the usual barriers (getting employees to actually use apps) by bringing apps to where people already work.

Where do the open questions lie? Three main areas:

- Costs: real-time calls to conversational data aren’t free; a balance must be struck among latency, cost per query, and value.

- Privacy: even if permissions are respected, organizations will want explicit policies for DMs and sensitive channels.

- Avoiding noise: a contextual agent can become intrusive if it intervenes too often. The user experience, including how easily the agent can be muted, will be as critical as accuracy.

A concrete example: from “search in Drive” to “close case #8412”

Imagine an AI support agent being asked in #SaaS-Incidents: “What did we agree with Daylight regarding the premium support SLA?” With RTS, the agent searches recent threads mentioning “Daylight” and “SLA”, finds the message where a manager set “response in 2 hours, 24/7”, and replies quoting that message. With MCP, the agent also opens a Work Object for the case in the CRM and lets the user close it as resolved without leaving the thread. Permissions still apply throughout: if the user can’t see the DMs where the topic was discussed, the agent can’t see them either.
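The permission rule at the end of this scenario is the crux, so here is a minimal sketch of it under stated assumptions: the agent searches with the asking user’s identity, so its visible set is exactly the user’s membership set. All data and names (`visible_to`, `answer`, `close_case`, the users, and the DM) are hypothetical stand-ins for RTS and an MCP tool behind the Work Object, not Slack code.

```python
from dataclasses import dataclass

# Hypothetical stand-ins for Slack conversations; not the RTS response format.
@dataclass(frozen=True)
class Msg:
    conversation: str  # channel name or DM identifier
    author: str
    text: str
    ts: float          # larger value = more recent

def visible_to(user: str, members: dict[str, set[str]]) -> set[str]:
    """Conversations the user belongs to; the agent inherits exactly this set."""
    return {conv for conv, people in members.items() if user in people}

def answer(user: str, terms: list[str], messages: list[Msg],
           members: dict[str, set[str]]) -> str:
    """Quote the most recent visible message that mentions every term."""
    allowed = visible_to(user, members)
    hits = [m for m in messages
            if m.conversation in allowed
            and all(t.lower() in m.text.lower() for t in terms)]
    if not hits:
        return "I couldn't find anything you have access to."
    best = max(hits, key=lambda m: m.ts)
    return f'{best.author} in {best.conversation}: "{best.text}"'

# Hypothetical CRM case store and MCP-style action behind the Work Object.
CASES = {"8412": "open"}

def close_case(case_id: str) -> str:
    CASES[case_id] = "resolved"
    return f"case #{case_id} resolved"

members = {
    "#SaaS-Incidents": {"marta", "sam", "joe"},
    "dm-joe-pat": {"joe", "pat"},  # a DM that sam is not part of
}
messages = [
    Msg("#SaaS-Incidents", "marta",
        "Daylight premium support SLA: response in 2 hours, 24/7", 100.0),
    Msg("dm-joe-pat", "pat", "Daylight may ask to renegotiate the SLA", 200.0),
]

print(answer("sam", ["Daylight", "SLA"], messages, members))
# prints: marta in #SaaS-Incidents: "Daylight premium support SLA: response in 2 hours, 24/7"
print(close_case("8412"))
# prints: case #8412 resolved
```

If joe, who is in the DM, asked the same question, the sketch would quote the newer DM message instead; for someone in neither conversation it finds nothing.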

This is the kind of real productivity—and governance—that companies want to see.

Conclusion: from “glue” bot to context-aware agent

For a decade, Slack served as the glue connecting tools. Now, with RTS and MCP, it aims to become the nervous system where AI not only connects tools but understands context and acts within permissions. If the promise is fulfilled, and the timelines hold, 2026 could be the year when conversations stop being a black box for AI and become the fuel that makes it genuinely useful.

Until then, there is a closed beta and an ecosystem (Anthropic, Google, Perplexity, Writer, Dropbox, Notion…) already demonstrating early use cases. The challenge isn’t small, but the path is clear: contextual AI, where you already work.

Frequently Asked Questions

What is Slack’s RTS API, and how does it differ from data export?

RTS allows an app or agent to query messages, files, and channels in real time, retrieving only what’s relevant to a given action and respecting permissions. It doesn’t involve bulk downloads or replicating conversations outside Slack, which reduces risk and simplifies compliance.

What purpose does the Model Context Protocol (MCP) serve in Slack?

MCP is a unified protocol enabling LLMs and apps to discover context and execute tasks within Slack without ad hoc integrations. It standardizes how a model accesses data and actions under the user’s permissions.

How does Slack protect privacy if agents can read conversations?

RTS honors existing permissions (public and private channels, DMs) and returns only relevant snippets. There is no mass downloading or data duplication outside Slack, and administrators retain granular controls.

When will these capabilities be available to everyone?

The RTS API and MCP server are in closed beta, with general availability expected in early 2026. Work Objects will reach GA by late October, and the developer tools are already available.

via: salesforce