In the race for Artificial Intelligence (AI), the big battles are no longer fought only on accelerators or processors. Increasingly, the bottleneck is memory: how much capacity can be packed in, how fast data moves, and, above all, how much energy that movement consumes. Against this backdrop, SK hynix has chosen CES 2026 to showcase a roadmap pointing directly to the industry’s next big leaps: from high-capacity HBM4 to new modules designed for AI servers and mobile memory aimed at “on-device AI.”

The South Korean company will open a customer exhibition booth at the Venetian Expo in Las Vegas from January 6 to 9, 2026, under a message that summarizes its strategy: “Innovative AI, Sustainable tomorrow.” At a time when data centers are measured by density, cooling, and operating costs, “sustainable” is no longer an empty slogan: it is a way of talking about performance per watt and efficiency across the entire chain, from chip to system.

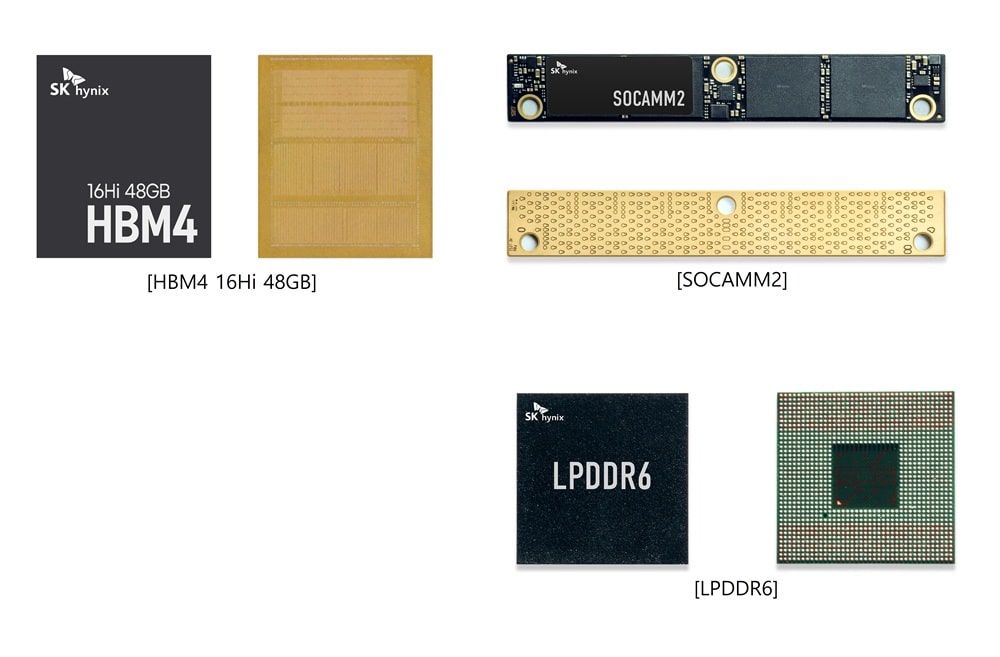

16-Layer 48 GB HBM4: Showcasing the Future of Memory

The centerpiece of the booth will be a memory aimed at next-generation AI platforms: 16-layer HBM4 with 48 GB. SK hynix is unveiling it at CES for the first time as a direct evolution of its 12-layer 36 GB HBM4, a product that, according to the company, has demonstrated a per-pin speed of 11.7 Gbps and is being developed “aligned with customer schedules.”

In practice, this kind of announcement reflects how the market is moving: HBM (High Bandwidth Memory) is today the “lungs” of the accelerators used for training and inference. As models grow and workloads demand more context and parallelism, memory manufacturers respond with two levers: more capacity per stack and faster I/O speeds. The jump to 48 GB in 16 layers pulls the first lever hard, while the 11.7 Gbps demonstrated on the 12-layer part speaks to the second.
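The two levers can be made concrete with back-of-the-envelope arithmetic. The sketch below assumes the 2,048-bit per-stack interface defined in the JEDEC HBM4 standard (the announcement does not state SK hynix’s interface width), treats 11.7 Gbps as a per-pin rate, and applies that speed only to the 12-layer part for which it was reported. The function names are illustrative.

```python
# Back-of-the-envelope HBM arithmetic (a sketch, not vendor data).
# Assumption: the 2,048-bit per-stack interface from the JEDEC HBM4 standard.
HBM4_INTERFACE_BITS = 2048


def per_layer_gb(stack_gb: float, layers: int) -> float:
    """Capacity contributed by each DRAM layer in the stack, in GB."""
    return stack_gb / layers


def stack_bandwidth_gb_per_s(pin_speed_gbps: float,
                             interface_bits: int = HBM4_INTERFACE_BITS) -> float:
    """Theoretical peak per-stack bandwidth in GB/s: rate per pin x pins / 8 bits per byte."""
    return pin_speed_gbps * interface_bits / 8


print(per_layer_gb(36, 12))              # 3.0  (12-layer 36 GB HBM4)
print(per_layer_gb(48, 16))              # 3.0  (16-layer 48 GB HBM4)
print(stack_bandwidth_gb_per_s(11.7))    # 2995.2 GB/s, i.e. roughly 3 TB/s
```

Both stacks work out to 3 GB (24 Gb) per DRAM layer, so under these assumptions the extra capacity comes from stacking four more layers rather than from denser dies, and 11.7 Gbps across 2,048 pins gives a theoretical peak of roughly 3 TB/s per stack.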

SK hynix did not give a commercial release date for the 48 GB HBM4 in its booth announcement, but the intention is clear: to show partners and customers where its catalog is headed as the industry begins deploying new generations of AI hardware.

36 GB HBM3E: The Memory Set to Drive the Market “This Year”

Alongside HBM4, the company will also showcase 12-layer HBM3E with 36 GB, which it describes as the product set to drive the market “this year.” This matters beyond the headline: HBM3E is a key technology in the AI infrastructure being deployed today, and SK hynix aims to consolidate its position as a leading supplier.

The company will also exhibit, together with a partner, GPU modules for AI servers that incorporate HBM3E, to illustrate how this memory fits into a complete system. It is a way of grounding the announcement: the point is not an isolated chip but how it integrates into the final product deployed in data centers.

SOCAMM2: Low-Power Modules for AI Servers

Another focal point at the booth will be SOCAMM2, described as a low-power memory module designed specifically for AI servers. Its presence reflects an accelerating trend: AI growth demands not only extreme bandwidth next to the accelerators (HBM) but also efficient, modular memory across the rest of the system, addressing both power consumption and scalability.

According to SK hynix, SOCAMM2 underlines that its strategy is not limited to HBM but covers the complete system: AI is not built from a single “bright chip,” but from memory, interconnect, and storage working in harmony.

LPDDR6: Faster and More Efficient On-Device AI

Alongside its data center lineup, SK hynix will also display LPDDR6, aimed at on-device AI. The company claims this generation significantly improves data processing speed and energy efficiency over previous generations.

This type of memory is particularly relevant because local models (on laptops, mobile devices, industrial equipment, and automotive systems) are booming, driven by concerns about privacy, latency, and cost. For this kind of “nearby AI,” mobile memory must evolve as well: an NPU or other accelerator is not enough on its own, and feeding it data efficiently remains essential.

321-Layer 2 Tb QLC NAND: Storage Capacity for AI Data Centers

The exhibition isn’t limited to DRAM. In NAND, SK hynix will present a 321-layer, 2 Tb QLC memory designed for ultra-high-capacity eSSDs, a segment that is expanding along with AI-focused data centers. The company claims that, backed by “industry-leading integration,” this product improves energy efficiency and performance over previous QLC generations, which is especially relevant where every watt matters.
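To give a sense of scale, the sketch below works through the die arithmetic for a 2 Tb QLC part. It assumes “2 Tb” means 2 × 2^40 bits, ignores over-provisioning, ECC, and spare blocks, and uses a hypothetical 128 TiB raw-capacity drive purely as an example; none of these figures come from SK hynix.

```python
# NAND die arithmetic for a 2 Tb QLC die (a sketch under stated assumptions).
# Assumption: "2 Tb" = 2 * 2**40 bits; over-provisioning, ECC and spares ignored.
BITS_PER_DIE = 2 * 2**40
BITS_PER_QLC_CELL = 4  # QLC stores four bits in each cell


def die_capacity_bytes() -> int:
    """Raw capacity of one die in bytes."""
    return BITS_PER_DIE // 8


def cells_per_die() -> int:
    """Cells needed to hold the die's bits at four bits per cell."""
    return BITS_PER_DIE // BITS_PER_QLC_CELL


def dies_needed(raw_capacity_bytes: int) -> int:
    """Dies required for a given raw capacity, rounded up (integer ceiling)."""
    return -(-raw_capacity_bytes // die_capacity_bytes())


TIB = 2**40
print(die_capacity_bytes() // 2**30)  # 256 (GiB per die)
print(dies_needed(128 * TIB))         # 512 dies for a hypothetical 128 TiB drive
```

Packing four bits into each cell is what lets a single die reach this capacity; the integer ceiling avoids floating-point rounding for very large byte counts.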

The underlying message is clear: AI projects not only consume compute but also generate and store massive volumes of data, checkpoints, embeddings, and training artifacts. Storage thus becomes a strategic component for controlling costs and enabling scale.

“AI System Demo Zone”: When Memory Starts Doing Part of the Work

One of the most striking elements of the announcement is a system demonstration area (“AI System Demo Zone”) showing how SK hynix’s solutions connect to form an ecosystem. Here the company ventures into more futuristic territory, presenting a large-scale display model of cHBM (Custom HBM), an idea that reflects the industry’s shift from “more raw performance” to “more inference efficiency and cost optimization.”

The concept behind cHBM, according to SK hynix, is to move some functions traditionally found in GPUs or ASICs into the HBM “base die,” the logic die at the bottom of the memory stack, with the goal of improving overall system efficiency by reducing the energy spent moving data.

Within this zone, SK hynix also presents other projects and concepts that share a single obsession: reducing the cost of data movement.

- PIM (Processing-In-Memory): memory with computational capabilities to alleviate data movement bottlenecks.

- AiMX: a prototype accelerator card based on a specialized chip for language models.

- CuD (Compute-using-DRAM): aimed at executing simple calculations within memory itself.

- CMM-Ax: a module combining compute capabilities with memory expansion via CXL.

- Data-aware CSD (computational storage device): storage that can process data on its own.

Rather than finished products, these initiatives are signals: the future of AI is not just about adding GPUs but about redesigning the entire system, turning memory and storage into active components rather than passive ones.
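The shared idea behind PIM, CuD, and cHBM can be illustrated with a deliberately simplified model. This is a hypothetical sketch, not how any SK hynix design works: it counts the bytes that cross the memory-to-processor link when summing a vector of float32 values, first by reading every operand out to the processor and then by performing the sum inside memory and returning only the result. The constants and function names are assumptions chosen for illustration.

```python
# Toy data-movement model (hypothetical; not SK hynix's PIM, CuD, or cHBM design).
# Counts bytes crossing the memory-to-processor link when summing float32 values.
FLOAT32_BYTES = 4
RESULT_BYTES = 8  # assumption: the in-memory unit returns one 64-bit result


def bytes_moved_conventional(n_elements: int) -> int:
    """Every operand is read out of memory and summed on the processor."""
    return n_elements * FLOAT32_BYTES


def bytes_moved_in_memory(n_elements: int) -> int:
    """The sum is computed next to the data; only the result crosses the link.

    n_elements is kept for a symmetric signature; in this idealized model the
    traffic does not depend on it because command overhead is ignored.
    """
    return RESULT_BYTES


def traffic_reduction(n_elements: int) -> float:
    """How many times less data crosses the link in the in-memory case."""
    return bytes_moved_conventional(n_elements) / bytes_moved_in_memory(n_elements)


n = 1_000_000
print(bytes_moved_conventional(n))  # 4000000
print(traffic_reduction(n))         # 500000.0
```

Real systems also pay for commands, partial results, and the compute itself, so actual savings depend on the workload; the model only shows why moving simple computation to the data shrinks the traffic these projects target.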

Customer-Centric Strategy and “New Value” in AI

SK hynix also emphasized a shift in focus at CES: this year it is centering its presence on the customer booth, a hub where key partners can discuss collaboration and development. In the words of Justin Kim, President and Head of AI Infra at SK hynix, customers’ technical requirements “are evolving rapidly,” and the company aims to respond with differentiated solutions and close cooperation to “create new value” in the AI ecosystem.

This message matches market reality: the pace of AI deployment is pushing the entire supply chain (memory, interconnect, storage) to evolve as quickly as the models. CES 2026 is not just a showcase; it is where much of the near-term hardware powering the next wave of AI gets negotiated.

Frequently Asked Questions

What advantages does the 48 GB HBM4 memory bring over previous generations in AI infrastructure?

It offers more capacity per stack (48 GB versus 36 GB for the 12-layer HBM4) and targets higher transfer speeds, both crucial for feeding accelerators that run increasingly demanding training and inference workloads.

What is SOCAMM2 used for in AI servers, and why is low power emphasized?

It is designed as a specialized low-power module for AI servers, where power efficiency and scalability are critical for expanding capacity without energy costs skyrocketing.

What is cHBM (Custom HBM) and why is “inference efficiency” a hot topic now?

cHBM moves functions traditionally handled by GPUs or ASICs into the HBM base die to reduce data movement and boost system efficiency. “Inference efficiency” is gaining importance as organizations seek to cut the cost of serving models in production.

Why is a 321-layer, 2 Tb QLC NAND relevant for AI data centers?

Because AI deployments require high-capacity storage solutions like eSSD for data, models, and workflows; improving density and energy efficiency in NAND helps contain costs at scale.