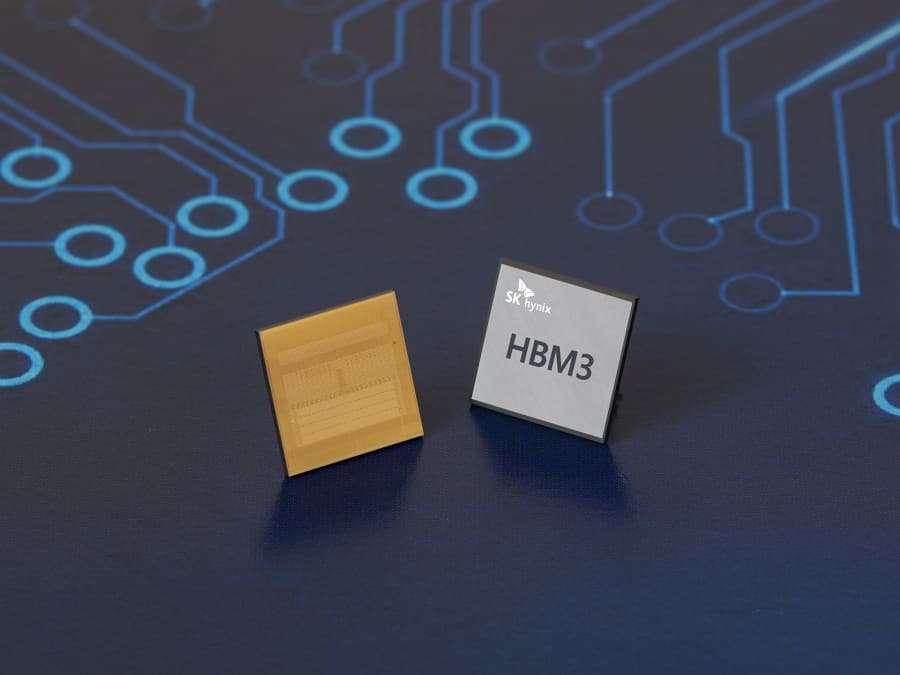

The race in Artificial Intelligence is no longer just measured in GPUs. Increasingly, it’s measured in memory—and specifically in HBM (High Bandwidth Memory), the component that has become a critical piece for powering accelerators that train and run large-scale models. During its Q&A session following the Q4 2025 results, SK hynix conveyed a core message that summarizes the market’s current moment: demand continues to outpace capacity. This situation is pushing major clients toward longer-term contracts, defensive purchasing, and sustained pressure on prices and supply.

The company just closed a historic year. In its earnings report, SK hynix posted 2025 revenue of 97.1467 trillion won, operating profit of 47.2063 trillion won, and net profit of 42.9479 trillion won. In Q4 alone, revenue reached 32.8267 trillion won, with operating profit of 19.1696 trillion won. Financial media highlighted demand for AI memory and rising DRAM and NAND prices as key drivers of the recent performance.
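To put those figures in proportion, the short sketch below derives the implied margins from the numbers quoted above. Only the won amounts come from the earnings report; the variable names and the `margin` helper are illustrative, and the derived percentages are simple ratios rather than figures stated by the company.

```python
# Reported figures, in trillions of won, as quoted in the article.
FY2025 = {"revenue": 97.1467, "operating_profit": 47.2063, "net_profit": 42.9479}
Q4_2025 = {"revenue": 32.8267, "operating_profit": 19.1696}


def margin(profit: float, revenue: float) -> float:
    """Return profit as a percentage of revenue."""
    return 100.0 * profit / revenue


# Derived ratios (not stated in the report, computed here for illustration).
fy_operating_margin = margin(FY2025["operating_profit"], FY2025["revenue"])
fy_net_margin = margin(FY2025["net_profit"], FY2025["revenue"])
q4_operating_margin = margin(Q4_2025["operating_profit"], Q4_2025["revenue"])
q4_revenue_share = 100.0 * Q4_2025["revenue"] / FY2025["revenue"]

print(f"FY2025 operating margin: {fy_operating_margin:.1f}%")
print(f"FY2025 net margin:       {fy_net_margin:.1f}%")
print(f"Q4 2025 operating margin: {q4_operating_margin:.1f}%")
print(f"Q4 share of 2025 revenue: {q4_revenue_share:.1f}%")
```

On these numbers, the full-year operating margin works out to roughly 48.6% and the Q4 operating margin to roughly 58.4%, with Q4 accounting for about a third of annual revenue. That pattern is consistent with pricing strengthening toward year-end.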

However, the most revealing part wasn’t just in the stock market headlines but in the technical and commercial details: how SK hynix is preparing for HBM4, what kinds of agreements clients are pushing for, and why the industry enters 2026 with a sense of “committed capacity” that’s already affecting PC and mobile sectors.

HBM4: manufacturing underway, performance validated, and a tough promise: “overwhelming share”

The first segment of the Q&A focused on HBM4, the next major generation of high-bandwidth memory. SK hynix emphasized that its goal is to maintain an “overwhelming” market share, following the trajectories of HBM3 and HBM3E. As explained, the mass production that clients have requested is proceeding on already agreed schedules, and the performance levels customers demand are being met even on the 1b node.

A key nuance emerges here: the challenge isn’t just manufacturing, but manufacturing with stable yields at industrial scale. The company said it aims to secure yields comparable to those of HBM3E, supported by its MR-MUF packaging technology. The industry watches this closely because, in HBM, packaging and stacking are just as critical as the silicon itself: moving to taller, more demanding stacks magnifies the cost of any process deviation.

Even with “maximum current production,” SK hynix acknowledged it cannot meet 100% of demand, which opens the door for competitors to gain ground. Nevertheless, the company insists it will remain competitive, a stance that fits the market context: dominance in HBM has become a strategic advantage that affects the entire AI computing value chain.

The return of LTAs: from “flexible” contracts to strong, multi-year commitments

The second insight into the current market state is the tightening of Long-Term Agreements (LTAs). SK hynix explained that LTAs existed in the past, but with relatively “lax” volume clauses that could be adapted to cycle conditions. The LTAs now under negotiation, by contrast, reflect strong commitments, because suppliers also need visibility. If the industry is to invest more CAPEX and expand capacity in advanced nodes and complex packaging, it needs to know how much product is sold and for how long.

Customers, for their part, prefer multi-year periods as a standard. But SK hynix pointed out a clear limit: capacity (CAPA). It’s impossible to promise everything to everyone when the bottleneck is physical, not commercial. This is crucial to understanding why the memory market is experiencing a phase of scarcity, rising prices, and front-loaded purchases: the industry is trying to turn AI demand into stable contracts to justify investments that take years to materialize.

Inventory levels dropping: servers are “consuming” memory as it arrives

Breaking demand down by application, SK hynix was straightforward: many clients are struggling to secure volumes and are requesting increased supply. In servers especially, the picture is stark: secured volume is immediately converted into built systems. Inventories continue to decline, and there is not enough stock to “accumulate,” because memory is consumed in system builds almost as fast as it arrives.

This trend has implications beyond any single manufacturer. If memory is perceived as the bottleneck in data center expansion, it’s logical that procurement teams keep securing volume, even if that tightens the market and affects other segments. SK hynix added that the PC and mobile sectors are also impacted, directly or indirectly, with inventories going down.

The company also acknowledged that its own inventory decreased in Q4 compared to the previous quarter and anticipates that, heading into the second half of the year, levels could fall further. For NAND, a similar pattern was observed: rapid reductions in server inventories, especially for eSSD, with inventory measured in weeks of supply comparable to DRAM by year-end.

Capacity optimization: 1b in M15X and accelerated transition to 1c and 321-layer tech

Faced with market pressure, SK hynix outlined a strategy that’s not just short-term. The message was that, beyond maximizing volume, it’s critical to build customer trust by responding to demand. Operationally, that involves expanding 1b capacity at the M15X plant and improving yields. For general DRAM, the focus is on accelerating the shift toward 1c, while in NAND, the company emphasized progress toward 321 layers, aligned with the push for higher density and lower cost per bit.

Rising prices and their effects on product tiers: adjustments in low-end, AI-driven demand boosting high-end

SK hynix admitted that rising prices have led PC and mobile customers to adjust purchasing plans and revise shipment specifications, with the adjustments concentrated in lower-tier products, which are more sensitive to price. Nevertheless, the company emphasized that this does not necessarily mean a broader market contraction, as AI demand in devices is driving growth at the high end.

This change exemplifies a structural shift: while entry-level segments may see some cutbacks, the industry is moving toward more demanding memory and storage configurations to support new functions and local AI workloads.

Increased CAPEX in 2026 and cautious outlook on U.S. factories

Regarding investment, SK hynix anticipates that CAPEX for 2026 will increase “substantially,” though it aims to keep spending in the mid-30% range of sales, given expected revenue growth. On building fabs abroad, especially in the U.S., the company remains cautious: many variables are at play, and for now it is only monitoring ongoing discussions with governments.
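The relationship between a fixed CAPEX-to-sales ratio and “substantially” higher absolute spending can be illustrated with a rough sketch. Only the 2025 revenue figure comes from the earnings report. The 33% to 37% band is one possible reading of “mid-30%,” and the growth rates are purely hypothetical scenarios, not company guidance.

```python
REVENUE_2025 = 97.1467  # trillion won, from the 2025 earnings report

# Assumption: "mid-30% of sales" interpreted as a 33%-37% band.
CAPEX_RATIO_BAND = (0.33, 0.37)

# Hypothetical 2026 revenue growth scenarios (not company guidance).
GROWTH_SCENARIOS = (0.0, 0.25, 0.50)


def capex_range(revenue: float, band: tuple = CAPEX_RATIO_BAND) -> tuple:
    """Return the (low, high) CAPEX implied by a ratio band on revenue."""
    low_ratio, high_ratio = band
    return revenue * low_ratio, revenue * high_ratio


for growth in GROWTH_SCENARIOS:
    revenue_2026 = REVENUE_2025 * (1.0 + growth)
    low, high = capex_range(revenue_2026)
    print(
        f"growth {growth:>4.0%}: revenue {revenue_2026:6.1f} tn won, "
        f"CAPEX {low:5.1f} to {high:5.1f} tn won"
    )
```

The point of the sketch is only the mechanism: with the ratio held roughly constant, absolute CAPEX scales with revenue, so strong sales growth allows a large increase in spending without raising investment intensity.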

Simultaneously, the company discussed creating an “AI Corporation” to go beyond component supply and act as an ecosystem partner, investing in companies with key capabilities. However, it was noted that its investment commitment would be modest relative to its financial scale, and deployment would occur gradually.

FAQs

Why is HBM4 so important for AI data centers?

Because HBM supplies the bandwidth necessary for AI accelerators to handle training and inference. In modern workloads, memory can become the limiting factor before the GPU.

What does it mean that memory LTAs are now “harder” and multi-year?

Customers want to secure volumes over several years, and suppliers require visibility to justify CAPEX and capacity expansion. It’s a sign of a tight market in which capacity is constrained and largely committed in advance.

How does memory scarcity affect servers, PCs, and mobile devices?

In servers, volumes are consumed as they arrive, leaving little room for inventory buildup. In PCs/mobile, customers adjust purchasing and specifications—especially in lower segments—while AI demand pushes higher-end segments upwards.

What does SK hynix’s increased CAPEX in 2026 imply?

The company plans to invest more to expand capacity and improve yields. But the industry needs time for this investment to translate into actual supply, which explains persistent supply tensions.

via: Jukan on X