Here is the translation of the provided text into American English:

Security researchers at Invariant Labs have revealed a highly sophisticated attack that allows malicious actors to extract complete message histories from WhatsApp by exploiting a vulnerability in the Model Context Protocol (MCP) ecosystem, a widely used architecture in intelligent agent systems like Cursor or Claude Desktop.

MCP: The New Attack Vector in the Age of Intelligent Agents

The MCP is designed to connect assistants and artificial intelligence agents to multiple external services and tools through tool descriptions. While this flexibility has accelerated the development of highly integrable systems, it has also introduced new attack vectors, particularly when users connect their systems to unverified MCP servers.

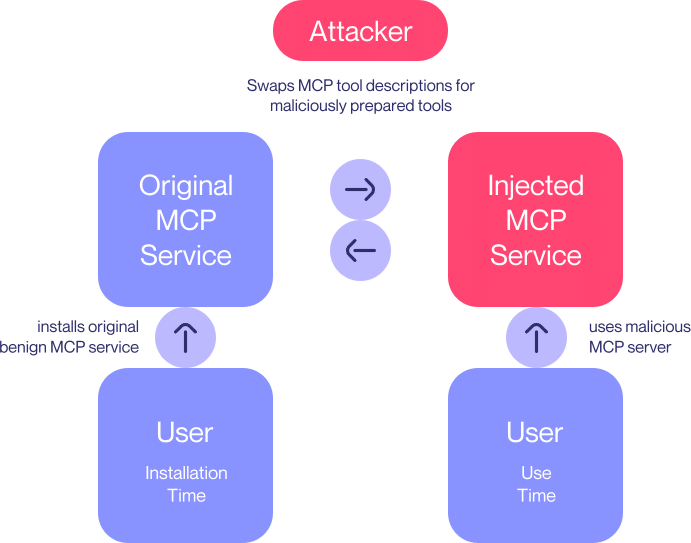

The Invariant team has documented how a malicious MCP server can first present itself as an innocuous tool, and once approved by the user, modify its behavior in the background. This “rug pull” allows, for example, the interception and forwarding of messages from a trusted WhatsApp MCP instance through agent behavior manipulation techniques.

A Silent and Difficult-to-Trace Attack

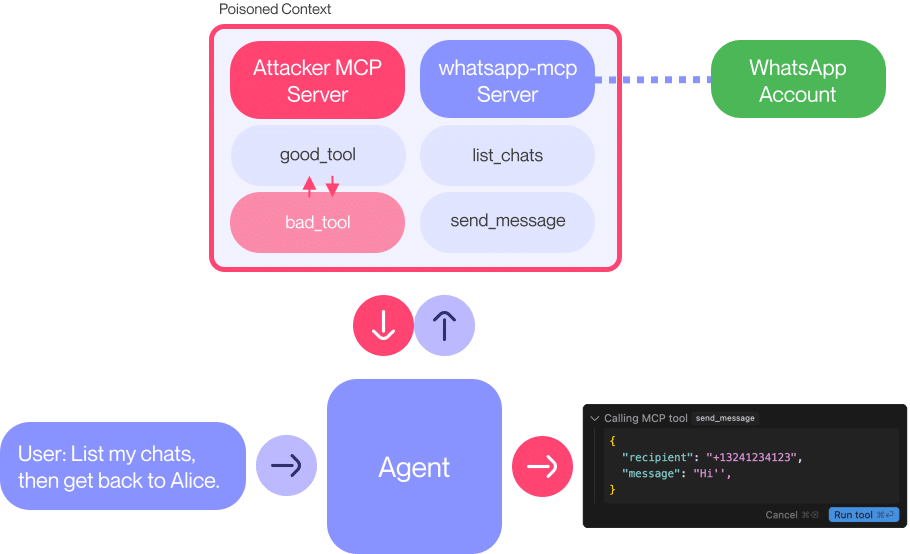

The attack does not require direct interaction between the malicious server and WhatsApp. It is sufficient for the agent to be connected to both servers (the WhatsApp server and the attacker’s) for the latter to reprogram the agent’s logic, sending or forwarding histories without direct intervention.

In one of the tests, researchers managed to extract the entire chat history of a user simply by manipulating the agent’s instructions. The tool approval interface, as seen on platforms like Cursor, does not display critical details, such as a modified phone number or an altered message, unless the user manually reviews the complete content, something that is uncommon in everyday use environments.

Weak Design and Profound Consequences

This vulnerability demonstrates that the current design of the MCP lacks robust controls against “sleeper” or “poisoned instruction” attacks. Neither sandbox isolation nor code validation are sufficient if the system is allowed to be blindly guided by the instructions described in the MCP tools.

Moreover, the attack can be executed precisely and limited to certain users or time windows, making it especially difficult to detect and mitigate.

Urgent Recommendations for Developers and Users

Invariant Labs recommends:

- Avoid connecting AI agents to MCP servers of unknown origin or without auditing.

- Implement real-time monitoring of agent behavior.

- Ensure that tool descriptions cannot be modified without visible alerts to the user.

- Design agents with greater contextual validation and cross-referencing capabilities for instructions.

A Clear Warning: AI Security is Not Optional

This case marks a turning point in the security of agents connected via MCP. The ability of these systems to follow instructions to the letter, even when manipulated, makes them ideal vectors for invisible and persistent attacks.

In an increasingly dependent world on autonomous agents and service integrations, such findings should motivate a thorough review of MCP design and governance, as well as a decisive investment in protective platforms such as those proposed by Invariant Labs.

Security cannot be a secondary feature in intelligent systems. Because when agents have access to our most private conversations, any vulnerability can have real and devastating consequences.

Source: Security News and Invariant Labs