High Bandwidth Flash (HBF) memory aims to redefine the future of AI-accelerated computing in data centers and at the edge.

The AI industry is on the brink of a disruptive leap in memory technology. SanDisk has announced that Raja Koduri, a veteran graphics architect who has worked at Apple, AMD, and Intel, is joining its Technical Advisory Board to help guide the development of High Bandwidth Flash (HBF™). HBF is a novel flash memory architecture that could enable graphics cards with up to 4 TB of VRAM, potentially transforming AI processing both in data centers and on edge devices.

Both the company and Koduri confirmed the announcement on social media, alongside the appointment of Professor David Patterson, a co-developer of the RISC architecture and recipient of the 2017 Turing Award, as chair of the advisory board.

What is HBF and why does it matter?

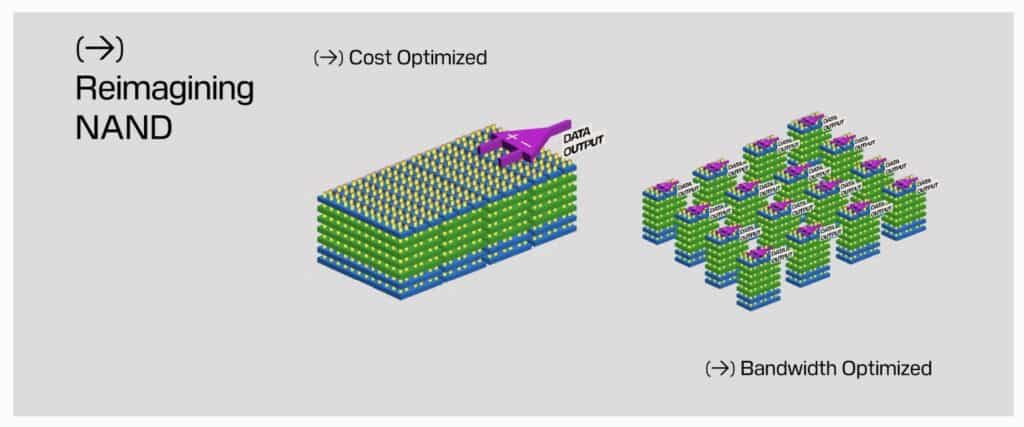

HBF is not a direct replacement for current High Bandwidth Memory (HBM), but a complement designed to scale capacity at low cost while maintaining competitive bandwidth. According to SanDisk’s official statement, HBF can offer 8 to 16 times the capacity of HBM without significantly increasing power consumption or cost.

To achieve this, HBF uses:

– BiCS 3D NAND flash memory built with CMOS directly Bonded to Array (CBA) technology.

– 16 memory dies stacked and connected with through-silicon vias (TSVs).

– A logic die capable of accessing flash subarrays in parallel.

– Stack configurations designed to prevent warping in tall die stacks.

What impact will this have on AI?

Koduri summarizes it as follows:

“AI model performance depends on three factors: compute capacity (FLOPs), memory capacity (bytes), and bandwidth (bytes/second). HBF improves memory capacity across all fronts: per dollar, per watt, and per millimeter squared.”
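Koduri's three factors can be turned into a rough back-of-the-envelope bound. The sketch below is a simplified roofline-style estimate, not anything published by SanDisk: it assumes a dense model whose weights are all read once per generated token and roughly 2 FLOPs per parameter per token, and every number in the usage lines is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    """Hypothetical accelerator described by the three factors in the quote."""
    flops: float         # peak compute, FLOP/s
    memory_bytes: float  # memory capacity, bytes
    bandwidth: float     # memory bandwidth, bytes/s


def decode_tokens_per_second(acc: Accelerator, params: float,
                             bytes_per_param: float) -> float:
    """Upper bound on single-stream decode speed for a dense model.

    Simplifying assumptions (not vendor data):
      - all weights are read from memory once per generated token
      - a forward pass costs about 2 FLOPs per parameter per token
      - KV cache, activations, and batching are ignored
    Returns 0.0 when the weights do not fit in memory at all.
    """
    weight_bytes = params * bytes_per_param
    if weight_bytes > acc.memory_bytes:
        return 0.0  # capacity is the binding constraint
    compute_bound = acc.flops / (2.0 * params)
    bandwidth_bound = acc.bandwidth / weight_bytes
    return min(compute_bound, bandwidth_bound)


# Illustrative numbers only: 1 PFLOP/s, 128 GB, 1.2 TB/s
small_memory = Accelerator(flops=1e15, memory_bytes=128e9, bandwidth=1.2e12)
# Same hypothetical device with 4 TB of capacity at the same assumed bandwidth
large_memory = Accelerator(flops=1e15, memory_bytes=4e12, bandwidth=1.2e12)

params = 400e9  # hypothetical 400B-parameter model stored at 2 bytes per parameter
print(decode_tokens_per_second(small_memory, params, 2))  # 0.0: weights do not fit
print(decode_tokens_per_second(large_memory, params, 2))  # limited by bandwidth
```

In this toy setup, extra capacity is what makes the model runnable at all, while bandwidth then sets the ceiling on speed. That is why capacity and bandwidth are listed as separate factors.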

This architecture is specifically designed for inference workloads, where GPUs run pre-trained models in real time, for example in chatbots, computer vision, or edge assistants. Patterson states:

“HBF could play a pivotal role in data centers for AI, enabling large-scale inference workloads previously thought impossible, and could also lower the cost of new AI applications that are currently unfeasible.”

Comparative table of advanced memory technologies for AI:

| Technology | Estimated Max Capacity | Bandwidth | Power Consumption | Use Case | Key Manufacturers |
|---|---|---|---|---|---|
| HBM3e | Up to 128 GB per GPU | ~1.2 TB/s | High | Large-scale model training | SK Hynix, Micron, Samsung |
| GDDR6X | Up to 24–48 GB | ~1 TB/s | Medium | Gaming, lightweight AI | Micron, NVIDIA |
| HBF | Up to 4 TB and growing | High (not yet revealed but competitive) | Low to medium | Inference, edge, large-scale analysis | SanDisk |
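As a rough illustration of what the capacity column means in practice, the sketch below checks whether a model's weights alone would fit in each capacity listed in the table. The capacities come from the table (taking the upper GDDR6X figure and treating 1 TB as 1000 GB). The model sizes and precisions are hypothetical, and the estimate ignores KV cache and activations.

```python
# Capacities taken from the table above, in GB (1 TB treated as 1000 GB).
CAPACITY_GB = {
    "HBM3e": 128,
    "GDDR6X": 48,
    "HBF": 4000,
}


def weights_gb(params_billion: float, bytes_per_param: float) -> float:
    """Approximate weight footprint in GB (billions of params * bytes each)."""
    return params_billion * bytes_per_param


def technologies_that_fit(params_billion: float, bytes_per_param: float) -> list[str]:
    """Names of the table entries whose capacity can hold the weights."""
    need = weights_gb(params_billion, bytes_per_param)
    return [name for name, cap in CAPACITY_GB.items() if need <= cap]


# Hypothetical models: 70B and 400B parameters
print(technologies_that_fit(70, 1))   # 8-bit weights, about 70 GB
print(technologies_that_fit(70, 2))   # 16-bit weights, about 140 GB
print(technologies_that_fit(400, 2))  # 16-bit weights, about 800 GB
```

Under these assumptions, only the 4 TB entry holds the larger models on a single device. This is the capacity argument the article makes for inference.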

And what about software?

Koduri emphasized that the architecture will be developed with open standards, easing adoption by hardware manufacturers, custom accelerator developers, and AI startups. HBF targets an era in which AI models are deployed not only in the cloud but also at the edge: in vehicles, smartphones, smart factories, and even XR headsets.

Next steps

SanDisk has not yet announced a launch date for the first HBF modules. Development draws on the company’s own technical infrastructure and strategic AI partners, and the first rollout is likely to target the professional segment.

For Koduri, HBF marks a return to a vision focused on how memory architecture can enable new paradigms of computing, much like Polaris, Vega, and Arc did during his time at AMD and Intel.

“HBF is ready to revolutionize AI at the edge by equipping devices with memory capabilities that were previously only available in data centers. This will radically change where and how AI inference occurs,” Koduri affirms.

Conclusion

The advent of High Bandwidth Flash marks a new frontier in AI architecture design. If it delivers on its promises, it could shift the balance among cost, capacity, and performance in inference tasks, enabling even the most advanced models to run closer to users, whether in a car or on a smartphone. Raja Koduri and David Patterson are key figures shaping its direction.