GPU virtualization is now a reality in production environments, and Proxmox VE, one of the most popular virtualization platforms, has taken a significant step forward: it officially supports NVIDIA vGPU starting with release 18 of NVIDIA's vGPU software. This integration lets multiple virtual machines share a single physical GPU, making more efficient use of expensive hardware in enterprise environments.

A Commitment to Flexibility and Performance

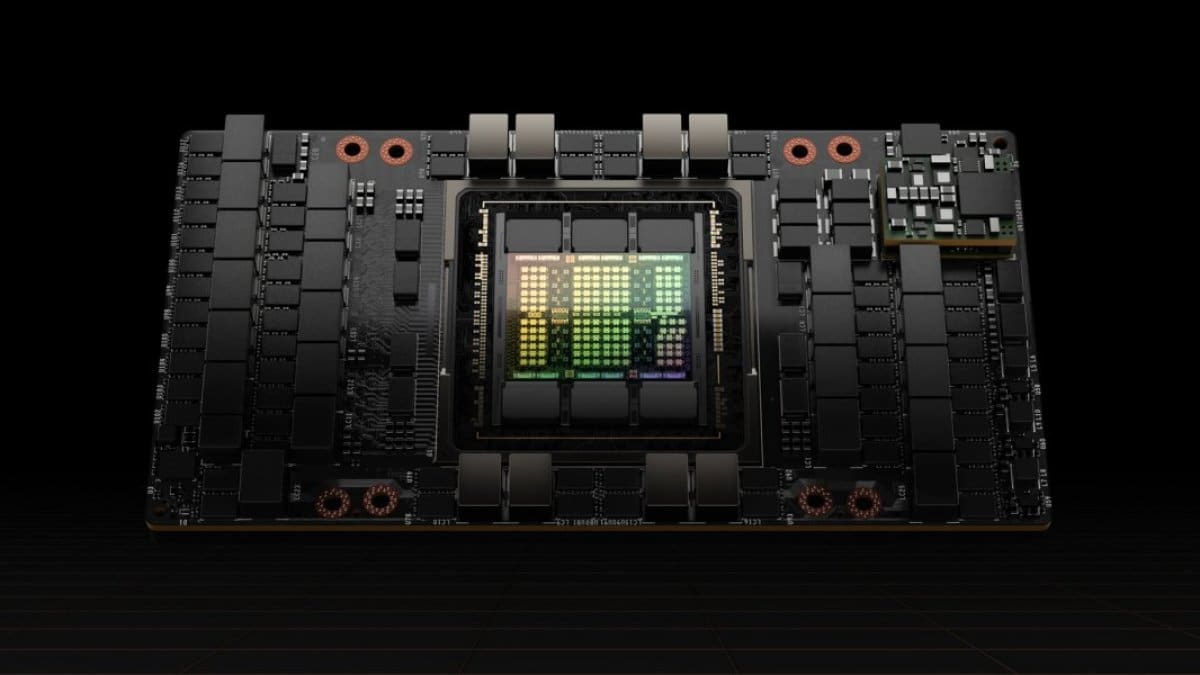

NVIDIA vGPU on Proxmox VE has been tested in professional environments with GPUs such as the RTX A5000, and according to NVIDIA's official list of supported hardware it works with a wide range of other cards. Companies that want to use this technology need an active Proxmox VE subscription (Basic, Standard, or Premium) and a valid NVIDIA vGPU license.

For production environments, Proxmox recommends enterprise-grade hardware. Before starting, check that the server supports PCIe passthrough, IOMMU (Intel VT-d or AMD-Vi), SR-IOV, and Above 4G Decoding, and that these features are enabled in the server's BIOS or UEFI.
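
Part of this can be confirmed from what the running Linux kernel reports. The following is a minimal Python sketch, not a Proxmox tool: it assumes an x86 host, treats the `vmx`/`svm` CPU flags in /proc/cpuinfo as evidence of hardware virtualization (VT-x/AMD-V), and treats a non-empty /sys/kernel/iommu_groups directory as evidence that the IOMMU (VT-d/AMD-Vi) is active. It cannot see firmware-only settings such as Above 4G Decoding.

```python
from pathlib import Path
from typing import Optional


def cpu_virt_flag(cpuinfo_text: str) -> Optional[str]:
    """Return 'vmx' (Intel VT-x) or 'svm' (AMD-V) if /proc/cpuinfo lists it, else None."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            _, _, value = line.partition(":")
            flags = set(value.split())
            if "vmx" in flags:
                return "vmx"
            if "svm" in flags:
                return "svm"
    return None


def iommu_active(sysfs_root: Path = Path("/sys")) -> bool:
    """Treat a populated <sysfs_root>/kernel/iommu_groups as a sign the IOMMU is enabled."""
    groups = sysfs_root / "kernel" / "iommu_groups"
    return groups.is_dir() and any(groups.iterdir())
```

On the node itself, `cpu_virt_flag(Path("/proc/cpuinfo").read_text())` and `iommu_active()` give a quick first answer; the `sysfs_root` parameter exists only so the check can be tried against a copy of the directory tree.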

Preparation and Technical Requirements

vGPU support requires at least Proxmox VE 8.3.4, a recent kernel (for example 6.8.12-8-pve), and NVIDIA host drivers version 570.124.03 or later. The pve-nvidia-vgpu-helper tool, included from this Proxmox VE version onward, automates key preparation tasks: setting up the environment, installing the DKMS packages, and blacklisting incompatible drivers such as the open-source nouveau driver.
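
The version requirements can be expressed as a simple numeric comparison. This Python sketch is illustrative only: the dictionary keys are names chosen here, the kernel entry is the example version quoted above rather than an official minimum, and collecting the installed values (for instance from `pveversion`, `uname -r`, and `nvidia-smi`) is left out.

```python
import re
from typing import Dict, List, Tuple

# Versions quoted in the article; the kernel entry is the example it gives.
MINIMUMS: Dict[str, str] = {
    "pve-manager": "8.3.4",
    "kernel": "6.8.12-8-pve",
    "nvidia-host-driver": "570.124.03",
}


def version_key(version: str) -> Tuple[int, ...]:
    """Extract the numeric parts, e.g. '6.8.12-8-pve' -> (6, 8, 12, 8)."""
    return tuple(int(part) for part in re.findall(r"\d+", version))


def unmet_requirements(installed: Dict[str, str]) -> List[str]:
    """Return the components whose installed version is older than the minimum."""
    return [
        name
        for name, minimum in MINIMUMS.items()
        if version_key(installed[name]) < version_key(minimum)
    ]
```

Comparing integer tuples avoids the classic string-comparison trap where "6.11" would sort before "6.8".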

The process consists of downloading the host drivers from NVIDIA's website (selecting Linux KVM as the hypervisor), copying them to the Proxmox node, and installing them with DKMS support so that the kernel module is rebuilt automatically after kernel updates.
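
To make the DKMS step concrete, the sketch below only builds the installer command line; it runs nothing. It assumes the host package keeps NVIDIA's usual `NVIDIA-Linux-x86_64-<version>-vgpu-kvm.run` naming and relies on the installer's `--dkms` option. The file path in the test values is a placeholder.

```python
import re
from pathlib import Path
from typing import List

# Assumed naming of NVIDIA's host package for the Linux KVM hypervisor.
HOST_DRIVER_NAME = re.compile(r"NVIDIA-Linux-x86_64-\d+(?:\.\d+)+-vgpu-kvm\.run")


def install_command(driver_file: str) -> List[str]:
    """Build (but do not run) the installer call with DKMS enabled."""
    name = Path(driver_file).name
    if not HOST_DRIVER_NAME.fullmatch(name):
        raise ValueError(f"{name!r} does not look like a Linux KVM host driver package")
    # --dkms registers the kernel module with DKMS so it is rebuilt after kernel updates.
    return ["sh", driver_file, "--dkms"]
```

Rejecting file names without the `vgpu-kvm` suffix is a cheap guard against installing a guest or desktop driver on the host by mistake. Passing the returned list to `subprocess.run(cmd, check=True)` as root on the Proxmox node would start the actual installation.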

Advanced Configuration: SR-IOV and Resource Mapping

GPUs based on the Ampere architecture or newer require SR-IOV, which a systemd service included in Proxmox VE can enable automatically. SR-IOV exposes the physical GPU as multiple virtual functions (VFs), each of which can then be assigned to a different virtual machine.
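
Whether SR-IOV is active can be read back from standard Linux sysfs attributes: `sriov_totalvfs` (how many VFs the device supports), `sriov_numvfs` (how many are currently enabled), and the `virtfnN` symlinks that point at each VF. The Python sketch below only reads that state and does not replace the Proxmox service that enables it; the PCI address `0000:81:00.0` is a placeholder for your GPU's address.

```python
from pathlib import Path
from typing import List, Tuple


def _device_dir(pci_addr: str, sysfs_root: Path) -> Path:
    return sysfs_root / "bus" / "pci" / "devices" / pci_addr


def sriov_status(pci_addr: str, sysfs_root: Path = Path("/sys")) -> Tuple[int, int]:
    """Return (enabled VFs, supported VFs) for a physical function such as '0000:81:00.0'."""
    dev = _device_dir(pci_addr, sysfs_root)
    enabled = int((dev / "sriov_numvfs").read_text())
    supported = int((dev / "sriov_totalvfs").read_text())
    return enabled, supported


def virtual_functions(pci_addr: str, sysfs_root: Path = Path("/sys")) -> List[str]:
    """Return the PCI addresses of the enabled VFs, ordered by VF index."""
    dev = _device_dir(pci_addr, sysfs_root)
    # Each virtfnN entry is a symlink to the VF's own device directory.
    links = sorted(dev.glob("virtfn*"), key=lambda p: int(p.name[len("virtfn"):]))
    return [link.resolve().name for link in links]
```

Sorting by the numeric suffix keeps `virtfn10` after `virtfn2`, which a plain string sort would not.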

Once SR-IOV is enabled, the GPU and its virtual functions can be registered as a PCI resource mapping at the Datacenter level in Proxmox VE, which simplifies managing the cards and assigning their virtual functions to virtual machines.
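
Once a mapping exists, a VM refers to it by name in its `hostpciN` configuration entry, with the vGPU profile passed as the `mdev` type. The helper below just formats that entry. The mapping name `a5000-vgpu` and profile `nvidia-660` used in the test values are hypothetical; substitute the names Proxmox shows for your own card.

```python
from typing import Optional


def hostpci_entry(index: int, mapping: str, mdev_type: Optional[str] = None,
                  pcie: bool = True) -> str:
    """Format a 'hostpciN:' line that references a PCI resource mapping by name."""
    options = [f"mapping={mapping}"]
    if mdev_type:
        options.append(f"mdev={mdev_type}")  # vGPU profile (mediated device type)
    if pcie:
        options.append("pcie=1")  # only valid with the q35 machine type
    return f"hostpci{index}: " + ",".join(options)
```

The part after `hostpci0: ` is the same value that could be passed to `qm set <vmid> --hostpci0`; referencing the mapping instead of a raw PCI address keeps the VM configuration valid if the device is found under a different address.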

Configuring Virtual Machines

The next step is to configure the virtual machines that will use the vGPU. Remote desktop software must be installed in the guest, because Proxmox's integrated console (noVNC) cannot display output rendered by the virtualized GPU.

For Windows 10/11, the most common solution is to enable Remote Desktop. On Linux systems (like Ubuntu or Rocky Linux), it is recommended to install VNC servers such as x11vnc and configure a compatible display manager like LightDM.
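
Before installing drivers in the guest, it is worth confirming that the remote desktop service is reachable from the administrator's workstation. This Python sketch assumes the default ports (3389 for Windows Remote Desktop, 5900 for a VNC server such as x11vnc serving display :0); a guest address such as `192.0.2.10` would be a placeholder.

```python
import socket

# Default listening ports; adjust if the guest is configured differently.
DEFAULT_PORTS = {"rdp": 3389, "vnc": 5900}


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts.
        return False
```

For example, `port_open("192.0.2.10", DEFAULT_PORTS["rdp"])` returning False points to a firewall or service problem to fix before moving on to the driver installation.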

With remote access in place, the NVIDIA guest driver is installed inside the virtual machine. It is downloaded from NVIDIA's official website and must match both the guest operating system and the vGPU software release of the host driver.

Licensing and Professional Use

Using NVIDIA vGPU in production requires compliance with NVIDIA’s licensing policies. License management is done through the Delegated License Service (DLS), and it is essential to keep the time synchronized on the guest machines using NTP to avoid license validation errors.
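
To see how clock drift is measured, recall the NTP calculation. From four timestamps (client send t1, server receive t2, server send t3, client receive t4), RFC 5905 defines the clock offset as ((t2 - t1) + (t3 - t4)) / 2 and the round-trip delay as (t4 - t1) - (t3 - t2). The Python sketch below implements those two formulas; the tolerance passed to `clock_ok` is an arbitrary, hypothetical value, not a DLS requirement.

```python
from dataclasses import dataclass


@dataclass
class NtpSample:
    t1: float  # client transmit time (client clock), seconds
    t2: float  # server receive time (server clock), seconds
    t3: float  # server transmit time (server clock), seconds
    t4: float  # client receive time (client clock), seconds

    def offset(self) -> float:
        """Correction to add to the client clock; positive means the client is behind."""
        return ((self.t2 - self.t1) + (self.t3 - self.t4)) / 2

    def delay(self) -> float:
        """Round-trip network delay, excluding the server's processing time."""
        return (self.t4 - self.t1) - (self.t3 - self.t2)


def clock_ok(sample: NtpSample, max_offset_s: float) -> bool:
    """True if the guest clock is within the chosen tolerance of the NTP server."""
    return abs(sample.offset()) <= max_offset_s
```

For instance, a guest running 5 s slow that sends at 100.0 and receives at 100.11 (its own clock), while the server stamps 105.05 and 105.06, yields an offset of about 5.0 s and a delay of about 0.10 s. In practice the guest's NTP client (such as chrony or systemd-timesyncd) performs this calculation continuously; the sketch only makes the arithmetic visible.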

A Step Forward for Virtualized Graphics Environments

The combination of Proxmox VE and NVIDIA vGPU is a robust solution for workloads that need virtualized graphics power, from 3D simulation to engineering, design, and AI. Although the technical setup is demanding, the result is a powerful, scalable platform that gives companies capabilities previously reserved for far more complex and costly infrastructure.

source: Proxmox GPUs