Proxmox VE 9 has launched, bringing a series of updates aimed at further professionalizing the platform. This is not a mere “facelift”: the changes affect lifecycle management, software-defined networking, storage, and especially the way workloads are managed with affinity and anti-affinity rules. At the same time, the move to a Debian 13 “Trixie” base reorganizes the repositories and brings a more modern kernel. In short: fewer promises and more practical building blocks for service delivery, both in test environments and, where many readers’ interest lies, in production.

What follows is a structured, critical walkthrough of what Proxmox 9 actually offers, based on a hands-on session and field comments. No hype: concrete features, their implications, and some advice for avoiding pitfalls during adoption.

System Base: Debian 13 “Trixie” and Repository Restructuring

The first noticeable change is in the base system. Proxmox 9 aligns with Debian 13 and reorganizes its repositories for the new distribution. The interesting part isn’t the codename but what it means in practice: fresh packages, an updated kernel, and a patch cycle aligned with a branch expected to see significant activity in the coming months.

- What it adds: support for recent hardware and more agile security updates.

- What to watch: initially, the “new” repos may change the origin of some packages and cause minor friction with drivers or out-of-tree modules. It’s best to plan staging environments and validate automation (Ansible playbooks, Terraform plans) before moving to production.

Summary: it’s a worthwhile leap for the underlying technology, but it requires rigorous testing discipline.
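One way to make that testing discipline concrete is a pre-upgrade check that flags APT source entries still pointing at the previous Debian release. The sketch below is an illustration, not an official Proxmox tool. It assumes the old suite name is `bookworm` (the Debian 12 base of Proxmox VE 8), and the helper `find_stale_suites` is a hypothetical name. It works on file contents passed in as strings, so it can be tried without touching a real host.

```python
import re

OLD_SUITE = "bookworm"  # assumption: codename of the release being upgraded from


def find_stale_suites(
    sources: dict[str, str], old_suite: str = OLD_SUITE
) -> list[tuple[str, int, str]]:
    """Return (filename, line_number, line) for each active line naming old_suite."""
    # Word boundaries also catch variants such as "bookworm-updates".
    pattern = re.compile(rf"\b{re.escape(old_suite)}\b")
    hits = []
    for filename, text in sources.items():
        for number, line in enumerate(text.splitlines(), start=1):
            stripped = line.strip()
            if not stripped or stripped.startswith("#"):
                continue  # ignore blank lines and comments
            if pattern.search(stripped):
                hits.append((filename, number, stripped))
    return hits


# Abbreviated sample contents, for illustration only.
example = {
    "debian.list": (
        "deb http://deb.debian.org/debian bookworm main\n"
        "# deb http://deb.debian.org/debian bookworm contrib\n"
    ),
    "pve.sources": "Types: deb\nSuites: trixie\nComponents: pve-no-subscription\n",
}

for filename, number, line in find_stale_suites(example):
    print(f"{filename}:{number}: {line}")
```

On a real host the dictionary would be filled from the files under `/etc/apt/`. Keeping the file reading separate from the matching makes the check easy to run inside a staging pipeline.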

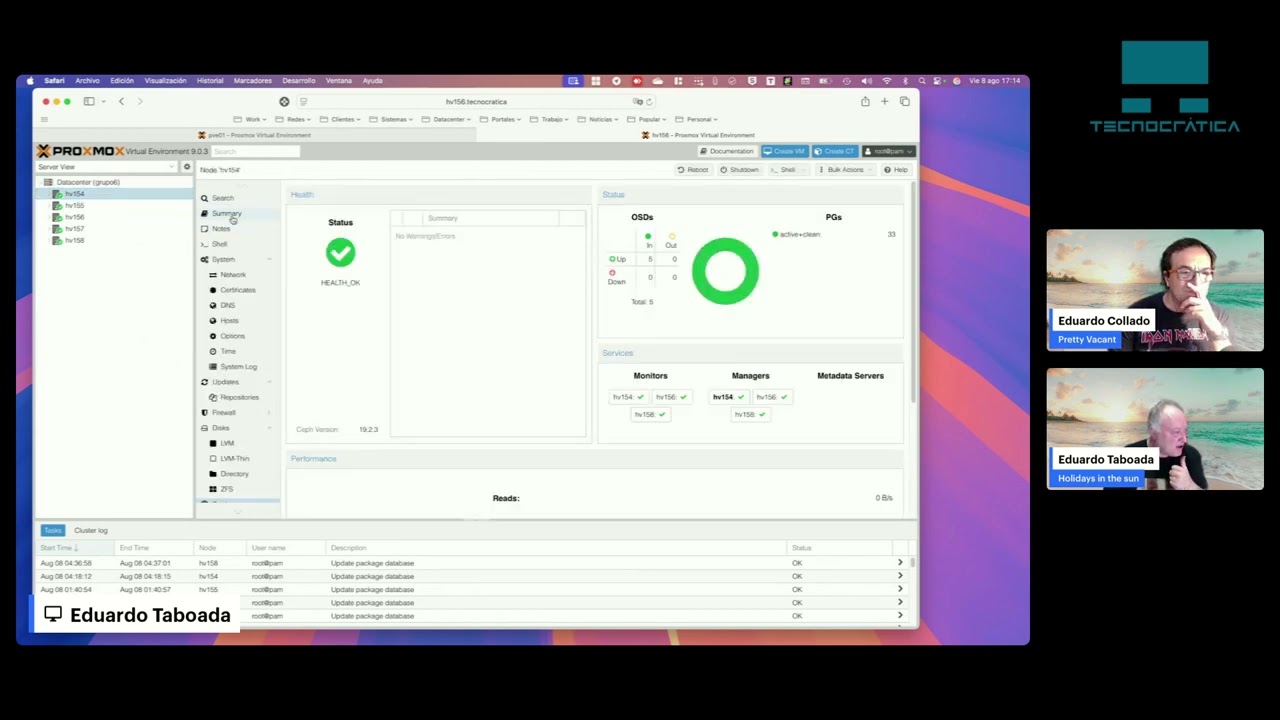

Interface and Management: It’s Not About Button Colors

Elements of the GUI environment have been touched up. No one in the community claims that Proxmox looks like a SaaS giant’s dashboard: it remains utilitarian. But the value lies in small, operator-friendly details:

- Alternative network interface names: clear aliases for NICs prevent mistakes with links, bonds, and VLANs when hosts are managed remotely or across shifts. In production, this reduces surprises during changes and deployments.

- Navigation and grouping: the datacenter tree is more coherent, which is appreciated as clusters and SDN zones grow.

Summary: the UI isn’t winning design awards, but it improves operational ergonomics, which is what ultimately matters.

SDN with Spine–Leaf Fabric: From Lab to Serious Topologies

One of the most striking updates appears in Datacenter → SDN: a spine–leaf style fabric can be defined directly from the Proxmox console. It does not replace the switches (that remains the responsibility of the physical network), but it lets operators model connectivity declaratively and keep configuration consistent from node to node.

- What it solves: coherence of segmentation, routing, and overlay domains in setups where each host used to be configured individually.

- Who it’s for: on-premises environments that need to standardize logical networks and grow hassle-free.

- What it isn’t: a substitute for physical fabric capabilities. Think of it as a control extension that prevents divergence.

Summary: a step that professionalizes software-defined networking in Proxmox and reduces tech debt.
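The overlay domains mentioned above have a practical consequence for MTU planning, because encapsulation consumes part of every frame. The following sketch assumes the overlay is VXLAN, which the article does not state, and uses the standard header sizes for that encapsulation: outer IP, UDP, VXLAN, and the inner Ethernet header. The function names are hypothetical and nothing here calls a Proxmox API.

```python
# Header sizes in bytes for VXLAN encapsulation (assumed overlay type).
VXLAN_HEADER = 8
UDP_HEADER = 8
INNER_ETHERNET = 14
OUTER_IP = {"ipv4": 20, "ipv6": 40}


def vxlan_overhead(outer_ip: str = "ipv4") -> int:
    """Bytes added to each guest packet by the encapsulation."""
    return OUTER_IP[outer_ip] + UDP_HEADER + VXLAN_HEADER + INNER_ETHERNET


def overlay_mtu(underlay_mtu: int, outer_ip: str = "ipv4") -> int:
    """Largest guest MTU that fits in the underlay without fragmentation."""
    return underlay_mtu - vxlan_overhead(outer_ip)


def required_underlay_mtu(guest_mtu: int, outer_ip: str = "ipv4") -> int:
    """Underlay MTU needed so guests can keep the given MTU."""
    return guest_mtu + vxlan_overhead(outer_ip)


for underlay in (1500, 9000):
    print(underlay, "->", overlay_mtu(underlay))
print("underlay needed for 1500-byte guests:", required_underlay_mtu(1500))
```

Under these assumptions, a standard 1500-byte underlay leaves 1450 bytes for guests over IPv4. Either the guest MTU is lowered or the fabric links are raised to at least 1550 bytes.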

ZFS: Scaling “Hot” Storage Without Unmounting the Pool

Proxmox 9 introduces an enhancement that, practically, changes the game for those operating ZFS on hosts. The idea is straightforward: expand capacity live by adding disks to the pool without “dismantling” existing configurations.

- What it means day-to-day: you can start small with a few disks and add more as needs grow (or budget allows). This is invaluable for backups or image repositories.

- Operational impact: fewer maintenance windows, reduced human error risk, and incremental expansion (scaled CapEx).

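- Technical nuance: the exact mechanics depend on the vdev type. Adding a whole new vdev to a pool has long been possible; the newer option, available with the OpenZFS release shipped in Proxmox 9, is widening an existing RAIDZ vdev by attaching a disk to it. The ZFS documentation helps decide whether to add a vdev or expand one. Either way, a working configuration no longer has to be torn down to grow.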

Summary: real elasticity in local storage. When combined with Proxmox Backup Server, it enables simple growth planning without re-engineering.
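To plan that kind of growth, a rough capacity estimate is often enough. The sketch below models a single RAIDZ vdev being widened one disk at a time. It is a back-of-the-envelope calculation under stated assumptions: all disks are the same size, and metadata, padding, and reserved space are ignored. It also ignores the fact that data written before an expansion keeps its original data-to-parity ratio until it is rewritten. The function names are hypothetical.

```python
def raidz_usable_tb(disks: int, disk_tb: float, parity: int) -> float:
    """Approximate usable capacity of one RAIDZ vdev with equal-sized disks."""
    if parity not in (1, 2, 3):
        raise ValueError("RAIDZ parity level must be 1, 2, or 3")
    if disks <= parity:
        raise ValueError("a RAIDZ vdev needs more disks than parity devices")
    return (disks - parity) * disk_tb


def growth_plan(start: int, end: int, disk_tb: float, parity: int) -> list[tuple[int, float]]:
    """Usable capacity at each step when widening the vdev from start to end disks."""
    return [(n, raidz_usable_tb(n, disk_tb, parity)) for n in range(start, end + 1)]


# Example: a RAIDZ2 vdev of 4 TB disks grown from 5 to 8 disks.
for disks, usable in growth_plan(5, 8, disk_tb=4.0, parity=2):
    print(f"{disks} disks -> about {usable:.0f} TB usable")
```

Real pools report somewhat less than these figures, so treat the output as an upper bound when sizing backup or image repositories.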

Affinity and Anti-Affinity Rules: Smart HA

A common criticism of Proxmox used to be that it was “just” a lab hypervisor, because it lacked fine-grained control over which VMs and services should share a host and which should be kept apart. Proxmox 9 introduces affinity and anti-affinity rules:

- Anti-affinity (separation): for critical pairs (e.g., two nodes of a service or two controllers), specify that they never reside on the same host. If a host fails, the HA system will not recover a workload onto the host that already runs its “sister.”

- Affinity (co-location): when latency matters, related applications and databases can be linked so that they stay on the same host whenever feasible.

- Goal: bring cluster behavior closer to enterprise expectations, with rules that are honored during failover and migration.

Summary: this feature gives Proxmox real placement policies. It’s key for serious HA management.
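The semantics of the two rule types can be illustrated with a small, self-contained model. This is not the Proxmox HA rule syntax or API. `Rule` and `violations` are hypothetical names, and the model only checks a given placement against a set of rules, which is useful for reasoning about policies before writing them.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Rule:
    kind: str                # "affinity" or "anti-affinity"
    members: frozenset[str]  # names of the VMs the rule covers


def violations(placement: dict[str, str], rules: list[Rule]) -> list[str]:
    """List every pair of VMs whose current hosts break a rule."""
    problems = []
    for rule in rules:
        if rule.kind not in ("affinity", "anti-affinity"):
            raise ValueError(f"unknown rule kind: {rule.kind}")
        placed = sorted(vm for vm in rule.members if vm in placement)
        for a, b in combinations(placed, 2):
            same_host = placement[a] == placement[b]
            if rule.kind == "anti-affinity" and same_host:
                problems.append(f"{a} and {b} share {placement[a]}")
            elif rule.kind == "affinity" and not same_host:
                problems.append(
                    f"{a} and {b} are split across {placement[a]} and {placement[b]}"
                )
    return problems


placement = {"db-1": "node-a", "db-2": "node-a", "app-1": "node-b", "cache-1": "node-b"}
rules = [
    Rule("anti-affinity", frozenset({"db-1", "db-2"})),
    Rule("affinity", frozenset({"app-1", "cache-1"})),
]
for problem in violations(placement, rules):
    print(problem)
```

In this example the two database VMs break the separation rule, while the application and its cache satisfy the co-location rule.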

Adoption Cycle: Deploying Proxmox 9 Without Fear

Beyond the new features, success depends on how you adopt the platform. A conservative approach can make the difference between a smooth Proxmox 9 experience and a painful one.

- Test in staging with real cases

  - Replicate a representative environment: storage, networks, typical loads and peaks.

  - Validate provisioning playbooks (cloud-init, templates, tags, hooks).

- Network testing

  - If deploying SDN and spine–leaf declaratively, document your current topology and overlay plan.

  - Test failure scenarios (link outages, leaf shutdowns, MTU mismatches).

- ZFS planning

  - Define which vdev layout you’ll need and how you’ll expand it: growth affects not only capacity but also redundancy.

  - Validate resilver times on your disks and their impact on I/O performance.

- HA with affinity rules

  - Label roles: db, app, proxy, pbs, etc.

  - Write affinity and anti-affinity policies and simulate node failures to test them.

- Rollback planning

  - Keep Proxmox 8 installation images and backups until the migration is complete.

  - Avoid applying major changes simultaneously: combine SDN, ZFS, and HA only after each has been validated individually.
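The advice to simulate node failures can be rehearsed on paper before touching a cluster. The sketch below is a simplified model, not the Proxmox HA scheduler: it removes one node, then greedily re-places the displaced VMs on the surviving nodes while respecting memory limits and anti-affinity groups. All names and figures are hypothetical. Because the placement is greedy, it may report a VM as unplaceable in cases where a smarter arrangement exists.

```python
def simulate_node_failure(
    failed: str,
    placement: dict[str, str],
    vm_mem: dict[str, int],
    node_mem: dict[str, int],
    anti_groups: list[set[str]],
) -> tuple[dict[str, str], list[str]]:
    """Re-place VMs from the failed node; return (new placement, unplaced VMs)."""
    new_placement = {vm: node for vm, node in placement.items() if node != failed}
    # Free memory on each surviving node after its current VMs are accounted for.
    free = {node: cap for node, cap in node_mem.items() if node != failed}
    for vm, node in new_placement.items():
        free[node] -= vm_mem[vm]

    # Largest VMs first; ties are broken by name so the result is deterministic.
    displaced = sorted(
        (vm for vm, node in placement.items() if node == failed),
        key=lambda vm: (-vm_mem[vm], vm),
    )
    unplaced = []
    for vm in displaced:
        # Nodes already running another member of one of this VM's groups.
        blocked = {
            new_placement[other]
            for group in anti_groups if vm in group
            for other in group if other != vm and other in new_placement
        }
        candidates = [n for n in free if n not in blocked and free[n] >= vm_mem[vm]]
        if not candidates:
            unplaced.append(vm)
            continue
        target = min(candidates, key=lambda n: (-free[n], n))  # most free memory
        new_placement[vm] = target
        free[target] -= vm_mem[vm]
    return new_placement, unplaced


node_mem = {"node-a": 64, "node-b": 64, "node-c": 64}  # GB, illustrative
vm_mem = {"db-1": 32, "db-2": 32, "app-1": 16, "app-2": 16}
placement = {"db-1": "node-a", "db-2": "node-b", "app-1": "node-a", "app-2": "node-c"}
anti_groups = [{"db-1", "db-2"}, {"app-1", "app-2"}]

for node in node_mem:
    _, unplaced = simulate_node_failure(node, placement, vm_mem, node_mem, anti_groups)
    print(f"{node} fails -> unplaced: {unplaced or 'none'}")
```

Running the loop over every node gives a quick view of which single failures the policies can absorb. A non-empty `unplaced` list is a signal to add capacity or relax a rule before going to production.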

Vendor Lock-in: What’s the New Position of Proxmox?

The market context matters. Amidst shifts by traditional virtualization vendors, many organizations explore alternatives. In this climate, Proxmox 9 isn’t just “the latest version”: it comes with capabilities that address common concerns—like placement policies, declarative SDN, and scalable ZFS—helping it stand out.

- SMBs and mid-market: controlled cost combined with enterprise capabilities makes it hard to ignore.

- Service providers: simplified cluster orchestration and management through open tools and APIs fit well with multi-tenant catalogs.

- Enterprise IT: emphasis on governance (affinity rules), observability, and update cycles. Proxmox 9 moves in this direction.

Bottom line: far from an incremental update, Proxmox 9 clears the way for serious deployments where concerns about reliability and control once posed barriers.

Hands-On Insights: Observations After Trying It

- NIC aliasing: trivial on paper, but a significant error reducer in practice.

- Fabric in SDN: “everything in its place” when managing multiple logical domains; reduces drift between nodes.

- Hot ZFS expansion: freedom to start with 5 disks and grow to 24 without re-creating pools. It also reduces the risk of correlated failures, since disks bought at different times are less likely to wear out together.

- Affinity rules: not just “HA that starts things,” but HA that places things correctly. Ultimately, this is the difference between a platform you can operate and one that merely aspires to be.

Best Practices for “Day Two” Management

- Strict VM and node labeling: roles, criticality, failure domain.

- Template catalogs: use cloud-init and basic hardening (SSH, sudo, auditing).

- Backups with PBS: test restores regularly; taking backups and snapshots is not enough.

- Observability: cluster metrics (CPU, memory, I/O, latency) and alerting for threshold crossings.

- Runbooks: incident scripts for leaf failures, bond issues, disk failures, ZFS degradation, etc.

- Small, frequent maintenance windows, avoiding “big bang” quarterly updates.
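The observability item above can be sketched as a simple threshold check. The example is deliberately generic: the metric names, limits, and the `Threshold` and `breaches` helpers are all hypothetical, and the samples are plain lists rather than data pulled from any Proxmox or monitoring API. Requiring several consecutive samples above the limit is one common way to avoid alerting on a single spike.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit: float
    consecutive: int  # samples in a row above the limit before alerting


def breaches(samples: dict[str, list[float]], thresholds: list[Threshold]) -> list[str]:
    """Return an alert message for each threshold crossed by the latest samples."""
    alerts = []
    for t in thresholds:
        if t.consecutive < 1:
            raise ValueError("consecutive must be at least 1")
        recent = samples.get(t.metric, [])[-t.consecutive:]
        # Alert only when there are enough samples and all of them exceed the limit.
        if len(recent) == t.consecutive and all(value > t.limit for value in recent):
            alerts.append(
                f"{t.metric} above {t.limit} for {t.consecutive} consecutive samples"
            )
    return alerts


samples = {
    "cpu_percent": [70, 92, 95, 97],
    "io_wait_percent": [3, 25, 4, 5],
}
thresholds = [
    Threshold("cpu_percent", limit=90, consecutive=3),
    Threshold("io_wait_percent", limit=20, consecutive=2),
]
for alert in breaches(samples, thresholds):
    print(alert)
```

Here only the CPU threshold fires, because the I/O wait spike was a single sample. In practice the same logic usually lives in the monitoring system’s alert rules rather than in a script.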

Summary

Proxmox 9 doesn’t aim to dazzle with fireworks. Instead, it does something equally valuable: it brings the platform closer to the operational standards of production environments. The Debian 13 base, reorganized repositories, network aliases, SDN with spine–leaf, live ZFS growth, and affinity rules together outline a solution that prioritizes resilience and control.

If Proxmox VE 8 marked a turning point where many began viewing it beyond a “free hypervisor,” Proxmox 9 is the version that invites users to operate it as a full platform. With method, testing, and governance, it’s prepared for larger challenges.

Frequently Asked Questions (FAQ)

What are the main changes in Proxmox 9 compared to 8?

Beyond the Debian 13 “Trixie” base and the repository restructuring, it introduces network aliases (more straightforward operation), SDN with a spine–leaf fabric (logical network cohesion), hot ZFS expansion (capacity without pool re-creation), and, most importantly, a set of affinity and anti-affinity rules for smarter VM placement in HA setups.

Can I deploy Proxmox 9 directly into production?

Technically yes, but a cautious approach is advised: run staging tests with real workloads, validate playbooks, test failure scenarios (HA, network, disks), and plan a rollback strategy. Rather than introducing SDN, ZFS, and affinity rules all at once, stage them sequentially: first network, then storage, then policies.

Who benefits most from Proxmox 9?

Teams requiring control (via affinity rules), storage expansion without reconfiguration (using ZFS), and logical network organization (via SDN) without vendor lock-in. Service providers, mid-market enterprises, and IT teams favoring infra-as-code will find it particularly advantageous.

What are the risks in upgrading to Debian 13?

Risks include kernel modules, drivers, and dependencies changing as packages move to the new repositories. Mitigate this with staging environments, version control for tooling (Ansible, Terraform, Packer), and thorough observability in the early weeks of deployment.