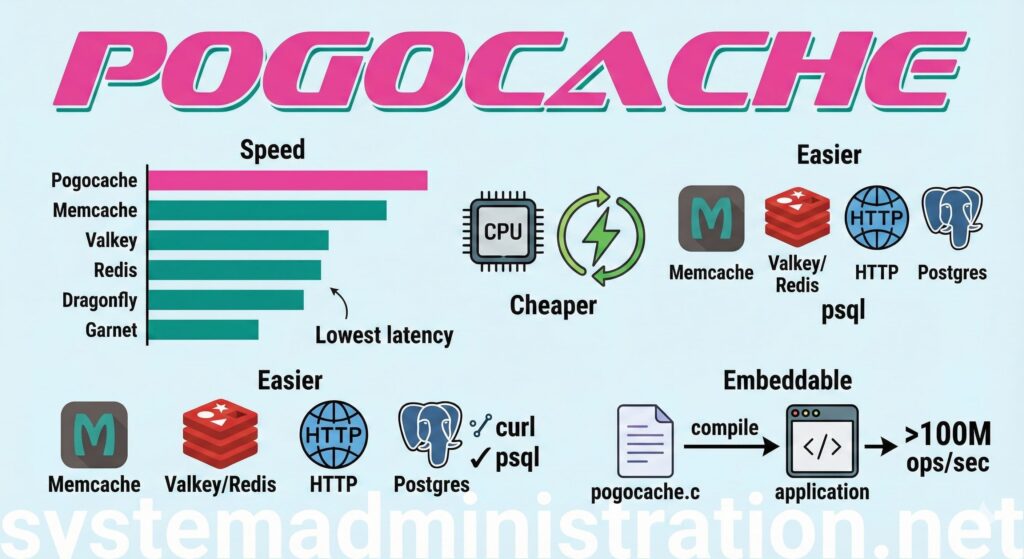

In infrastructure, trends change but the needs stay largely the same: less latency, less CPU per request, and fewer surprises in production. In this space, where Redis, Memcached, Valkey, and Dragonfly have been household names for years, Pogocache is gaining attention. It is a cache server written in C that claims to be optimized “from scratch” for fast responses and CPU efficiency, and it offers a rare feature: support for several wire protocols at once.

The idea is straightforward: a single piece of software that existing tools and libraries can use as a cache, because it understands Memcache, RESP (Valkey/Redis), HTTP, and even the PostgreSQL wire protocol. For teams that want to change the engine without rewriting half the stack, this compatibility is arguably the main “feature”: it can be tested with redis-cli/valkey-cli, curl, psql, or traditional Memcached clients.

What exactly is Pogocache (and why isn’t it just “another Redis”)

Pogocache is presented as a key-value cache designed to run as a service (daemon), but it also offers an uncommon option: embedded mode. Instead of exposing a network port, it can be compiled directly into your application via a self-contained file (pogocache.c), eliminating networking overhead when you need “bare metal” performance.

Its design focuses on:

- Sharded hash map (multiple “shards” to distribute contention).

- Robin Hood hashing (to improve collision behavior).

- Lightweight locks per shard.

- Thread-based network model with event queues (epoll/kqueue) and optimizations such as io_uring on Linux when available.
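To make the sharding and per-shard locking points concrete, here is a minimal Python sketch of the general technique. It is an illustration only, not Pogocache's actual C implementation: the class name `ShardedCache`, the shard count, and the use of `crc32` as the hash function are all assumptions made for the example.

```python
import threading
import zlib


class ShardedCache:
    """Toy sharded key-value store: one dict and one lock per shard."""

    def __init__(self, num_shards=16):
        self._shards = [{} for _ in range(num_shards)]
        self._locks = [threading.Lock() for _ in range(num_shards)]

    def _index(self, key):
        # A stable hash of the key picks the shard; crc32 is only a stand-in.
        return zlib.crc32(key.encode("utf-8")) % len(self._shards)

    def set(self, key, value):
        i = self._index(key)
        with self._locks[i]:  # only this shard is locked, not the whole map
            self._shards[i][key] = value

    def get(self, key):
        i = self._index(key)
        with self._locks[i]:
            return self._shards[i].get(key)

    def delete(self, key):
        i = self._index(key)
        with self._locks[i]:
            if key in self._shards[i]:
                del self._shards[i][key]
                return True
            return False
```

Two threads that touch keys in different shards never wait on each other, which is the point of spreading contention across shards.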

Put simply: it’s built so that the basic operations (SET/GET/DEL) are CPU-cheap and latency-stable, which is exactly where it matters when scaling a service.
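Robin Hood hashing, one of the design points listed above, is easier to grasp with a small example. In an open-addressing table, each entry remembers how far it sits from its "home" slot; when a new entry has traveled farther than the resident of a slot, it takes that slot and the displaced resident keeps probing. The sketch below is a toy, fixed-capacity version in Python (no resizing, no deletion). The class `RobinHoodMap` and its layout are hypothetical and say nothing about how Pogocache lays out its table internally.

```python
class RobinHoodMap:
    """Toy fixed-capacity open-addressing map with Robin Hood insertion."""

    def __init__(self, capacity=8):
        # Each slot is None or a tuple (key, value, probe_distance).
        self._slots = [None] * capacity
        self._size = 0

    def _home(self, key):
        return hash(key) % len(self._slots)

    def _find(self, key):
        n = len(self._slots)
        idx, dist = self._home(key), 0
        while dist < n:
            slot = self._slots[idx]
            # Empty slot, or a resident closer to home than we are: key is absent.
            if slot is None or slot[2] < dist:
                return None
            if slot[0] == key:
                return idx
            idx, dist = (idx + 1) % n, dist + 1
        return None

    def put(self, key, value):
        found = self._find(key)
        if found is not None:  # update in place, keep the probe distance
            self._slots[found] = (key, value, self._slots[found][2])
            return
        if self._size == len(self._slots):
            raise RuntimeError("table full (this sketch does not resize)")
        n = len(self._slots)
        idx, dist = self._home(key), 0
        while True:
            slot = self._slots[idx]
            if slot is None:
                self._slots[idx] = (key, value, dist)
                self._size += 1
                return
            if slot[2] < dist:
                # The resident is "richer" (closer to home): take its slot and
                # keep inserting the displaced entry further along.
                self._slots[idx] = (key, value, dist)
                key, value, dist = slot
            idx, dist = (idx + 1) % n, dist + 1

    def get(self, key, default=None):
        found = self._find(key)
        return default if found is None else self._slots[found][1]
```

The effect is that probe distances stay more even across entries, which is why the technique is associated with more predictable lookups under collisions.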

Performance comparison: what benchmarks say “under real conditions”

Honesty is key here: there is no universal “fastest”. It depends on hardware, workload, value size, pipelining, persistence, network, etc. But Pogocache has positioned itself with concrete, replicable numbers.

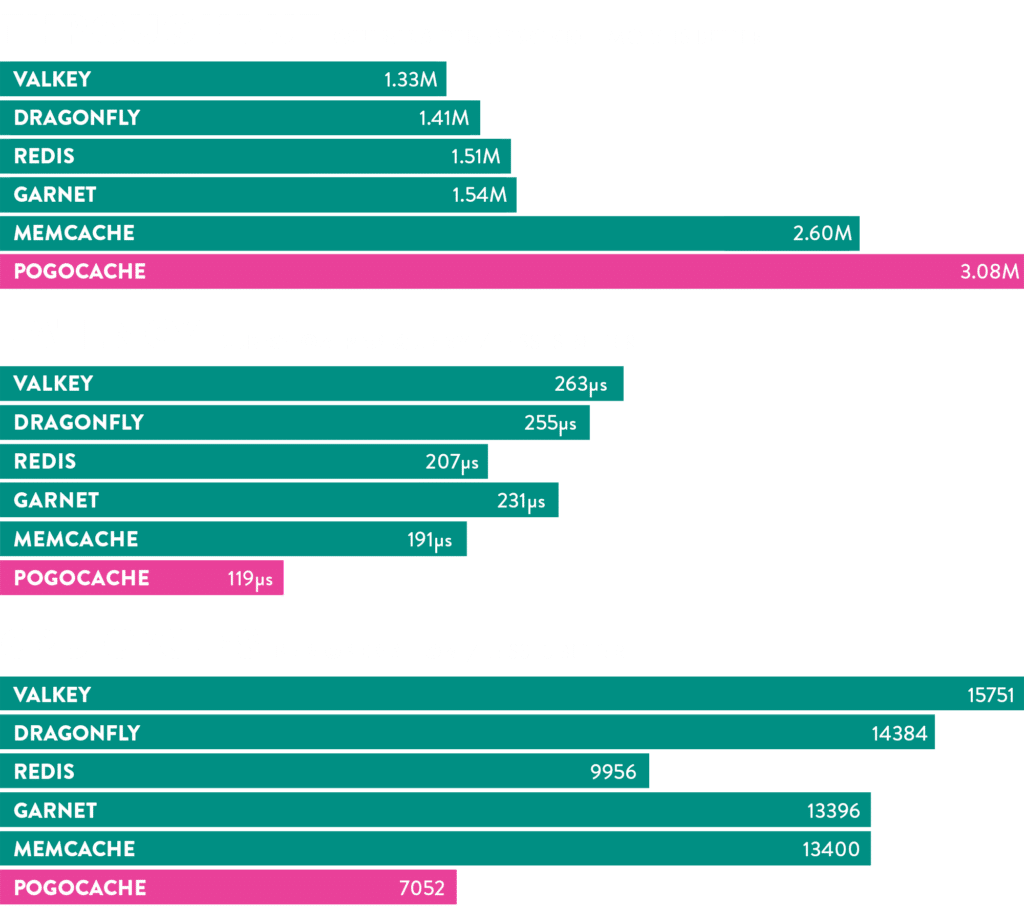

In its public benchmark (8 threads on an AWS c8g.8xlarge instance), Pogocache reaches 3.08 million QPS, outperforming popular alternatives in that scenario:

- Pogocache: 3.08 M QPS

- Memcache: 2.60 M QPS

- Garnet: 1.54 M QPS

- Redis: 1.51 M QPS

- Dragonfly: 1.41 M QPS

- Valkey: 1.33 M QPS

The important nuance is how the measurements are made. The associated benchmark repository explains that the tests use memtier_benchmark over local UNIX pipe connections, with persistence disabled and 31 runs per chart. The median is taken as the representative value, and the results also include latency percentiles (p50/p90/p99 and above) and CPU cycles measured with perf. In other words, this is not a one-off “snapshot” without methodology: there is context and repetition.
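If you want to summarize your own runs in the same spirit (median across repeated runs, plus latency percentiles), a small Python helper is enough. This is a sketch under assumptions: the function names are invented for the example, the input numbers are placeholders rather than real measurements, and the benchmark repository may compute its percentiles differently.

```python
import statistics


def median_qps(qps_runs):
    """Median QPS across repeated benchmark runs (e.g. 31 runs)."""
    return statistics.median(qps_runs)


def latency_percentiles(latencies_ms):
    """Return p50/p90/p99 from a list of per-request latencies."""
    # With n=100, statistics.quantiles returns the 99 cut points p1..p99.
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {"p50": cuts[49], "p90": cuts[89], "p99": cuts[98]}


# Placeholder data only: 31 synthetic "runs" and 101 synthetic latencies.
runs = list(range(1, 32))
latencies = list(range(1, 102))
print(median_qps(runs))
print(latency_percentiles(latencies))
```

Using the median rather than the mean keeps a single noisy run from skewing the reported figure.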

Does that mean everyone will see exactly the same margin? Not necessarily. But it points to a trend: if your bottleneck is latency or CPU in the cache layer, Pogocache deserves at least a controlled test.
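For a sense of scale, the published figures above can be turned into ratios with a few lines of Python. The numbers are the ones listed in this article; the helper name `speedup_over_others` is just for illustration, and the ratios only describe that specific benchmark scenario.

```python
# QPS in millions, as listed in the public benchmark cited above.
published_qps_millions = {
    "Pogocache": 3.08,
    "Memcache": 2.60,
    "Garnet": 1.54,
    "Redis": 1.51,
    "Dragonfly": 1.41,
    "Valkey": 1.33,
}


def speedup_over_others(name, results):
    """Ratio of `name`'s throughput to every other entry, rounded to 2 places."""
    base = results[name]
    return {
        other: round(base / qps, 2)
        for other, qps in results.items()
        if other != name
    }


print(speedup_over_others("Pogocache", published_qps_millions))
```

In that scenario Pogocache lands at roughly 1.18x Memcache and about 2x Redis, which is the kind of gap worth re-measuring on your own hardware before drawing conclusions.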

How to install Pogocache

Option 1: compile from source code

On Linux/macOS systems (64-bit), the usual steps are:

git clone https://github.com/tidwall/pogocache

cd pogocache

make

This produces the pogocache binary.

Option 2: Docker

For quick testing without cluttering your machine:

docker run --net=host pogocache/pogocache

(In environments where --net=host isn’t suitable, you’ll need to manually map ports.)

Starting Pogocache (and minimal adjustments for production)

The default start runs the service on 127.0.0.1:9401:

./pogocache

To listen on another IP:

./pogocache -h 0.0.0.0

And some common flags to know:

- --threads: number of I/O threads.

- --maxmemory: memory limit (defaults to a percentage).

- --evict: whether to evict keys upon reaching maxmemory.

- --auth: password/token for authentication.

- TLS: --tlsport, --tlscert, --tlskey, --tlscacert.

Example with password:

./pogocache --auth "MyLongPassword"

How to use it: 3 practical methods (HTTP, Redis/Valkey, and Postgres)

1) HTTP with curl (PUT / GET / DELETE)

Save value:

curl -X PUT -d "my value" http://localhost:9401/mykey

Read value:

curl http://localhost:9401/mykey

Delete:

curl -X DELETE http://localhost:9401/mykey

TTL (in seconds) as query param:

curl -X PUT -d "value with TTL" "http://localhost:9401/mykey?ttl=15"
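The same HTTP calls can be made from application code. The sketch below uses only Python's standard library and mirrors the curl commands above (PUT, GET, DELETE, and the `ttl` query parameter). The helper names are made up for the example, and the address assumes a local server on the default port; status codes and response bodies are whatever the server returns, so none are assumed here.

```python
import urllib.parse
import urllib.request

BASE_URL = "http://localhost:9401"  # default address used in this article


def _url(key, ttl=None):
    url = f"{BASE_URL}/{urllib.parse.quote(key, safe='')}"
    if ttl is not None:
        url += "?" + urllib.parse.urlencode({"ttl": ttl})
    return url


def put_request(key, value, ttl=None):
    """Build (but do not send) a PUT request storing `value` under `key`."""
    return urllib.request.Request(
        _url(key, ttl), data=value.encode("utf-8"), method="PUT"
    )


def get_request(key):
    return urllib.request.Request(_url(key), method="GET")


def delete_request(key):
    return urllib.request.Request(_url(key), method="DELETE")


def send(request, timeout=2):
    """Send a prepared request and return (status, body bytes)."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return resp.status, resp.read()


# Example usage against a running server:
#   send(put_request("mykey", "value with TTL", ttl=15))
#   send(get_request("mykey"))
#   send(delete_request("mykey"))
```

Separating "build the request" from "send it" keeps the helpers easy to unit test without a live server.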

2) RESP (Valkey/Redis) with valkey-cli or redis-cli

valkey-cli -p 9401

SET mykey myvalue

GET mykey

DEL mykey

If you activated --auth:

valkey-cli -p 9401 -a "MyLongPassword"
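Under the hood, clients such as valkey-cli send each command as a RESP array of bulk strings. The Python sketch below shows that encoding for the commands used above and a bare-bones way to send it over a socket. The function names are hypothetical, the host and port assume the default local setup, and the reply is returned raw rather than parsed.

```python
import socket


def encode_resp(*args):
    """Encode a command as a RESP array of bulk strings."""
    parts = [b"*%d\r\n" % len(args)]
    for arg in args:
        data = arg if isinstance(arg, bytes) else str(arg).encode("utf-8")
        parts.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(parts)


def send_command(*args, host="127.0.0.1", port=9401, timeout=2):
    """Send one command and return the raw reply bytes (not parsed)."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(encode_resp(*args))
        # A single recv is enough for small replies; a real client would
        # keep reading until the reply is complete.
        return sock.recv(4096)


# Example usage against a running server:
#   send_command("SET", "mykey", "myvalue")
#   send_command("GET", "mykey")
#   send_command("DEL", "mykey")
```

In practice you would use an existing Redis or Valkey client library; the point here is only that nothing protocol-specific to Pogocache is needed on the wire.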

3) “Postgres mode” with psql (yes, exactly as it sounds)

Connect:

psql -h localhost -p 9401

And operate:

SET mykey myvalue;

GET mykey;

DEL mykey;

This opens an interesting door: using Postgres libraries in languages with mature tooling, or even automating tests with psql without installing Redis clients.
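As a sketch of the "automating tests with psql" idea, the snippet below wraps psql's standard `-c` option with Python's `subprocess` module. The helper names are invented for the example, it assumes psql is installed and a server is listening on the default local port, and the exact shape of the output psql prints for a Pogocache reply is not assumed.

```python
import subprocess


def psql_argv(command, host="localhost", port=9401):
    """Build the psql command line for running a single command."""
    return ["psql", "-h", host, "-p", str(port), "-c", command]


def run_psql(command, host="localhost", port=9401, timeout=5):
    """Run one command through psql and return its stdout as text."""
    result = subprocess.run(
        psql_argv(command, host=host, port=port),
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,  # raise CalledProcessError if psql exits non-zero
    )
    return result.stdout.strip()


# Example usage against a running server:
#   print(run_psql("GET mykey"))
```

For application code, a regular Postgres driver would be the more natural route; shelling out to psql is mainly convenient in smoke tests and CI scripts.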

When does it make sense (and when not)

Pogocache fits especially well when you want to:

- Maximize speed in simple operations (GET/SET/DEL).

- Replace or supplement Redis/Memcached without changing clients.

- Use an embedded mode to eliminate network overhead.

- Prioritize low latency and CPU efficiency over complex features.

And it’s wise to be cautious if:

- You need a large ecosystem of modules, scripts, and patterns already “standard” in Redis.

- Distributed high availability out of the box is a must (Pogocache talks about a future roadmap and approach, but today its strength is single-node performance and operational simplicity).

FAQs

Can Pogocache replace Redis in an existing project?

In many cases yes, at the client/protocol level, since it speaks RESP (Valkey/Redis) and supports common commands (SET/GET/DEL/TTL/EXPIRE, etc.). The key is to check whether the app relies on advanced Redis-specific features.

What’s the actual advantage of supporting HTTP and Postgres along with Redis/Memcache?

It reduces friction: you can test and operate it with universal tools (curl), or integrate it into environments that already have Postgres tooling (psql, drivers), without relying on specialized clients.

Are the QPS benchmarks “real” or marketing?

They are numbers published alongside a methodology repository and scripts, which makes them traceable. Still, you should validate with your own workload (value size, concurrency, network, persistence, etc.).

How does Pogocache ensure reliability in production?

It includes authentication via password/token, TLS/HTTPS support via startup flags, and options to limit resources (threads, memory, connections) and control eviction policies.

Source: Administración de Sistemas