The multi-billion-dollar deal marks a new chapter in the race to dominate large-scale AI model training

Oracle has signed a strategic agreement with AMD to deploy a cluster of 30,000 MI355X GPUs, reinforcing its push to expand its infrastructure for training artificial intelligence models. Larry Ellison, Oracle’s CTO, announced the deal during the company’s fiscal 2025 third-quarter earnings call, describing the contract as a “multi-billion dollar” commitment.

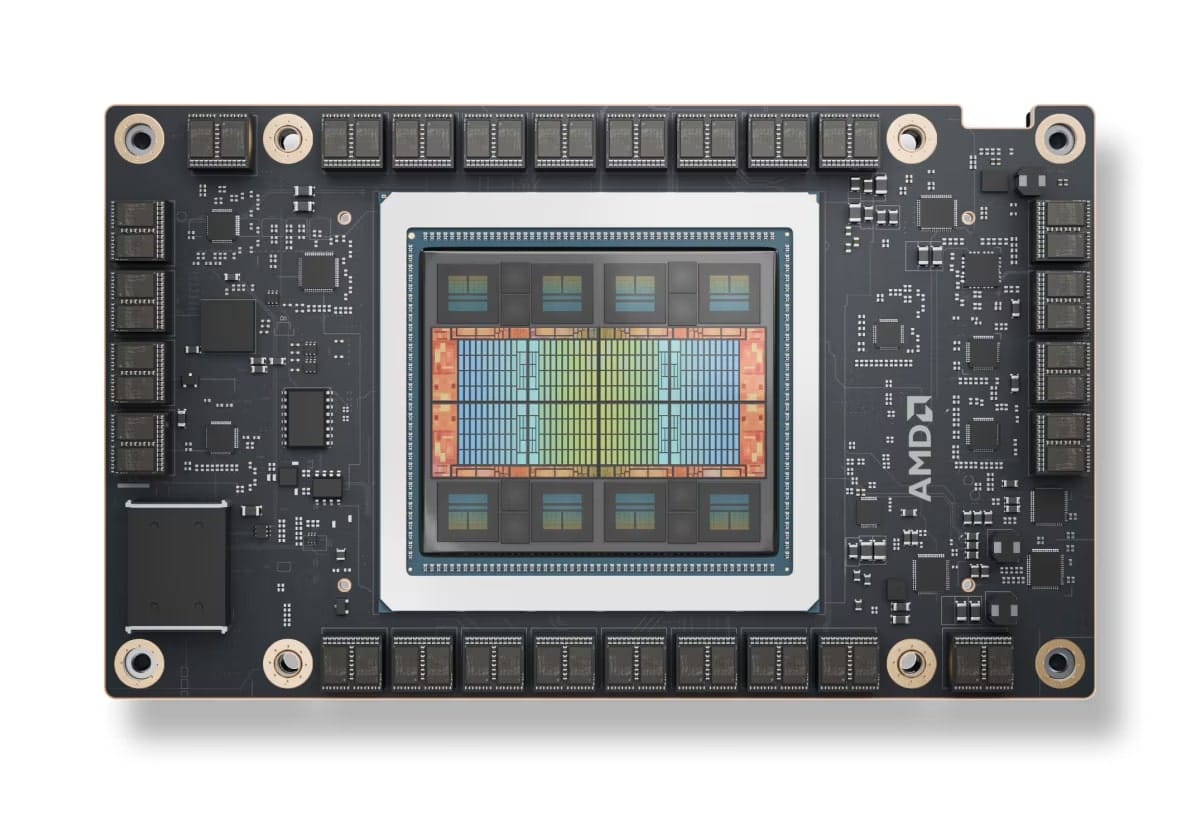

The new AMD GPUs, manufactured on TSMC’s 3nm node and based on the CDNA 4 architecture, are positioned as one of the strongest alternatives to Nvidia, which currently dominates the accelerated computing segment. Each unit carries 288 GB of HBM3E memory with up to 8 TB/s of memory bandwidth, making the MI355X a direct competitor to Nvidia’s B100 and B200.

With a thermal design power (TDP) of 1,100 watts, these cards require liquid cooling, which underscores both the technical sophistication and the energy demands of high-performance clusters of this kind.
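For a rough sense of scale, the per-GPU figures above can be multiplied out across the 30,000-unit cluster. The Python sketch below is a back-of-envelope estimate, not an Oracle or AMD figure: it assumes every GPU has the reported 288 GB of HBM3E and a 1,100 W TDP, uses decimal units, and counts only GPU power, ignoring CPUs, networking, storage, and cooling overhead. The constant and function names are illustrative.

```python
# Back-of-envelope totals for the reported 30,000-GPU cluster.
# Assumption: uses only the per-GPU figures quoted in this article,
# in decimal units (1 PB = 1,000,000 GB; 1 MW = 1,000,000 W).
GPU_COUNT = 30_000
HBM_PER_GPU_GB = 288   # HBM3E capacity per MI355X, as reported
TDP_PER_GPU_W = 1_100  # per-GPU TDP, as reported


def aggregate_hbm_pb(gpus: int, gb_per_gpu: int) -> float:
    """Total HBM capacity across all GPUs, in petabytes."""
    return gpus * gb_per_gpu / 1_000_000


def aggregate_tdp_mw(gpus: int, watts_per_gpu: int) -> float:
    """Sum of GPU TDPs in megawatts (GPUs only; no CPUs, network, or cooling)."""
    return gpus * watts_per_gpu / 1_000_000


if __name__ == "__main__":
    print(f"Aggregate HBM: {aggregate_hbm_pb(GPU_COUNT, HBM_PER_GPU_GB):.2f} PB")
    print(f"Aggregate GPU TDP: {aggregate_tdp_mw(GPU_COUNT, TDP_PER_GPU_W):.1f} MW")
```

Run as a script, it prints about 8.64 PB of combined HBM and 33.0 MW of combined GPU TDP. Real facility power would be noticeably higher once the rest of each server and the cooling plant are included.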

Where will the cluster be located?

Oracle has not yet disclosed where the 30,000-GPU cluster will be located. Specialized outlets such as DCD have asked the company whether the infrastructure will be linked to Stargate, the project it is developing in collaboration with OpenAI.

In the same quarter, Oracle also announced the deployment of 64,000 Nvidia GB200 GPUs for OpenAI at a data center in Abilene, Texas, which it leases from Crusoe. However, the company acknowledged during the earnings call that the agreement with OpenAI has not yet been formalized, although it is expected to be finalized soon.

AMD strengthens its presence in large cloud deployments

This is not the first contract between Oracle and AMD. In September 2024, Oracle was already using AMD Instinct MI300X chips to power its OCI Compute Supercluster, which can scale up to 16,384 GPUs and was presented as a competitive alternative to other GPU-based high-performance computing offerings.

The addition of the MI355X not only expands Oracle’s capacity to compete in the emerging generative AI market but also confirms AMD’s growing appeal as a high-performance supplier for companies seeking to reduce their dependence on Nvidia.

A new phase in the race for AI training

Oracle’s move comes amid increasingly intense competition to secure the resources needed to train next-generation AI models. As models grow in size and complexity and computational demands escalate, access to specialized, efficient hardware has become a strategic advantage.

Moreover, high energy costs and the need for data centers optimized for liquid cooling are pushing industry players to seek more sustainable and powerful solutions. With this agreement, Oracle positions itself as one of the leading players capable of providing large-scale computational power, both for its own infrastructure and for customers in the cloud and AI ecosystem.

The planned deployment of the MI355X GPUs by mid-2025 represents a clear step forward in the evolution of accelerated computing, with Oracle and AMD as key allies in this new technological chapter.

via: DCD