In artificial intelligence, the effort to improve reasoning in language models has taken a new step with Open-R1, a project that aims to openly reconstruct the training pipeline of DeepSeek-R1. The initiative, which has drawn wide attention in the tech community, builds on the recent release of DeepSeek-R1 and its novel use of reinforcement learning to boost reasoning on complex tasks.

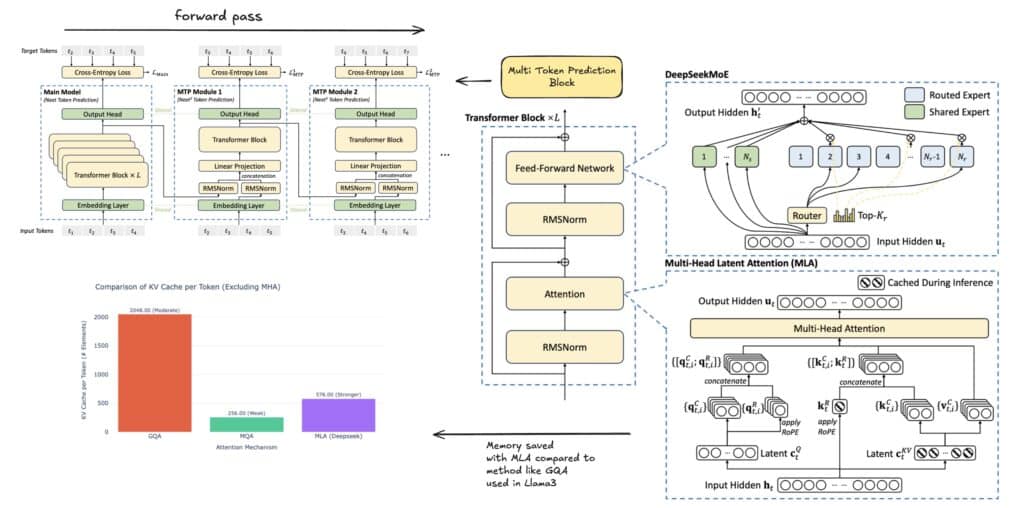

DeepSeek-R1 initially stood out for its ability to solve mathematical, programming, and logical problems, matching or even outperforming reference models such as OpenAI’s o1 on several benchmarks. The key to its success is spending more compute at inference time: through reinforcement learning, the model learns to break complex problems into steps and to verify its own answers. The model is built on DeepSeek-V3, a powerful 671B-parameter Mixture of Experts (MoE) model whose performance is comparable to other leading models such as Claude 3.5 Sonnet and GPT-4o. One of the most striking aspects of DeepSeek-V3 was its cost efficiency: its training reportedly cost just $5.5 million, thanks to architectural innovations such as Multi-Token Prediction (MTP) and Multi-Head Latent Attention (MLA).
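To make the Mixture of Experts idea more concrete: in an MoE layer, a router scores every expert for each token, but only a few experts actually run, which is how a very large model can activate only a fraction of its weights per token. The sketch below shows generic top-k softmax gating on scalar inputs. It is an illustrative simplification, not DeepSeek-V3’s actual router (which differs in its gating function and load-balancing details), and the names `moe_forward` and `router_logits` are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, router_logits, experts, k=2):
    """Route one token through the top-k experts of a generic MoE layer.

    x: the token's input (a float here, for simplicity)
    router_logits: one score per expert, from a hypothetical router
    experts: list of callables, one per expert
    k: number of experts activated for this token
    """
    probs = softmax(router_logits)
    # Select the k experts with the highest routing probability.
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    # Renormalize the gate weights over the selected experts only.
    norm = sum(probs[i] for i in top)
    # Only the selected experts are evaluated; the others are skipped.
    output = sum(probs[i] / norm * experts[i](x) for i in top)
    return output, top


# Four toy "experts" that just scale their input by a fixed factor.
experts = [lambda v, s=s: v * s for s in (1.0, 2.0, 3.0, 4.0)]
out, chosen = moe_forward(1.0, [0.1, 2.0, 0.3, 1.5], experts, k=2)
print(chosen)  # [1, 3]
```

With `k=2`, only two of the four experts do any work for this token; in a real model the same principle keeps per-token compute far below what the total parameter count would suggest.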

DeepSeek-R1 differs from its sibling DeepSeek-R1-Zero in how it was trained. The “Zero” variant skipped human supervision entirely and relied solely on reinforcement learning with Group Relative Policy Optimization (GRPO). DeepSeek-R1, by contrast, began with a “cold start” phase, in which the model was fine-tuned on a small set of carefully curated examples to make its responses clearer and more readable. It then went through further rounds of reinforcement learning and refinement, including rejecting low-quality outputs using both verifiable rewards and rewards based on human preferences.
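GRPO’s central idea is that, instead of training a separate value model as a baseline, it samples a group of completions for the same prompt and scores each one relative to the rest of its group. The following is a minimal sketch of that advantage computation, paired with a toy rule-based “verifiable” reward. The function names, the last-line answer format, and the `eps` term are illustrative assumptions, and the full GRPO objective (a clipped policy ratio plus a KL penalty) is not shown.

```python
import statistics


def group_relative_advantages(rewards, eps=1e-6):
    """Normalize each reward against its own group, GRPO-style.

    rewards: scalar rewards for G completions sampled for the same prompt.
    Returns one advantage per completion: (r_i - mean) / (std + eps).
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std over the group
    return [(r - mean) / (std + eps) for r in rewards]


def accuracy_reward(completion, reference):
    """Toy verifiable reward: 1.0 if the last line equals the reference answer."""
    lines = completion.strip().splitlines()
    answer = lines[-1].strip() if lines else ""
    return 1.0 if answer == reference.strip() else 0.0


# Four sampled completions for one prompt whose correct answer is "42".
completions = ["6 * 7\n42", "6 * 7\n41", "six sevens\n42", "guess\n7"]
rewards = [accuracy_reward(c, "42") for c in completions]
advantages = group_relative_advantages(rewards)
```

Correct completions receive a positive advantage and incorrect ones a negative advantage (here roughly +1 and -1). A group in which every completion earns the same reward yields all-zero advantages and therefore contributes no learning signal; the `eps` term only guards against division by zero in that case.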

Despite DeepSeek-R1’s impact, however, its release left some questions unanswered. Although the model weights were published, the datasets and code used to train it were not. To close this gap, Open-R1 aims to reconstruct these missing components so that both the research community and industry can replicate, or even improve on, the results achieved by DeepSeek-R1.

Open-R1’s plan of action is structured around three main steps:

- Replication of the R1-Distill models: distill a high-quality reasoning dataset from DeepSeek-R1.

- Reconstruction of the reinforcement learning pipeline: reproduce the pure reinforcement learning process that produced R1-Zero, which will require curating new large-scale datasets for mathematics, reasoning, and coding.

- Validation of multi-stage training: show that a base model can be taken through supervised fine-tuning (SFT) and then through reinforcement learning (base model → SFT → RL).
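The first step, distilling a reasoning dataset from DeepSeek-R1, can be sketched as a filtering pass: collect reasoning traces from the teacher model, keep only those whose final answer checks out against a reference, and store them as chat-formatted examples for supervised fine-tuning. This is one plausible approach, not Open-R1’s actual code. The record fields (`problem`, `reference`, `teacher_output`), the `\boxed{}` answer convention, and the helper names are assumptions for illustration, and generating the traces from the teacher model is left out.

```python
import json
import re

# Matches \boxed{...} without nested braces (a deliberate simplification).
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def extract_boxed(text):
    """Return the content of the last \\boxed{...} in text, or None."""
    matches = BOXED.findall(text)
    return matches[-1].strip() if matches else None


def build_sft_records(samples):
    """Keep only teacher traces whose final answer matches the reference.

    samples: iterable of dicts with hypothetical keys
      'problem', 'reference', and 'teacher_output' (a full reasoning trace).
    Returns chat-style records suitable for supervised fine-tuning.
    """
    records = []
    for s in samples:
        if extract_boxed(s["teacher_output"]) == s["reference"]:
            records.append({
                "messages": [
                    {"role": "user", "content": s["problem"]},
                    {"role": "assistant", "content": s["teacher_output"]},
                ]
            })
    return records


def to_jsonl(records):
    """Serialize records as JSON Lines, one example per line."""
    return "\n".join(json.dumps(r) for r in records)


samples = [
    {"problem": "What is 6 * 7?", "reference": "42",
     "teacher_output": "<think>6 * 7 = 42</think> The answer is \\boxed{42}."},
    {"problem": "What is 2 + 2?", "reference": "4",
     "teacher_output": "<think>2 + 2 = 5?</think> \\boxed{5}"},
]
records = build_sft_records(samples)  # only the correct trace survives
```

Filtering on answer correctness trades dataset size for quality. A production pipeline would also need a more robust answer checker than exact string matching (for example, one that treats `0.5` and `1/2` as equal).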

Synthetic datasets will make it easier for researchers and developers to turn existing language models into specialized reasoning models. In addition, documenting the process in detail is meant to help others avoid wasting compute and time on unproductive approaches.

The significance of this initiative extends beyond the realm of mathematics or programming. The potential impact of reasoning models spans diverse fields such as medicine and other scientific areas, where the ability to break down and analyze complex problems can make a significant difference.

Open-R1 thus presents itself not only as a technical replication exercise but as a proposal for open collaboration. By inviting the community to contribute code, engage in discussions on platforms like Hugging Face, and bring ideas forward, the project seeks to lay the groundwork for the development of future artificial intelligence models with advanced reasoning capabilities.

The commitment to transparency and collaboration in the field of reinforcement learning opens new perspectives in the development of artificial intelligence technologies, ushering in an era where science and industry work hand-in-hand to unravel the challenges of automated reasoning.