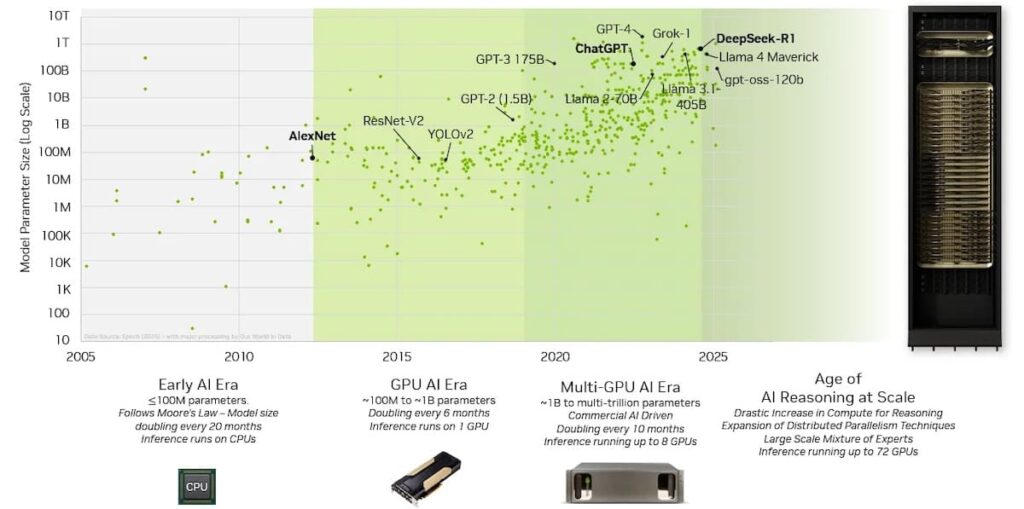

In less than a decade, the field of artificial intelligence has moved from training models with tens of millions of parameters to working with trillions. This escalation, driven by LLMs such as GPT-4, Llama 3.1, and Claude Sonnet, has posed not only an algorithmic challenge but also an unprecedented infrastructure challenge. Chips alone are no longer enough: what matters is how they are interconnected.

In this context, NVIDIA NVLink has evolved into the backbone of AI factories. With the launch of NVLink Fusion, the company goes a step further: it is opening its interconnect technology so that hyperscalers, governments, and large corporations can build custom superclusters, integrating their own CPUs and XPUs into the same communication fabric that currently powers NVL72 and NVL300 racks.

From PCIe to NVLink: a decade of silent revolution

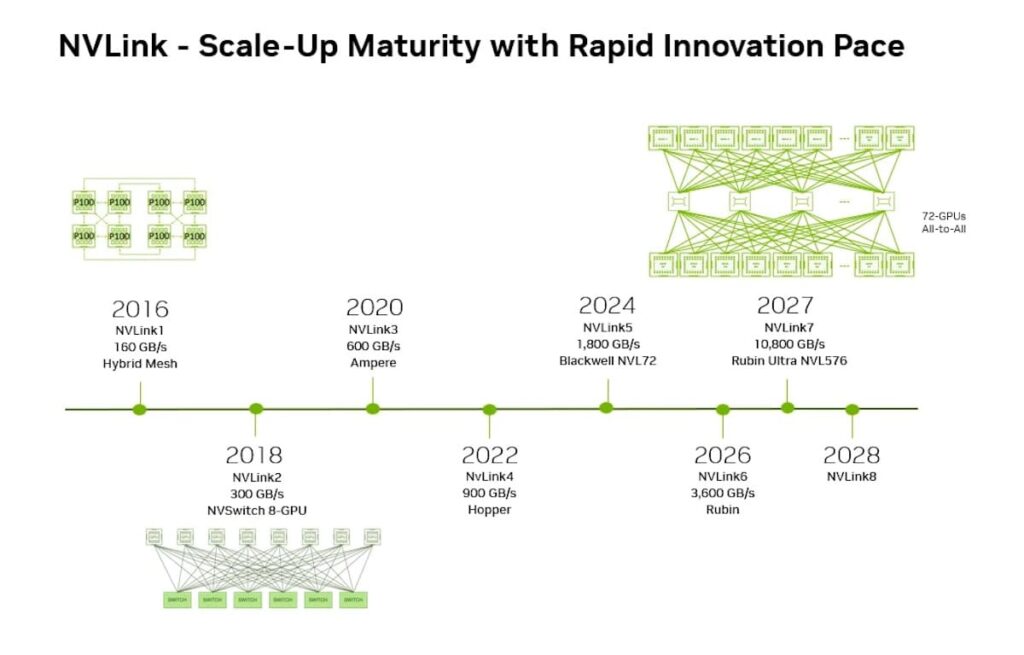

When NVIDIA introduced the first generation of NVLink in 2016, the goal was to surpass the limitations of PCIe Gen3, which had already become insufficient for HPC and deep learning workloads. Since then, the evolution has been meteoric:

- NVLink 1 (2016): Faster GPU-GPU communication than PCIe, with unified memory.

- NVLink Switch (2018): 300 GB/s of all-to-all bandwidth between every GPU in 8-GPU topologies, marking the first mid-scale fabric.

- NVLink 3 and 4 (2020-2023): Introduction of SHARP (Scalable Hierarchical Aggregation and Reduction Protocol) for in-network collective operations, reducing latency in massive clusters.

- NVLink 5 (2024): Support for 72 GPUs in an all-to-all topology at 1.8 TB/s per GPU, for an aggregate bandwidth of about 130 TB/s, roughly 800 times that of the first generation.
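As a quick sanity check on these figures, the aggregate bandwidth is simply the per-GPU figure multiplied by the domain size. The sketch below assumes 160 GB/s per GPU for first-generation NVLink (the Tesla P100 figure), a value not stated in this article, and compares it with the whole 72-GPU domain, which appears to be the comparison behind the ~800x claim.

```python
# Back-of-the-envelope check of the NVLink 5 figures quoted above.
# All bandwidths are bidirectional, in TB/s.

GPUS_PER_DOMAIN = 72        # GPUs in one NVL72 NVLink domain
NVLINK5_PER_GPU = 1.8       # NVLink 5 bandwidth per GPU (TB/s)
NVLINK1_PER_GPU = 0.16      # assumption: first-gen NVLink on Tesla P100 (160 GB/s)

aggregate = GPUS_PER_DOMAIN * NVLINK5_PER_GPU   # total bandwidth of the domain
ratio = aggregate / NVLINK1_PER_GPU             # domain total vs. one first-gen GPU

print(f"Aggregate NVLink 5 bandwidth: {aggregate:.1f} TB/s")
print(f"Compared with first-generation NVLink: ~{ratio:.0f}x")
```

This prints 129.6 TB/s and ~810x, consistent with the rounded ~130 TB/s and ~800x figures.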

Today, NVL72 racks, the foundation of Blackwell systems such as GB200 NVL72, set the de facto standard for exascale AI factories.

The real bottleneck: inference

While headlines often focus on training costs, many analysts argue that the real economic challenge lies in inference: delivering real-time results to the millions of users of ChatGPT, Gemini, Claude, or Copilot.

This is where the interconnect becomes critical, because it directly shapes three metrics:

- Time to first token (latency).

- Tokens per second per user (throughput).

- Tokens per second per megawatt (energy efficiency).
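The three metrics can be made concrete with a small calculation. The sketch below uses hypothetical, illustrative measurements (not NVIDIA data), and the class, field, and function names are invented for this example. It also assumes one common convention for per-user throughput: the tokens streamed after the first one, divided by the time it took to stream them.

```python
from dataclasses import dataclass


@dataclass
class ServingSample:
    """Hypothetical measurements from one inference benchmark (illustrative only)."""
    request_sent_s: float        # when the user's request was issued
    first_token_s: float         # when the first output token arrived
    last_token_s: float          # when the last output token arrived
    output_tokens: int           # tokens generated for this user
    cluster_tokens_per_s: float  # total output tokens/s across all concurrent users
    cluster_power_mw: float      # average cluster power draw, in megawatts


def time_to_first_token(s: ServingSample) -> float:
    """Latency: seconds from request to first token."""
    return s.first_token_s - s.request_sent_s


def tokens_per_second_per_user(s: ServingSample) -> float:
    """Per-user throughput: decode rate after the first token has arrived."""
    return (s.output_tokens - 1) / (s.last_token_s - s.first_token_s)


def tokens_per_second_per_megawatt(s: ServingSample) -> float:
    """Energy efficiency: cluster-wide output tokens/s per megawatt."""
    return s.cluster_tokens_per_s / s.cluster_power_mw


sample = ServingSample(
    request_sent_s=0.0, first_token_s=0.25, last_token_s=5.25,
    output_tokens=501, cluster_tokens_per_s=1_200_000.0, cluster_power_mw=1.5,
)
print(time_to_first_token(sample))             # 0.25
print(tokens_per_second_per_user(sample))      # 100.0
print(tokens_per_second_per_megawatt(sample))  # 800000.0
```

In this framing, a better interconnect shows up as a lower first value and higher second and third values.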

NVIDIA’s internal studies show that:

- In a 4-GPU mesh without a switch, performance drops because each GPU's bandwidth is divided among its direct links to the other GPUs.

- In an 8-GPU system with NVLink Switch, all-to-all connectivity is achieved, with a significant efficiency jump.

- In a 72-GPU domain, the gains grow even larger: maximum throughput and minimal latency, with a direct impact on inference costs and profit margins.
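The bandwidth-division effect can be illustrated with a deliberately simplified model, which is an assumption of this sketch rather than NVIDIA's methodology: in a direct mesh, each GPU's total link bandwidth is split evenly across point-to-point links to every peer, while behind a switch a GPU can drive its full bandwidth toward any single peer. The 1,800 GB/s per-GPU figure is reused from the NVLink 5 numbers purely for illustration, and the function names are hypothetical.

```python
def per_peer_bandwidth_mesh(per_gpu_gbps: float, num_gpus: int) -> float:
    """Direct mesh: a GPU's bandwidth is split across links to its (n - 1) peers,
    so a transfer to any one peer can use only that share."""
    return per_gpu_gbps / (num_gpus - 1)


def per_peer_bandwidth_switched(per_gpu_gbps: float) -> float:
    """Switched fabric: all links go to the switch, so a GPU can send at full
    bandwidth to any single peer (ignoring contention at the receiver)."""
    return per_gpu_gbps


def mesh_links_needed(num_gpus: int) -> int:
    """Point-to-point links required to connect every pair of GPUs directly."""
    return num_gpus * (num_gpus - 1) // 2


PER_GPU_GBPS = 1800.0  # illustrative per-GPU bandwidth (GB/s)

for n in (4, 8, 72):
    print(
        f"{n:>2} GPUs: mesh {per_peer_bandwidth_mesh(PER_GPU_GBPS, n):7.1f} GB/s per peer, "
        f"switched {per_peer_bandwidth_switched(PER_GPU_GBPS):7.1f} GB/s per peer, "
        f"{mesh_links_needed(n)} direct links for a full mesh"
    )
```

The model overstates the mesh penalty for traffic that is already spread evenly across all peers, since a mesh can use all of its links at once. Its point is that per-peer bandwidth shrinks and the number of links grows quadratically as a switchless mesh scales, which is why larger domains rely on NVLink Switch.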

In practice, at the scale of large inference services, even microseconds saved in softmax or memory transfers can add up to significant annual savings in energy or additional service capacity, potentially worth millions of dollars.

NVLink Fusion: NVIDIA’s controlled opening

Until now, these capabilities were limited to NVIDIA-designed infrastructure. With NVLink Fusion, the company opens up the full interconnect stack so that it can be incorporated into semi-custom systems:

- NVLink chiplets and SERDES.

- Switches and rack-scale topologies (NVL72 and NVL300).

- Liquid cooling ecosystem and energy management.

- Full software support (CUDA, NCCL, Triton, TensorRT-LLM).

What does this change?

Hyperscalers will be able to:

- Integrate custom CPUs with NVIDIA GPUs using NVLink-C2C, gaining access to the full CUDA-X ecosystem.

- Design hybrid XPUs (special-purpose accelerators) with NVLink connectivity via UCIe.

- Build tailor-made AI factories with modular racks that mix GPUs, DPUs, and internally designed processors.

This marks a profound change: moving away from reliance on a single closed design toward co-designing infrastructure directly around NVIDIA's interconnect technology.

Comparison: NVLink vs PCIe vs alternatives

| Technology | Bandwidth per GPU/link (bidirectional) | Latency | Scalability | Typical use |
|---|---|---|---|---|
| PCIe Gen6 (x16) | 256 GB/s | High | Limited (host-device) | HPC, standard servers |
| NVLink 5 | 1.8 TB/s | Very low | Up to 576 coherent GPUs | AI factories, NVL72 racks |
| Infinity Fabric (AMD) | ~800 GB/s | Medium | Limited to MI clusters | Instinct MI300 GPUs |
| CXL 3.0 (x16) | 256 GB/s | Medium | High potential (shared memory) | Extended memory, I/O |
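To put the bandwidth column in perspective, the sketch below estimates ideal transfer times for a hypothetical 10 GB payload (for example, a chunk of KV cache moved between devices) over NVLink 5 and PCIe Gen6. It assumes the quoted figures are bidirectional totals, so a one-way transfer sees half of each, and that the PCIe figure refers to an x16 link. It ignores protocol overhead, latency, and contention, so real transfers will be slower.

```python
# Ideal one-way transfer time for a hypothetical payload over two interconnects.
# Table figures are bidirectional totals, so one direction gets half.

PAYLOAD_GB = 10.0  # hypothetical payload size

BIDIRECTIONAL_GBPS = {
    "NVLink 5": 1800.0,      # 1.8 TB/s per GPU
    "PCIe Gen6 x16": 256.0,  # 256 GB/s per x16 link
}


def one_way_transfer_ms(payload_gb: float, bidirectional_gbps: float) -> float:
    """Time in milliseconds to move payload_gb in one direction at peak rate."""
    one_way_gbps = bidirectional_gbps / 2
    return payload_gb / one_way_gbps * 1000.0


times = {name: one_way_transfer_ms(PAYLOAD_GB, bw) for name, bw in BIDIRECTIONAL_GBPS.items()}
for name, ms in times.items():
    print(f"{name:<14} {ms:6.1f} ms")
print(f"Speedup: {times['PCIe Gen6 x16'] / times['NVLink 5']:.1f}x")
```

Under these assumptions the payload takes about 11.1 ms over NVLink 5 versus 78.1 ms over PCIe Gen6, roughly a 7x difference that matches the ratio of the two peak figures.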

NVLink's advantage lies not only in bandwidth but also in memory coherence, which lets software treat a 72-GPU domain much like a single large accelerator, reducing programming complexity.

Digital sovereignty and geopolitics

The partial opening of NVLink through Fusion also has geopolitical implications. Europe, India, and the Middle East have been pursuing AI sovereignty projects for months, seeking alternatives to relying solely on U.S. infrastructure.

With NVLink Fusion, NVIDIA seeks to:

- Maintain control of the CUDA ecosystem.

- Enable sovereign customization (in-house CPUs, integrated racks) without losing library compatibility.

- Ensure that large sovereign AI contracts (such as Indonesia's deal with NVIDIA, Cisco, and Indosat) are executed with NVLink as the de facto standard.

The future: NVLink beyond 72 GPUs

NVIDIA's roadmap points in a clear direction:

- NVLink 6, 7, and 8 are already planned, on an annual release cadence.

- Coherent domains are expected to surpass the 1,000-GPU threshold before 2028.

- Per-GPU bandwidth is projected to reach 3-5 TB/s, with photonic interconnects in development.

- The long-term vision involves multiple distributed AI factories operating as a single global supercluster, interconnected via NVLink and low-latency optical networks.

Conclusion

With NVLink Fusion, NVIDIA not only reinforces its leadership in GPU-GPU interconnection but also opens the door to a more flexible ecosystem where major cloud providers and governments can build custom AI infrastructures without abandoning the CUDA standard.

In an era where latency per token shapes the revenue of generative AI applications, NVLink Fusion is not a luxury; it is the master key to staying competitive in the superintelligence business.

Frequently Asked Questions (FAQ)

What is NVIDIA NVLink Fusion?

It’s a program that opens NVLink technology (chiplets, switches, racks, SERDES) for integration into custom CPUs and XPUs, enabling the construction of tailor-made AI infrastructures.

How does NVLink differ from PCIe?

NVLink 5 offers up to 1.8 TB/s per GPU, memory coherence, and scalability up to 576 GPUs. PCIe Gen6 is limited to 256 GB/s (x16, bidirectional) with higher latency.

Why is it critical for AI inference?

Because it enables clusters with low latency and higher throughput per watt, reducing costs and improving response times in massive services like ChatGPT or Copilot.

What role does it play in digital sovereignty?

NVLink Fusion makes it easier for countries and regions to build their own customized AI factories, with native CPUs or hybrid XPUs, while maintaining CUDA compatibility.