Nvidia’s Blackwell artificial intelligence (AI) chips, touted as a revolution in data processing, are facing significant challenges because the servers designed to host them are overheating. The problem has worried customers, who fear further delays in bringing new data centers online, according to a recent report from The Information.

Technical Issues with Servers

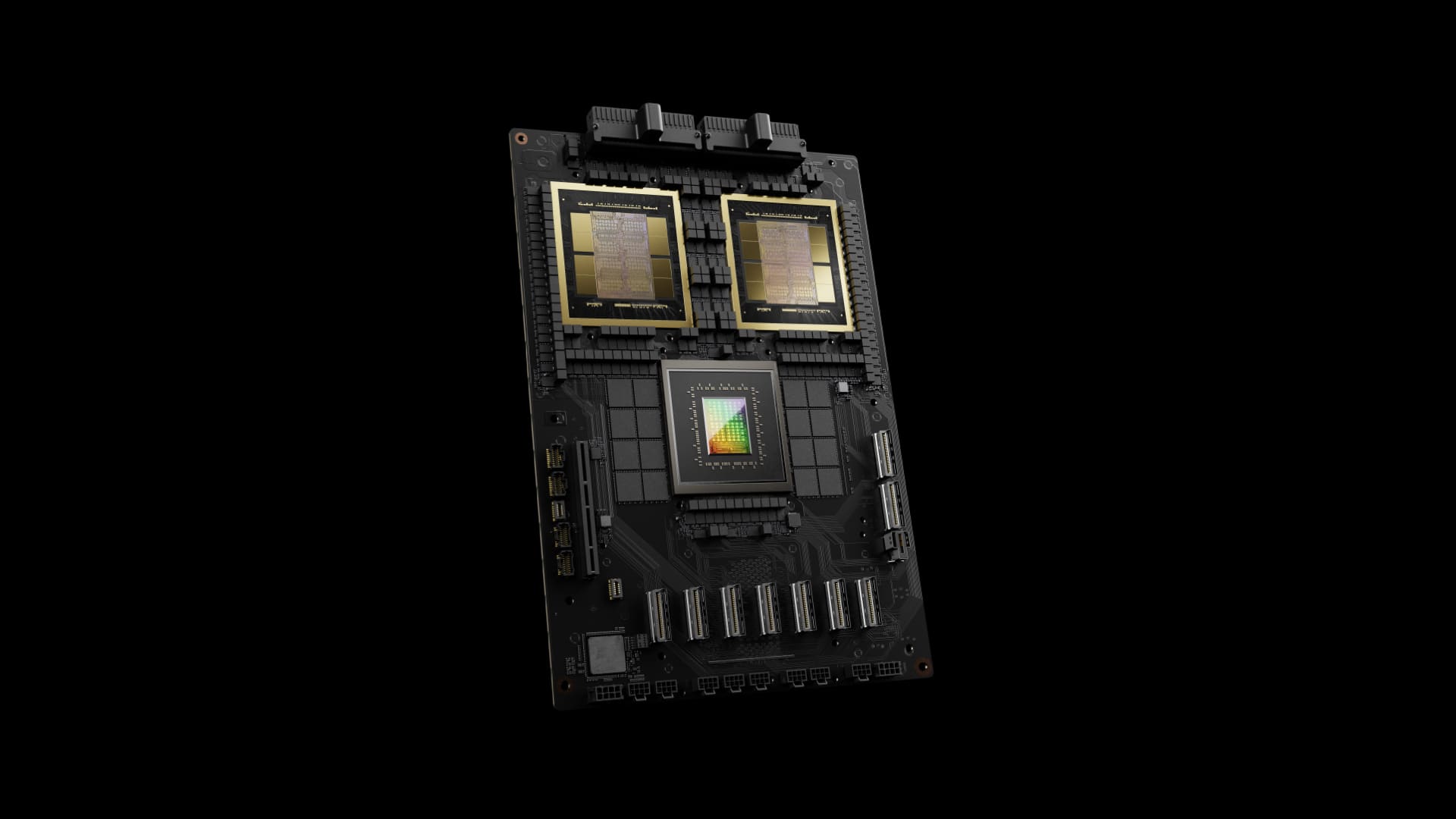

The Blackwell chips, Nvidia’s latest generation of high-performance graphics processing units (GPUs), overheat when connected together in server racks designed to hold up to 72 chips, according to people familiar with the matter. The overheating occurs when the chips run simultaneously and undermines both the stability and the expected performance of the systems.

The problem has led Nvidia to ask its suppliers to revise the rack design several times to reduce the risk of overheating. The suppliers have not been named publicly, but Nvidia employees and partners with direct knowledge of the situation have confirmed both the technical difficulties and the efforts to resolve them.

An Nvidia spokesperson told Reuters that the company is working closely with cloud service providers to address these issues, describing the engineering iterations as “normal and expected.”

Impact on Key Customers

A prolonged fix could significantly affect tech giants such as Meta Platforms, Alphabet (Google), and Microsoft, which had planned to build the Blackwell chips into their AI infrastructure. Originally slated to ship in the second quarter of 2024, the chips have already been hit by production delays and now face problems integrating into servers.

The chips combine two pieces of silicon, each the size of Nvidia’s previous chip, into a single component. According to Nvidia, this design makes them 30 times faster at tasks such as serving chatbot responses, positioning Blackwell as a key driver of generative AI applications.

The Importance of Cooling Design

The overheating highlights a recurring problem in the tech industry: the need for advanced cooling systems to dissipate the heat produced by increasingly power-hungry chips. Experts say cooling designs must evolve alongside hardware advances to avoid performance bottlenecks.

Furthermore, with the growing demand for data processing driven by AI, ensuring thermal stability and efficiency has become a strategic priority for companies like Nvidia and its cloud customers.

Future Outlook

Despite the current challenges, Nvidia is confident that it will resolve the design issues and maintain its position as a leader in chip technology for artificial intelligence. With AI playing a crucial role in sectors like cloud computing, chatbots, and advanced analytics, addressing these problems will be key to meeting industry expectations and maintaining customer trust.

While delays and technical difficulties are common in the development of new technologies, the impact on implementation timelines and associated costs could pose a significant challenge for Nvidia and its partners. For now, all eyes are on the upcoming project updates and how the company plans to ensure that the Blackwell chips meet performance and reliability expectations.

References: The Information and Reuters.