The race for artificial intelligence isn’t just happening in the data centers of big tech companies. It’s also being reshaped in laboratories, universities, and research centers that aim to answer much deeper questions: how galaxies form, how earthquakes propagate, which materials will enable longer-lasting batteries, or how to model an increasingly extreme climate.

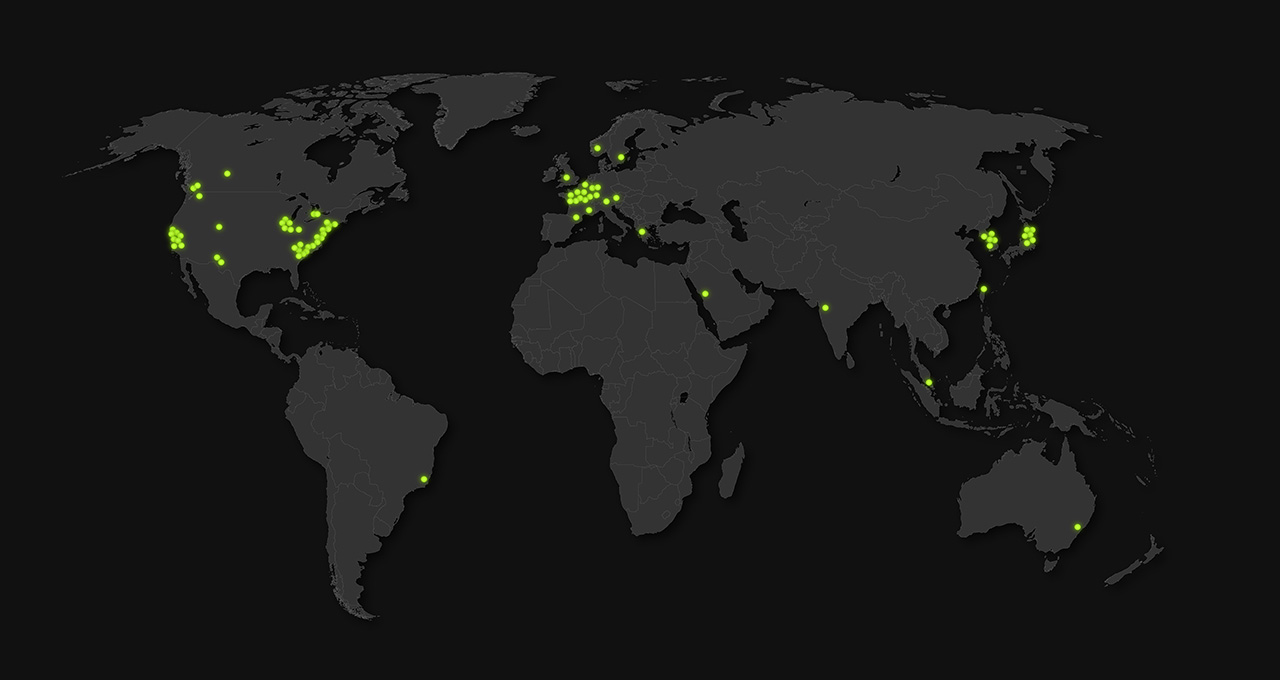

At the SC25 conference in St. Louis, USA, NVIDIA quantified this scientific revolution: more than 80 new science systems based on its accelerated computing platform have been deployed in the past year, for a combined capacity of about 4,500 exaflops of AI performance. In practice, this is a global network of “digital microscopes” that use GPUs, specialized CPUs, and ultra-low-latency networks to conduct science at an unprecedented scale.

Among them are Horizon, the new and most powerful academic supercomputer in the United States; the seven AI systems of the Department of Energy (DOE) located at Argonne and Los Alamos; the European exascale system JUPITER; and a growing constellation of AI machines in Japan, South Korea, and Taiwan.

Horizon: The new academic giant in Texas

The Texas Advanced Computing Center (TACC) is gearing up to debut Horizon, a supercomputer aiming to become the backbone of U.S. academic science starting in 2026.

Its technical details are impressive:

- 4,000 NVIDIA Blackwell GPUs

- Up to 80 exaflops of AI compute in FP4 precision

- Servers with NVIDIA GB200 NVL4 and NVIDIA Vera CPUs

- Interconnect network based on NVIDIA Quantum-X800 InfiniBand

Far from being a generic machine, Horizon is designed with very specific scientific use cases in mind:

- Disease mechanics simulation, using molecular dynamics and AI models to understand how viruses and proteins act at an atomic scale.

- Large-scale astrophysics, modeling star and galaxy formation while reinterpreting James Webb Space Telescope observations.

- New quantum materials, studying turbulence, complex crystal structures, and conductivity in advanced materials.

- Seismology and seismic risk, simulating wave propagation and fault rupture to improve earthquake risk maps.

For the U.S. scientific ecosystem, Horizon is more than just a new entry in rankings: it’s an infrastructure designed from the ground up to combine classical simulation and generative AI, making this capability accessible to thousands of researchers in biology, physics, engineering, and geosciences.

Seven AI supercomputers for the DOE: Solstice, Equinox, Mission, and Vision

The other big announcement comes from the U.S. Department of Energy (DOE), which has partnered with NVIDIA to build seven new AI supercomputers across two key laboratories: Argonne National Laboratory (ANL) in Illinois, and Los Alamos National Laboratory (LANL) in New Mexico.

Argonne: Solstice and Equinox

At Argonne, several systems based on NVIDIA Blackwell GPUs and NVIDIA networking will be deployed:

- Solstice, the largest, will feature 100,000 Blackwell GPUs. A system of this size, built from NVIDIA GB200 NVL72 rack-scale systems, can reach about 1,000 exaflops of AI training compute. To put it in perspective: this is more than 50% above the combined AI training capacity of all systems in the June 2025 TOP500.

- Equinox, with around 10,000 Blackwell GPUs, will complement this capacity for other scientific and energy applications.

- Three additional systems (Minerva, Janus, and Tara) will focus on AI model inference and on training a workforce skilled in advanced AI techniques for science.
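The Solstice figures quoted above can be turned into two back-of-the-envelope numbers: the implied peak throughput per GPU, and the ceiling that the "more than 50% above" claim places on the combined TOP500 figure. This is only a sketch of the arithmetic; the variable names are illustrative, and the inputs are the peak figures from this article, not measured results.

```python
# Figures quoted for Solstice in this article (peak values, not measurements).
SOLSTICE_GPUS = 100_000
SOLSTICE_EXAFLOPS = 1_000      # AI training compute
MARGIN_OVER_TOP500 = 0.50      # "more than 50% above"

# 1 exaflop = 1,000 petaflops.
per_gpu_petaflops = SOLSTICE_EXAFLOPS * 1_000 / SOLSTICE_GPUS

# If Solstice exceeds the combined list by more than 50%,
# the list total must be below Solstice / 1.5.
top500_upper_bound = SOLSTICE_EXAFLOPS / (1 + MARGIN_OVER_TOP500)

print(f"{per_gpu_petaflops:.0f} petaflops per GPU")
print(f"TOP500 combined AI training compute < {top500_upper_bound:.0f} exaflops")
```

In other words, the claim implies roughly 10 petaflops of AI training compute per GPU and a combined TOP500 total below about 667 exaflops on the same metric.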

The DOE’s vision is clear: connect these supercomputers with the nation’s scientific instrument network (from reactors to accelerators and telescopes) and turn the data they generate into AI models capable of accelerating discoveries in fusion, power grids, new fuels, or advanced materials.

Los Alamos: Mission and Vision

At Los Alamos, this effort materializes in two systems built in collaboration with HPE, based on the NVIDIA Vera Rubin platform and Quantum-X800 InfiniBand networks:

- Mission: intended for classified applications of the National Nuclear Security Administration, where advanced simulation and AI are essential for nuclear security without testing.

- Vision: focused on open science, foundation models, and agentic AI systems applied across multiple disciplines.

Both systems are expected to be operational by 2027, reinforcing the message that AI is no longer just a supplement for scientific simulation but a key player in the strategic goals of major national laboratories.

Europe joins the exascale race with JUPITER and plans new sovereign AI systems

On the European front, the focus is on the Jülich Supercomputing Centre (JSC) in Germany. Its supercomputer JUPITER has surpassed the exaflop barrier in the HPL benchmark, which measures double-precision floating-point performance (FP64).

Its key components and primary use case:

- 24,000 NVIDIA GH200 Grace Hopper superchips

- NVIDIA Quantum-2 InfiniBand interconnect

- Primary use case: high-resolution global climate simulation

With more than 1 exaflop of FP64 performance, JUPITER can run global climate simulations at kilometer-scale resolution, a level of detail that was previously impractical, dramatically enhancing the modeling of extreme events, ocean circulation, and cloud patterns.
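To get a feel for why kilometer-scale resolution is so demanding, the sketch below counts horizontal grid columns for a global model at two spacings. It rests on assumptions that are not from the source: an Earth surface area of roughly 510 million km², a uniform grid, a 100 km grid as the illustrative coarse baseline, and a time step that shrinks in proportion to grid spacing. Real climate models use more elaborate grids and many vertical levels, so treat the result as an order-of-magnitude estimate only.

```python
EARTH_SURFACE_KM2 = 5.1e8  # approximate surface area of Earth (assumption)


def horizontal_columns(spacing_km: float) -> float:
    """Approximate number of grid columns for a uniform horizontal spacing."""
    return EARTH_SURFACE_KM2 / spacing_km**2


def relative_cost(coarse_km: float, fine_km: float) -> float:
    """Rough cost ratio: more columns, times proportionally more time steps."""
    column_ratio = horizontal_columns(fine_km) / horizontal_columns(coarse_km)
    step_ratio = coarse_km / fine_km  # assumption: time step shrinks with spacing
    return column_ratio * step_ratio


print(f"{horizontal_columns(100):,.0f} columns at 100 km")
print(f"{horizontal_columns(1):,.0f} columns at 1 km")
print(f"~{relative_cost(100, 1):,.0f}x the compute of a 100 km run")
```

Under these assumptions, going from a 100 km grid to a 1 km grid multiplies the number of columns by 10,000 and the overall compute by roughly a million, which is why such runs call for exascale machines.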

But JUPITER isn’t alone. Over the past year, other NVIDIA-accelerated European systems have been announced:

- Blue Lion (Germany, LRZ): planned for 2027 and based on NVIDIA Vera Rubin, supporting research in climate, turbulence, fundamental physics, and machine learning.

- Gefion (Denmark): the country’s first AI supercomputer, an NVIDIA DGX SuperPOD operated by DCAI, aimed at sovereign AI applications in quantum computing, clean energy, and biotech.

- Isambard-AI (UK): the country’s most powerful AI system, at the University of Bristol, supporting projects like Nightingale AI (a multimodal model trained on NHS data) and UK-LLM, an initiative to develop native language models tailored to British English, Welsh, and other local languages.

Together, these projects depict a Europe beginning to see accelerated computing as a pillar of its scientific and digital sovereignty, from climate to public health.

Japan, Korea, and Taiwan: AI factories for science and industry

In Asia, the deployment of AI supercomputers is driven both by sovereign AI strategies and by major industrial players.

Japan: from FugakuNEXT to quantum AI

The RIKEN institute, Japan’s leading research center, announced during SC25 that it will incorporate NVIDIA GB200 NVL4 systems into two new supercomputers:

- A system with 1,600 GPUs dedicated to applied scientific AI.

- A system with 540 GPUs for quantum computing research.

Additionally, RIKEN is collaborating with Fujitsu and NVIDIA on FugakuNEXT (codename), the successor to the iconic Fugaku. This future supercomputer will combine Fujitsu FUJITSU-MONAKA-X CPUs with NVIDIA technologies linked via NVLink Fusion. It will target Earth modeling, drug discovery, and advanced manufacturing.

Tokyo University of Technology has launched an AI supercomputer using NVIDIA DGX B200 systems, capable of reaching 2 exaflops of FP4 theoretical performance with fewer than 100 GPUs. Its mission: develop large language models and digital twins to train the next generation of AI specialists.

Meanwhile, AIST (Japan’s National Institute of Advanced Industrial Science and Technology) has introduced ABCI-Q, considered the largest research supercomputer dedicated to quantum computing, with over 2,000 NVIDIA H100 GPUs.

South Korea and Taiwan: industrial-scale AI factories

The South Korean government announced plans to deploy more than 50,000 NVIDIA GPUs across sovereign clouds and “AI factories.” Major corporations like Samsung, SK Group, and Hyundai Motor Group are building their own GPU-based infrastructure to accelerate everything from chip design to autonomous vehicles and industrial robots.

In Taiwan, NVIDIA collaborates with Foxconn (Hon Hai Technology Group) to establish an AI factory supercomputer with 10,000 Blackwell GPUs, aiming to support startup projects, universities, and local industries.

A universal scientific instrument… and a challenge of energy efficiency

Behind all these systems lies a common architecture: the complete NVIDIA accelerated computing platform, which integrates:

- GPUs and superchips (H100, Blackwell, GB200, GH200 Grace Hopper, etc.)

- CPUs such as NVIDIA Vera

- DPUs and smart network cards

- High-speed networks like Quantum-2 and Quantum-X800 InfiniBand

- CUDA-X libraries and NVIDIA AI Enterprise software

This hardware and software integration enables researchers to reuse models, pipelines, and tools across a wide range of systems—from laboratory DGX units to exascale platforms like JUPITER or future systems like Solstice.

At the same time, the leap to exaflops and thousands of GPUs raises an unavoidable question: how to make this infrastructure sustainable in energy terms. NVIDIA’s approach relies on high-efficiency architectures (more computation per watt), optimized networks, and new programming techniques that maximize GPU utilization. Still, the debate over AI’s energy impact, and over science’s role in mitigating it, will remain vital in the coming years.

What’s clear is that the combination of simulation, data, and AI has moved from an experimental approach to the new universal tool of science. From global climate modeling to digital biology, particle physics, or materials engineering, NVIDIA-accelerated supercomputers are redefining what “limit” means in scientific computing.

Frequently Asked Questions

What does it mean that these systems total about 4,500 exaflops of AI performance?

One exaflop equals a quintillion (10¹⁸) floating-point operations per second. That more than 80 NVIDIA-accelerated science systems together reach roughly 4,500 exaflops indicates the scientific community now has a massive capacity to train and run AI models, far surpassing what was seen a few years ago in traditional supercomputing centers.
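As a rough illustration of these units, the sketch below converts exaflops to petaflops per GPU for Horizon and averages the 4,500-exaflop total over 80 systems. The helper name `per_gpu_petaflops` is made up for this example, and the inputs are the peak, low-precision figures quoted in this article, so the results describe theoretical ceilings rather than sustained performance.

```python
# Unit helpers: 1 petaflop/s = 1e15 FLOP/s, 1 exaflop/s = 1e18 FLOP/s.
PETA = 10**15
EXA = 10**18


def per_gpu_petaflops(system_exaflops: float, gpu_count: int) -> float:
    """Implied peak throughput per GPU, in petaflops."""
    return system_exaflops * EXA / gpu_count / PETA


# Horizon as quoted in this article: up to 80 exaflops (FP4) on 4,000 GPUs.
horizon = per_gpu_petaflops(80, 4_000)

# The article says "more than 80" systems, so this average is an upper bound.
average_per_system = 4_500 / 80

print(f"Horizon: {horizon:.0f} petaflops per GPU (FP4 peak)")
print(f"Average: at most {average_per_system:.2f} exaflops per system")
```

The average hides a wide spread: a few very large machines account for much of the total, while many systems are far smaller.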

How does an AI supercomputer differ from a “traditional” supercomputer?

Traditional supercomputers have historically focused on high-precision numeric simulations, primarily using CPUs. AI supercomputers combine this with large GPU clusters, high-bandwidth memory, and low-latency networks, optimized for training AI models, processing massive datasets, and running hybrid workloads where simulation and AI complement each other.

Why do many countries talk about “sovereign AI” when building these systems?

Sovereign AI refers to a country’s ability to train and run its own AI models using local data, without relying entirely on infrastructure controlled by external parties. Systems like Gefion in Denmark or Isambard-AI in the UK enable governments, universities, and companies to develop models tailored to their language, healthcare system, regulations, and industrial fabric, maintaining control over data and infrastructure.

What real impact will this new wave of supercomputers have on daily life?

While these machines are far from end users, their impact will manifest in more precise and efficient products and services: better weather and climate predictions, new medicines developed with AI assistance, safer cars and aircraft, longer-lasting batteries, optimized industrial processes, and overall faster translation of scientific advances into market-ready technologies.

Sources:

– NVIDIA Blog – NVIDIA Accelerates AI for Over 80 New Science Systems Worldwide

– Public NVIDIA documentation on Blackwell, GB200, GH200, Vera Rubin, and Quantum-X/Quantum-2 architectures

via: blogs.nvidia