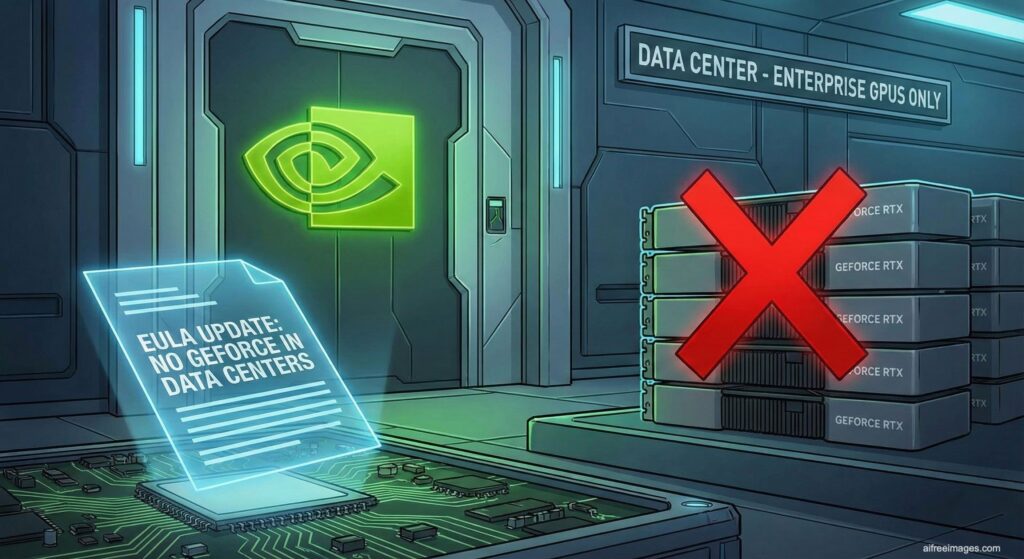

NVIDIA has introduced a key change to the license that governs the drivers for its GeForce and Titan graphics cards: the software can no longer be used in data center deployments, with a single explicit exception for blockchain processing.

What looks on paper like a change to the “fine print” of the End User License Agreement (EULA) has direct implications for companies, universities, cloud providers, and any organization that relies on consumer GPUs in its racks for AI workloads, HPC, or virtualization.

“No Datacenter Deployment”: the new, controversial clause

The EULA published by NVIDIA states, verbatim:

“No Datacenter Deployment. The software is not licensed for datacenter deployment, except that blockchain processing in a datacenter is permitted.”

In other words, GeForce/Titan drivers are no longer licensed to run in data center environments. The license does not define “data center”; in practice the term is read as professional deployments that run 24/7, span multiple racks, and serve multiple users.

NVIDIA says it does not intend to target researchers or individual users who run these cards for non-commercial projects, or labs that do not operate “at data center scale.” However, this clarification appears only in public statements, not in the legal text itself:

- According to NVIDIA, GeForce and Titan “are not designed for complex hardware, software, and cooling requirements of 24/7 data center deployments”.

- The stated goal is to “discourage misuse” of consumer products in demanding enterprise environments.

In practice, the company reserves the right to treat any use of GeForce/Titan in a data center or public cloud as falling outside the license.

A move that steers data centers toward Tesla and professional RTX

Although the official justification centers on technical and reliability concerns, the industry reads the change as a way to redirect demand toward the professional lines:

- The Tesla, A-series, and data center RTX series offer features designed for enterprise environments: extended support, specific drivers, remote management, validation for 24/7 workloads, etc.

- But they also cost significantly more than consumer GeForce GPUs with comparable compute capacity.

For years, many labs, startups, and hosting providers have built AI nodes and GPU computing setups from GeForce “gaming” cards: the same CUDA architecture at a much lower cost. The new EULA puts this model on uncertain legal footing.

Meanwhile, AI has driven GPU demand sky-high. As early as 2017, NVIDIA’s stock was surging on the strength of early deep learning projects, and the company has since established itself as the go-to provider of AI accelerators thanks to its CUDA + cuDNN ecosystem. Limiting GeForce use in data centers fits a clear strategy of separating consumer products from data center products.

What about Linux, FreeBSD, and academic use?

The license includes a specific exception for software designed exclusively for Linux or FreeBSD, which may be copied and redistributed as long as the binaries are not modified. However, the data center restriction still applies: this exception covers redistribution, not where the software may be used.

Nonetheless, NVIDIA has sent reassuring messages to the research community:

“We recognize that researchers often use GeForce and Titan for non-commercial or research purposes that do not operate at data center scale. NVIDIA does not intend to prohibit these uses.”

This leaves a narrow gray area for university labs, research groups, and small teams, as long as they do not operate a large-scale computing service or commercial cloud. Still, from a legal standpoint, the license text is unambiguous: the driver is not licensed for data center deployments.

Impact on companies, hosting providers, and small cloud vendors

For many organizations, this change isn’t just theoretical. It affects several levels:

- Hosting and colocation providers offering servers with GeForce GPUs for AI, rendering, or VDI.

- Companies operating internal training or inference farms with consumer cards to cut costs.

- Startups delivering services on third-party infrastructure that employ GeForce GPUs at scale.

In all these cases, continuing to use GeForce in the data center violates the driver’s EULA. This may lead to:

- Loss of official support from NVIDIA.

- Contractual risks with clients demanding license compliance.

- Problems during software compliance audits.

The alternatives include migrating to data center-grade GPUs (the Tesla/RTX professional lines) or turning to other vendors with different licensing policies, such as AMD Instinct accelerators (formerly Radeon Instinct) with ROCm, which AMD is pushing to gain ground in AI and HPC.

What if “nobody finds out”? The practical dilemma

Many administrators wonder whether NVIDIA can reliably detect if a GeForce card is installed in a gaming PC or a data center server. From a technical perspective, detection is tricky, but the issue isn’t espionage; it’s compliance:

- A service contract, an audit, or an incident could reveal unlicensed use.

- For projects with public funding, corporate clients, or certifications (ISO, ENS, etc.), ignoring the EULA is generally a bad idea.

NVIDIA’s move effectively forces a deliberate decision: either accept the cost of switching to “official” data center hardware, or knowingly operate outside the license terms.

A note on the growing concentration of power in the GPU ecosystem

Beyond the legal considerations, this change highlights how dependent the AI ecosystem is on a single provider. When one company controls the hardware, the software stack, and the development tools, decisions like this can abruptly reshape the economics of many projects.

NVIDIA’s move could accelerate:

- The shift towards open alternatives (ROCm, multi-backend frameworks).

- The emergence of new hardware players specialized in AI.

- In the medium term, increased regulatory pressure to curb abusive segmentation practices in key markets such as AI.

Frequently Asked Questions about the GeForce ban in data centers

Can I continue using a GeForce GPU in my company’s server?

If that server is part of a data center deployment (a rack in a data center, a multi-user service, 24/7 operation, etc.), the EULA states that the driver software is not licensed for that use. From a legal standpoint, the safest option is to switch to professional GPUs (Tesla/data center RTX) or other alternatives.

Does the prohibition also affect small or research projects?

NVIDIA has publicly stated that it does not intend to restrict non-commercial research uses that do not operate at “data center scale.” However, that intent is not stated in the license text, so each organization should assess the risks with its legal counsel.

Is there any exception to the data center ban for GeForce and Titan?

Yes: the EULA states that “blockchain processing in a datacenter is permitted,” which covers mining and other blockchain workloads. For any other purpose (AI, HPC, VDI, rendering, etc.), the driver is not licensed for data center use.

What options does a company have if it previously used GeForce in its data center?

Main options include:

- Migrating to NVIDIA data center GPUs (Tesla/RTX with specific drivers and licenses).

- Considering GPUs from other manufacturers, such as AMD Instinct (formerly Radeon Instinct) with ROCm, or other acceleration solutions.

- Redesigning the architecture to rely on third-party cloud services, where license compliance is the provider’s responsibility.