Artificial intelligence continues to advance rapidly, along with the hardware development capable of supporting increasingly complex and demanding models. NVIDIA has announced Rubin CPX, a GPU that not only represents an evolution over previous generations but also inaugurates a new category within the CUDA ecosystem: massive context inference processors.

The company claims that Rubin CPX will enable AI systems to work with context windows of up to one million tokens, something unimaginable just two years ago, and will have a direct impact on two high-growth areas: and multimodal video generation.

A new category in AI hardware

NVIDIA describes Rubin CPX as the first CUDA GPU designed to support massive-scale contexts, where a model processes not just a few thousand units of information but hundreds of thousands or even millions.

This opens the door for programming copilots to go beyond generating code snippets and to understand entire projects: complete repositories, accumulated documentation, and even interaction histories. Meanwhile, video applications — from semantic search engines to cinematic generation — will be able to process one hour of content in a single context, which requires on the order of a million tokens.

To achieve this, Rubin CPX incorporates long-range attention accelerators, video codecs, and a silicon design focused on energy efficiency and high performance. According to NVIDIA, the new GPU offers up to 30 petaflops in NVFP4 precision, includes 128 GB of cost-optimized GDDR7, and triples the attention capacity of systems like GB300 NVL72.

Vera Rubin NVL144 CPX: an 8 Exaflop AI rack

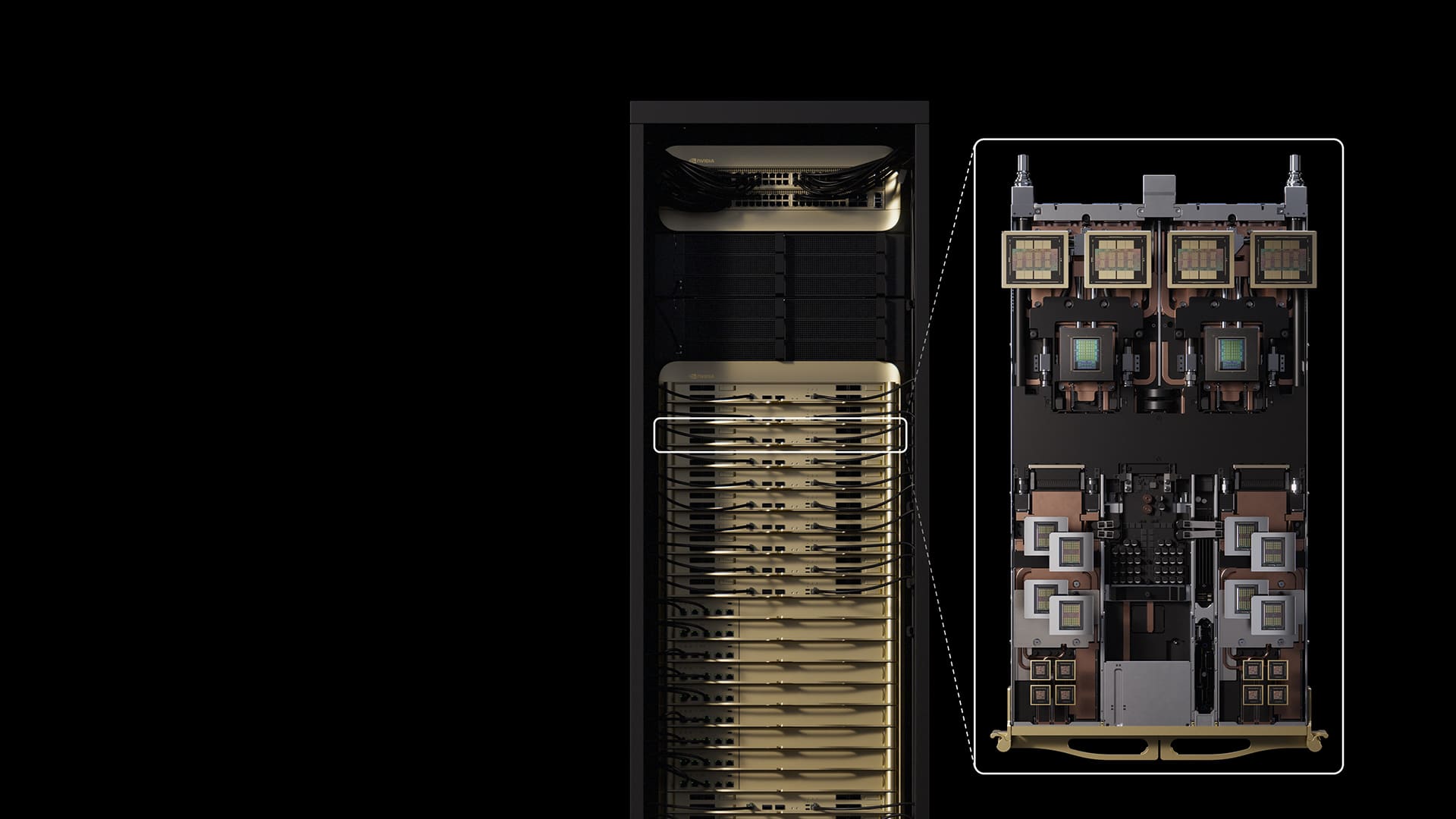

The launch isn’t limited to the GPU. Rubin CPX debuts within the NVIDIA Vera Rubin NVL144 CPX platform, a system combining Vera CPUs, Rubin GPUs, and new CPX processors in a configuration capable of delivering 8 exaflops of AI in a single rack.

This setup also includes 100 TB of fast memory and 1.7 petabytes per second of memory bandwidth, representing a performance leap of 7.5 times compared to previous generations. For those operating existing NVL144 systems, NVIDIA will also offer a CPX compute tray, designed to expand capabilities without replacing entire infrastructure.

Jensen Huang: “Massive context AI marks the next frontier”

During the presentation, NVIDIA CEO Jensen Huang compared this launch to other milestones in the company’s history:

“Just as RTX revolutionized graphics and physical AI, Rubin CPX is the first CUDA GPU designed for massive-scale contextual AI. Models no longer work with thousands of tokens but with millions, demanding a completely new architecture.”

With this strategy, the company aims to respond to a market that is rapidly moving toward context windows of six or seven figures, in both language models and multimodal applications.

From research to business: the “per token income” metric

Beyond technical specifications, NVIDIA emphasizes an economic perspective. The company estimates that systems based on Rubin CPX could generate $5 billion in revenue per tokens per every $100 million investment in infrastructure.

This figure depends on factors like market price per token or the type of model used, but it sends a clear message: hardware can be a direct multiplier of business revenue in AI platforms that bill based on processed tokens, whether in code copilots, video search engines, or multimodal assistants.

Initial use cases: software, cinema, and autonomous agents

Various companies share how Rubin CPX will impact their products:

- Cursor, an AI-powered code editor, states that Rubin CPX will enable ultra-fast code generation and agents that understand entire software bases, improving human-machine collaboration.

- Runway, focused on generative video AI, sees the GPU as allowing work with longer formats and agentized flows, giving creators greater control and realism in their productions.

- Magic, a developer of models for software engineering agents, claims that with 100-million-token windows, their systems can access years of interaction history, libraries, and documentation without retraining—bringing us closer to autonomous programming agents.

These examples reflect a common pattern: scale jump. Rubin CPX aims not just to improve existing workflows by 10 or 20%, but to enable workloads that were previously infeasible.

Software ecosystem: Dynamo, Nemotron, and NIM

As is typical with NVIDIA, the launch is accompanied by a software stack that facilitates adoption:

- NVIDIA Dynamo: scalable inference platform to reduce latency and costs.

- Nemotron™ models: a multimodal family designed for advanced reasoning in enterprise agents.

- NVIDIA AI Enterprise: distribution including NIM™ microservices, libraries, and frameworks ready for production across clouds, data centers, and accelerated workstations.

- CUDA-X™: with over 6 million developers and nearly 6,000 applications, remains the ecosystem foundation.

The goal is clear: to enable clients to go from prototypes to production seamlessly, leveraging Rubin CPX capabilities with familiar software tools.

Availability and roadmap

NVIDIA anticipates that Rubin CPX will be available by late 2026. This timeline offers hyperscalers and large corporations ample time to plan deployment and adjust their data architectures.

Meanwhile, the company will continue expanding the Rubin family and integrating with its network platforms, such as Quantum-X800 InfiniBand and Spectrum-X Ethernet, aiming to support both traditional HPC and cloud environments based on Ethernet.

Industry implications

The Rubin CPX announcement sends a dual message to the market:

- Technological: the frontier is no longer in increasing parameters but in expanding the context a model can handle in real-time.

- Economic: value will be measured in useful tokens processed with competitive latencies and manageable costs. Those who optimize this equation will gain an advantage in the generative agents and assistants market.

Rubin CPX aims to be the core of this equation, providing both the technical power and economic framework to make large-scale AI deployments profitable.

Conclusion

With Rubin CPX, NVIDIA is not just launching a new GPU but proposing a paradigm shift: shifting from measuring performance in FLOPS to measuring it in tokens processed within vast contexts.

As demand for AI grows exponentially and use cases become more sophisticated, this initiative positions the company at the forefront of the future of AI inference.

Frequently Asked Questions (FAQ)

What is NVIDIA Rubin CPX?

It’s a new GPU designed for massive context inference, capable of handling up to millions of tokens in code and video applications.

What are the key technical specifications?

Rubin CPX delivers 30 petaflops in NVFP4 precision, features 128 GB of GDDR7, provides 3x faster attention than previous generations, and is part of the Vera Rubin NVL144 CPX platform with 8 exaflops of AI in a single rack.

Which applications will benefit most?

Programming assistants analyzing complete codebases, multimodal video generation and search, and autonomous agents managing large amounts of information.

When will it be available?

NVIDIA expects to release Rubin CPX by late 2026, with full support integrated into their AI software ecosystem.

via: nvidianews.nvidia