ROSCon 2025. The global community behind Robot Operating System (ROS)—the most widespread open framework for building robots—comes together again with a clear message: the next wave of robotics will be open, GPU-accelerated, with physical AI at its core. In this context, NVIDIA has announced a series of technical contributions and partnerships within the ecosystem—including the Open Source Robotics Alliance (OSRA)—aiming to standardize critical capabilities, accelerate the development of real robots, and close the gap between simulation and deployment.

The announcements combine code and standards (direct contributions to ROS 2 and support for a new Special Interest Group), open-source tools (for performance diagnostics), and production-ready platforms (accelerated libraries and AI models), all with one goal in mind: ensuring ROS 2 becomes the open, high-performance framework for robotic applications in the physical world.

Political and technical signaling: a “Physical AI” SIG within OSRA

Starting with governance, NVIDIA has confirmed its support for the new Physical AI Special Interest Group (SIG) within OSRA. This SIG will focus on three fronts that currently define the boundary between a prototype and a production robot:

- Real-time control: determinism and consistent latencies for control loops.

- Efficient GPU-based AI processing: local inference (vision, scene understanding, planning) using integrated or discrete GPUs.

- Enhanced development tools: from telemetry and profiling to reproducible sim-to-real workflows for autonomous behaviors.

This move is significant: it places these needs within the umbrella of open standards, aligning manufacturers, labs, and startups to grow ROS 2 with native primitives capable of leveraging heterogeneous hardware.
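To make the real-time control front more concrete: one common way to quantify determinism is to measure how far each control-loop period drifts from its target, usually called jitter. The following is only a minimal sketch; the function name `jitter_stats` and the choice of mean and maximum absolute deviation as metrics are assumptions made for illustration, not something defined by the SIG or by ROS 2.

```python
import statistics


def jitter_stats(timestamps, period):
    """Return (mean, max) absolute deviation from the target period.

    timestamps: wake-up times of a control loop, in seconds, in order.
    period: the nominal loop period, in seconds.
    """
    if len(timestamps) < 2:
        raise ValueError("need at least two timestamps")
    # Actual time elapsed between consecutive loop iterations.
    intervals = [b - a for a, b in zip(timestamps, timestamps[1:])]
    # How far each iteration landed from the nominal period.
    deviations = [abs(interval - period) for interval in intervals]
    return statistics.fmean(deviations), max(deviations)


if __name__ == "__main__":
    # A nominal 100 Hz loop where one iteration fires 1 ms late.
    mean_jitter, max_jitter = jitter_stats([0.000, 0.010, 0.021, 0.030], 0.010)
    print(f"mean jitter: {mean_jitter * 1e3:.3f} ms, max: {max_jitter * 1e3:.3f} ms")
```

In practice the maximum usually matters more than the mean for control loops, since a single late iteration can destabilize a controller even when the average looks healthy.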

Enhancement to the ROS 2 core: “GPU-aware abstractions”

Beyond the discourse, there’s code: NVIDIA is contributing “GPU-aware” abstractions directly to ROS 2. What does this mean? That the framework can better understand and manage different processor types (CPU, integrated and discrete GPUs), coordinate data flow, and schedule tasks coherently with the underlying architecture, all in a high-performance, consistent manner.

This layer of future-proof abstraction safeguards the ecosystem: as new NPU, GPU, or hybrid SoCs emerge, ROS 2 will have hooks to maximize silicon capabilities without breaking software portability. For developers, this translates into less ad-hoc glue code, fewer invisible latencies, and more throughput in perception pipelines, SLAM, planning, or manipulation tasks.
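The announcement does not describe the actual API, so the following is purely an illustrative sketch of the kind of decision a processor-aware layer could make: picking where to run a task by weighing compute speed against the cost of copying data to a device that does not share host memory. Every name here (`Processor`, `estimated_ms`, `place`) and every number is hypothetical and is not part of ROS 2 or of NVIDIA's contribution.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Processor:
    name: str
    kind: str                 # "cpu", "igpu", or "dgpu"
    throughput: float         # work units per millisecond (assumed figure)
    shares_host_memory: bool  # True if no copy is needed to reach it


def estimated_ms(proc, work_units, payload_mb, copy_ms_per_mb):
    """Rough cost model: compute time plus a round-trip copy if needed."""
    compute = work_units / proc.throughput
    transfer = 0.0 if proc.shares_host_memory else 2 * payload_mb * copy_ms_per_mb
    return compute + transfer


def place(processors, work_units, payload_mb, copy_ms_per_mb=0.1):
    """Pick the processor with the lowest estimated end-to-end time."""
    return min(
        processors,
        key=lambda p: estimated_ms(p, work_units, payload_mb, copy_ms_per_mb),
    )


if __name__ == "__main__":
    procs = [
        Processor("cpu0", "cpu", throughput=1.0, shares_host_memory=True),
        Processor("igpu0", "igpu", throughput=10.0, shares_host_memory=True),
        Processor("dgpu0", "dgpu", throughput=50.0, shares_host_memory=False),
    ]
    # Light task: the copy overhead outweighs the discrete GPU's speed.
    print(place(procs, work_units=10, payload_mb=8).name)
    # Heavy task: raw throughput dominates, so the copy is worth paying.
    print(place(procs, work_units=1000, payload_mb=8).name)
```

The point of the sketch is the trade-off itself: without some model of the hardware, a framework cannot tell when moving data to a faster processor costs more than it saves.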

Greenwave Monitor: open-source performance diagnostics

A common missing piece in field robotics is observability. NVIDIA has announced the open-source release of Greenwave Monitor, a tool that allows quick identification of bottlenecks during development. The goal: shorten the cycle of measure-find-optimize so teams spend less time hunting down node “stalls” and more refining their robot architecture.

In a modern stack—featuring ROS 2, CUDA acceleration, AI models, and sensor drivers—a performance viewer that speaks the framework’s “language” is a direct shortcut to more reliable robots with predictable performance.
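As a rough idea of what such diagnostics measure, the sketch below tracks the observed publish rate of a single message stream over a sliding window and flags it when it falls below an expected frequency. It is a standalone illustration and does not use or reproduce Greenwave Monitor's interface; the class name `RateMonitor`, its parameters, and the 10% tolerance are all assumptions.

```python
from collections import deque


class RateMonitor:
    """Sliding-window rate check for one message stream."""

    def __init__(self, expected_hz, tolerance=0.1, window=50):
        self.expected_hz = expected_hz
        self.tolerance = tolerance          # allowed fractional shortfall
        self.stamps = deque(maxlen=window)  # most recent arrival times (s)

    def tick(self, stamp):
        """Record the arrival time of one message."""
        self.stamps.append(stamp)

    def rate_hz(self):
        """Observed rate over the window, or None if not yet measurable."""
        if len(self.stamps) < 2:
            return None
        span = self.stamps[-1] - self.stamps[0]
        if span <= 0:
            return None
        return (len(self.stamps) - 1) / span

    def is_healthy(self):
        rate = self.rate_hz()
        return rate is not None and rate >= self.expected_hz * (1 - self.tolerance)


if __name__ == "__main__":
    camera = RateMonitor(expected_hz=30.0)
    for i in range(10):
        camera.tick(i / 20.0)  # messages actually arriving at 20 Hz
    print(camera.rate_hz(), camera.is_healthy())
```

In a real node the timestamps would come from message headers or the subscription callback rather than from a synthetic loop.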

Isaac ROS 4.0 arrives on Jetson Thor: CUDA libraries and AI models ready for “manipulation and mobility”

At the platform level, NVIDIA confirms the availability of Isaac ROS 4.0, a set of GPU-accelerated ROS-compatible libraries and AI models—designed for manipulation and mobility—on the new Jetson Thor platform. For developers, this means:

- Access to CUDA-accelerated libraries (perception, point cloud processing, DNNs, image pipelines) integrated with ROS 2.

- AI models trained and optimized for edge inference (detection, segmentation, pose estimation, grasping).

- Workflows aligned with the framework (nodes, messages, launch files), reducing friction between R&D and on-robot deployment.

The Jetson Thor + Isaac ROS 4.0 combo echoes the physical AI mantra: “process where things happen”, with low latency and no cloud costs per perception-action cycle.

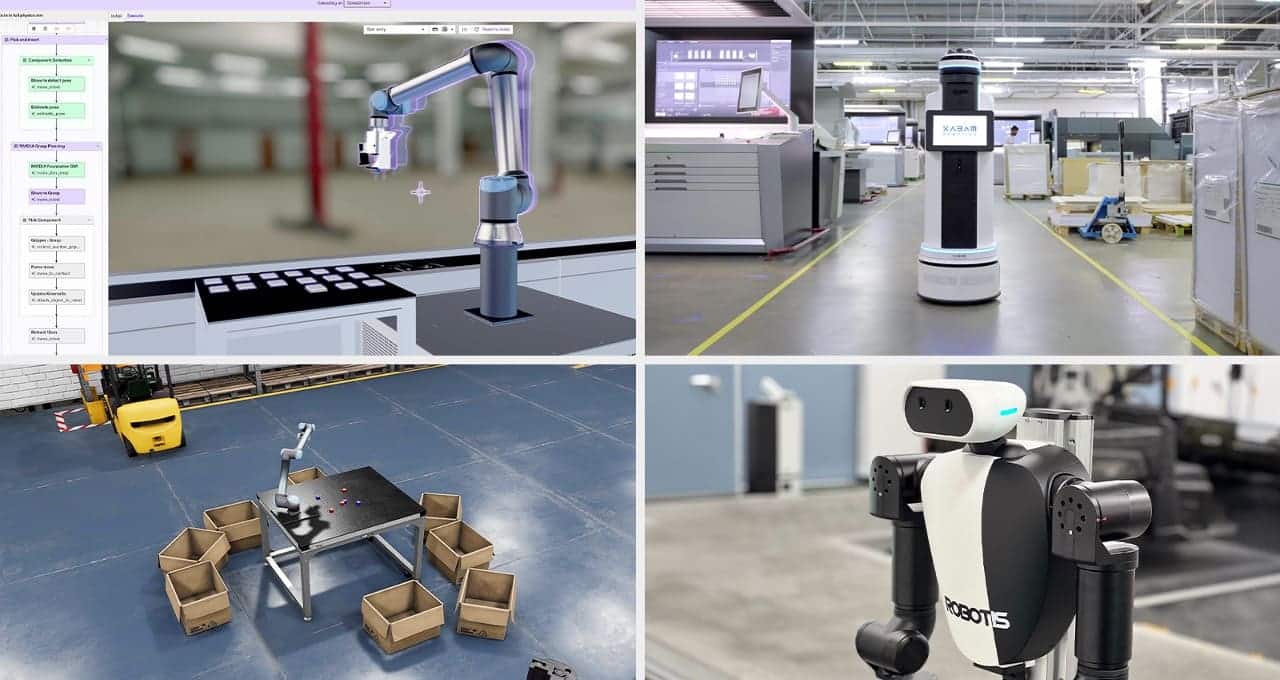

Partners and use cases: from photorealistic simulation to outdoor security robots

These announcements do not stand in isolation: the official post cites real-world cases of partners already using acceleration, simulation, and standards:

- AgileX Robotics: uses Jetson for autonomy and perception in their mobile robots, leveraging Isaac Sim (built on Omniverse) as an open-source simulation framework for testing behaviors.

- Canonical (Ubuntu): simplifies development while showcasing an open observability stack for ROS 2 devices running on Jetson AGX Thor, aligning edge and robotics with modern devops.

- Ekumen Labs: integrates Isaac Sim into their workflow for high-fidelity simulation, validation, and photorealistic synthetic data for training purposes.

- Intrinsic: combines Isaac foundational models and Omniverse simulation tools in Flowstate for advanced grasping, real-time digital twins, and AI-driven automation in industrial robotics.

- KABAM Robotics: their Matrix robot uses Jetson Orin and Triton Inference Server on ROS 2 Jazzy for outdoor security and facility management.

- Open Navigation (Nav2): showcases Isaac Sim and NVIDIA SWAGGER in a keynote on advanced route planning for AMRs.

- Robotec.ai: collaborates with NVIDIA on a new ROS simulation standard, now integrated into Isaac Sim, to unify cross-simulator development and enable robust automated testing.

- ROBOTIS: uses Jetson onboard and Isaac Sim for validation; its AI Worker, powered by the Isaac GR00T N1.5 model, aims for greater autonomy and scalable edge AI.

- Stereolabs: confirms full compatibility of ZED cameras and the ZED SDK with Jetson Thor, supporting high-performance, low-latency multi-camera perception and real-time spatial AI.

The picture is coherent: GPU on the robot, realistic simulation in the loop, ROS standards to orchestrate everything, and open-source tools for observability and optimization.

Why this matters (beyond the acronyms)

1) From “runs on a laptop” to “behaves identically on the robot”

The GPU-aware abstractions at the core of ROS 2 are a decisive step: they shorten the gap between portable code and real-world performance on robot hardware, crucial for real-time control and low-latency AI.

2) Native observability: less time chasing ghosts

With Greenwave Monitor, the community gains a profiler that understands modern pipelines (ROS 2 + CUDA + DNNs). Identifying “bottlenecks” in buses, nodes, or GPU kernels becomes more straightforward.

3) Simulation as a contract, not an afterthought

A standardized simulation framework for ROS, integrated into Isaac Sim, reduces the friction when switching simulators or porting to real robots. Photorealistic synthetic data and automated testing reinforce validation.
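The practical benefit of a shared simulation interface is that a test can be written once and run against any backend that implements it. The sketch below illustrates that idea with a toy one-dimensional simulator; the `Simulator` protocol and its methods (`reset`, `step`, `position`) are hypothetical names chosen for this example and are not the interfaces defined by the actual standard.

```python
from typing import Protocol


class Simulator(Protocol):
    """Hypothetical minimal contract a simulator backend would satisfy."""

    def reset(self) -> None: ...
    def step(self, dt: float) -> None: ...
    def position(self, entity: str) -> float: ...


class ConstantVelocitySim:
    """Toy 1-D backend: the tracked entity moves at a fixed velocity."""

    def __init__(self, velocity: float) -> None:
        self.velocity = velocity
        self.x = 0.0

    def reset(self) -> None:
        self.x = 0.0

    def step(self, dt: float) -> None:
        self.x += self.velocity * dt

    def position(self, entity: str) -> float:
        return self.x


def reaches_goal(sim: Simulator, entity: str, goal: float,
                 dt: float = 0.1, max_steps: int = 100) -> bool:
    """Backend-agnostic test: does the entity reach the goal in time?"""
    sim.reset()
    for _ in range(max_steps):
        if sim.position(entity) >= goal:
            return True
        sim.step(dt)
    return sim.position(entity) >= goal


if __name__ == "__main__":
    print(reaches_goal(ConstantVelocitySim(1.0), "robot", goal=5.0))
    print(reaches_goal(ConstantVelocitySim(0.1), "robot", goal=5.0))
```

Because `reaches_goal` depends only on the protocol, swapping in a different backend would not require touching the test itself, which is the property a common standard is meant to provide.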

4) From lab to factory (and street)

The availability of accelerated libraries and ready-made models for Jetson Thor/Orin enables teams to move faster from POC to pilot and then to deployment, supported by Ubuntu and devops tools for edge systems.

What it means for the ROS developer (practical checklist)

- Evaluate Isaac ROS 4.0 if working on manipulation or mobility: it includes accelerated blocks for common pipelines.

- Use Greenwave Monitor during development to catch latencies and hotspots early.

- Follow the Physical AI SIG within OSRA: this will shape APIs and best practices.

- Explore the simulation standard (Robotec.ai + NVIDIA) if your team mixes simulators or wants robust automatic tests.

- Leverage Isaac Sim if you need synthetic data or high-fidelity scenes for perception and planning validation.

- Check compatibility if using ZED cameras, Jetson Orin/Thor, Triton, or Ubuntu: Stereolabs, for instance, confirms that ZED cameras and the ZED SDK are fully compatible with Jetson Thor.

Risks and open questions

- Portability vs. performance: GPU-aware abstractions must balance portability with hardware utilization—avoiding lock-in without sacrificing performance.

- Determinism: physical AI and demanding control require temporal consistency. Guarantees under load with DNNs/GPU pipelines are yet to be fully established.

- Emerging standards: the new ROS simulation standard will coexist with diverse ecosystems; its adoption will determine real impact.

- Licensing and community: keeping open source code useful (and maintained) is crucial, as is the governance of OSRA.

Conclusion: a push for ROS 2 into the decade of physical AI

Direct contributions to ROS 2, support for a Physical AI SIG, open-source monitoring tools, and the deployment of Isaac ROS 4.0 on Jetson Thor form a coherent package: pushing ROS 2 not only to be the most popular framework but also the most capable in real robots powered by accelerated AI.

The community’s message is twofold: open standards above, ready bricks below. If the ecosystem responds—as it likely will—the promise of robots that perceive, decide, and act in the real world with industry-level speed and reliability will come that much closer.

Frequently Asked Questions

What exactly does “GPU-aware” add to ROS 2?

An abstraction layer that enables ROS 2 to detect and manage CPUs and GPUs (integrated or discrete), orchestrating data and tasks with coherence and high performance. It reduces ad-hoc glue code and enhances the temporal consistency of AI-intensive pipelines.

What is Greenwave Monitor and why is it useful?

An open-source tool for diagnosing performance and locating bottlenecks (such as latencies in nodes, queues, or GPU kernels) during development, accelerating the move from prototype to reliable robot.

What does Isaac ROS 4.0 include on Jetson Thor?

A set of libraries compatible with ROS, accelerated by CUDA, and AI models ready for manipulation and mobility, designed for on-device inference with low latency and high throughput.

How does Isaac Sim relate to the “simulation standard” for ROS?

NVIDIA and Robotec.ai collaborate on a simulation standard already integrated into Isaac Sim. The goal is to facilitate cross-simulator development and enable robust automated testing, reducing sim-to-real friction.

via: blogs.nvidia