The B30 chip, designed specifically for the Chinese market, is expected to cost 40% less than the H20 and could become the new standard for inference on medium-sized models amid US export restrictions.

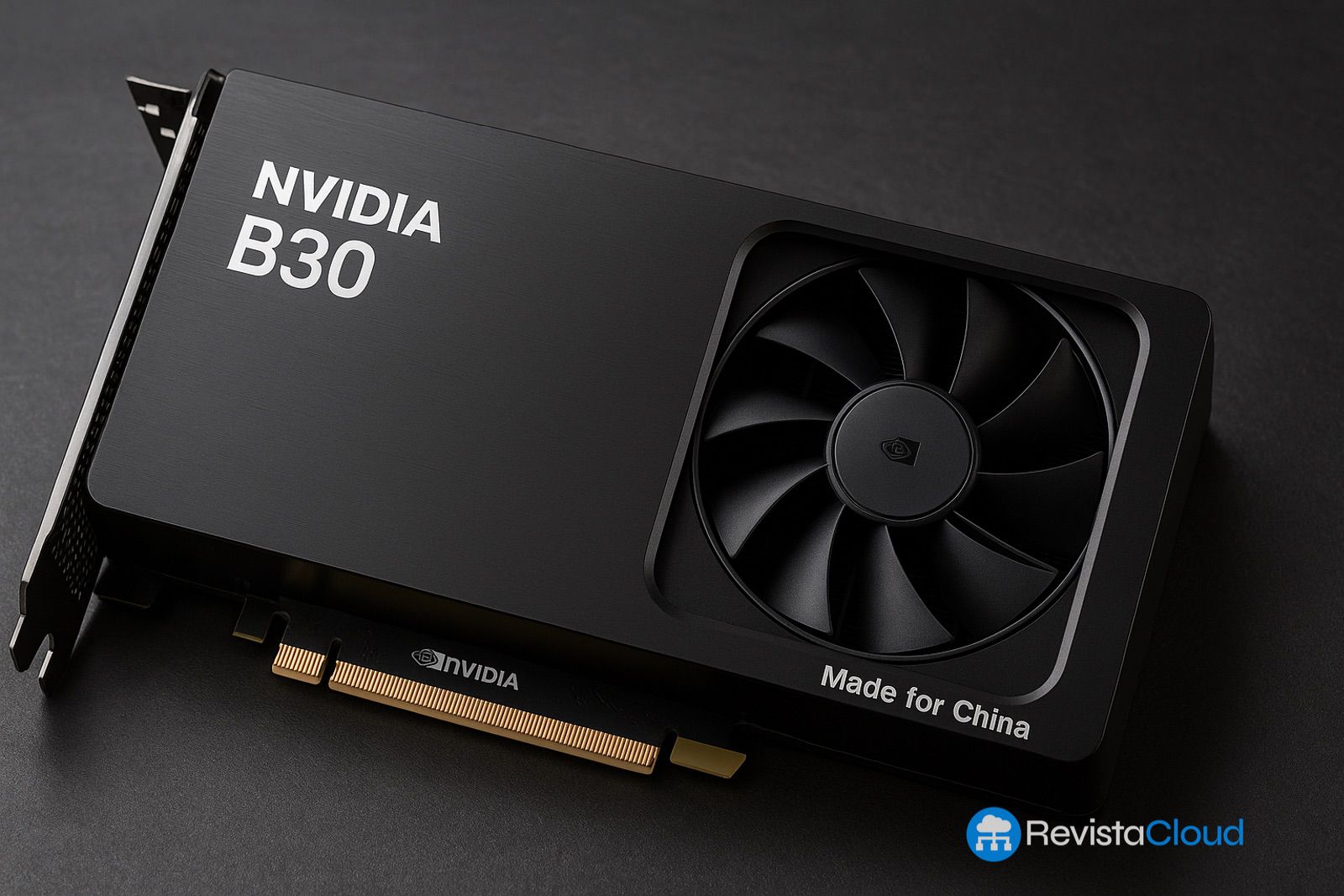

NVIDIA continues its strategy to maintain a presence in the highly competitive Chinese AI market despite increasing US-imposed restrictions. According to leaks from the reputable analyst @Jukanlosreve and shared by Wccftech, the Santa Clara company is finalizing the launch of the Blackwell B30 chip, an adapted and more affordable version of its AI accelerator line, tailored to meet export limitations while satisfying local demand.

A chip 25% slower… but with high demand

Although the new B30 reportedly offers 25% less performance than the already limited H20, along with reduced power, it stands out for its efficiency and low cost. Key technical differences include the replacement of HBM memory with GDDR7 modules and cuts to advanced supporting technologies. Despite these limitations, demand is expected to be significant, especially among cloud service providers (CSPs) and Chinese tech companies that have already trained their models on earlier chips such as the H100 and now want cost-effective inference hardware.

The goal of this new design isn’t to compete in the high-end market but to provide a practical option for running small and medium-sized models. Industry sources indicate NVIDIA plans to distribute millions of units before year’s end, with major Chinese companies eager to acquire these chips now that their training needs have largely been met and inference has become the new bottleneck.

Price 40% lower and 30% more efficient

The B30 prioritizes energy efficiency and cost-effectiveness. Sources suggest it will be roughly 30% more power-efficient than the H20, with a purchase price 40% lower. These features make it particularly attractive in an ecosystem where optimizing computational resources is crucial for scaling AI services.
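To see why a slower chip can still be the better buy, the reported figures can be combined into a rough performance-per-dollar ratio. The sketch below is only back-of-the-envelope arithmetic: it normalizes the H20 to 1.0, takes the leaked 25% performance deficit and 40% price cut at face value, and uses illustrative variable names that do not come from NVIDIA or the leak.

```python
# Back-of-the-envelope comparison using the leaked, unconfirmed figures.
# Everything is expressed relative to the H20, which is normalized to 1.0.
H20_BASELINE = 1.0

# Reported: the B30 delivers 25% less performance than the H20.
b30_relative_performance = H20_BASELINE * (1 - 0.25)

# Reported: the B30 costs 40% less than the H20.
b30_relative_price = H20_BASELINE * (1 - 0.40)


def performance_per_dollar(relative_performance, relative_price):
    """Return performance per unit of price, relative to the baseline chip."""
    return relative_performance / relative_price


b30_value = performance_per_dollar(b30_relative_performance, b30_relative_price)
print(f"B30 performance per dollar vs. H20: {b30_value:.2f}x")
```

Under these assumptions the B30 would deliver about 1.25 times the performance per dollar of the H20, which is the kind of ratio that matters to buyers running inference at scale. Actual pricing and benchmarks may differ from the leak.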

In terms of infrastructure, a cluster of eight B30 cards may achieve up to 1.2 TB/s of bandwidth, making it a competitive option for rack-scale deployments. While its raw power won’t reach the levels of the H100 or even the H20, its cost-performance ratio makes it a strategic way to run inference workloads without breaching US export rules.

A geopolitical and business move

This development is part of a complex geopolitical landscape. Tensions between Washington and Beijing have led the US government to block the export of high-performance chips to China, forcing companies like NVIDIA to rethink their strategies. CEO Jensen Huang has increased engagement on both fronts: he recently met with President Donald Trump, who praised the company’s $4 trillion valuation, and he is expected to visit China soon to strengthen commercial ties.

In this context, the B30 chip is more than just a technical product; it’s a demonstration of NVIDIA’s attempt to navigate regulatory restrictions without losing its dominance in the global AI market. By adapting its Blackwell architecture into a legally exportable version, the company aims to remain relevant within the Chinese AI ecosystem, where giants like Alibaba, Baidu, and Tencent need new solutions to stay competitive.

Conclusion: pragmatism over power

The arrival of the B30 chip could signal a new paradigm in AI hardware development: less emphasis on maximum power and more on adaptability, efficiency, and cost. In a constantly evolving market under international political scrutiny, NVIDIA’s new approach might be crucial for balancing technological innovation with regulatory compliance.

Everything indicates that, despite the obstacles, NVIDIA is determined not to cede ground in China, betting on a more pragmatic, distributed, and accessible approach to AI. In this new landscape, the B30 could be the frontline tool with which Team Green continues to lead, albeit by a different route.

Source: wccftech