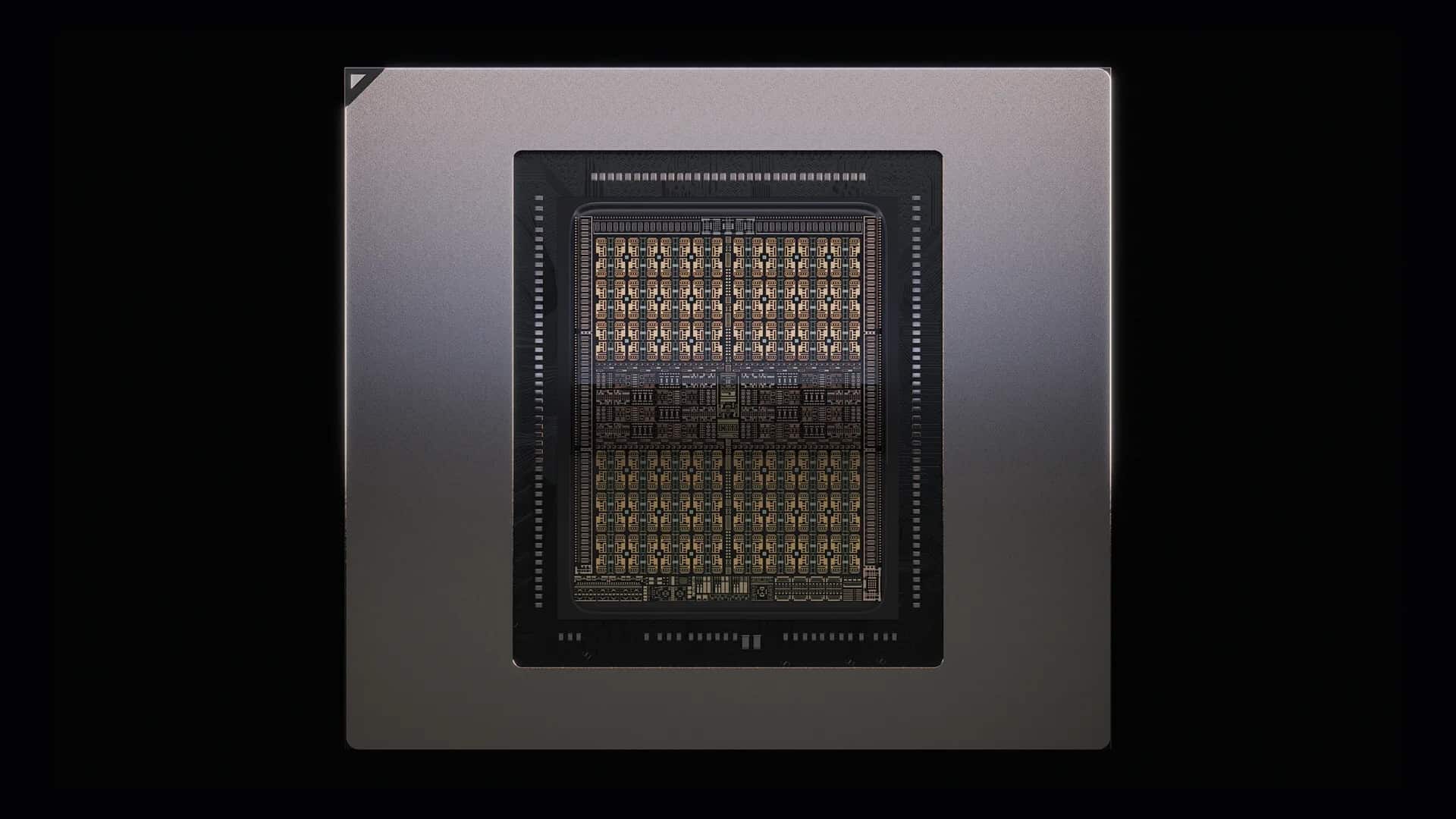

NVIDIA faces a challenge as mundane as it is decisive: heat. As each generation of AI accelerators raises power consumption and density inside the chassis, the question is no longer just “how many TOPS” or “what’s the memory bandwidth,” but how to remove watts safely and continuously. According to a leak from the account @QQ_Timmy, the company has begun coordinating with partners on a significant change for its upcoming Rubin Ultra family: microchannel cold plates with direct-to-chip (D2C) cooling. The goal is ambitious: maximize performance per watt and provide thermal margin for a generational leap that, from Blackwell to Rubin, targets higher power budgets in rack-scale configurations.

The leak doesn’t stand alone. In parallel, Microsoft has unveiled its microfluidic cooling proposal, a similar microchannel approach that, in its case, emphasizes “in-chip cooling”: the fluid circulates inside or behind the silicon itself to maximize heat exchange. Nuances aside, the coincidence sends a clear message: the industry needs new options to handle the megawatts of the AI era.

What are “microchannel cover plates,” and why do they matter now?

Microchannel cover plates (MCCPs) are, broadly speaking, an evolution of the traditional cold plates already used in high-performance chassis. Much like the “direct-die cooling” that overclocking enthusiasts apply to modern CPUs, MCCPs bring the fluid as close as possible to the die through microscopic channels (typically in copper) carved into the plate. These microchannels increase local convection and reduce the thermal resistance between the die and the liquid, which lowers junction temperatures. In other words, there is more margin to sustain frequencies and loads over time.
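The relationship between thermal resistance and junction temperature can be made concrete with the usual steady-state lumped model, T_j = T_coolant + P × R_th. The sketch below is purely illustrative: the package power, coolant temperature, and both resistance values are hypothetical placeholders, not NVIDIA or supplier specifications.

```python
def junction_temp_c(coolant_temp_c: float, power_w: float, r_th_k_per_w: float) -> float:
    """Steady-state lumped model: T_j = T_coolant + P * R_th."""
    return coolant_temp_c + power_w * r_th_k_per_w


# All values below are assumptions chosen for illustration only.
POWER_W = 1000.0        # assumed package power
COOLANT_C = 40.0        # assumed coolant inlet temperature
R_CONVENTIONAL = 0.040  # assumed die-to-liquid resistance, K/W
R_MICROCHANNEL = 0.025  # assumed lower resistance with microchannels, K/W

t_conv = junction_temp_c(COOLANT_C, POWER_W, R_CONVENTIONAL)
t_micro = junction_temp_c(COOLANT_C, POWER_W, R_MICROCHANNEL)

print(f"conventional plate: {t_conv:.1f} C")
print(f"microchannel plate: {t_micro:.1f} C")
print(f"extra thermal margin: {t_conv - t_micro:.1f} K")
```

With the same power and coolant temperature, every reduction in resistance translates linearly into junction-temperature headroom, which is the margin the article refers to.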

The difference from a conventional liquid plate isn’t just cosmetic: here, the internal design (channel width and height, geometry, roughness, distribution) is optimized to tackle hot spots on the die and even out the flow. This is exactly the level of detail that matters for accelerators with tens of thousands of cores, multiple HBM stacks, and interconnects totaling terabytes per second. NVIDIA documentation mentions Rubin Ultra in NVL configurations geared toward FP4 inference of around 15 exaflops (EF) and links of up to 115.2 TB/s (CX9 8x). Figures like these raise compute throughput and, with it, the thermal load.

From Blackwell to Rubin: why cooling is taking the lead

The company’s product cadence leaves little room to breathe. Each leap (Ampere, Hopper, Blackwell, and now Rubin) has brought improvements in architecture, memory, and networking and, almost always, higher overall system power draw. It’s not just the GPU: it’s the rack-scale topologies, backplanes, switches, and all the hardware that supports AI accelerators in a modern “pod”. At this level, air cooling alone no longer suffices, and traditional liquid cooling may fall short when the goal is higher density without sacrificing stability.

Therefore, according to leaks, NVIDIA has contacted thermal solution providers to incorporate D2C microchannel cooling into Rubin Ultra. One name under consideration is Asia Vital Components (AVC), a Taiwanese manufacturer experienced in cooling solutions. This wouldn’t be entirely new —the company already works with cold plates across various chassis— but it would represent a step forward in efficiency compared to current designs.

However, the timing needs clarifying: the same sources indicate that this approach was on the table for Rubin, but tight schedules pushed its adoption to Rubin Ultra. NVIDIA maintains its iteration rhythm without publicly revealing dates or final configurations.

“Direct-to-chip” microchannels: advantages and trade-offs

The benefits

- Lower thermal resistance: bringing the fluid closer to silicon improves the temperature gradient and uniformity across the die.

- Sustained performance: less throttling during long loads; easier to maintain boost without penalizing hot spots.

- Density: allows for more compact rack configurations without sacrificing reliability.

- Performance/Watt: enhanced thermal efficiency translates into better utilization of each watt.

The challenges

- Pressure drops: microchannels imply higher pressure requirements and, accordingly, larger pumps and finer filtration to prevent blockages.

- Materials: compatibility issues (copper, joints, fluid additives) to minimize corrosion and degradation.

- Maintenance: service procedures, leak testing, module replacement, and circuit cleaning.

- Integration: manifolds, quick disconnects, sensors (flow, pressure, leaks), and monitoring integrated into the system’s BMC.
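The pressure-drop challenge listed above can be illustrated with a back-of-the-envelope calculation. The sketch below uses the Hagen-Poiseuille relation for fully developed laminar flow in a circular duct, which is a simplifying assumption: real microchannels are usually rectangular and have a different friction constant. The fluid properties approximate water at about 40 °C, and the channel length, velocity, and diameters are hypothetical.

```python
def laminar_pressure_drop_pa(viscosity_pa_s: float, length_m: float,
                             velocity_m_s: float, diameter_m: float) -> float:
    """Hagen-Poiseuille, circular duct: dp = 32 * mu * L * v / D**2."""
    return 32.0 * viscosity_pa_s * length_m * velocity_m_s / diameter_m ** 2


def reynolds(density_kg_m3: float, velocity_m_s: float,
             diameter_m: float, viscosity_pa_s: float) -> float:
    """Reynolds number; values below roughly 2300 indicate laminar flow."""
    return density_kg_m3 * velocity_m_s * diameter_m / viscosity_pa_s


# Assumed values for illustration only (approximately water at 40 C).
MU = 0.00065     # dynamic viscosity, Pa*s
RHO = 992.0      # density, kg/m^3
LENGTH = 0.03    # hypothetical channel length, m
VELOCITY = 1.0   # hypothetical mean velocity, m/s

D_WIDE = 1.0e-3    # 1 mm channel
D_NARROW = 0.1e-3  # 0.1 mm microchannel

dp_wide = laminar_pressure_drop_pa(MU, LENGTH, VELOCITY, D_WIDE)
dp_narrow = laminar_pressure_drop_pa(MU, LENGTH, VELOCITY, D_NARROW)

for label, d, dp in (("wide", D_WIDE, dp_wide), ("narrow", D_NARROW, dp_narrow)):
    re = reynolds(RHO, VELOCITY, d, MU)
    print(f"{label}: D={d * 1e3:.1f} mm, Re={re:.0f}, dp={dp / 1000:.2f} kPa")

print(f"pressure-drop ratio narrow/wide: {dp_narrow / dp_wide:.0f}x")
```

At a fixed mean velocity, the pressure drop scales with the inverse square of the channel diameter, which is why finer channels call for stronger pumps and stricter filtration.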

These considerations aren’t new to HPC. Direct-to-chip cooling coexists with other approaches, such as single-phase or two-phase immersion and Microsoft’s in-chip microfluidics, each with its own balance of efficiency, complexity, and operability. What matters is that the sector’s starting point has shifted: from “liquid cooling, yes or no?” to “which liquid, and with what architecture?”

What sets Microsoft’s “in-chip” microfluidics apart?

Microsoft’s approach goes beyond plate-to-die contact and places the fluid “inside” or behind the silicon (backside cooling). It’s a more aggressive concept in terms of manufacturing and packaging, and it could offer advantages when heat must be removed from multiple chip layers (think 3D stacks). NVIDIA’s microchannel plates, if confirmed, take a more pragmatic route: optimizing the existing cold plate, reducing thermal resistance, and improving convection without intruding on the die stack.

The two paths aren’t mutually exclusive. Over time, we may see combined approaches, with microchannels in the plate paired with improvements on the backside of the chip, once architectures and suppliers are ready.

From the plate to the data center: implications

For operators, this move raises practical questions:

- Water plants: What supply and return temperatures do these systems require? Higher return temperatures improve the building’s PUE.

- Secondary circuit: What pressures and flows per “pod”? Is filtration necessary for microchannels?

- Leak detection and traceability: sensors and telemetry integrated with the BMC and DCIM.

- Service: Time and tools needed to replace a module without shutting down the chassis (dripless quick-disconnect fittings).

- Compatibility: Can immersion and direct-to-chip systems be mixed in the same row? What manifold systems allow scalable growth without redesign?
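The flow question in the list above follows from a simple energy balance, Q = ṁ · c_p · ΔT. The sketch below estimates the coolant flow a rack would need for a given heat load and supply-to-return temperature rise. It assumes plain water properties (real loops often run water-glycol mixtures with a lower heat capacity), and the 100 kW load and temperature rises are hypothetical examples, not figures from NVIDIA.

```python
def coolant_flow_l_per_min(heat_w: float, delta_t_k: float,
                           density_kg_m3: float = 997.0,
                           cp_j_per_kg_k: float = 4180.0) -> float:
    """Energy balance Q = m_dot * cp * dT, solved for volumetric flow in L/min.

    Default density and heat capacity approximate plain water (an assumption).
    """
    mass_flow_kg_s = heat_w / (cp_j_per_kg_k * delta_t_k)
    volume_flow_m3_s = mass_flow_kg_s / density_kg_m3
    return volume_flow_m3_s * 1000.0 * 60.0  # m^3/s -> L/min


# Hypothetical rack: 100 kW of heat captured by the liquid loop.
RACK_HEAT_W = 100_000.0

flow_dt10 = coolant_flow_l_per_min(RACK_HEAT_W, delta_t_k=10.0)
flow_dt5 = coolant_flow_l_per_min(RACK_HEAT_W, delta_t_k=5.0)

print(f"flow at dT = 10 K: {flow_dt10:.0f} L/min")
print(f"flow at dT = 5 K:  {flow_dt5:.0f} L/min")
```

Halving the allowed temperature rise doubles the required flow, so the supply and return temperatures chosen at the plant directly set pump and manifold sizing per pod.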

None of this is insurmountable. Many leading HPC installations already operate with D2C and immersion cooling. What’s new is that we’re now discussing AI-scale deployments, with pods that scale from tens to hundreds of kW per rack and supply chains that must standardize and mass-produce components.

Partners, timeline, and what’s yet to come

The name Asia Vital Components (AVC) has circulated as a thermal partner for Rubin Ultra’s MCCP solutions. According to sources, the plan was to introduce this technology with Rubin, but tight timelines pushed the move to Rubin Ultra. NVIDIA, meanwhile, maintains its iteration pace without publicly sharing dates or final configurations.

Beyond leaks, watch for signals such as:

- Joint announcements with cold plate manufacturers and OEM chassis.

- Guidelines for manifolds, sensors, and servicing.

- Thermal specs in upcoming NVL notes: both power (W) and inlet temperature figures.

- Comparisons of PUE/TUE and performance stability between Rubin and Rubin Ultra.

- Ecosystem: whether other hyperscalers follow the D2C microchannel or in-chip microfluidic route.

All of this points to a strategic shift in thermal management, one driven by performance and efficiency needs rather than appearances.

Background: performance per watt and total cost

The motivation behind this shift isn’t superficial. If MCCPs can significantly lower thermal resistance, NVIDIA can support higher boost clocks and loads without risking silicon or HBM reliability. In a context where compute is purchased by the hour and cooling constrains utilization rates, any thermal improvement affects TCO: more work done per unit of energy and fewer throttling windows in overheated rooms.

This creates a virtuous cycle: better operation (well-controlled temperatures, pressures, and flow) combined with proactive maintenance and fine-grained telemetry that catches sediment buildup or microleaks early. The risk of rushing in without proper plants, procedures, or spare parts is avoidable incidents.

Summary

Nothing better exemplifies the state of AI than this move toward precision cooling. Rubin Ultra aims for more compute, wider buses, and denser packing; NVIDIA appears ready to respond with direct-to-chip microchannels to tame thermal issues without slowing down the pace. And it’s not alone: Microsoft’s in-chip microfluidic approach confirms that the battle for useful wattage is also a battle of engineering ideas.

The coming months will reveal whether this leap translates into robust products and procedures at scale. For now, the message is clear: in the race for performance per watt, the cold plate has transitioned from an accessory to a strategic technology.

Frequently Asked Questions

What exactly is a microchannel plate (MCCP), and how does it improve on a standard cold plate?

An MCCP is a plate—usually copper—with microscopic channels where the fluid flows just above the die. This geometry increases convection and reduces thermal resistance compared to simpler cavity plates, enabling lower junction temperatures and better sustained performance.

What does “direct-to-chip” cooling mean for an AI data center?

It involves delivering liquid directly at chip level via manifolds and quick disconnects, with flow, pressure, and leak sensors. It requires warm water plants, fine filtration, and service procedures. In exchange, it offers density, efficiency, and less throttling.

How does Microsoft’s “in-chip” microfluidic approach differ from NVIDIA’s strategy?

Microsoft’s approach pushes the fluid inside or behind the silicon (backside cooling) and is more aggressive in manufacturing and packaging. NVIDIA’s plate-based microchannel approach, if confirmed, aims to optimize the existing cold plate to reduce thermal resistance and improve convection without intruding on the die stack. The two paths aren’t mutually exclusive and could converge over time.

Why do thermal requirements jump from Blackwell to Rubin?

Each generation adds more compute, memory, and interconnects. This increases the power draw per system (not just per GPU), especially in rack-scale pods. That shift makes more efficient thermal solutions such as D2C microchannels essential.

via: wccftech