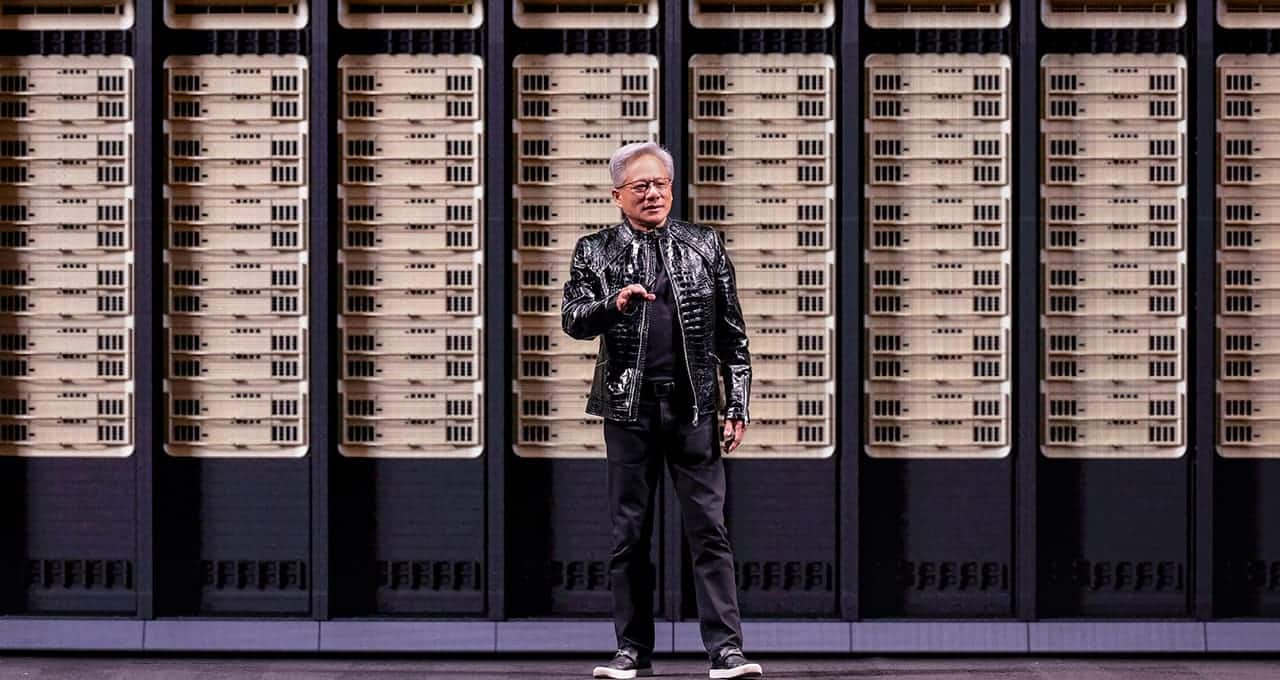

NVIDIA took advantage of the CES 2026 kickoff in Las Vegas to present something more ambitious than a product catalog: a platform vision. Jensen Huang took the stage at the Fontainebleau with a clear message: artificial intelligence is no longer just another software layer but the engine rebuilding the entire compute stack, from silicon and networking to storage and models.

In this context, the company unveiled three pillars that summarize its strategy for the coming years: Rubin, its new “extreme co-designed” AI platform; a strategy of domain-specific open models; and infrastructure designed for agentic AI, where context (the model’s operational “memory”) becomes a critical resource.

Rubin: the six-chip platform aiming to reduce the “cost per token”

The main announcement was NVIDIA Rubin, successor to Blackwell, presented as the first extreme co-designed six-chip AI platform. The idea is straightforward: as AI scales to “gigascale,” building a faster GPU alone isn’t enough. GPU, CPU, interconnect, network, DPUs, storage, and software have to be designed together, because speeding up one component simply moves the bottleneck to the next.

Rubin is described as a “data center from the ground up” platform, and NVIDIA specifies its components as:

- Rubin GPUs delivering 50 petaflops of NVFP4 inference.

- Vera CPUs focused on data movement and processing related to agentic systems.

- NVLink 6 for “scale-up” expansion.

- Spectrum-X Ethernet Photonics for “scale-out”.

- ConnectX-9 SuperNICs and BlueField-4 DPUs as the backbone of connectivity and infrastructure acceleration.

Huang’s stated goal is to drastically reduce the cost of deploying large-scale AI: the platform promises to deliver tokens at about one-tenth the cost of the previous generation, pushing the AI economy into a more “industrial” than experimental phase.
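To make the “cost per token” metric concrete, here is a minimal sketch of how it can be computed from the hourly cost of a system and its throughput. The function name and every number below are hypothetical placeholders chosen for illustration, not NVIDIA figures; the point is only that, at equal hourly cost, ten times the throughput yields one-tenth the cost per token.

```python
def cost_per_million_tokens(hourly_cost_usd: float, tokens_per_second: float) -> float:
    """Cost in USD to generate one million tokens on a system with the given
    hourly cost and sustained throughput."""
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost_usd / tokens_per_hour * 1_000_000


# Hypothetical inputs (assumptions, not vendor data).
baseline = cost_per_million_tokens(hourly_cost_usd=100.0, tokens_per_second=50_000)
improved = cost_per_million_tokens(hourly_cost_usd=100.0, tokens_per_second=500_000)

print(f"baseline: ${baseline:.4f} per million tokens")
print(f"improved: ${improved:.4f} per million tokens")
print(f"ratio:    {baseline / improved:.1f}x")
```

In practice the hourly cost would also change between generations, so the real ratio depends on both terms of the fraction.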

The new buzzword: context and the KV cache

NVIDIA’s outlook for 2026 anticipates AI moving beyond “single-response” chatbots to systems that maintain long conversations, chain tasks, consult tools, and keep a history — what’s known today as agentic AI.

This introduces a bottleneck that the industry is starting to treat as a first-order infrastructure problem: the KV cache (key-value cache), the context memory that stores the attention keys and values of tokens already processed, so that models can reason over multiple turns without losing coherence or recomputing the whole history.

NVIDIA stresses a key point: the KV cache cannot live indefinitely on the GPU without penalizing real-time inference. Therefore, alongside Rubin, the company introduced the concept of AI-native storage: a dedicated layer for context storage.
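A rough estimate shows why the KV cache outgrows GPU memory. The sketch below uses the standard sizing formula for transformer attention (keys plus values, per layer, per KV head, per token). The model dimensions are hypothetical and do not describe any specific NVIDIA or third-party model.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2, batch_size: int = 1) -> int:
    """Estimate KV cache size in bytes.

    The leading factor of 2 accounts for storing both keys and values.
    bytes_per_value=2 corresponds to FP16/BF16 storage.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value * batch_size


# Hypothetical model: 80 layers, 8 KV heads (grouped-query attention), head_dim 128.
per_token = kv_cache_bytes(80, 8, 128, seq_len=1)
long_context = kv_cache_bytes(80, 8, 128, seq_len=128_000)

print(f"per token:          {per_token / 1024:.0f} KiB")        # 320 KiB
print(f"128k-token session: {long_context / 1024**3:.2f} GiB")  # 39.06 GiB
```

Under these assumptions a single 128,000-token session occupies about 39 GiB, so a handful of concurrent long-running agents can consume the memory the GPU needs for weights and active computation.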

“AI-native storage”: a memory tier outside the GPU for context

Under the BlueField-4 umbrella, NVIDIA unveiled the Inference Context Memory Storage Platform, defined as a KV cache tier for long-context inference and multi-turn agents.

The promised gains over traditional storage are aggressive:

- Up to 5× more tokens per second

- Up to 5× better performance per dollar of TCO

- Up to 5× better energy efficiency

Beyond the multiplicative improvements, the strategic message is clear: if agents require “shared memory” across nodes and persistent context, storage ceases to be a passive repository and becomes an active performance component.
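The general idea of a context tier outside the GPU can be illustrated with a toy two-level cache. This is a conceptual sketch only: the class `TieredKVCache`, its methods, and the least-recently-used eviction policy are assumptions made for illustration and do not represent NVIDIA’s platform or any real API.

```python
from collections import OrderedDict


class TieredKVCache:
    """Toy two-tier store for per-session KV state.

    'fast' stands in for limited GPU memory; 'context' stands in for an
    external context-storage tier. Sessions that do not fit in the fast
    tier are spilled instead of being discarded and recomputed later.
    """

    def __init__(self, fast_capacity: int):
        self.fast_capacity = fast_capacity
        self.fast = OrderedDict()  # session_id -> KV blob, oldest first
        self.context = {}          # spilled sessions

    def put(self, session_id, kv_blob):
        self.context.pop(session_id, None)  # avoid holding a stale spilled copy
        self.fast[session_id] = kv_blob
        self.fast.move_to_end(session_id)   # mark as most recently used
        while len(self.fast) > self.fast_capacity:
            victim, blob = self.fast.popitem(last=False)  # evict least recently used
            self.context[victim] = blob

    def get(self, session_id):
        """Return (kv_blob, tier), where tier is 'fast', 'context', or 'miss'."""
        if session_id in self.fast:
            self.fast.move_to_end(session_id)
            return self.fast[session_id], "fast"
        if session_id in self.context:
            blob = self.context.pop(session_id)
            self.put(session_id, blob)      # promote back into the fast tier
            return blob, "context"
        return None, "miss"


cache = TieredKVCache(fast_capacity=2)
for sid in ("agent-a", "agent-b", "agent-c"):
    cache.put(sid, f"kv-state-of-{sid}")

print(cache.get("agent-a"))  # served from the context tier, then promoted
print(cache.get("agent-c"))  # still resident in the fast tier
print(cache.get("agent-z"))  # unknown session
```

A "context" hit is slower than a "fast" hit but avoids recomputing the session’s history from scratch, which is the trade-off a dedicated KV cache tier is meant to improve.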

Open models by domain: Clara, Earth-2, Nemotron, Cosmos, GR00T, and Alpamayo

Another major focus of the keynote was NVIDIA’s emphasis on a catalog of open models, trained on its own supercomputers, organized by domains, and envisioned as a reusable “base” for companies and industries.

The portfolio covers six areas:

- Clara (healthcare)

- Earth-2 (climate)

- Nemotron (reasoning and multimodality)

- Cosmos (robotics and simulation)

- GR00T (embodied intelligence)

- Alpamayo (autonomous driving)

The narrative behind this strategy is that models evolve in ever-shorter cycles and that the surrounding ecosystem (downloads, adaptations, evaluation, “guardrails”) is part of the product. NVIDIA positions itself as a “frontier model builder,” but with an “open” approach that lets third parties tune, evaluate, and deploy the models.

Alpamayo: reasoning for the long tail in autonomous driving

In automotive, Alpamayo appears as a family of VLA (vision-language-action) models with reasoning capabilities, aimed at the main challenge in autonomous vehicles: the long tail, meaning the unusual and difficult scenarios that rarely appear in data.

During the announcement, NVIDIA highlighted:

- Alpamayo R1, the first open reasoning VLA model for autonomous driving.

- AlpaSim, an open high-fidelity simulation blueprint for testing.

One of the most striking claims was Huang’s link between this line and a production car: the first passenger vehicle running Alpamayo on the complete NVIDIA DRIVE platform, the Mercedes-Benz CLA, will “soon” be on the road, with “AI-driven driving” set to arrive in the US this year. He also noted the car’s recent five-star Euro NCAP rating.

Personal AI: DGX Spark, local agents, and desktop robots

Another segment of the presentation aimed to move AI beyond the data center. Huang argued that the future is also local and personal, showing demos of agents running on DGX Spark, embodied in a robot (Reachy Mini), and using models from Hugging Face.

NVIDIA added that DGX Spark offers up to 2.6× performance for large models, that it supports LTX-2 and FLUX for image generation, and that NVIDIA AI Enterprise will soon be available for the platform.

Gaming and content creation: DLSS 4.5 and the RTX ecosystem as showcase

Since CES is also about entertainment, NVIDIA did not skip its traditional showcase: gaming and content creation. The company announced DLSS 4.5 with Dynamic Multi-Frame Generation, a new 6X Multi-Frame Generation mode, and a second-generation transformer model for DLSS Super Resolution.

NVIDIA also highlighted that over 250 games and apps already support DLSS 4, with several titles expected to include it at launch, among them 007 First Light, Phantom Blade Zero, PRAGMATA, and Resident Evil Requiem. There were also mentions of RTX Remix Logic, NVIDIA ACE demonstrations, and new GeForce NOW deployments across more devices.

FAQs

What does it mean that Rubin is an “extreme co-designed” six-chip platform?

NVIDIA designs it as a coordinated set comprising GPU, CPU, network, DPUs, and interconnect to reduce rack-scale bottlenecks and lower overall training and inference costs.

Why has the KV cache become an infrastructure problem?

Because agents and long contexts generate large amounts of state that must be kept in memory. Keeping all of it on the GPU limits real-time inference and increases operational costs, especially in multi-turn and multi-agent systems.

What is NVIDIA’s “AI-native storage” intended to solve?

To create a dedicated layer for storing and sharing context (KV cache) outside the GPU at high speed, aiming to increase tokens per second and improve energy efficiency in large-scale inference.

What is Alpamayo and why does it matter for autonomous vehicles?

It’s a family of models and tools (including simulation and datasets) focused on reasoning in rare “long tail” scenarios — the key obstacle to safely scaling autonomous driving.

via: Nvidia