The Artificial Intelligence industry is changing lanes. For years, media and technical attention has centered on training: ever-larger models that are more expensive and increasingly dependent on computing capacity. But as AI becomes a product, the real bottleneck shifts to inference, the moment when the model responds in real time to users, companies, and automated systems.

In this context, Groq announced on December 24, 2025 a deal with NVIDIA that is not a “classic” acquisition but still carries weight: a non-exclusive licensing agreement for its inference technology, accompanied by a transfer of senior talent. Groq’s founder, Jonathan Ross, and its president, Sunny Madra, along with other team members, will move to NVIDIA to help develop and scale the licensed technology. Groq, for its part, insists it will continue operating as an independent company, with Simon Edwards as the new CEO and GroqCloud functioning “without interruption”.

“Non-exclusive” license… but with key team departure

The “non-exclusive” aspect is significant on paper: it means Groq isn’t tied to just one partner and, at least formally, its technology could be licensed to others. However, market insiders read between the lines: when the founder and core technical team leave for the sector’s dominant partner, the deal shifts from a simple contract to a strategic signal.

The announcement is also surrounded by speculation: some reports, citing TV coverage and industry sources, pointed to a deal valued at around $20 billion. Yet neither Groq nor NVIDIA gave official figures in the licensing announcement. This contrast between rumors of a major deal and a sparse public statement fuels a common Silicon Valley interpretation: licensing technology and recruiting talent can be a quick way to boost capabilities without the full costs (and regulatory scrutiny) of a traditional acquisition.

Why inference has become the “center of gravity” in AI

Inference is where AI is tested with a stopwatch and calculator. It’s not enough for a model to be brilliant: companies need to serve millions of queries with low latency, predictable costs, and reasonable energy efficiency. This is precisely what companies are demanding in 2025, because they are no longer just “testing AI” but integrating it into customer service, internal productivity, code generation, document analysis, and agent systems.

In other words: training can be the spectacle, but inference is the recurring business. And in that business, every millisecond counts. That’s why there is growing interest in architectures and solutions specifically designed for low latency and high performance per watt.
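The stopwatch-and-calculator framing can be made concrete with a back-of-the-envelope sketch. The Python below is illustrative only: the `ServingProfile` type, its fields, and every number in the usage note are hypothetical and do not describe Groq, NVIDIA, or any real hardware. It also assumes a single request at a time, ignoring batching, queueing, and network overhead.

```python
from dataclasses import dataclass


@dataclass
class ServingProfile:
    """Hypothetical serving characteristics of one accelerator (assumed values)."""
    tokens_per_second: float  # sustained output throughput
    hourly_cost_usd: float    # all-in cost of running the accelerator for one hour
    power_watts: float        # average power draw under load


def generation_time_s(profile: ServingProfile, tokens_per_query: int) -> float:
    """Seconds spent generating one response (the 'stopwatch')."""
    return tokens_per_query / profile.tokens_per_second


def cost_per_query_usd(profile: ServingProfile, tokens_per_query: int) -> float:
    """Share of the hourly cost consumed by one response (the 'calculator')."""
    seconds = generation_time_s(profile, tokens_per_query)
    return profile.hourly_cost_usd / 3600.0 * seconds


def energy_per_query_joules(profile: ServingProfile, tokens_per_query: int) -> float:
    """Energy used by one response: power (W) times generation time (s)."""
    return profile.power_watts * generation_time_s(profile, tokens_per_query)
```

With made-up inputs such as `ServingProfile(tokens_per_second=500, hourly_cost_usd=3.6, power_watts=700)` and a 250-token answer, the three functions give the generation time, the cost, and the energy for that single query. Doubling throughput at the same hourly cost halves all three, which is why throughput per dollar and per watt dominate the inference conversation.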

What NVIDIA gains with Groq: speed, architecture… and a profile with TPU history

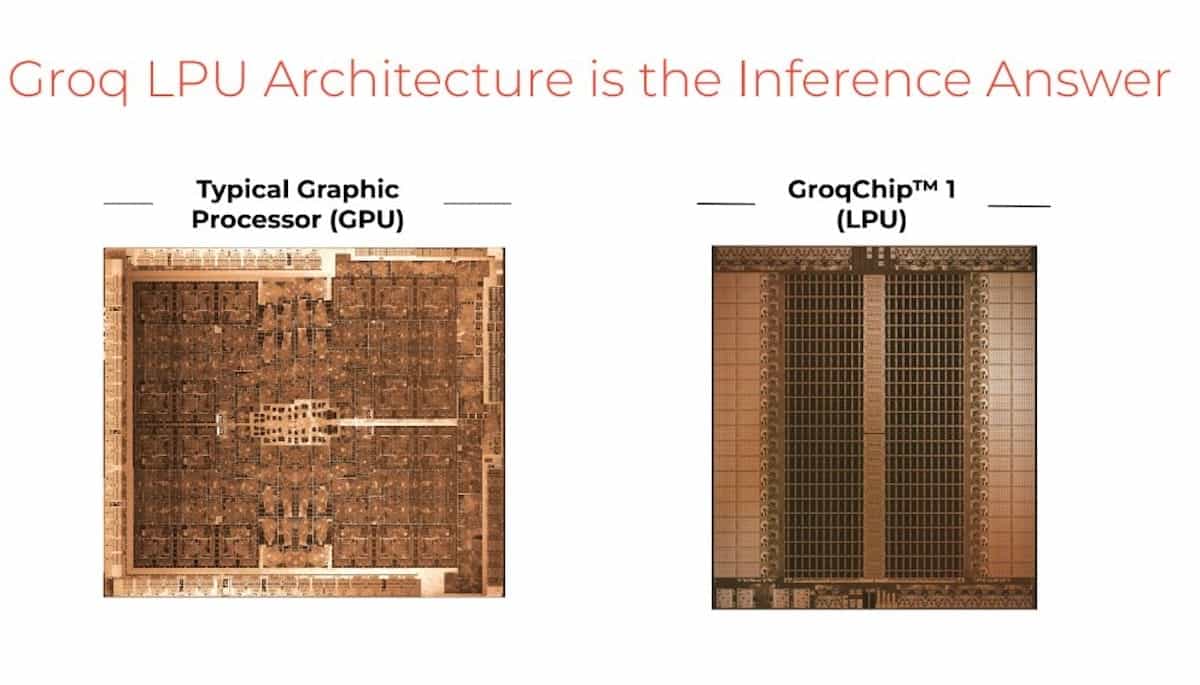

Groq has long positioned itself as an inference-focused alternative. The hiring of Jonathan Ross adds a powerful symbolic element: Ross is known in the industry for leading the Google effort that launched the TPU (Tensor Processing Unit), Google’s “Plan B” to reduce dependence on GPUs for AI workloads.

For NVIDIA, the message is twofold. First, it acquires talent linked to one of the most notable stories in alternative AI hardware. Second, it gains—via licensing—technology aimed at inference at a time when the market is filling with competitors: proprietary chips from hyperscalers, specialized accelerators, and startups trying to carve out a share in model hosting services.

At the same time, NVIDIA isn’t abandoning its core: its historical advantage remains the ecosystem (software, tools, data center integration) and its ability to scale products globally. The Groq license acts as an “accelerator” to reinforce an area of growing competitive pressure.

What remains for Groq: continuity, GroqCloud, and the challenge of maintaining traction

Groq emphasizes that it will remain an independent company and that GroqCloud will continue unaffected. It also formalizes the leadership change: Simon Edwards, announced as CFO several months ago, becomes CEO, a step that marks a stage of corporate maturity.

The big question for the tech community is how Groq will evolve without its founder involved day to day, and how much weight it will retain as a provider given that part of its technology and talent now falls under NVIDIA’s umbrella. If GroqCloud maintains its roadmap, it could continue offering inference options for those seeking an alternative or a complement to their existing stack. But if the license becomes the core piece shaping its future, Groq will need to prove that its commercial proposition and operational execution remain competitive without its original “engine.”

What this means for the industry: increased inference competition, fewer blind bets on a single path

From a tech perspective, this move encapsulates the evolving AI landscape:

- Inference becomes strategic: not an afterthought, but the battlefield.

- Architectural talent is gold: those who design key systems (TPU, low-latency inference) are as valuable as manufacturing capacity.

- Hybrid agreements normalize: licensing, partial “acqui-hire,” and structures aiming for speed without triggering all regulatory alarms.

For developers and infrastructure leaders, the practical takeaway is clear: the near future will see coexistence of multiple approaches—GPUs, specialized accelerators, cloud solutions, and hybrid stacks—where the question won’t be “which model is better,” but which platform delivers faster, cheaper, and with less friction.

FAQs

What does it mean that it’s a “non-exclusive” license between Groq and NVIDIA?

This means Groq, in theory, can license its technology to other partners and continue operating independently. In practice, the impact depends on how deeply that technology is integrated into NVIDIA’s roadmap and how Groq evolves after the departure of its technical leadership.

Is this a disguised acquisition of Groq by NVIDIA?

The official statement mentions licensing, not a purchase. Rumors and speculation about the deal’s value have circulated, but there’s no public confirmation of a full acquisition.

Why does inference matter so much more in 2025?

Because that’s where AI “lives” in production: user queries, automated agents, enterprise services. At scale, cost per query, latency, and energy efficiency are paramount.

Will GroqCloud continue operating for customers and developers?

According to Groq, yes: GroqCloud will continue running smoothly. The key will be the pace of innovation and support under the new leadership.

Source: Artificial Intelligence News