NVIDIA has announced its next-generation AI supercomputer, the NVIDIA DGX SuperPOD™ powered by NVIDIA GB200 Grace Blackwell Superchips, designed to process trillion-parameter models with consistent uptime for large-scale generative AI training and inference workloads.

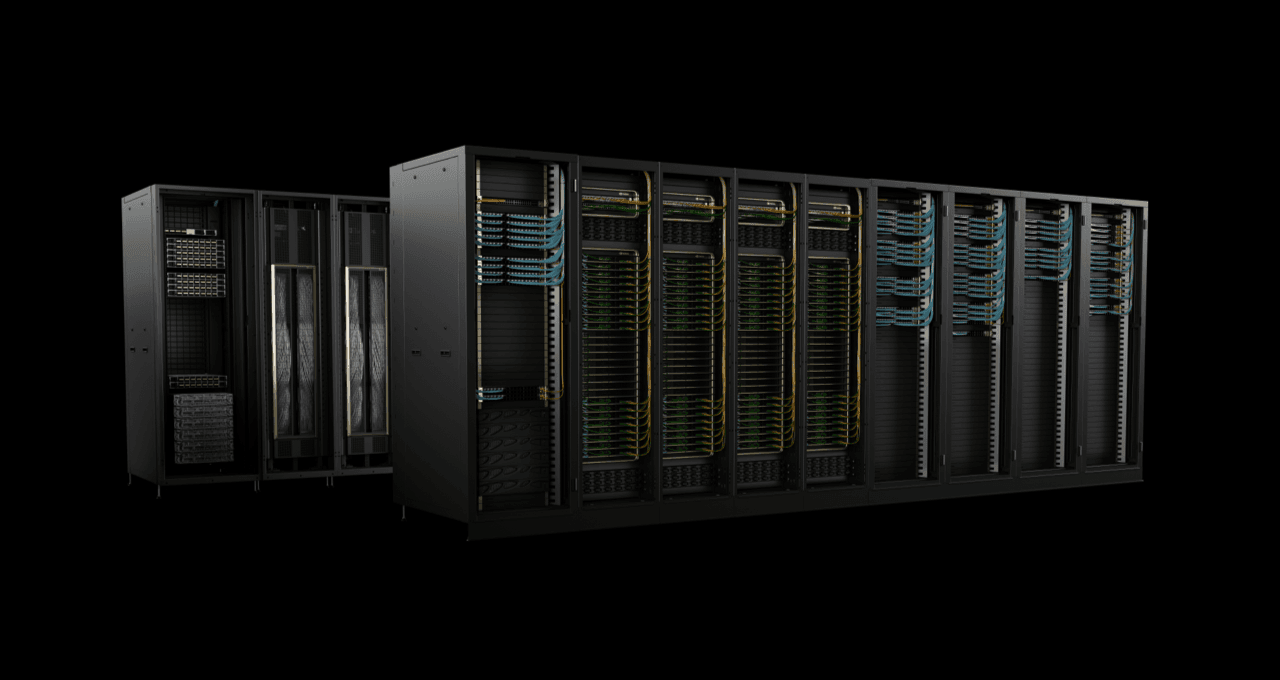

Built on a highly efficient, liquid-cooled rack-scale architecture, the new DGX SuperPOD uses NVIDIA DGX™ GB200 systems and delivers 11.5 exaflops of AI supercomputing at FP4 precision and 240 terabytes of fast memory, scaling further with additional racks.

Each DGX GB200 system features 36 NVIDIA GB200 Superchips, comprising 36 NVIDIA Grace CPUs and 72 NVIDIA Blackwell GPUs, connected as a single supercomputer through fifth-generation NVIDIA NVLink®. The GB200 Superchips deliver up to a 30x performance boost over the NVIDIA H100 Tensor Core GPU for large language model inference workloads.

“NVIDIA DGX supercomputers are the factories of the AI industrial revolution,” said Jensen Huang, founder and CEO of NVIDIA. “The new DGX SuperPOD combines the latest advancements in accelerated computing, networking, and NVIDIA software to enable every enterprise, industry, and country to harness and build their own AI.”

The Grace Blackwell-powered DGX SuperPOD features eight or more DGX GB200 systems and can scale to tens of thousands of GB200 Superchips connected via NVIDIA Quantum InfiniBand. For the massive shared memory space needed to power next-generation AI models, customers can deploy a configuration that connects all 576 Blackwell GPUs across eight DGX GB200 systems via NVLink.
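The component counts quoted so far follow from simple multiplication. The Python sketch below derives them from the per-system figures in this announcement: 36 Superchips holding 36 Grace CPUs and 72 Blackwell GPUs imply one CPU and two GPUs per Superchip, and eight systems give 576 GPUs. Splitting the 11.5-exaflop and 240-terabyte headline figures across eight systems is an assumption, since the announcement does not say which configuration those figures describe. The names `component_counts` and `SuperPodEstimate` are hypothetical.

```python
from dataclasses import dataclass

# Per-system figures taken from the announcement text.
SUPERCHIPS_PER_SYSTEM = 36
GRACE_CPUS_PER_SYSTEM = 36
BLACKWELL_GPUS_PER_SYSTEM = 72
NVLINK_DOMAIN_SYSTEMS = 8       # "576 Blackwell GPUs in eight DGX GB200 systems"

# Headline SuperPOD figures from the announcement.
SUPERPOD_FP4_EXAFLOPS = 11.5
SUPERPOD_FAST_MEMORY_TB = 240


@dataclass(frozen=True)
class SuperPodEstimate:
    """Hypothetical container for scaled component counts."""
    systems: int
    superchips: int
    grace_cpus: int
    blackwell_gpus: int


def component_counts(systems: int) -> SuperPodEstimate:
    """Scale the per-system counts linearly by the number of DGX GB200 systems."""
    return SuperPodEstimate(
        systems=systems,
        superchips=systems * SUPERCHIPS_PER_SYSTEM,
        grace_cpus=systems * GRACE_CPUS_PER_SYSTEM,
        blackwell_gpus=systems * BLACKWELL_GPUS_PER_SYSTEM,
    )


# Per-Superchip composition implied by 36 CPUs + 72 GPUs across 36 Superchips.
cpus_per_superchip = GRACE_CPUS_PER_SYSTEM // SUPERCHIPS_PER_SYSTEM    # 1
gpus_per_superchip = BLACKWELL_GPUS_PER_SYSTEM // SUPERCHIPS_PER_SYSTEM  # 2

pod = component_counts(NVLINK_DOMAIN_SYSTEMS)

# ASSUMPTION: the 11.5 EF and 240 TB figures describe the eight-system configuration.
fp4_pflops_per_gpu = SUPERPOD_FP4_EXAFLOPS * 1000 / pod.blackwell_gpus
fast_memory_tb_per_system = SUPERPOD_FAST_MEMORY_TB / pod.systems

print(pod)
print(f"{cpus_per_superchip} Grace CPU + {gpus_per_superchip} Blackwell GPUs per Superchip")
print(f"~{fp4_pflops_per_gpu:.1f} FP4 petaflops per GPU (under the stated assumption)")
print(f"~{fast_memory_tb_per_system:.0f} TB fast memory per system (under the stated assumption)")
```

Because the counts scale linearly, calling `component_counts` with a larger system count gives the totals for bigger SuperPOD configurations, though it says nothing about the InfiniBand fabric that links them.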

The new DGX SuperPOD with DGX GB200 systems introduces a unified computing fabric. In addition to the fifth-generation NVIDIA NVLink, the fabric includes NVIDIA BlueField®-3 DPUs and will support the separately announced NVIDIA Quantum-X800 InfiniBand network. This architecture provides up to 1,800 gigabytes per second of bandwidth to each GPU in the platform.
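To put the per-GPU figure in perspective, the Python sketch below multiplies the quoted 1,800 gigabytes per second by the GPU count of one DGX GB200 system and of the eight-system NVLink configuration. This is a naive sum, an assumption made only for illustration: it ignores topology, contention, and protocol overhead, so it is an upper bound rather than a measured or officially stated aggregate. The function name `aggregate_bandwidth_tbps` is hypothetical.

```python
# Per-GPU bandwidth quoted in the announcement, in gigabytes per second.
PER_GPU_BANDWIDTH_GBPS = 1_800


def aggregate_bandwidth_tbps(num_gpus: int, per_gpu_gbps: float = PER_GPU_BANDWIDTH_GBPS) -> float:
    """Naive upper bound: sum of per-GPU bandwidth, converted to terabytes per second.

    ASSUMPTION: ignores topology, contention and protocol overhead.
    """
    return num_gpus * per_gpu_gbps / 1_000


# One DGX GB200 system (72 GPUs) and eight systems linked via NVLink (576 GPUs).
for gpus in (72, 576):
    print(f"{gpus:>3} GPUs -> ~{aggregate_bandwidth_tbps(gpus):,.1f} TB/s summed")
```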

Furthermore, fourth-generation NVIDIA Scalable Hierarchical Aggregation and Reduction Protocol (SHARP)™ technology provides 14.4 teraflops of in-network computing, a 4x increase for the next-generation DGX SuperPOD architecture compared with the previous generation.

The new DGX SuperPOD is a complete AI supercomputer at data center scale that integrates with high-performance storage from NVIDIA-certified partners to meet the demands of generative AI workloads. Each one is built, wired, and tested in the factory to drastically accelerate deployment in customer data centers.

The Grace Blackwell-powered DGX SuperPOD features intelligent predictive management capabilities to continuously monitor thousands of data points in hardware and software to predict and intercept sources of downtime and inefficiency, saving time, energy, and computing costs.

The software can identify areas of concern and plan maintenance, flexibly adjust computing resources, and automatically save and resume jobs to prevent downtime, even when no system administrators are present.

NVIDIA also introduced the NVIDIA DGX B200 system, a unified AI supercomputing platform for AI model training, fine-tuning, and inference across industries.