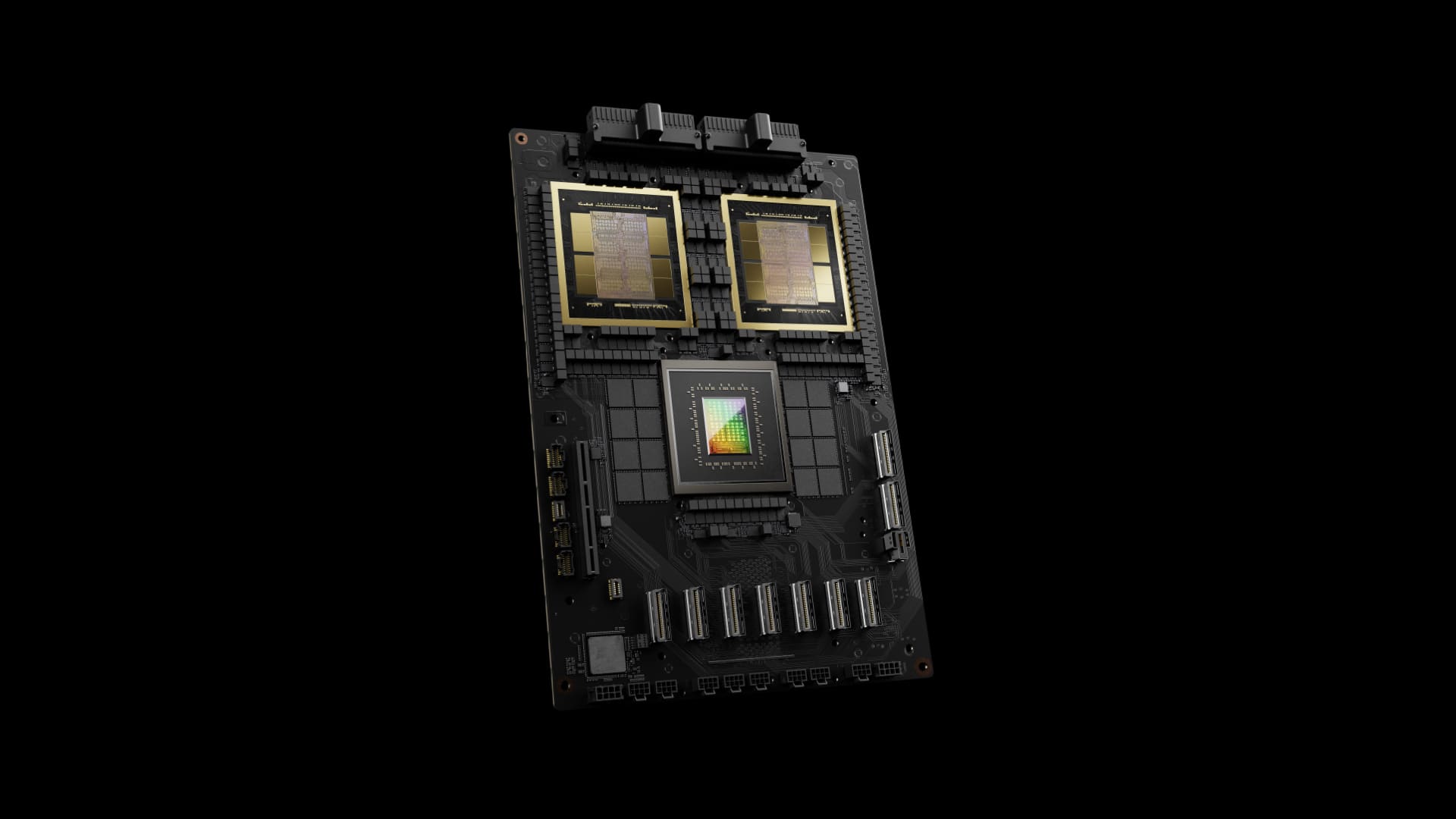

The NVIDIA H800, based on the Hopper architecture, is a data-center GPU designed for artificial intelligence (AI) workloads and advanced data analytics. It brings the Hopper generation's performance and efficiency gains, including FP8 Tensor Core support, to companies that want to accelerate AI applications ranging from large language models to computer vision.

Technical Comparison: H800 vs. A100, A800, and H100

The H800 differs in several key respects from the Ampere-generation A100 and A800 and from its Hopper sibling, the H100. The table below compares their essential technical specifications (SXM form factor):

| Specification | A100 SXM | A800 SXM | H100 SXM | H800 SXM |
|---|---|---|---|---|
| FP64 (TFLOPS) | 9.7 | 9.7 | 34 | 1 |
| TF32 Tensor Core (TFLOPS, with sparsity) | 312 | 312 | 989 | 989 |
| FP16 Tensor Core (TFLOPS, with sparsity) | 624 | 624 | 1,979 | 1,979 |
| FP8 Tensor Core (TFLOPS, with sparsity) | N/A | N/A | 3,958 | 3,958 |
| Memory (GB) | 80 | 80 | 80 | 80 |
| Memory Bandwidth | 2.039 TB/s | 2.039 TB/s | 3.35 TB/s | 3.35 TB/s |
| NVLink (GB/s) | 600 | 400 | 900 | 400 |
| Max Power (W) | 400 | 400 | 700 | 700 |
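To make the generational differences concrete, the short Python sketch below turns the table rows into ratios. The dictionary simply re-enters the table's figures (FP8 is left out because the A100 and A800 have no FP8 Tensor Core support); `SPECS`, `ratio`, and `compare` are illustrative names, not part of any NVIDIA tool.

```python
# Specifications re-entered from the comparison table (SXM variants).
# FP8 is omitted because the A100 and A800 have no FP8 Tensor Core support.
SPECS = {
    "A100": {"fp64_tflops": 9.7, "tf32_tflops": 312, "fp16_tflops": 624,
             "mem_bw_tbs": 2.039, "nvlink_gbs": 600, "power_w": 400},
    "A800": {"fp64_tflops": 9.7, "tf32_tflops": 312, "fp16_tflops": 624,
             "mem_bw_tbs": 2.039, "nvlink_gbs": 400, "power_w": 400},
    "H100": {"fp64_tflops": 34, "tf32_tflops": 989, "fp16_tflops": 1979,
             "mem_bw_tbs": 3.35, "nvlink_gbs": 900, "power_w": 700},
    "H800": {"fp64_tflops": 1, "tf32_tflops": 989, "fp16_tflops": 1979,
             "mem_bw_tbs": 3.35, "nvlink_gbs": 400, "power_w": 700},
}


def ratio(new: str, old: str, key: str) -> float:
    """How many times larger spec `key` is on GPU `new` than on GPU `old`."""
    return SPECS[new][key] / SPECS[old][key]


def compare(new: str, old: str) -> dict[str, float]:
    """Ratio of every spec, rounded to two decimals (>1 means `new` is higher)."""
    return {key: round(ratio(new, old, key), 2) for key in SPECS[new]}


if __name__ == "__main__":
    for baseline in ("A800", "H100"):
        print(f"H800 vs {baseline}: {compare('H800', baseline)}")
```

Against the A800, this shows the H800 delivering about 3.17x the TF32 and FP16 Tensor Core throughput and 1.64x the memory bandwidth at 1.75x the power; against the H100, only FP64 (0.03x) and NVLink (0.44x) differ.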

Key Innovations of the H800

- FP8 Precision Support: The H800's fourth-generation Tensor Cores support FP8, reaching up to 3,958 TFLOPS (with sparsity). This substantially speeds up training and inference of large language models, such as those used in generative AI systems.

- Multi-Instance GPU (MIG) Technology: The H800 supports up to seven MIG instances, each with 10 GB of memory, allowing multiple users or tasks to operate simultaneously on a single GPU with guaranteed quality of service.

- Optimized for Enterprise Security: New confidential computing capabilities let users protect data and applications in multi-tenant environments, a critical feature for sectors such as healthcare and finance.

- Energy Efficiency and Configurability: The H800 draws up to 700 W, but its power limit is configurable, so operators can trade peak performance for efficiency according to the needs of each workload.
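As a rough illustration of how FP8 and MIG interact, the sketch below estimates how many parameters' worth of weights fit in one 10 GB MIG slice versus the full 80 GB GPU. It assumes 1 GB = 10^9 bytes, 2 bytes per FP16 weight and 1 byte per FP8 weight, and counts weights only; activations, KV cache, and framework overhead are ignored, so usable capacity will be lower. The helper `max_params_billions` is a hypothetical name for illustration.

```python
# Figures from the text: up to 7 MIG instances of 10 GB each on an 80 GB H800.
TOTAL_MEMORY_GB = 80
MIG_INSTANCES = 7
MIG_SLICE_GB = 10

# Bytes needed to store one weight in each format.
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}


def max_params_billions(mem_gb: float, dtype: str) -> float:
    """Upper bound on parameters (in billions) whose weights fit in mem_gb.

    Assumes 1 GB = 1e9 bytes and counts weights only: activations, KV cache,
    and framework overhead are ignored, so real capacity is lower.
    """
    # (mem_gb * 1e9 bytes) / bytes_per_param / 1e9 simplifies to this:
    return mem_gb / BYTES_PER_PARAM[dtype]


if __name__ == "__main__":
    print("Memory across all MIG slices:", MIG_INSTANCES * MIG_SLICE_GB, "GB")
    for dtype in ("fp16", "fp8"):
        per_slice = max_params_billions(MIG_SLICE_GB, dtype)
        full_gpu = max_params_billions(TOTAL_MEMORY_GB, dtype)
        print(f"{dtype}: ~{per_slice:.0f}B params per slice, ~{full_gpu:.0f}B on the full GPU")
```

Under these assumptions, a single slice holds about 5B parameters in FP16 or about 10B in FP8, which is why lower precision matters when a GPU is partitioned.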

Comparison with the H100 Model

The H100 excels in double-precision (FP64) tasks and offers more than twice the NVLink bandwidth (900 GB/s versus the H800's 400 GB/s), whereas the H800 is a variant adapted to U.S. export restrictions for markets such as China. The cut in FP64 throughput from 34 TFLOPS to 1 TFLOPS severely limits the H800 for intensive scientific computing, making it better suited to AI and deep learning workloads that do not rely on double precision.
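The practical cost of these two cuts can be estimated with simple arithmetic. The sketch below uses the standard bandwidth term of a ring all-reduce, in which each of N GPUs transfers 2(N-1)/N times the payload, plus a peak-throughput estimate for FP64 work. It is a back-of-the-envelope model under stated assumptions: the quoted NVLink figures are treated as the effective per-GPU rate (they are aggregate numbers, so absolute times are optimistic), and latency, overlap with compute, and topology are ignored. The 8-GPU, 10 GB gradient, 100 TFLOP workload is hypothetical.

```python
def ring_allreduce_seconds(payload_gb: float, num_gpus: int, link_gbs: float) -> float:
    """Bandwidth-only time estimate for a ring all-reduce.

    Each GPU sends (and receives) 2 * (N - 1) / N * payload bytes.
    Latency, compute overlap, and topology effects are ignored.
    """
    if num_gpus < 2:
        return 0.0  # nothing to exchange on a single GPU
    return 2 * (num_gpus - 1) / num_gpus * payload_gb / link_gbs


def fp64_seconds(work_tflop: float, fp64_tflops: float) -> float:
    """Time to finish a fixed amount of FP64 work at peak throughput."""
    return work_tflop / fp64_tflops


if __name__ == "__main__":
    # Hypothetical job: 8 GPUs exchanging 10 GB of gradients, plus 100 TFLOP of FP64 work.
    for gpu, nvlink_gbs, fp64 in (("H100", 900, 34), ("H800", 400, 1)):
        t_comm = ring_allreduce_seconds(10, 8, nvlink_gbs)
        t_fp64 = fp64_seconds(100, fp64)
        print(f"{gpu}: all-reduce ~{t_comm * 1e3:.1f} ms, FP64 job ~{t_fp64:.1f} s")
```

Whatever the payload, this bandwidth-only model predicts each H800 gradient exchange taking 900/400 = 2.25 times as long as on the H100, and any FP64-bound job running 34 times longer.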

Use Cases and Benefits

- Large Language Models (LLMs): With FP8 support and 3.35 TB/s of memory bandwidth, the H800 is designed to accelerate training and inference of models such as GPT and BERT.

- Real-Time Data Analysis: Companies can leverage the power of the H800 for tasks such as fraud detection, predictive analysis, and logistics optimization.

- Enterprise Scalability: Because the H800 is compatible with NVIDIA AI Enterprise, companies can deploy advanced AI workflows backed by technical support and regular updates.
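Memory bandwidth matters most for LLM inference, where generating each token at batch size 1 typically requires reading every model weight from memory once. The sketch below computes that roofline-style upper bound on tokens per second. The 7B-parameter model is a hypothetical example, and KV-cache traffic, kernel overhead, and less-than-peak bandwidth utilization are ignored, so real throughput will be lower.

```python
# Memory bandwidth in TB/s, taken from the comparison table.
MEM_BW_TBS = {"A100": 2.039, "A800": 2.039, "H100": 3.35, "H800": 3.35}


def decode_tokens_per_second_bound(params_billions: float, bytes_per_param: float,
                                   mem_bw_tbs: float) -> float:
    """Upper bound for batch-1 autoregressive decoding.

    Assumes every generated token streams all weights from memory once and that
    bandwidth, not compute, is the bottleneck.
    """
    weight_bytes = params_billions * 1e9 * bytes_per_param
    return mem_bw_tbs * 1e12 / weight_bytes


if __name__ == "__main__":
    # Hypothetical 7B-parameter model with FP16 (2-byte) or FP8 (1-byte) weights.
    for gpu, dtype, nbytes in (("A800", "fp16", 2), ("H800", "fp16", 2), ("H800", "fp8", 1)):
        rate = decode_tokens_per_second_bound(7, nbytes, MEM_BW_TBS[gpu])
        print(f"{gpu} {dtype}: at most ~{rate:.0f} tokens/s")
```

For this example the bound is about 146 tokens/s on the A800 with FP16 weights, about 239 on the H800 with FP16, and about 479 on the H800 with FP8, showing how bandwidth and FP8 compound.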

Final Considerations

The NVIDIA H800 combines performance, security, and flexibility for enterprise AI workloads. Although it falls well short of the H100 in double-precision scientific applications, its design and performance make it a strong choice for companies that want to maximize efficiency in AI tasks while complying with export regulations.

For organizations seeking a balance between performance and adaptability, the H800 is a strategic option in the competitive AI and deep learning market.