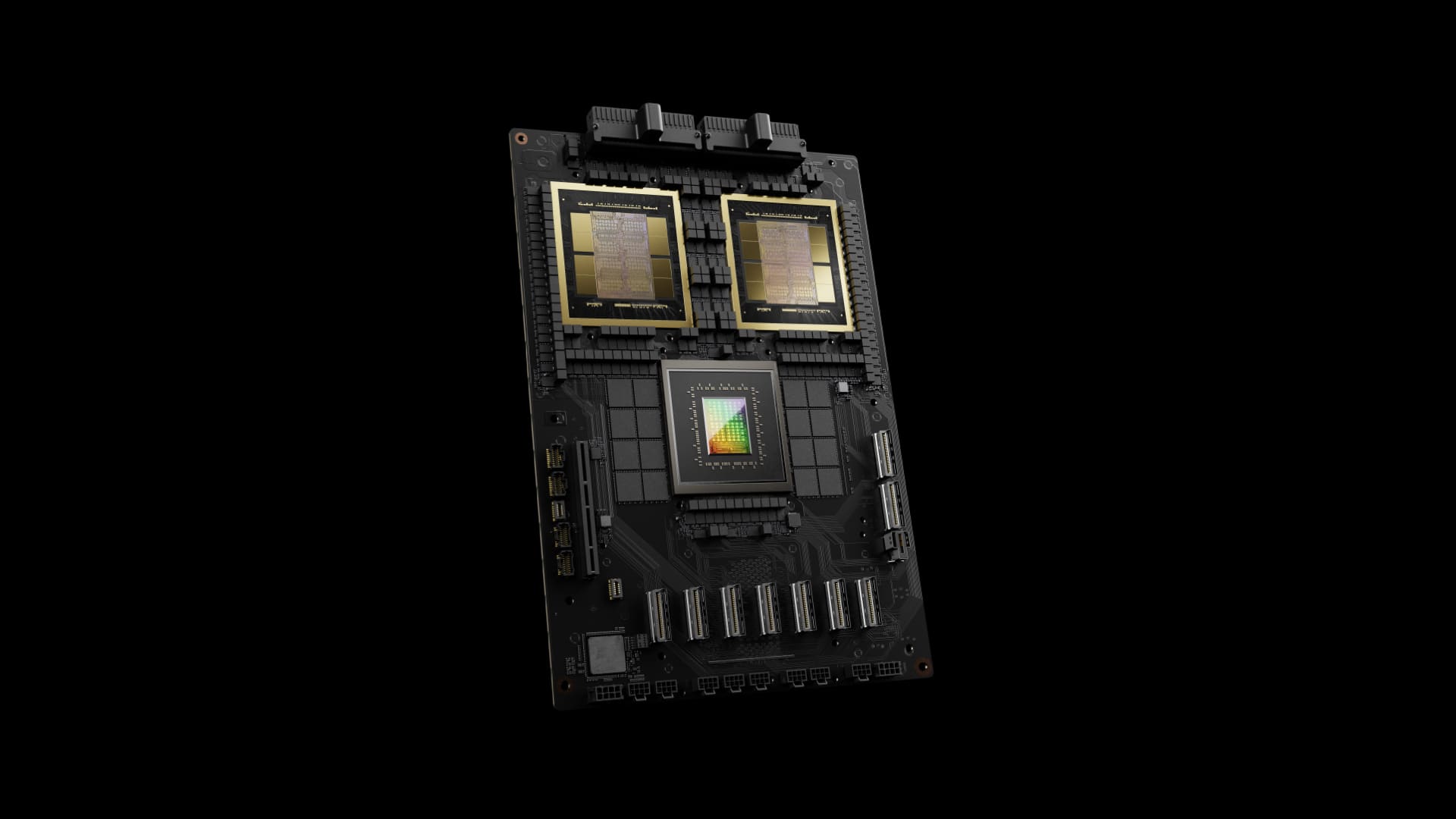

NVIDIA continues to set the pace in the artificial intelligence (AI) accelerator market with the announcement of the GB300 Blackwell Ultra, a model designed to push current performance boundaries. The new component stands out not only for its 288 GB of HBM3E memory but also for its striking power consumption of 1,400 watts, making it one of the most powerful, and most power-hungry, modules on the market.

Key Features of the NVIDIA GB300 Blackwell Ultra

- Next-Generation Memory: The GB300 comes equipped with 288 GB of HBM3E memory, a 50% increase over the 192 GB of its predecessor, the GB200. To reach this capacity, NVIDIA uses memory stacks with 12 layers, 50% more layers than in previous generations.

- Extreme Performance: With 1.5 times the FP4 performance of the GB200, the GB300 is built to meet the requirements of the most demanding advanced AI models. This matters in a landscape where large language models and other deep learning workloads require vast amounts of data and processing power.

- Redesigned Cooling System: To manage its high energy consumption, the GB300 uses a completely redesigned cooling system with liquid cold plates and quick-disconnect fittings to keep temperatures under control in data centers. These improvements are essential to prevent overheating in high-density environments.

- Improved Interconnect Speed: The GB300 features ConnectX-8 network cards, which double the data transfer speed from 800 Gbps to 1.6 Tbps. This keeps data flowing steadily and efficiently, a crucial requirement for large-scale inference and training systems.

- Compatibility with LPCAMM Memory: The GB300 is also compatible with LPCAMM memory, a compact module format that offers better performance and lower energy consumption. The same qualities make LPCAMM attractive for other devices, such as laptops and consoles.
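The generational figures in the list above are simple ratios, so a few lines of arithmetic can check them. This is a back-of-the-envelope sketch, not a benchmark: it uses only the numbers quoted in this article, infers an 8-layer baseline from the stated "50% more" layers, and assumes an ideal 1.6 Tbps link with no protocol overhead when estimating how long moving the GB300's full 288 GB of memory would take. The helpers `ratio` and `transfer_seconds` are hypothetical names chosen for illustration.

```python
# Back-of-the-envelope check of the generational ratios quoted in the article.
# Assumptions: the 8-layer baseline is inferred from "12 layers, 50% more";
# transfer times assume an ideal link with zero protocol overhead.

GB200_HBM_GB = 192
GB300_HBM_GB = 288
PREV_STACK_LAYERS = 8      # inferred baseline (12 is 50% more than 8)
GB300_STACK_LAYERS = 12
OLD_LINK_GBPS = 800        # 800 Gbps
NEW_LINK_GBPS = 1600       # 1.6 Tbps


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


def transfer_seconds(size_gb: float, link_gbps: float) -> float:
    """Ideal time to move `size_gb` gigabytes over a `link_gbps` link (8 bits per byte)."""
    return size_gb * 8 / link_gbps


memory_ratio = ratio(GB300_HBM_GB, GB200_HBM_GB)                    # 1.5
layer_increase = ratio(GB300_STACK_LAYERS, PREV_STACK_LAYERS) - 1  # 0.5, i.e. 50%
link_ratio = ratio(NEW_LINK_GBPS, OLD_LINK_GBPS)                    # 2.0

print(f"HBM capacity: {memory_ratio:.1f}x")
print(f"Stack height: +{layer_increase:.0%}")
print(f"Link speed:   {link_ratio:.1f}x")
print(f"288 GB at 800 Gbps: {transfer_seconds(GB300_HBM_GB, OLD_LINK_GBPS):.2f} s")
print(f"288 GB at 1.6 Tbps: {transfer_seconds(GB300_HBM_GB, NEW_LINK_GBPS):.2f} s")
```

Under these assumptions the script reports a 1.5x memory increase, 50% more stack layers, a 2.0x link speed, and ideal transfer times of 2.88 s versus 1.44 s. Real transfers would be slower once protocol and software overheads are included.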

A System Designed for High-Capacity Data Centers

The GB300 will be integrated into servers such as the GB300 NVL72, which combines 36 Grace CPUs and 72 GB300 GPUs in a single rack. These systems are designed to maximize inference and training performance, delivering speeds up to 30 times greater than previous-generation systems based on the NVIDIA H100.
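Multiplying the per-GPU figures by the NVL72's GPU count gives a rough sense of scale for a single rack. This is a hedged sketch under stated assumptions: the 1,400 W figure is treated as a per-GPU value, CPU, networking, and cooling power are ignored, and capacities use decimal terabytes. `RackConfig` is a hypothetical helper for illustration, not an NVIDIA API.

```python
# Rough rack-level totals for a GB300 NVL72, using the article's figures.
# Assumptions: 1,400 W applies to each GPU; CPU, networking, and cooling
# power are excluded; 1 TB = 1,000 GB.
from dataclasses import dataclass


@dataclass(frozen=True)
class RackConfig:
    grace_cpus: int
    gpus: int
    hbm_per_gpu_gb: int
    watts_per_gpu: int

    def gpus_per_cpu(self) -> float:
        """GPUs paired with each Grace CPU."""
        return self.gpus / self.grace_cpus

    def total_hbm_tb(self) -> float:
        """Aggregate GPU memory across the rack, in decimal terabytes."""
        return self.gpus * self.hbm_per_gpu_gb / 1000

    def gpu_power_kw(self) -> float:
        """Power drawn by the GPU modules alone, in kilowatts."""
        return self.gpus * self.watts_per_gpu / 1000


nvl72 = RackConfig(grace_cpus=36, gpus=72, hbm_per_gpu_gb=288, watts_per_gpu=1400)
print(f"GPUs per Grace CPU: {nvl72.gpus_per_cpu():.0f}")
print(f"Aggregate HBM3E:    {nvl72.total_hbm_tb():.1f} TB")
print(f"GPU power alone:    {nvl72.gpu_power_kw():.1f} kW")
```

Under those assumptions the rack pairs 2 GPUs with each Grace CPU, holds about 20.7 TB of HBM3E, and draws roughly 100.8 kW for the GPUs alone. That scale helps explain the emphasis on liquid cooling and power-stability hardware in systems of this class.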

Additionally, GB300 systems include capacitor trays and an optional battery backup unit (BBU), both essential for maintaining operational stability in critical infrastructure. However, these enhancements significantly increase costs, and global sales of these systems could potentially exceed $200 billion.

NVIDIA and Its Leadership in AI

The Blackwell architecture has been key to maintaining NVIDIA’s leadership in the AI market. Since the architecture's unveiling at GTC 2024, the B100 and B200 GPUs have shown a significant leap in performance and attracted the attention of major clients. The GB300 represents the next step in that evolution, consolidating NVIDIA’s position as a leader in AI accelerators.

Implications of the GB300 for the Future of AI

With the GB300, NVIDIA seeks not only to meet current demand for artificial intelligence hardware but also to set the standard for the next decade. Its advanced capabilities make it an essential component for large data centers and AI projects that require unprecedented processing power.

Although its high energy consumption poses efficiency challenges, its performance and ability to handle complex workloads make it an indispensable tool for companies looking to stay at the forefront of technological innovation.