India wants to compete in the top tier of Artificial Intelligence, and this week it made that clear from New Delhi. During the AI Impact Summit, NVIDIA strengthened its presence in the country with a series of agreements and collaborations aimed at three goals: deploying large-scale computing capacity, advancing sovereign models, and bringing AI to real industries, from government and public services to manufacturing, banking, and telecommunications.

The backdrop is the IndiaAI Mission, a government initiative with an allocation of ₹10,371.92 crore (more than US$1 billion) designed to create a national base of infrastructure, talent, and data that enables AI adoption to scale across the country.
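For readers unfamiliar with the crore unit, the allocation figure above can be converted with simple arithmetic. The sketch below is illustrative only: the function name `crore_inr_to_usd` and the three exchange rates are assumptions, not figures from the announcement, and the result shifts with whatever rate is used.

```python
CRORE = 10_000_000  # 1 crore = 10 million in the Indian numbering system


def crore_inr_to_usd(amount_crore: float, inr_per_usd: float) -> float:
    """Convert an amount expressed in crore rupees to US dollars."""
    if inr_per_usd <= 0:
        raise ValueError("inr_per_usd must be positive")
    return amount_crore * CRORE / inr_per_usd


ALLOCATION_CRORE = 10_371.92  # IndiaAI Mission allocation cited in the article

# Hypothetical exchange rates (rupees per dollar), for illustration only.
for rate in (83.0, 87.0, 91.0):
    usd = crore_inr_to_usd(ALLOCATION_CRORE, rate)
    print(f"at INR {rate:.0f} per USD: about US${usd / 1e9:.2f} billion")
```

Across that assumed range the allocation stays between roughly US$1.1 billion and US$1.3 billion, which is consistent with the "more than US$1 billion" description.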

From “pilot” to infrastructure: the leap AI demands at a national scale

For years, the AI debate revolved around proofs of concept, corporate chatbots, and selective automation. In 2026, the conversation has shifted: the bottleneck is no longer just the algorithm but the infrastructure (GPUs, networks, energy, cooling, supply chains, and 24/7 operations) and the ability to integrate everything securely and reliably.

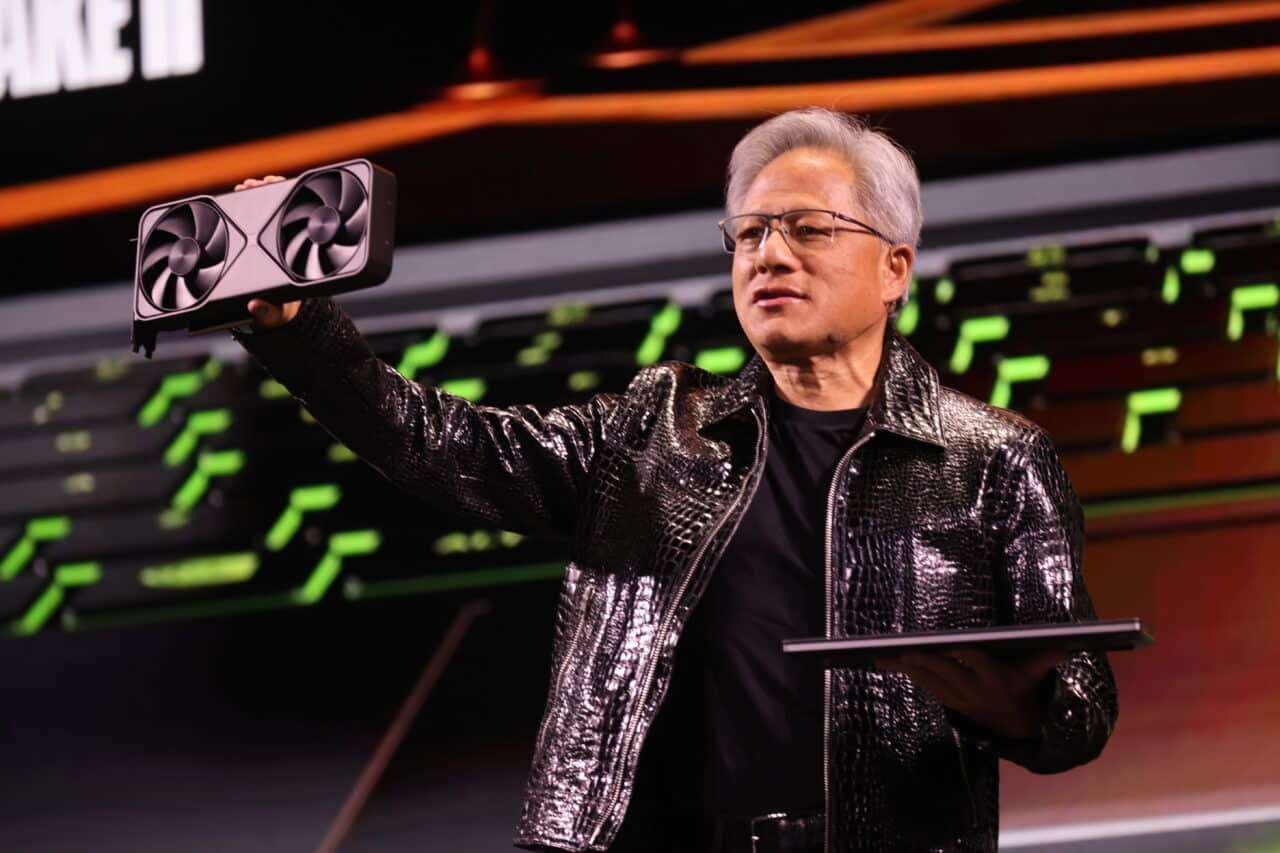

It is in this context that the announcements around “AI factories” arrive. The term is increasingly used to describe centers and platforms designed for industrial-scale training and inference of models, with data pipelines, MLOps, observability, governance, and large-scale deployment capabilities.

Key partnerships: L&T, Yotta, and E2E Networks

Among the most notable moves in India is the collaboration with Larsen & Toubro (L&T), which aims to boost AI infrastructure in the country with significant power capacity, including references to facilities in the tens of megawatts. That signals a focus beyond labs and toward sustained operational capacity.

Meanwhile, Yotta Data Services has tied its roadmap to the availability of next-generation hardware: it plans to deploy around 20,000 Blackwell processors on its AI cloud platform, within an investment framework that aims to reach billions of dollars.

Another key player is E2E Networks, which has introduced a strategy centered on building GPU clusters to serve companies, developers, and local projects. Its narrative is framed around “sovereignty”: infrastructure and operational capacity under national control and jurisdiction.

The “industrial” layer: sovereign AI, local models, and adoption in critical sectors

The national mission isn’t just about hardware. Its broader approach involves facilitating access to computing, promoting “Made in India” datasets and models, strengthening the startup ecosystem, and enabling sector-specific adoption. In practice, this translates into a race to build internal capabilities — from language models and multimodal models to complete stacks for inference, RAG, agents, and enterprise automation.

NVIDIA emphasizes that the country needs infrastructure to bring AI into high-impact economic use cases — and also into sensitive sectors — without relying solely on external providers. Coverage of the event highlights collaborations and plans around “AI factories” and massive GPU deployments as part of this scaling shift.

Implications for companies and developers

For the market, these agreements send a clear message: more capacity available in-region means less friction to train, fine-tune, and deploy models, along with more predictable access to inference infrastructure, especially for low-latency applications. For developers, the implications are twofold:

- Standardized ecosystems and tooling: As infrastructure consolidates around “de facto” stacks, it becomes easier to port workloads, automate deployments, and monitor them.

- Greater focus on inference and agents: The growth of assistants and agent-based workflows pushes for optimization of token costs, latency, and orchestration, not just model size. Achieving this often involves combining GPUs with networking, storage, and a highly mature operational layer.

Real limits: energy, operations, and the economics of compute

The less glamorous side of the announcement is that deploying AI at a national scale involves overcoming very tangible constraints. High-density data centers demand stable power, efficient cooling, equipment availability, operational talent, and solid maintenance infrastructure. In emerging markets, even with political support, execution depends on construction timelines, permits, electrical interconnection, and attracting enough customers to amortize the investment.

India is trying to get ahead of these constraints by building a national compute “substrate,” but success will depend on whether access to GPUs translates into measurable productivity: higher service exports, more industrial automation, greater competitiveness, and startups capable of scaling.

What seems clear is that AI is no longer just a software trend. In 2026, it’s a matter of infrastructure investment, with decisions comparable to those made in the telecommunications, energy, or digital transportation sectors.