NVIDIA has turned Open Source AI Week into a showcase for its strategy of driving innovation in artificial intelligence through open source. The company emphasizes three levers: community, tools, and shared data. It backs this up with concrete announcements for PyTorch developers, aligned with a key shift this year: Python is becoming a first-class language on the CUDA platform, significantly lowering the barrier to using GPUs from Python environments.

The narrative is clear and production-oriented: CUDA Python arrives with built-in support for kernel fusion, extension modules, and simplified packaging. Alongside it, nvmath-python acts as a bridge between Python code and highly optimized GPU libraries like cuBLAS, cuDNN, and CUTLASS. The result: less homemade “glue” code, less friction when compiling extensions, and a shorter path from idea to deployment.

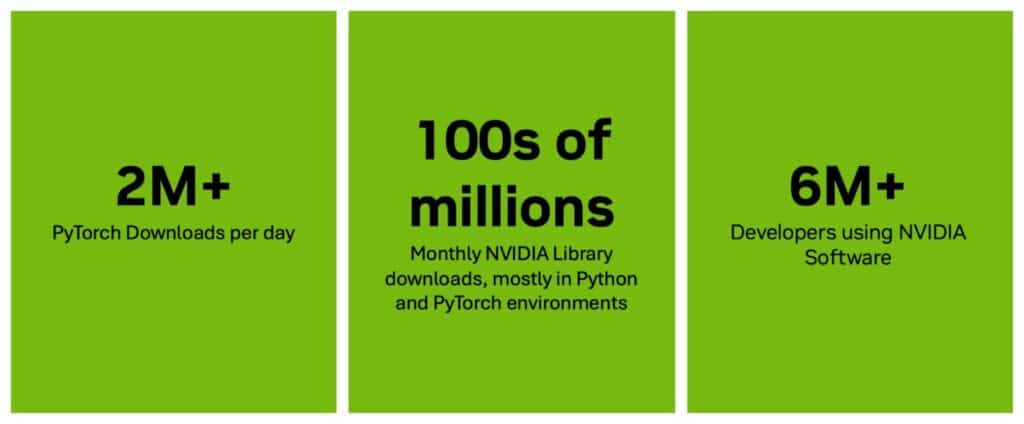

The message is backed by figures that show real traction. According to data cited by the company, PyTorch averaged more than 2 million daily downloads on PyPI last month, peaking at 2,303,217 on October 14, for a monthly total of 65 million. Each month, developers also download hundreds of millions of NVIDIA libraries (CUDA, cuDNN, cuBLAS, CUTLASS), mostly within Python and PyTorch environments. The ecosystem this strategy targets now exceeds 6 million developers.

PyTorch + CUDA Python: High-Octane Productivity

For years, Python has been the lingua franca of AI thanks to rapid iteration and an unbeatable ecosystem of scientific libraries. The hurdle came when moving to the GPU: writing kernels, compiling extensions, and managing dependencies required highly specialized skills. NVIDIA aims to remove that bottleneck by putting Python at the center of its accelerated computing platform:

- Kernel fusion to reduce memory trips and latency.

- Seamless integration with extension modules, avoiding toolchain conflicts.

- Predictable packaging and deployment (wheels, containers), shortening the path from notebook to production.

- nvmath-python as an idiomatic access layer to optimized math routines (BLAS operations such as GEMM, convolutions, reductions), without rewriting blocks in C/C++.

For teams working with PyTorch, the appeal is clear: less friction in creating custom operators or integrating optimizations, more time on model and data architecture, and a more direct path to production without sacrificing performance.
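To make the kernel-fusion point concrete, here is a minimal, CPU-only sketch of what fusion saves. It assumes a hypothetical element-wise pipeline `out = relu(a * x + b)`, uses plain Python lists as stand-ins for GPU buffers, and relies on a hypothetical `MemoryCounter` to tally element reads and writes to "global memory". It is not CUDA Python or PyTorch code; it only illustrates why merging three kernels into one cuts memory traffic.

```python
"""Toy illustration of kernel (loop) fusion for out = relu(a * x + b).

Plain Python lists stand in for GPU buffers, and the hypothetical
MemoryCounter tallies element reads/writes to "global memory".
This is not CUDA Python or PyTorch code.
"""
from dataclasses import dataclass


@dataclass
class MemoryCounter:
    reads: int = 0
    writes: int = 0


def unfused(x: list[float], a: float, b: float, mem: MemoryCounter) -> list[float]:
    # Three separate "kernels", each materializing a full intermediate buffer.
    y = []
    for v in x:  # kernel 1: y = a * x
        mem.reads += 1
        y.append(a * v)
        mem.writes += 1
    z = []
    for v in y:  # kernel 2: z = y + b
        mem.reads += 1
        z.append(v + b)
        mem.writes += 1
    out = []
    for v in z:  # kernel 3: out = relu(z)
        mem.reads += 1
        out.append(max(v, 0.0))
        mem.writes += 1
    return out


def fused(x: list[float], a: float, b: float, mem: MemoryCounter) -> list[float]:
    # One "kernel": each input is read once, intermediates live only in
    # local variables (registers, on a GPU), and each result is written once.
    out = []
    for v in x:
        mem.reads += 1
        out.append(max(a * v + b, 0.0))
        mem.writes += 1
    return out


if __name__ == "__main__":
    x = [-2.0, -0.5, 0.0, 1.0, 3.0]
    m_unfused, m_fused = MemoryCounter(), MemoryCounter()
    same = unfused(x, 2.0, 1.0, m_unfused) == fused(x, 2.0, 1.0, m_fused)
    print(same, m_unfused, m_fused)
    # True MemoryCounter(reads=15, writes=15) MemoryCounter(reads=5, writes=5)
```

Both versions return the same values, but for five elements the unfused pipeline performs three times the memory traffic. On a real GPU the saving comes from keeping intermediates in registers instead of round-tripping through device memory, and from launching one kernel instead of three.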

A Week of Open Source… with a Roadmap

Open Source AI Week kicks off with hackathons, workshops, and meetups dedicated to the latest advances in AI and machine learning. The goal is to bring together organizations, researchers, and open communities to share practices, co-create tools, and explore how openness accelerates development. Beyond participating and sponsoring, NVIDIA highlights the breadth of its open offerings:

- Over 1,000 open source tools in its GitHub repositories.

- More than 500 models and 100 datasets in its Hugging Face collections.

- A prominent contributor to Hugging Face repositories over the past year.

The week culminates symbolically with PyTorch Conference 2025 in San Francisco, where NVIDIA has lined up a keynote, five technical sessions, and nine posters. The underlying message: if PyTorch is the industry’s common language, NVIDIA wants to be at the core of its evolution, including compilers, runtimes, kernels, and future GPU frameworks.

Why It Matters to a Tech Outlet (and Its Readers)

1) Less friction to deploy real AI

Technical teams are no longer measured by how much boilerplate integration code they write but by the value they deliver: models that solve problems, stable services, and predictable latencies. If CUDA Python simplifies extensions, packaging, and access to high-performance libraries, time-to-production shrinks. That translates into faster iterations, fewer infrastructure issues, and more focus on the product.

2) A de facto industry standard

PyTorch’s reach (65 million downloads in a single month) turns any improvement in developer experience into a multiplier. By offering an official, consistent route to accelerating Python on GPUs, NVIDIA reduces the need for per-company homegrown solutions, improves project portability, and cuts maintenance costs in the medium term.

3) Genuine open source, not just posturing

The open catalog (tools, models, datasets) has two practical effects:

- Reproducibility: solid starting points that prevent “reinventing the wheel” and shorten validation cycles.

- Talent attraction: contributing to visible repositories and working with community-adopted stacks facilitate hiring and onboarding.

nvmath-python: the missing piece

Although it might sound like a side project, nvmath-python fills a real gap: it provides a stable, efficient path from Python to the platform’s core math libraries. Implications include:

- Direct access to critical operations (GEMM, convolutions) through vendor-maintained bindings.

- Fewer fragile dependencies: less “compiler dance” and fewer version conflicts.

- Improved ergonomics for kernel fusion and tuning without leaving Python.

For those maintaining custom PyTorch operators or integrating proprietary numeric blocks, this reduces technical debt and integration costs.
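For readers less familiar with the terminology, "gemm" is the BLAS general matrix-matrix multiply, which computes `C = alpha * (A @ B) + beta * C`. The naive pure-Python reference below only shows what that operation computes; `gemm_reference` is a hypothetical helper, not nvmath-python’s API. Libraries such as cuBLAS compute the same result with tiled, highly tuned GPU kernels, and that performance is what nvmath-python aims to expose from Python.

```python
"""Naive reference GEMM: returns alpha * (A @ B) + beta * C.

Hypothetical helper for illustration only; it is not nvmath-python's API.
Matrices are lists of rows: A is m x k, B is k x n, C is m x n.
"""

Matrix = list[list[float]]


def gemm_reference(alpha: float, A: Matrix, B: Matrix, beta: float, C: Matrix) -> Matrix:
    m, k = len(A), len(A[0])
    n = len(B[0])
    # Check that the shapes are compatible before doing any work.
    if len(B) != k or len(C) != m or any(len(row) != n for row in C):
        raise ValueError("incompatible shapes for GEMM")
    result: Matrix = []
    for i in range(m):
        row = []
        for j in range(n):
            # Dot product of row i of A with column j of B.
            acc = 0.0
            for p in range(k):
                acc += A[i][p] * B[p][j]
            # Scale the product and blend in the existing C entry.
            row.append(alpha * acc + beta * C[i][j])
        result.append(row)
    return result


if __name__ == "__main__":
    A = [[1.0, 2.0], [3.0, 4.0]]
    B = [[5.0, 6.0], [7.0, 8.0]]
    C = [[1.0, 1.0], [1.0, 1.0]]
    print(gemm_reference(2.0, A, B, 1.0, C))  # [[39.0, 45.0], [87.0, 101.0]]
```

Setting `beta = 0.0` reduces it to a plain scaled matrix product; the `beta * C` term is what lets optimized GEMM implementations fuse an accumulation into the same pass.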

And what about the elephant in the room? Portability and lock-in

Any advance centered on CUDA raises questions about portability. NVIDIA’s stance is clear: make CUDA Python the best experience when the stack is Python and the accelerator is a GPU. The balance with other backends will remain a topic of debate among architects. In practice, the answer will depend on two factors:

- Actual switching costs compared to multi-cloud/multi-backend alternatives.

- The maturity of competing tooling and runtimes, and whether they can match its ergonomics without losing performance.

What a CTO or platform lead should watch for

- Official templates for PyTorch extensions and kernel fusion.

- Reproducible deployment routes (wheels, containers) that minimize surprises in CI/CD.

- Reusable ecosystem of models and datasets to accelerate baseline development.

- Compatibility with current toolchains and version policies (avoiding abrupt breaking changes).

- Total cost of ownership: does CUDA Python truly shorten the dev → prod cycle? Does it improve latency and throughput without requiring hard-to-find specialists?

What the week subtly reveals

- The frontier is no longer “whether to use GPU” but how to integrate acceleration with minimum friction and maximum portability.

- Open source isn’t just a reputation channel: it’s where standards, APIs, and best practices are decided, ultimately shaping production.

- The competitive battle hinges on both development experience (rapid iteration) and performance (tokens/sec, inference cost, latency P50/P99).

In short, Open Source AI Week crystallizes one idea: as the community advances, the provider that best oils the machinery (with tooling, models, datasets, and technical presence) gains momentum. CUDA Python’s move is precisely about lubrication.

Frequently Asked Questions

What is CUDA Python, and how does it compare to writing kernels by hand?

It’s the integration of Python as a first-class language within CUDA, with tooling for kernel fusion, extensions, and packaging. Rather than requiring hand-written C/C++ kernels and extensions for every GPU path, it lets developers accelerate code directly from Python, with native access to optimized libraries (cuBLAS, cuDNN, CUTLASS), and helps shorten the transition from notebook to production.

What role does nvmath-python play in PyTorch projects?

It acts as a bridge between Python code and the GPU’s optimized math libraries. It minimizes integration “glue,” avoids compilation pain, and makes it easier to build custom operators with top-tier performance.

What data illustrates PyTorch’s growth and NVIDIA’s software adoption?

In the last month, PyTorch surpassed 65 million downloads on PyPI, averaging more than 2 million a day and peaking at 2,303,217 on October 14. Hundreds of millions of NVIDIA libraries are downloaded monthly, mostly in Python/PyTorch environments, and the company’s ecosystem exceeds 6 million developers.

How does the Open Source AI Week benefit a technical lead?

Access to ready-to-use examples and templates, direct contact with maintainers, better deployment pathways, and a gentler learning curve for using GPUs from Python. Economically, it means less friction, faster delivery, and less technical debt.

via: blogs.nvidia and Open Source AI Week