The recent Morgan Stanley Research report puts concrete figures on the AI inference business and confirms what the market suspected: NVIDIA holds a significant lead over its competitors, not only in hardware performance but also in profitability.

The study models a standardized 100 MW “AI inference factory,” comparable to a medium-sized data center, to estimate the total cost of ownership (TCO) and the revenue generated by processing tokens for language models and other AI applications.
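To make this accounting concrete, here is a minimal Python sketch of how such a model could turn token throughput into revenue and margin. It rests on two assumptions: revenue is simply tokens served multiplied by a price per token, and operating margin is (revenue − cost) / revenue. The function names and every input value are hypothetical placeholders. They are not figures or methodology from the Morgan Stanley report.

```python
# Hypothetical sketch of "AI inference factory" economics.
# All names and numbers are illustrative assumptions, not Morgan Stanley's model.

SECONDS_PER_YEAR = 365 * 24 * 3600


def annual_token_revenue(tokens_per_second: float, usd_per_million_tokens: float) -> float:
    """Revenue for one year of continuous serving (assumes 100% utilization)."""
    tokens_per_year = tokens_per_second * SECONDS_PER_YEAR
    return tokens_per_year / 1_000_000 * usd_per_million_tokens


def operating_margin(revenue: float, annual_cost: float) -> float:
    """Operating margin as a fraction: (revenue - cost) / revenue."""
    return (revenue - annual_cost) / revenue


if __name__ == "__main__":
    # Placeholder inputs for a hypothetical deployment.
    revenue = annual_token_revenue(tokens_per_second=10_000_000, usd_per_million_tokens=2.0)
    cost = 300_000_000.0  # hypothetical annualized TCO in USD
    print(f"revenue: ${revenue / 1e9:.2f}B, margin: {operating_margin(revenue, cost):.1%}")
```

A real model would also account for utilization, pricing tiers and depreciation schedules. The sketch only shows why throughput per watt feeds directly into margin: at a fixed cost, more tokens served means more revenue.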

The results show an average operating margin above 50% among the major providers, making inference one of the most profitable segments of the digital economy.

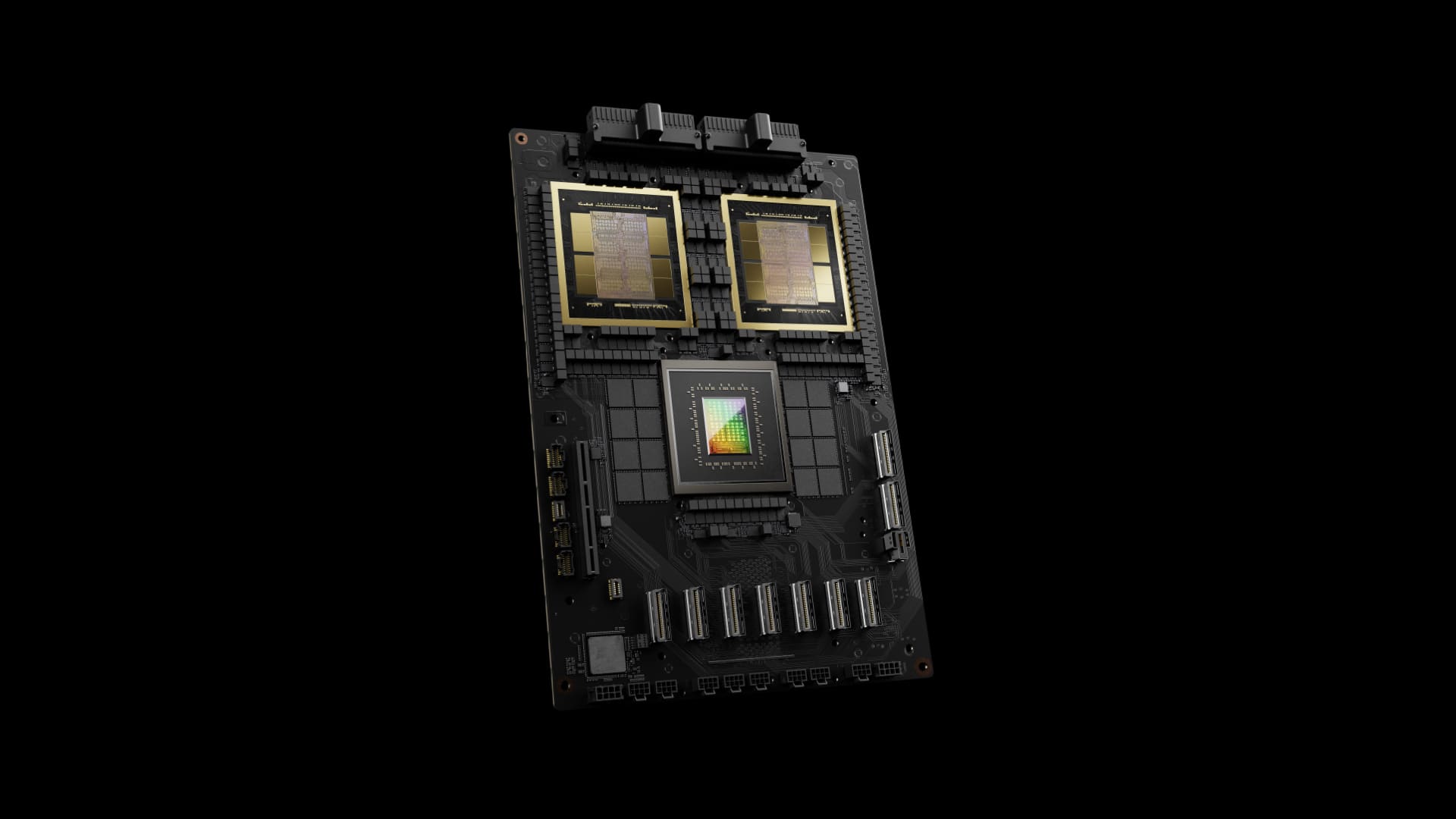

NVIDIA’s GB200 NVL72 (Blackwell) platform leads with a 77.6% margin and an estimated profit of $3.5 billion in a typical deployment. This figure reflects not only raw compute and memory capacity but also the strength of the CUDA ecosystem and optimizations for FP4, which lift efficiency per watt above that of any competitor.
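Simple arithmetic relates the two GB200 headline numbers. The sketch below assumes that the $3.5 billion is operating profit and that the 77.6% margin is profit divided by revenue. That reading is ours, not something the report states here. Under it, implied revenue and cost follow directly. The helper name is hypothetical.

```python
def implied_revenue_and_cost(profit: float, margin: float) -> tuple[float, float]:
    """Back out revenue and cost from profit and margin.

    Assumes margin = profit / revenue (an interpretation, not the report's stated method).
    """
    if margin == 0:
        raise ValueError("margin must be non-zero to infer revenue")
    revenue = profit / margin
    cost = revenue - profit
    return revenue, cost


revenue, cost = implied_revenue_and_cost(profit=3.5e9, margin=0.776)
print(f"implied revenue ≈ ${revenue / 1e9:.2f}B, implied cost ≈ ${cost / 1e9:.2f}B")
```

Under that reading, the figures imply roughly $4.5 billion of revenue against about $1.0 billion of cost. If the report defines profit or margin differently, these implied values will not match its own tables.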

Morgan Stanley also highlights the “fine wine” effect of NVIDIA’s GPUs: even older generations such as Hopper keep improving through software updates.

Google’s TPU v6e posts a 74.9% margin, showing how a cloud provider can monetize proprietary hardware paired with tightly integrated software. AWS’s Trn2 UltraServer follows at 62.5%, reinforcing Amazon’s strategy of diversifying away from NVIDIA’s dominance. Huawei’s CloudMatrix 384 also performs well at 47.9%, strengthening its position in the Asian market.

AMD, by contrast, is the biggest disappointment. Its latest platform, the MI355X, shows a margin of -28.2%, and the previous-generation MI300X falls to -64.0%. In this modeled scenario, the revenue these chips generate from inference does not cover their costs, so both run at a loss. Although AMD’s hardware has upfront costs similar to NVIDIA’s GB200, its inference workloads are less profitable because of a less mature software ecosystem and weaker optimization.
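A negative margin means each dollar of revenue costs more than a dollar to produce. Assume margin = (revenue − cost) / revenue, which is our assumption about the report's definition. Then cost per dollar of revenue is simply 1 − margin, and this makes the gap between platforms easy to see. The margins below are the ones quoted in this article. The dictionary and helper function are illustrative.

```python
# Operating margins quoted in the article, as fractions.
MARGINS = {
    "NVIDIA GB200 NVL72": 0.776,
    "Google TPU v6e": 0.749,
    "AWS Trn2 UltraServer": 0.625,
    "Huawei CloudMatrix 384": 0.479,
    "AMD MI355X": -0.282,
    "AMD MI300X": -0.640,
}


def cost_per_revenue_dollar(margin: float) -> float:
    """Cost incurred per $1 of revenue, assuming margin = (revenue - cost) / revenue."""
    return 1.0 - margin


# Print platforms from highest to lowest margin, flagging loss-making ones.
for platform, margin in sorted(MARGINS.items(), key=lambda kv: kv[1], reverse=True):
    ratio = cost_per_revenue_dollar(margin)
    status = "loss-making" if ratio > 1 else "profitable"
    print(f"{platform:<24} margin {margin:+.1%}  cost per $1 revenue: ${ratio:.2f}  ({status})")
```

Under this reading, the MI300X spends about $1.64 for every dollar of inference revenue, versus roughly $0.22 for the GB200 NVL72.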

The report projects that inference will account for 85% of the AI market in the coming years, further solidifying NVIDIA’s leadership. The company is already developing its next architecture, Rubin, expected to launch in 2026, to be followed by Rubin Ultra and Feynman.

AMD plans to respond with the MI400, designed to compete directly with Rubin, while promoting UALink, an open interconnect standard intended as an alternative to NVLink. However, ecosystem adoption remains uncertain.

The conclusion is clear: NVIDIA dominates both technically and financially. By delivering significantly higher returns at similar costs, it is the preferred choice for data center operators and AI service providers. Google and AWS show that hyperscalers with proprietary hardware can compete profitably, while Huawei remains profitable in its domestic market. AMD faces a gap that hardware improvements alone cannot close; it needs a software ecosystem as mature as CUDA.

FAQs:

1. What is an “AI inference factory”?

It’s a conceptual 100 MW data center model dedicated to AI inference, used by Morgan Stanley to compare costs, revenues, and margins across different platforms.

2. Why does NVIDIA achieve such high margins?

Because of advanced hardware (the GB200 Blackwell platform), FP4 support, and a mature, optimized software ecosystem (CUDA), which together maximize hardware efficiency.

3. What roles do Google and AWS play in this market?

Both achieve margins above 60% with proprietary architectures, showing that vertical integration can rival NVIDIA in profitability.

4. Why is AMD losing money on inference?

Despite powerful chips, AMD lacks a mature software ecosystem and optimizations comparable to CUDA’s. This significantly reduces inference efficiency and results in negative margins.

5. What does this imply for the future of the AI market?

Since inference is expected to account for 85% of the future AI market, NVIDIA is likely to maintain its dominance unless AMD or others close the software and efficiency gap.