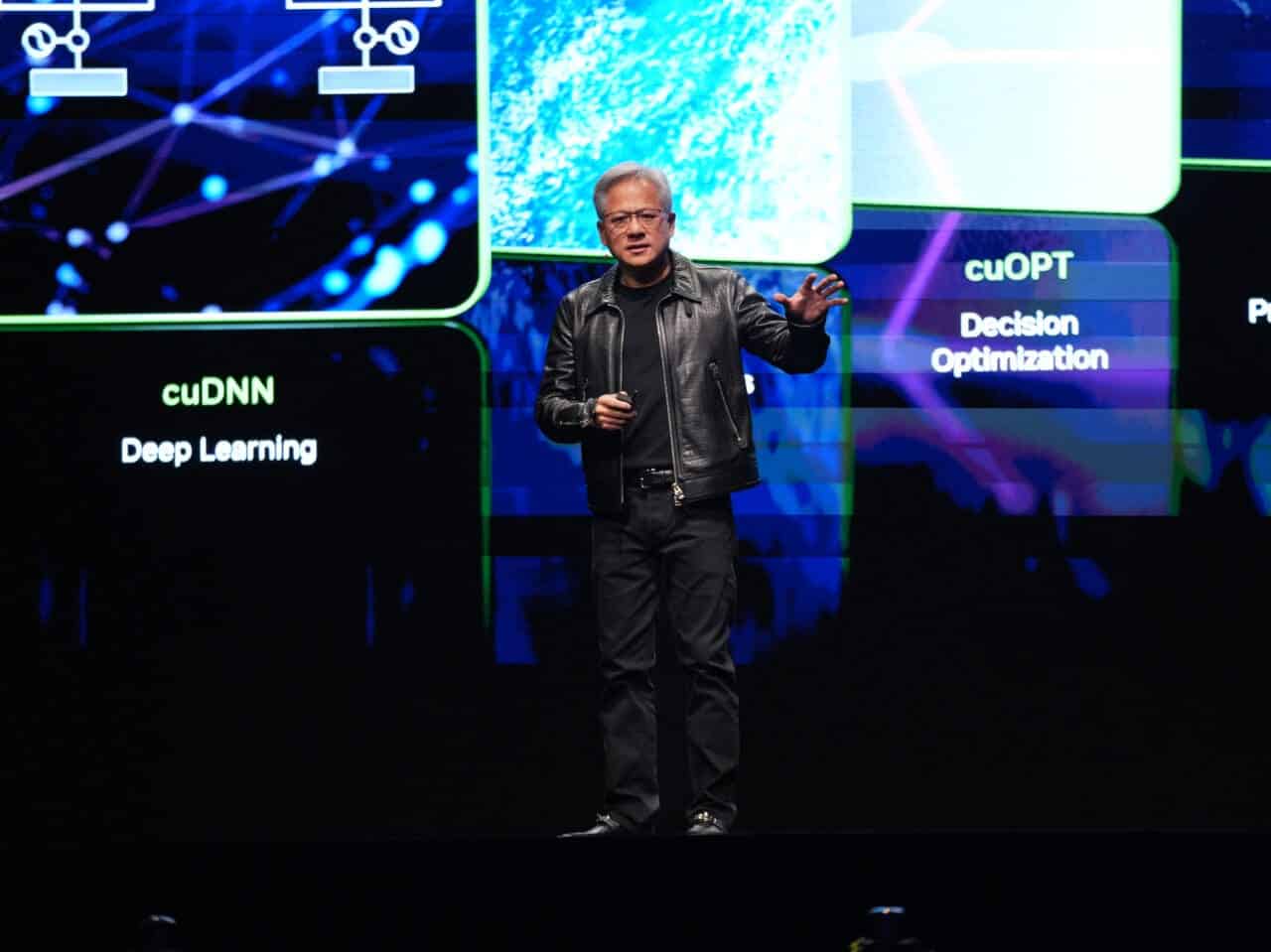

Jensen Huang, CEO of NVIDIA, has raised an increasingly relevant debate in the tech sector: Could ASICs dethrone GPUs as the standard for artificial intelligence? While NVIDIA’s GPUs are currently the heart of training and inference for the most advanced AI models, the advancement of custom-designed ASICs by companies like Google, Amazon, and OpenAI could transform the market in just a few years.

GPU vs. ASIC: The Technological Battle for Artificial Intelligence

GPUs (graphics processing units) have traditionally been the favorites for training AI models. Their main advantage lies in their versatility: they can perform large-scale parallel operations, adapt to multiple workloads, and are supported by a vast ecosystem of tools and libraries (such as NVIDIA's CUDA platform). This makes them the ideal choice for researching and developing new models.

In contrast, ASICs (application-specific integrated circuits) are designed for specific tasks. GPUs can handle almost any workload, though not always with maximum efficiency, whereas ASICs are built to perform one particular function with the highest possible performance. In AI, this means that an ASIC can process inference or training tasks with lower energy consumption and a lower cost per operation, at the price of less flexibility.

Advantages and Disadvantages: GPU vs. ASIC

| Feature | GPU (NVIDIA) | ASIC |
|---|---|---|
| Versatility | Highly versatile; adaptable to different tasks | Limited to specific functions |
| Ecosystem | Extensive software support (CUDA, TensorRT, PyTorch) | Closed ecosystem; requires custom development |
| Raw Performance | High in general AI tasks and graphics | Maximum performance in specific tasks |
| Cost per Unit | High, especially in high-end models | Lower, but requires initial investment in design |
| Energy Consumption | High, especially in prolonged operations | Much more energy-efficient |
| Future Flexibility | Adaptable to future enhancements and models | Obsolete if the specific task changes or evolves |
| Availability | Affected by supply issues | Dependent on in-house production, no intermediaries |
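
The "Cost per Unit" row implies a break-even point: an ASIC only pays off once enough chips are deployed to recover the up-front design investment. The sketch below illustrates that arithmetic in Python. The function name `break_even_units` and every dollar figure are hypothetical placeholders rather than real prices, and the model assumes, for simplicity, that one ASIC replaces one GPU.

```python
import math


def break_even_units(asic_design_cost, asic_unit_cost, gpu_unit_cost):
    """Return the smallest number of chips at which the ASIC route costs
    no more in total than buying GPUs, or None if it never pays off.

    Simplifying assumption: one ASIC replaces exactly one GPU.
    """
    saving_per_unit = gpu_unit_cost - asic_unit_cost
    if saving_per_unit <= 0:
        # The ASIC is not cheaper per unit, so the design cost is never recovered.
        return None
    return math.ceil(asic_design_cost / saving_per_unit)


# Hypothetical figures chosen only to illustrate the calculation.
units = break_even_units(
    asic_design_cost=100_000_000,  # one-off design investment
    asic_unit_cost=5_000,          # cost of each ASIC once designed
    gpu_unit_cost=25_000,          # cost of each GPU
)
print(units)  # 5000
```

Under these made-up numbers, the custom chip becomes the cheaper option from 5,000 units onward. This is consistent with the article's point that the companies pursuing ASICs are those that would otherwise order GPUs by the tens of thousands.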

NVIDIA’s Position and Fear of a Paradigm Shift

During his remarks, Jensen Huang noted that ASICs are still not competitive against GPUs in complex training tasks, but he did not hide his concern:

“If companies start mass-producing ASICs and these reach competitive levels in training and inference, our leadership could be threatened.”

Companies such as Google (with its TPUs), Amazon, and Microsoft are already developing their own AI chips. Even OpenAI has begun designing its own ASICs to reduce its dependence on NVIDIA.

The Risk for NVIDIA

If companies achieve equal or superior results using custom-designed chips, NVIDIA’s business model could be severely impacted. The market could shift from orders of tens of thousands of GPUs to a focus on more efficient proprietary solutions, leaving NVIDIA with excess production and a drop in sales that would affect its stock valuation.

Conclusion: Who Will Win the Race?

For now, GPUs remain the most flexible and widely used solution. However, optimization and efficiency are gaining importance in a sector increasingly concerned about costs and energy sustainability. ASICs pose a latent threat that NVIDIA cannot ignore. The big question is whether the company will be able to reinvent itself to maintain its dominance, or whether tech giants will prefer to invest in customized solutions that reduce their dependence on a single supplier.

The future of artificial intelligence hardware is at stake, and the battle between GPUs and ASICs has only just begun.

Source: Artificial Intelligence News