High-performance computing is essential for the development of artificial intelligence. NVIDIA has announced that its Blackwell GB200 NVL72 platform is now available in the cloud through CoreWeave, the first cloud service provider to offer these instances. The infrastructure, designed to scale to as many as 110,000 GPUs, is optimized for AI reasoning models and autonomous agents and provides the capacity needed for real-time response generation.

Scalable Infrastructure for the AI of the Future

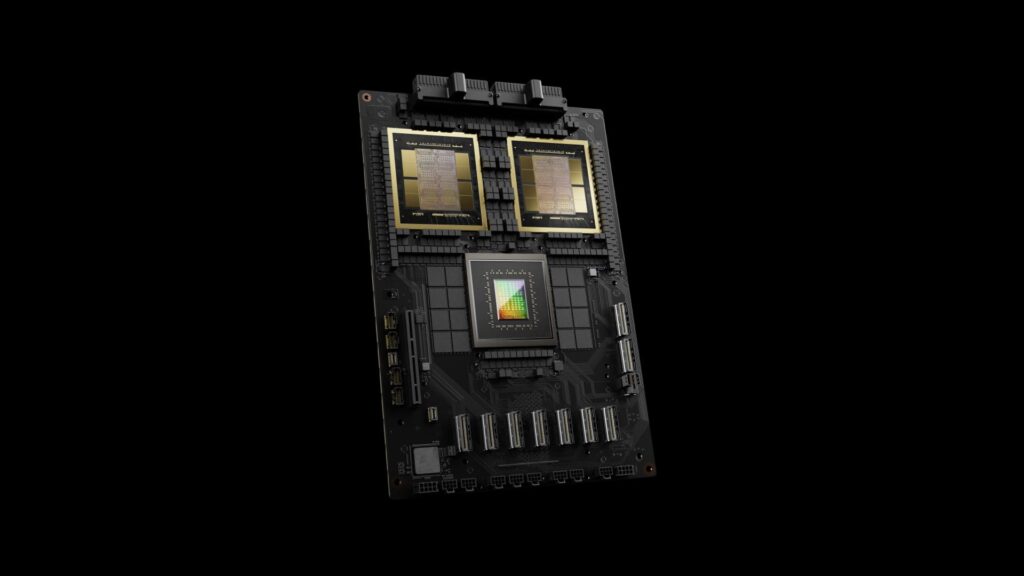

The NVIDIA GB200 NVL72 system connects 72 Blackwell GPUs through NVLink so that they operate as a single computing unit. This matters for advanced AI models that require high communication speeds, optimized memory use, and substantial processing capacity.

Key features include:

- 130 TB/s of bandwidth through fifth-generation NVLink, improving communication efficiency between GPUs.

- Second-generation Transformer Engine with FP4 support, increasing token generation speed without sacrificing accuracy.

- NVIDIA Quantum-2 InfiniBand networking, providing 400 Gb/s of bandwidth per GPU, facilitating scalability to thousands of units.

- BlueField-3 DPUs, optimizing data access and improving elasticity in multi-user environments.

This set of innovations makes Blackwell an ideal choice for companies looking to run generative AI models with maximum performance and efficiency.
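To put the bandwidth and scale figures above in perspective, the following is a small back-of-the-envelope sketch. It assumes that the 130 TB/s NVLink figure is the aggregate for all 72 GPUs in one system, that decimal units apply (1 TB = 1,000 GB), and that the 110,000-GPU deployment is built entirely from full NVL72 systems. The function names are illustrative and do not come from any NVIDIA or CoreWeave tooling.

```python
import math

# Figures quoted in the article.
NVLINK_DOMAIN_TB_PER_S = 130.0   # NVLink bandwidth, assumed aggregate per system (terabytes/s)
GPUS_PER_NVL72 = 72
INFINIBAND_GBIT_PER_S = 400.0    # per GPU (gigabits/s)
MAX_GPUS = 110_000


def nvlink_share_per_gpu(total_tb_per_s: float, gpus: int) -> float:
    """Even per-GPU share of the NVLink domain bandwidth, in TB/s."""
    return total_tb_per_s / gpus


def gbit_to_gbyte(gbit_per_s: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbit_per_s / 8.0


def racks_needed(total_gpus: int, gpus_per_rack: int) -> int:
    """Number of full NVL72 systems needed to host total_gpus."""
    return math.ceil(total_gpus / gpus_per_rack)


if __name__ == "__main__":
    per_gpu_nvlink = nvlink_share_per_gpu(NVLINK_DOMAIN_TB_PER_S, GPUS_PER_NVL72)
    per_gpu_ib = gbit_to_gbyte(INFINIBAND_GBIT_PER_S)
    ratio = per_gpu_nvlink * 1000 / per_gpu_ib  # TB/s -> GB/s before dividing
    print(f"NVLink share per GPU: {per_gpu_nvlink:.2f} TB/s")  # 1.81 TB/s
    print(f"InfiniBand per GPU:   {per_gpu_ib:.0f} GB/s")       # 50 GB/s
    print(f"NVLink / InfiniBand:  {ratio:.0f}x")                # 36x
    print(f"NVL72 systems for {MAX_GPUS:,} GPUs: "
          f"{racks_needed(MAX_GPUS, GPUS_PER_NVL72)}")          # 1528
```

Under these assumptions, each GPU has roughly 36 times more bandwidth to its NVLink peers inside the system than to the InfiniBand fabric outside it. This illustrates why traffic-heavy work benefits from staying within a single 72-GPU domain where possible.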

An Enterprise-Level AI Platform

The GB200 NVL72 instances on CoreWeave are designed to facilitate the deployment of generative AI models and autonomous agents in sectors such as healthcare, scientific research, and business automation. NVIDIA complements this infrastructure with an optimized software stack for artificial intelligence, which includes:

- NVIDIA Blueprints, featuring preconfigured and customizable workflows to accelerate AI model deployment.

- NVIDIA NIM, a collection of microservices designed for securely and efficiently deploying high-performance AI models.

- NVIDIA NeMo, a set of tools for training, customizing, and continuously improving AI models.

This combination of hardware and software enables companies to develop AI models with increased accuracy, speed, and scalability, driving innovation in the tech sector.

Availability and Access to GB200 NVL72 Instances

The GB200 NVL72 instances are now available through CoreWeave Kubernetes Service in the US-WEST-01 region, using the instance ID gb200-4x.
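As a rough illustration of how a workload might target these instances, the sketch below builds a Kubernetes Pod manifest in Python. Several details are assumptions rather than documented CoreWeave behavior: that nodes carry the standard Kubernetes labels `node.kubernetes.io/instance-type` and `topology.kubernetes.io/region` with the values `gb200-4x` and `US-WEST-01`, that the "4x" suffix means four GPUs per instance, and that GPUs are exposed as the `nvidia.com/gpu` resource. The pod name and container image are hypothetical placeholders. Check the provider's documentation for the actual labels and values.

```python
import json


def build_pod_manifest(name: str, image: str,
                       instance_type: str = "gb200-4x",
                       region: str = "US-WEST-01",
                       gpus: int = 4) -> dict:
    """Return a Pod manifest pinned to an instance type and region.

    The label keys are standard Kubernetes well-known labels; whether the
    provider sets them to these exact values is an assumption.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "nodeSelector": {
                "node.kubernetes.io/instance-type": instance_type,
                "topology.kubernetes.io/region": region,
            },
            "containers": [{
                "name": name,
                "image": image,  # hypothetical image reference
                # Assumes four GPUs per gb200-4x instance.
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
            }],
        },
    }


if __name__ == "__main__":
    manifest = build_pod_manifest("blackwell-demo", "example.com/my-inference:latest")
    # kubectl accepts JSON manifests, e.g. `kubectl apply -f pod.json`.
    print(json.dumps(manifest, indent=2))
```

Generating the manifest from a function keeps the instance type and region in one place, so the values can be corrected easily if the real labels differ from the ones assumed here.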

The availability of Blackwell in the cloud represents a significant advancement in the evolution of artificial intelligence, allowing AI models to operate at scale with optimized response times and reduced service costs. With this technology, NVIDIA and CoreWeave are marking the beginning of a new era in high-performance computing, offering solutions tailored to the needs of the next generation of artificial intelligence.

via: Nvidia