NVIDIA has unveiled the GH200 Grace Hopper™ platform, built around a new Grace Hopper Superchip featuring the world’s first HBM3e processor. Designed for the most complex generative AI workloads, including large language models, recommendation systems, and vector databases, the platform promises to transform the computing landscape.

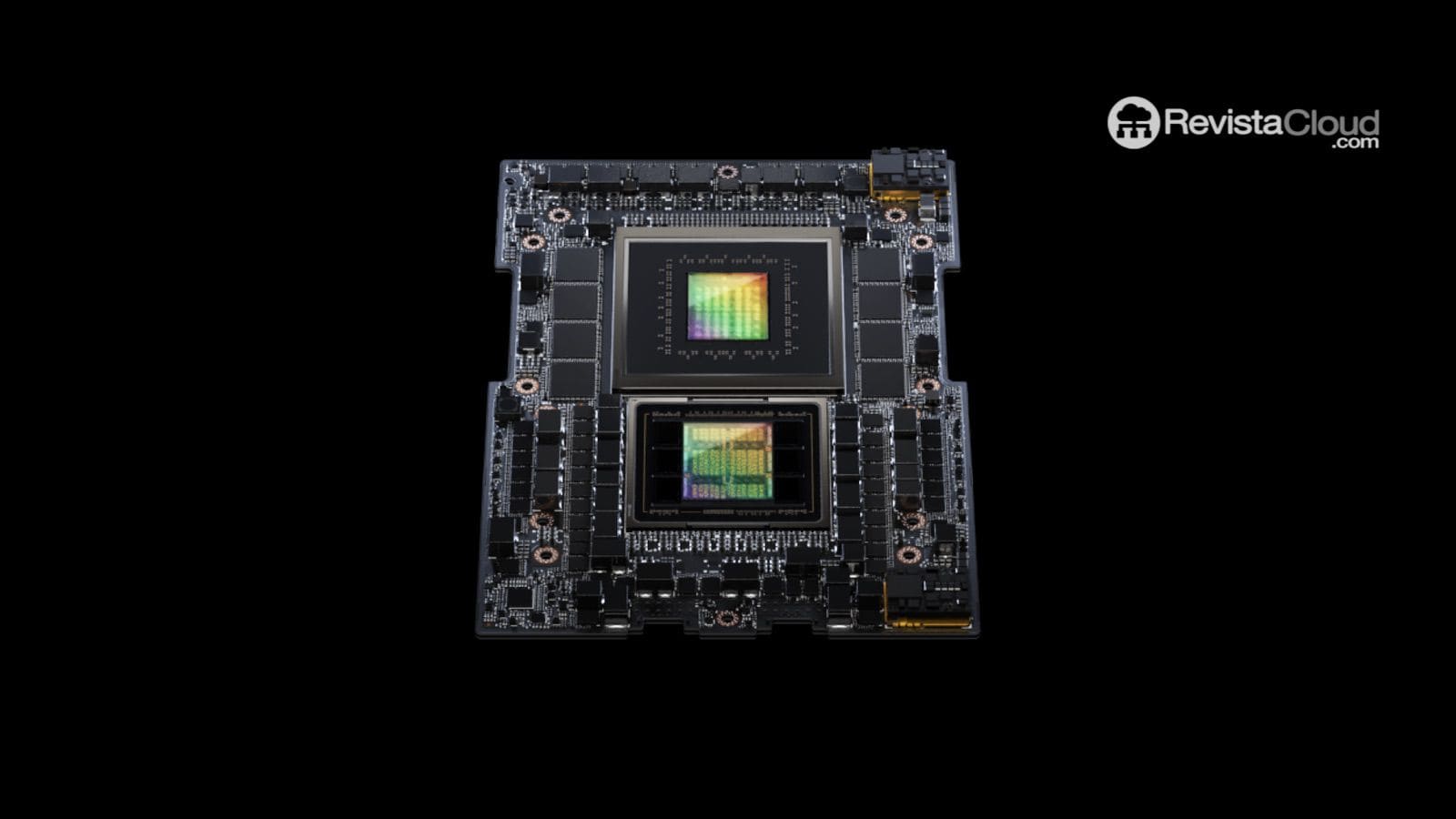

A Fusion of Power and Capacity: Dual Configuration for Exceptional Performance

The platform's dual configuration offers up to 3.5 times more memory capacity and 3 times more bandwidth than the current generation. It comprises a single server with 144 Arm Neoverse cores, eight petaflops of AI performance, and 282GB of the latest HBM3e memory. This represents a significant leap in data processing and memory capacity.

Jensen Huang’s Vision: Accelerated Computing Platforms for Generative AI Demand

Jensen Huang, founder and CEO of NVIDIA, emphasizes that meeting the surging demand for generative AI requires accelerated computing platforms built for specialized needs. The GH200 Grace Hopper Superchip platform addresses these needs with exceptional memory technology and bandwidth, the ability to connect multiple GPUs to aggregate performance, and a server design that scales easily across the entire data center.

Cutting-Edge Connectivity and Memory: Grace Hopper Superchips and HBM3e

The Grace Hopper Superchip can be interconnected with additional Superchips via NVIDIA NVLink™, enabling the deployment of the giant models used in generative AI. This high-speed, coherent interconnect gives the GPU full access to CPU memory, providing 1.2TB of fast memory in the dual configuration. HBM3e memory, which is 50% faster than current HBM3, delivers a combined 10TB/sec of bandwidth, allowing the new platform to run models 3.5 times larger than the previous version while improving performance with 3 times more memory bandwidth.
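The dual-configuration figures above are combined totals for two Superchips. As a rough illustration, and assuming the resources are split evenly between the two Superchips (an assumption of this sketch, not a per-chip specification stated in the announcement), the share per Superchip is a simple division. The dictionary keys and the `per_superchip` helper are hypothetical names used only for this example.

```python
# Dual-configuration totals as stated in the announcement.
DUAL_CONFIG = {
    "arm_neoverse_cores": 144,
    "ai_petaflops": 8,
    "hbm3e_gb": 282,
    "hbm3e_bandwidth_tb_s": 10,
}


def per_superchip(totals, superchips=2):
    """Divide dual-configuration totals evenly across the Superchips.

    Assumption: resources are split equally between Superchips. This is an
    illustration, not an official per-chip specification.
    """
    return {name: value / superchips for name, value in totals.items()}


if __name__ == "__main__":
    for name, value in per_superchip(DUAL_CONFIG).items():
        # :g drops the trailing ".0" from whole-number results.
        print(f"{name}: {value:g}")
```

Under the even-split assumption this yields 72 cores, 4 petaflops, 141GB of HBM3e, and 5TB/sec of bandwidth per Superchip.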

Adoption and Availability: A Bright Future with Grace Hopper

Leading manufacturers are already offering systems based on the previously announced Grace Hopper Superchip. To drive broad adoption, the next-generation GH200 Grace Hopper platform with HBM3e is fully compatible with the NVIDIA MGX™ server specification, introduced at COMPUTEX earlier this year. With MGX, any system manufacturer can quickly and cost-effectively build Grace Hopper into over 100 server variations.

Major system manufacturers are expected to deliver systems based on the platform in the second quarter of calendar year 2024.

Learn more about Grace Hopper in Jensen Huang’s SIGGRAPH keynote, available on demand.