For decades, robotics has excelled in environments where everything is measured to the millimeter: assembly lines, controlled settings, repetitive tasks, and scripted procedures. For some time, however, the industry has been pursuing a qualitative leap: machines that can operate in everyday settings, handle different objects from one day to the next, and cope with human interference and a margin for error. In this context, Microsoft Research has introduced Rho-alpha (ρα), its first robotics model derived from the Phi family of vision-language models. The aim is to bring so-called physical AI (the convergence of agentic AI and physical systems) closer to robots that can perceive, reason, and act with greater autonomy.

The company frames the announcement within the rise of vision-language-action (VLA) models for physical systems, a trend that seeks to unify perception (vision), understanding (language), and execution (action) in a single operational “brain.” According to Ashley Llorens, corporate vice president and general manager of Microsoft Research Accelerator, these models are giving physical systems autonomy in far less structured environments, ones that resemble the real world more than a factory floor does.

From understanding instructions to moving a robot

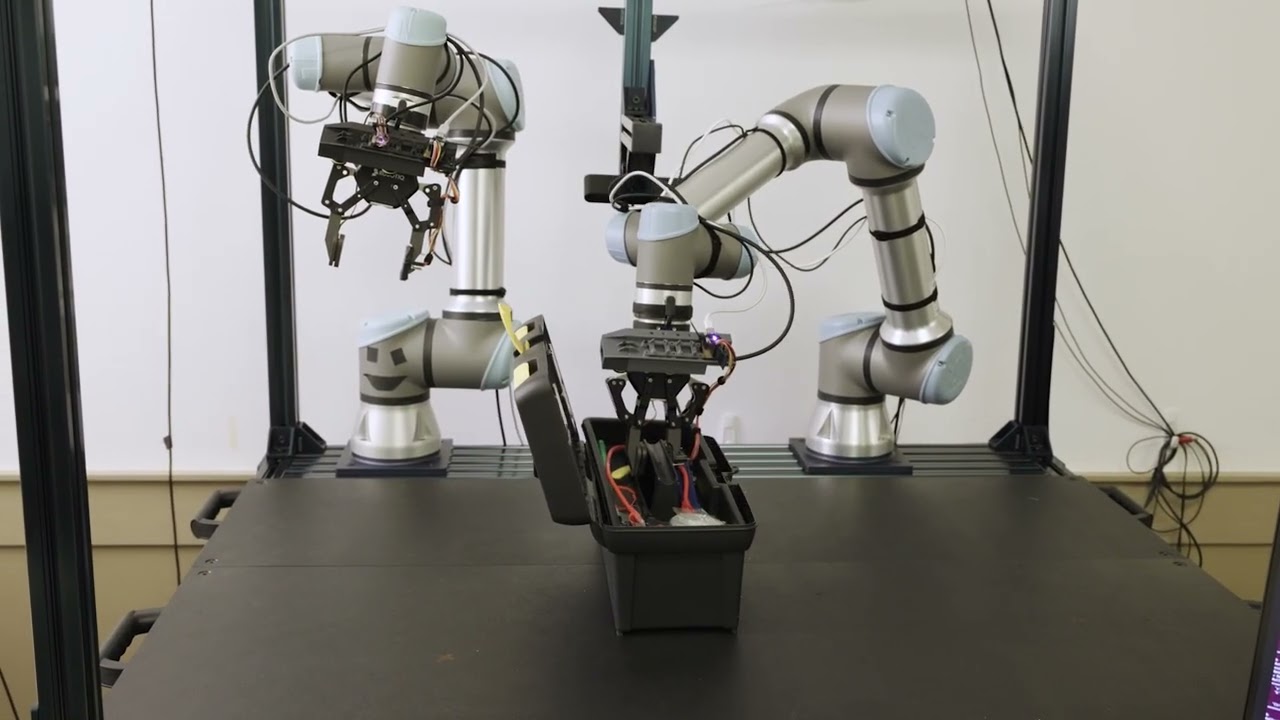

Rho-alpha is designed to translate natural language commands into control signals for robots performing bimanual manipulation, that is, tasks requiring fine coordination of both hands or grippers: pushing a button, turning a knob, moving a slider, or pulling a cable. Microsoft describes it as a VLA+ because it extends capabilities beyond typical VLAs in two key dimensions:

- Enhanced perception: it incorporates tactile sensing, and the team says it is already working on adding further modalities such as force.

- Learning during operation: the goal is for the model to keep improving while deployed by learning from human feedback, which matters most when the robot makes a mistake or gets “stuck” mid-maneuver.

This approach serves a core objective that Microsoft emphasizes repeatedly: adaptability. In robotics, adaptation is not just a slogan; it is the difference between a system that works in a demo and one that holds up in production. Microsoft stresses that robots able to adjust their behavior to changing situations and human preferences are more useful and, above all, more trustworthy to the people who operate them.

BusyBox, the “testing ground” for physical interaction

To demonstrate the approach, Microsoft has used BusyBox, a physical benchmark recently introduced by Microsoft Research. In the videos and examples Microsoft has shared, the robot responds to commands such as “press the green button,” “pull the red cable,” “turn the knob,” or “move the slider,” acting on a device designed to evaluate manipulation skills under realistic conditions.

BusyBox matters for a simple reason: for years, comparing advances in robotics has been difficult because there were no standardized tests reflecting the variety of the physical world. A test suite that combines different objects, mechanical resistance, tolerances, and possible failures helps measure something as important in robotics as precision: the ability to recover when things do not go perfectly the first time.

The biggest bottleneck: data (and tactile data)

If modern robotics has one structural problem, it is the scarcity of training data at anything like the scale available for language or vision AI. Recording physical demonstrations is expensive, slow, and sometimes outright infeasible. That is why Microsoft emphasizes that simulation plays a central role in making up for the lack of pretraining data, especially when the model must learn from less common signals such as tactile feedback.

The team’s approach combines:

- Trajectories of physical demonstrations (real data).

- Simulated tasks generated through a multi-phase pipeline, supported by reinforcement learning.

- Simulation built on the NVIDIA Isaac Sim framework and run on Azure to produce physically plausible synthetic datasets.

- Additional co-training on large-scale web data for visual question answering (VQA), intended to carry vision-language understanding over into the robot's behavior.
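The article does not say how these sources are combined during training. Purely as an illustration, co-training on heterogeneous data is often implemented by drawing each batch from a weighted mixture of sources. The sketch below assumes that approach; the source names (`real_demos`, `sim_rollouts`, `web_vqa`), the weights, and the helper functions are hypothetical and are not Microsoft's pipeline or values.

```python
import random
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class DataSource:
    name: str
    weight: float  # relative sampling weight; the values used below are hypothetical


def normalized_mix(sources):
    """Convert relative weights into sampling probabilities that sum to 1."""
    total = sum(s.weight for s in sources)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return {s.name: s.weight / total for s in sources}


def sample_batch(sources, batch_size, rng):
    """Pick which source each example in a batch comes from, in proportion to its weight."""
    names = [s.name for s in sources]
    weights = [s.weight for s in sources]
    return rng.choices(names, weights=weights, k=batch_size)


# Hypothetical mixture: real demonstrations, simulated rollouts, web VQA data.
SOURCES = [
    DataSource("real_demos", 0.3),
    DataSource("sim_rollouts", 0.5),
    DataSource("web_vqa", 0.2),
]

if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the draw is reproducible
    print(normalized_mix(SOURCES))
    print(Counter(sample_batch(SOURCES, 1000, rng)))
```

Over a large batch, the per-source counts approach the normalized weights. A real training loop would then fetch actual trajectories or VQA examples from each chosen source rather than just its label.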

This combination matters because robotics needs models that not only “see” but also feel and adjust their actions in real time. Here, tactile sensing becomes more than an add-on: it could be the key to reducing errors when handling plugs, tabs, fasteners, screws, or parts with tight tolerances.

Humans in the loop: correcting to learn

Microsoft acknowledges another critical point: even with expanded perception, robots can make mistakes they cannot resolve on their own. The team is therefore developing tools and techniques that let the system learn from human corrections during operation, delivered through intuitive teleoperation devices such as a 3D mouse. One example shows the robot struggling to insert a plug and completing the task with real-time human assistance.

This “human-in-the-loop” approach is no minor detail: in real-world environments, how smoothly a robot hands off between autonomy and human assistance determines whether it reduces workload or adds to it.
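Microsoft has not published how corrections are captured or used. As a generic illustration, intervention-based methods such as human-gated DAgger let the policy act until an operator takes over, then log each (observation, human action) pair for later fine-tuning. The sketch below assumes that pattern; `CorrectionBuffer`, `run_episode`, the toy policy, and the `human` callback (standing in for a teleoperation device like a 3D mouse) are all hypothetical. It also replays a fixed observation sequence, whereas on a real robot each observation depends on the action just taken, and the fine-tuning step itself is omitted.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Sequence, Tuple

# Hypothetical stand-ins: a real system would use camera images, tactile
# readings, and joint commands rather than small float tuples.
Observation = Tuple[float, ...]
Action = Tuple[float, ...]


@dataclass
class CorrectionBuffer:
    """Collects (observation, human action) pairs recorded during interventions."""
    samples: List[Tuple[Observation, Action]] = field(default_factory=list)

    def add(self, obs: Observation, action: Action) -> None:
        self.samples.append((obs, action))


def run_episode(
    policy: Callable[[Observation], Action],
    human: Callable[[Observation], Optional[Action]],
    observations: Sequence[Observation],
    buffer: CorrectionBuffer,
) -> List[Action]:
    """Run one episode. Whenever the human interface returns an action, it
    overrides the policy and the pair is stored for later fine-tuning."""
    executed: List[Action] = []
    for obs in observations:
        correction = human(obs)
        if correction is not None:
            buffer.add(obs, correction)   # operator takes over: log the correction
            executed.append(correction)
        else:
            executed.append(policy(obs))  # policy acts autonomously
    return executed


if __name__ == "__main__":
    buffer = CorrectionBuffer()
    # Toy operator who steps in whenever the first feature exceeds 0.5.
    actions = run_episode(
        policy=lambda obs: (0.0,),
        human=lambda obs: (1.0,) if obs[0] > 0.5 else None,
        observations=[(0.1,), (0.7,), (0.9,)],
        buffer=buffer,
    )
    print(actions)              # [(0.0,), (1.0,), (1.0,)]
    print(len(buffer.samples))  # 2
```

Logging only the intervened steps keeps the correction data focused on the situations where the policy struggled, which is the rationale behind intervention-based schemes of this kind.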

What’s next: evaluation, early access, and platform leap

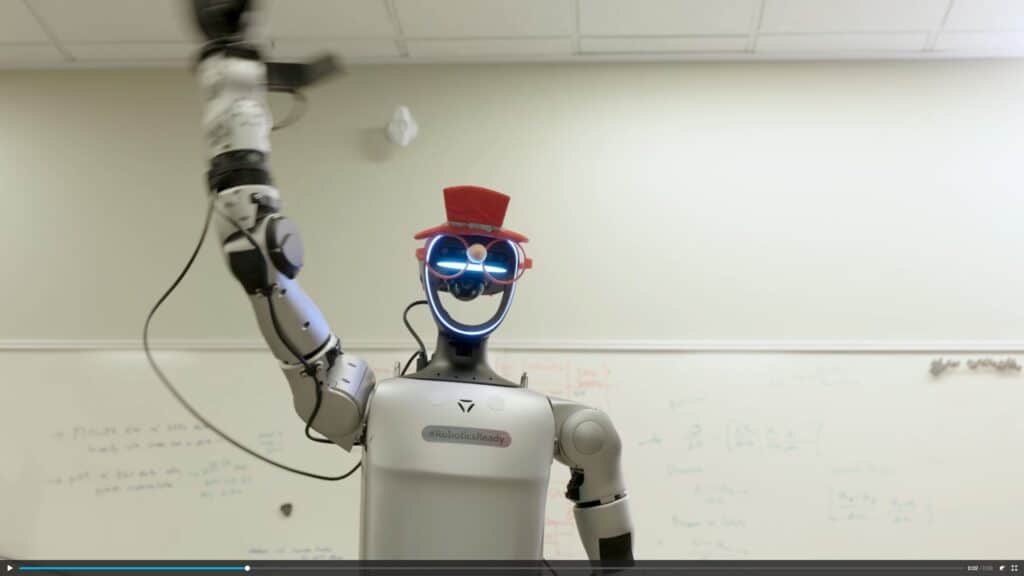

Microsoft says Rho-alpha is being evaluated on both dual-arm and humanoid robot setups, and that it plans to publish a technical description in the coming months. It is also inviting interested organizations to join an Early Access Program and expects to make the model available later through Microsoft Foundry.

In industry terms, the message is clear: the AI race is no longer just about generating text or images. It is shifting toward systems that can act in the physical world, which demands a mix of multimodal perception, fine control, and continuous learning. In this landscape, tactile feedback, together with simulation and human correction, stands out as a major factor in moving from flashy demos to truly useful robotics.

Frequently Asked Questions

What is a VLA model and why is it important in robotics?

A vision-language-action (VLA) model aims to connect what the robot sees, what it is told in natural language, and what it does (control actions), so that it can operate in environments less structured than industrial settings.

What does “tactile sensing” add to a robot compared to using vision alone?

Tactile sensing helps detect contact, resistance, and slippage. It is especially useful in fine manipulation tasks (inserting plugs, flipping switches, handling cables and tabs), where vision alone can fail because of occlusions or small physical variations.

What is BusyBox and what is it used for?

BusyBox is a Microsoft Research physical benchmark designed to assess interaction and manipulation skills through tasks guided by natural language, such as moving sliders, operating switches, or turning knobs.

When will Rho-alpha be available outside the lab?

Microsoft has announced an Early Access Program for organizations and plans to make the model available later through Microsoft Foundry. It also plans to publish a technical description with more details in the coming months.

via: Microsoft