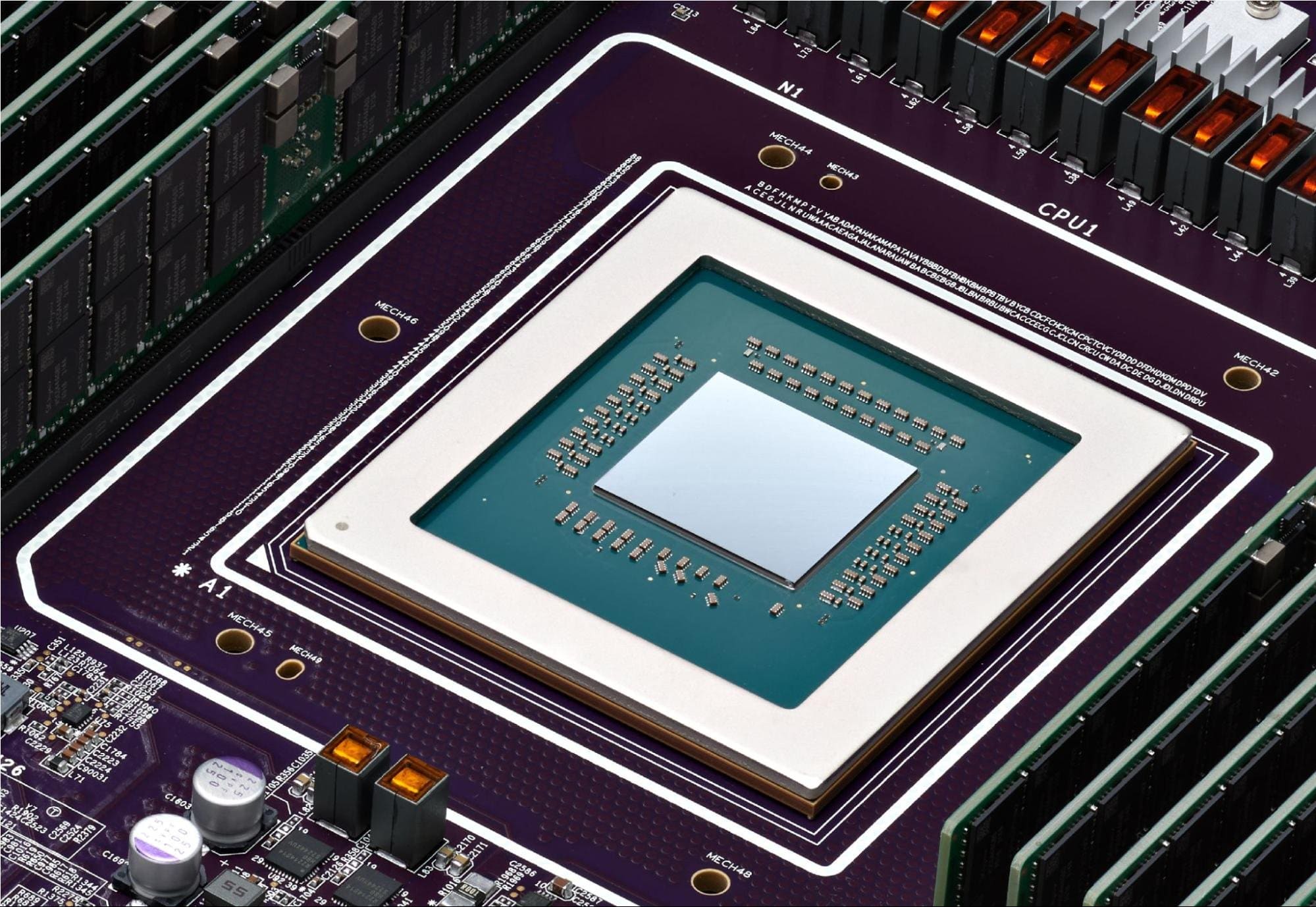

Meta is advancing its strategy for hardware independence with the development of its first artificial intelligence (AI) chip based on RISC-V, designed specifically for training advanced models. According to a report from Reuters, the company collaborated with Broadcom on the design of this custom accelerator, which is manufactured by TSMC, with the goal of reducing its dependence on Nvidia’s graphics processing units (GPUs), such as the H100, H200, B100, and B200.

This development is part of the Meta Training and Inference Accelerator (MTIA) initiative, Meta’s program for creating specialized AI hardware. The company has already deployed its first accelerators on a limited basis to assess their performance before expanding production.

A Commitment to RISC-V and In-House Hardware

Meta has been exploring the RISC-V architecture for years as an alternative to proprietary processor architectures. The main advantage of RISC-V is that it is open and flexible, which allows Meta to customize the instruction set without paying license fees to third parties.

The technical details of the new chip are still unknown, but it is expected to include HBM3 or HBM3E memory, since training large AI models demands very high memory bandwidth and capacity. Additionally, the accelerator is likely to use a systolic array architecture, as other training chips do, in which a regular grid of processing units passes operands from neighbor to neighbor to compute matrix multiplications efficiently.
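To make the systolic array idea concrete, here is a minimal software sketch of how such a grid multiplies two matrices. It illustrates the general technique only and says nothing about Meta's actual design, which has not been disclosed. The function name `systolic_matmul` and the output-stationary dataflow (each processing unit keeps one output element while inputs stream past it) are assumptions chosen for simplicity.

```python
def systolic_matmul(a, b):
    """Simulate an output-stationary systolic array computing a @ b.

    Hypothetical illustration: a is n x k, b is k x m, and the grid has
    n x m processing elements (PEs). PE (i, j) accumulates output c[i][j].
    """
    n, k = len(a), len(a[0])
    m = len(b[0])
    acc = [[0] * m for _ in range(n)]         # one accumulator per PE
    a_reg = [[None] * m for _ in range(n)]    # operand travelling rightwards
    b_reg = [[None] * m for _ in range(n)]    # operand travelling downwards
    cycles = n + m + k - 2                    # time for the last operands to reach the far corner

    for t in range(cycles):
        # Visit PEs from bottom-right to top-left so each PE reads the value
        # its left/upper neighbor held on the previous cycle.
        for i in reversed(range(n)):
            for j in reversed(range(m)):
                if j == 0:
                    s = t - i                 # row i is fed with a delay of i cycles
                    a_in = a[i][s] if 0 <= s < k else None
                else:
                    a_in = a_reg[i][j - 1]
                if i == 0:
                    s = t - j                 # column j is fed with a delay of j cycles
                    b_in = b[s][j] if 0 <= s < k else None
                else:
                    b_in = b_reg[i - 1][j]
                a_reg[i][j] = a_in
                b_reg[i][j] = b_in
                if a_in is not None and b_in is not None:
                    acc[i][j] += a_in * b_in  # one multiply-accumulate per PE per cycle
    return acc, cycles


if __name__ == "__main__":
    product, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
    print(product, cycles)
```

Each processing unit only ever talks to its immediate neighbors, so operands fetched from memory once are reused across a whole row or column of the grid. That reuse is the main reason the layout is attractive for matrix-heavy training workloads.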

Meta Seeks Independence from Nvidia After Relying on Its GPUs

The MTIA program has faced several challenges in the past. In 2022, Meta halted the development of its first inference processor after the chip failed to meet performance and energy efficiency targets. This setback led the company to place large orders for Nvidia GPUs to meet its immediate needs for training AI models.

Since then, Meta has become one of Nvidia’s largest customers, acquiring tens of thousands of GPUs to train its Llama foundation models, improve its recommendation and advertising algorithms, and run inference on its platforms, which collectively serve over three billion daily users.

However, Meta remains committed to its strategy of developing in-house hardware. In 2023, it began using MTIA chips for inference tasks, and by 2026, it hopes to employ its training accelerator on a larger scale, provided it meets the necessary performance and energy consumption requirements.

Will Meta’s Chip Mark a Milestone in the RISC-V Industry?

If Meta’s AI training accelerator is indeed based on RISC-V, it could be one of the most powerful chips ever built on this open architecture. That would strengthen the case for RISC-V in high-performance applications and challenge the dominance of proprietary architectures like ARM and x86 in the AI market.

As Meta moves forward with its goal of developing custom hardware solutions for its data centers, its new chip could reshape the AI computing landscape, fostering a more open and efficient ecosystem for the development of artificial intelligence models.