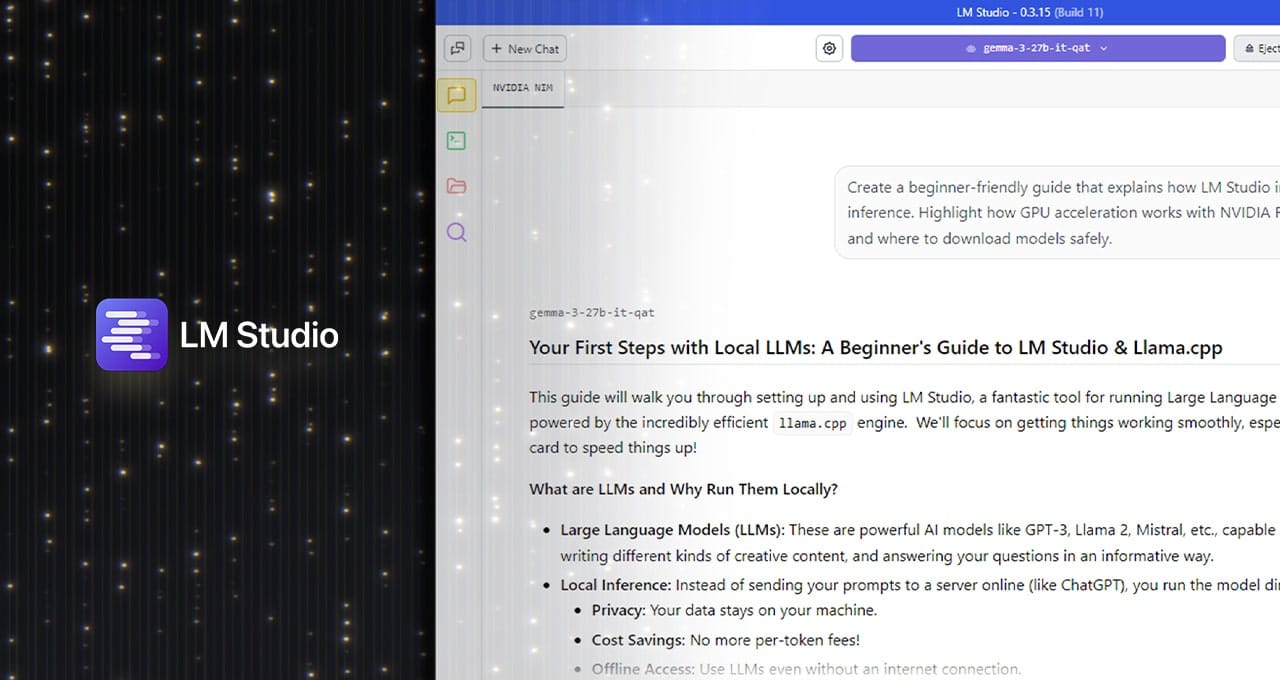

As generative artificial intelligence becomes part of the daily routine for developers, researchers, and advanced users, running large language models (LLMs) locally has shifted from an experimental option to a mature, powerful alternative with clear advantages. In this transformation, LM Studio stands out as one of the most versatile and accessible environments for running AI models locally, without reliance on cloud services.

With the arrival of version 0.3.15, LM Studio integrates natively with CUDA 12.8, the latest version of NVIDIA’s runtime environment, allowing for maximum performance from RTX GPUs, from the RTX 20 series to the more recent Blackwell architecture. The result: faster inference times, better resource utilization, and an optimized experience for generative AI directly on personal PCs.

Advantages of Running LLMs Locally with LM Studio

Running language models locally offers a series of strategic benefits compared to a cloud-based approach:

- Total Privacy: user data does not leave the device.

- Minimal Latency: nearly instantaneous responses, without depending on the network.

- Reduced Cost: no usage fees or limits from external APIs.

- Flexible Integration: connection with custom workflows, text editors, local assistants, and more.

LM Studio, built on the efficient llama.cpp library, allows deployment of popular models like Llama 3, Mistral, Gemma, or Orca in various quantization formats (Q4_K_M, Q8_0, full precision…), adapting to multiple hardware configurations.
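
As a rough sizing aid for the quantization formats mentioned above, the sketch below estimates how much memory a model's weights would need at a given average number of bits per weight. The bits-per-weight figures are assumptions for illustration (Q8_0 stores 32 8-bit weights plus one 16-bit scale per block, which works out to 8.5 bits; the Q4_K_M figure is a commonly quoted average that varies by model), and the estimate ignores the KV cache and runtime overhead.

```python
# Approximate average bits per weight; assumed values for illustration only.
APPROX_BITS_PER_WEIGHT = {
    "Q4_K_M": 4.85,  # commonly quoted average, varies by model architecture
    "Q8_0": 8.5,     # 32 int8 weights plus one 16-bit scale per block
    "F16": 16.0,     # half precision, no quantization
}


def estimate_weight_gib(n_params: float, quant: str) -> float:
    """Estimate the memory needed for the weights alone, in GiB."""
    bits = APPROX_BITS_PER_WEIGHT[quant]
    return n_params * bits / 8 / 2**30


if __name__ == "__main__":
    # Compare the formats for a hypothetical 8-billion-parameter model.
    for quant in APPROX_BITS_PER_WEIGHT:
        size = estimate_weight_gib(8e9, quant)
        print(f"8B model at {quant}: ~{size:.1f} GiB of weights")
```

Comparing the result with the available VRAM gives a first indication of whether a model can be fully offloaded to the GPU or needs a smaller quantization.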

CUDA 12.8: The Key to Unlocking Performance on RTX

The new integration of CUDA 12.8 enables LM Studio to leverage innovations specific to RTX GPUs:

| Optimization | Technical Description | Practical Benefit |
|---|---|---|
| CUDA Graphs | Consolidation of multiple GPU operations into a single call stream | +35% processing efficiency |
| Flash Attention (dedicated kernels) | Optimized algorithm for handling attention in transformers | Up to +15% performance in long contexts |
| Complete Offloading to GPU | All model layers run on the GPU | Reduction of CPU bottlenecks |
| Compatibility Across the Entire RTX Range | From RTX 2060 to RTX 5090 and the new Blackwell models | Scalable acceleration on PCs and workstations |

These advancements are reflected in the latest benchmarks for models like DeepSeek-R1-Distill-Llama-8B, where a 27% performance improvement has been recorded compared to previous versions of LM Studio, solely due to optimizations in CUDA and llama.cpp.

New Features for Advanced Developers

LM Studio 0.3.15 not only enhances performance but also strengthens its capabilities for developers:

- Improved Prompt Editor: allows for managing longer prompts, with better organization and persistence.

- `tool_choice` Parameter: granular control over the use of external tools by the model, essential for RAG (retrieval-augmented generation), intelligent agents, or structured systems.

- OpenAI-Compatible API Mode: enables LM Studio to connect to workflows as if it were a standard endpoint, perfect for plugins, assistants, or tools like Obsidian, VS Code, or Jupyter.
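
To make the `tool_choice` parameter and the OpenAI-compatible mode more concrete, here is a minimal sketch of a chat request that offers the model one tool. It assumes the local server listens at `http://localhost:1234/v1` and accepts the OpenAI chat-completions request shape with `tool_choice` set to `"none"`, `"auto"`, or `"required"`. The `search_notes` tool and the model name are hypothetical placeholders.

```python
import json
import urllib.request

# Assumed address of the local server; adjust to match your configuration.
BASE_URL = "http://localhost:1234/v1"

# Hypothetical tool, described in the OpenAI function-calling schema.
SEARCH_TOOL = {
    "type": "function",
    "function": {
        "name": "search_notes",
        "description": "Search local notes for a query string.",
        "parameters": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    },
}

ALLOWED_TOOL_CHOICES = ("none", "auto", "required")


def build_chat_request(model: str, user_message: str, tool_choice: str = "auto") -> dict:
    """Build a chat-completions payload that offers SEARCH_TOOL to the model."""
    if tool_choice not in ALLOWED_TOOL_CHOICES:
        raise ValueError(f"tool_choice must be one of {ALLOWED_TOOL_CHOICES}")
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "tools": [SEARCH_TOOL],
        "tool_choice": tool_choice,
    }


def send_chat_request(payload: dict, base_url: str = BASE_URL) -> dict:
    """POST the payload to the local endpoint and return the decoded JSON reply."""
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.load(response)


if __name__ == "__main__":
    # Only prints the payload; call send_chat_request(payload) with a server running.
    payload = build_chat_request("your-model-name", "Find my notes on CUDA.", "required")
    print(json.dumps(payload, indent=2))
```

With `"required"` the model is asked to call a tool, with `"none"` it is asked to answer in plain text, and `"auto"` leaves the decision to the model.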

Real Use Cases and Practical Examples

Thanks to its modular design and ease of use, LM Studio is already a key tool in environments such as:

- Software Developers: integration with editors for generating, completing, or debugging code with models like Llama 3 or Codellama.

- Students and Researchers: using LLMs for text summarization, Q&A, or semantic exploration of PDF documents.

- Content Creators: generating ideas, headlines, descriptions, or long-form content without leaving the local environment.

- Advanced Linux or macOS Users: running models on their own systems, thanks to cross-platform compatibility and support for multiple runtimes.

How to Activate the CUDA 12.8 Runtime in LM Studio

Configuring LM Studio to use RTX acceleration with CUDA 12.8 is straightforward:

- Download LM Studio from its official website.

- In the left panel, go to Discover > Runtimes.

- Select CUDA 12 llama.cpp (Windows) or the corresponding version for your system and download.

- Set it as the default runtime in the configuration menu.

- Load a model, go to Settings, activate Flash Attention, and adjust “GPU Offload” to the maximum.

Once this process is complete, local inference will be hardware-accelerated for maximum available performance.
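
One way to check the effect of these settings is to compare generation throughput before and after changing them. The sketch below is a minimal helper for that comparison. It assumes the response follows the OpenAI-style shape with a `usage.completion_tokens` field, and `generate` is a hypothetical callable that you supply (for example, a function that sends one request to the local server). Because it measures wall-clock time, the figure includes prompt processing as well as generation.

```python
import time
from typing import Callable


def tokens_per_second(completion_tokens: int, elapsed_seconds: float) -> float:
    """End-to-end throughput for a single request."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return completion_tokens / elapsed_seconds


def speedup_percent(baseline_tps: float, new_tps: float) -> float:
    """Relative improvement of new_tps over baseline_tps, in percent."""
    return (new_tps / baseline_tps - 1) * 100


def timed_call(generate: Callable[[], dict]) -> float:
    """Run generate() once and return its throughput in tokens per second.

    generate is assumed to return an OpenAI-style response dict that
    contains usage.completion_tokens.
    """
    start = time.perf_counter()
    response = generate()
    elapsed = time.perf_counter() - start
    return tokens_per_second(response["usage"]["completion_tokens"], elapsed)
```

Running `timed_call` several times with the same prompt and averaging the results gives a steadier number than a single measurement.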

Conclusion: The Future of Personal AI is Local and Accelerated

Local deployment of LLMs is no longer an experiment; it is becoming a practical, powerful, and scalable solution. LM Studio, in conjunction with NVIDIA RTX GPUs and CUDA 12.8, offers one of the most robust platforms on the market for those looking to run artificial intelligence privately, quickly, and in a way tailored to their own needs.

Whether for creating a local assistant, integrating AI into your development workflow, or simply exploring the possibilities of language models, LM Studio is an ideal gateway. With active community support, constant improvements, and total freedom for customization, it represents the perfect balance of performance, accessibility, and control.

Source: Artificial Intelligence News