A team from the Tokyo Institute of Science presents a method that translates Wi-Fi network channel state information (CSI) into the latent space of a diffusion model, generating high-resolution RGB images of the environment efficiently and with text-based control.

Can Wi-Fi “see” through the changes that people and objects introduce to the radio channel? The idea has circulated in labs for years, with applications ranging from activity recognition to breathing tracking when enough bandwidth is available. What was missing was the leap to high-resolution visual reconstructions without costly ad hoc training or complex architectures. That is where LatentCSI comes in: a technique that takes the CSI (Channel State Information) of a multi-carrier Wi-Fi link, maps it to a latent space, and lets a pretrained latent diffusion model handle the rest, including text guidance to refine the style or elements of the scene.

Authored by Eshan Ramesh and Takayuki Nishio (School of Engineering, Tokyo Institute of Science), the work, titled “High-resolution efficient image generation from WiFi CSI using a pretrained latent diffusion model”, describes a system that avoids pixel-by-pixel generation. Instead, it trains a lightweight network to directly predict the latent embedding that an LDM (such as Stable Diffusion) would expect after encoding an image. From there, the standard LDM pipeline denoises in latent space (optionally guided by text) and decodes the result into an RGB image.

What LatentCSI solves and why it matters

Previous attempts to “see” with Wi-Fi relied on sophisticated GAN or VAE architectures, with familiar drawbacks: two adversarial networks to balance (in the GAN case), careful calibration, unstable training, and limited-resolution outputs. Additionally, end-to-end learning on real images tends to memorize details, which poses privacy risks: facial features, recognizable backgrounds, and so on.

LatentCSI simplifies the process:

- A single network (the CSI encoder) learns to predict the latent embedding that the VAE of an LDM would produce for the paired image.

- It operates entirely in latent space (far more compact than pixel space), which lowers compute cost and memory use.

- The dimensional bottleneck reduces the likelihood of reconstructing sensitive details (faces, text), and the LDM's text guidance enables controlled reconstruction or stylization of clothing, scene style, and overall appearance.
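To put “far more compact” into numbers, the short calculation below compares how many values a pixel-predicting network and a latent-predicting network must output for one 512×512 image. It assumes a Stable Diffusion v1-style VAE (8× spatial downsampling, 4 latent channels); the article only says “like Stable Diffusion”, so the exact latent shape in the paper may differ.

```python
def num_values(height: int, width: int, channels: int) -> int:
    """Number of scalar values in a height x width x channels tensor."""
    return height * width * channels

# Pixel-space target: a 512x512 RGB image (the resolution used for Dataset 1).
pixel_values = num_values(512, 512, 3)

# Latent-space target, ASSUMING a Stable Diffusion v1-style VAE:
# 8x spatial downsampling and 4 latent channels.
DOWNSAMPLE = 8
LATENT_CHANNELS = 4
latent_values = num_values(512 // DOWNSAMPLE, 512 // DOWNSAMPLE, LATENT_CHANNELS)

ratio = pixel_values / latent_values
print(f"pixels: {pixel_values}, latent: {latent_values}, ratio: {ratio:.0f}x")
# prints "pixels: 786432, latent: 16384, ratio: 48x"
```

Under these assumptions the encoder's output is 48 times smaller than a full RGB image, which is where much of the training-cost and memory saving comes from.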

Result: better perceptual quality than pixel-predicting baselines of similar complexity, greater computational efficiency, and text control without an additional image-encoding step.

How it works (without smoke and mirrors): from CSI to latent space

- Paired training data: CSI + image (the camera is only used during training for reference; inference runs only with CSI).

- A pretrained VAE in the LDM encodes the image into a latent embedding μ (in practice, the mean of the Gaussian posterior is used, since the predicted variance is negligible).

- The CSI encoder f_w(x) is trained to predict μ from the CSI amplitude (phase is left out for simplicity and for robustness against unsynchronized devices).

- During inference, the predicted latent is partially noised according to a “strength” parameter, then denoised by the LDM (with text conditioning if desired) and finally decoded into an RGB image.
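The “strength” step can be pictured as the forward-diffusion step used by img2img-style pipelines: the predicted latent is pushed partway toward noise before the LDM denoises it. The numpy sketch below assumes a Stable Diffusion v1-style “scaled linear” schedule (β from 0.00085 to 0.012 over 1,000 steps) and a simplified linear mapping from strength to starting timestep; `alphas_cumprod` and `noise_latent` are hypothetical helper names, and the U-Net denoising and VAE decoding that follow are not shown.

```python
import numpy as np

T = 1000  # number of diffusion timesteps (ASSUMED, Stable Diffusion-style)

def alphas_cumprod(num_steps: int = T, beta_start: float = 0.00085,
                   beta_end: float = 0.012) -> np.ndarray:
    """Cumulative product of (1 - beta_t) for a 'scaled linear' beta schedule.
    The schedule constants are ASSUMED (Stable Diffusion v1 defaults)."""
    betas = np.linspace(beta_start ** 0.5, beta_end ** 0.5, num_steps) ** 2
    return np.cumprod(1.0 - betas)

def noise_latent(mu_hat: np.ndarray, strength: float,
                 rng: np.random.Generator) -> tuple[np.ndarray, int]:
    """Forward-diffuse a predicted latent to the timestep chosen by `strength`.

    strength=0 leaves the latent almost untouched; strength=1 turns it into
    (nearly) pure noise, so the LDM relies mostly on its prior and the prompt.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    abar = alphas_cumprod()
    t = int(round(strength * (T - 1)))  # simplified strength -> timestep mapping
    eps = rng.standard_normal(mu_hat.shape)
    # Closed-form forward process: z_t = sqrt(abar_t) * z_0 + sqrt(1 - abar_t) * eps
    z_t = np.sqrt(abar[t]) * mu_hat + np.sqrt(1.0 - abar[t]) * eps
    return z_t, t

rng = np.random.default_rng(0)
mu_hat = rng.standard_normal((4, 64, 64))  # stand-in for f_w(x); shape assumed
for s in (0.2, 0.5, 0.8):
    z_t, t = noise_latent(mu_hat, s, rng)
    print(f"strength={s}: t={t}, signal weight={np.sqrt(alphas_cumprod()[t]):.3f}")
```

Higher strength lowers the weight of the CSI-derived latent, which gives the prompt more room to shape the result at the cost of fidelity to the CSI.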

The key is that only the CSI encoder is trained; the U-Net denoiser, VAE, and text encoder of the LDM are reused without finetuning. This makes training less costly and preserves the perceptual quality of the original LDM.
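Because the VAE is frozen and its posterior mean is deterministic, the targets μ could be computed once and cached, so training reduces to a regression from CSI amplitudes to latent vectors. The sketch below assumes a mean-squared-error loss and uses a linear model fitted by plain gradient descent as a stand-in for the paper's lightweight network (whose architecture is not described here). The data is synthetic and `train_linear_csi_encoder` is a hypothetical name.

```python
import numpy as np

def train_linear_csi_encoder(x: np.ndarray, mu: np.ndarray, lr: float = 0.1,
                             epochs: int = 500, seed: int = 0):
    """Fit f_w(x) = x @ W + b to precomputed VAE posterior means `mu` with MSE.

    x:  (n_samples, n_features) CSI amplitude features
    mu: (n_samples, latent_dim) flattened latent means from the frozen VAE encoder
    Only W and b are updated; no LDM component is trained here.
    """
    rng = np.random.default_rng(seed)
    _, d_in = x.shape
    d_out = mu.shape[1]
    W = rng.normal(scale=0.01, size=(d_in, d_out))
    b = np.zeros(d_out)
    losses = []
    for _ in range(epochs):
        err = x @ W + b - mu                     # prediction error, (n, d_out)
        losses.append(float(np.mean(err ** 2)))  # MSE averaged over all elements
        # Gradients of the element-wise mean squared error.
        grad_W = 2.0 * x.T @ err / err.size
        grad_b = 2.0 * err.sum(axis=0) / err.size
        W -= lr * grad_W
        b -= lr * grad_b
    return W, b, losses

# Synthetic example: 256 "CSI" vectors of 32 amplitudes, 16-dim "latents".
rng = np.random.default_rng(1)
x = rng.standard_normal((256, 32))
true_W = rng.standard_normal((32, 16))
mu = x @ true_W
W, b, losses = train_linear_csi_encoder(x, mu)
print(f"MSE: {losses[0]:.3f} -> {losses[-1]:.5f}")
```

A real encoder would be a neural network trained with a deep-learning framework, but the structure is the same: the only trainable parameters sit between the CSI and the latent target.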

Datasets and evaluation: fewer pixels, more perception

The authors validate LatentCSI on two datasets:

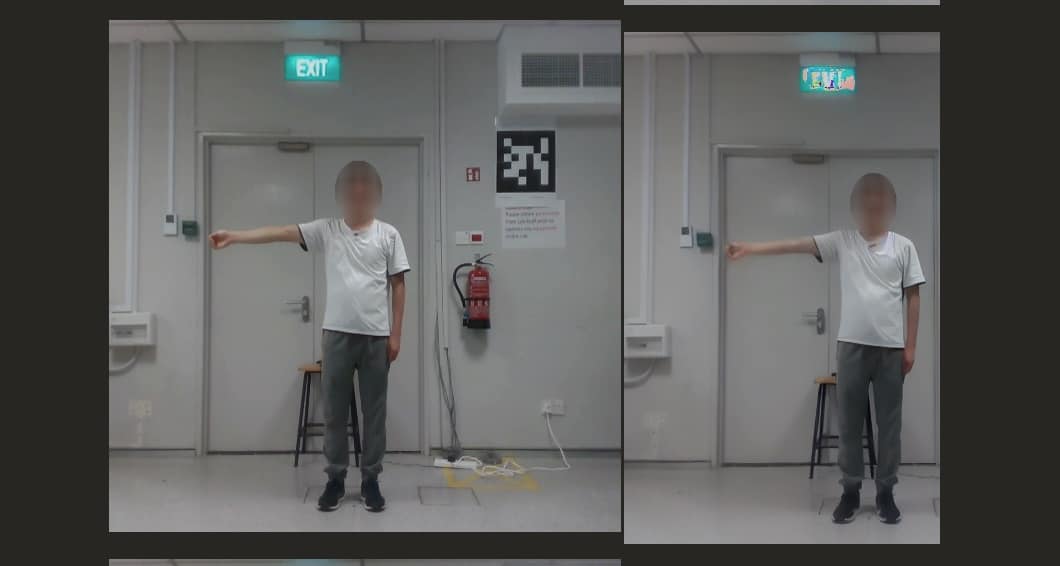

- Dataset 1: a realistic environment with a moving person, frequent occlusions, and diverse views. CSI was recorded at 10 Hz over 25 minutes with two NUCs equipped with Intel AX210 cards (160 MHz channel, 1×1, 1,992 subcarriers, captured with FeitCSI). Images were rescaled to 640×480 and then to 512×512.

- Dataset 2 (MM-Fi subset): more visually consistent (fixed position, stable background), with 3 antennas on a 40 MHz channel and 114 subcarriers per antenna (342 in total), focusing on poses and arm movements.
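A sketch of how the amplitude input could be formed for each dataset, assuming each CSI snapshot is a complex (antennas × subcarriers) array that is simply flattened; the paper's exact preprocessing may differ, and the CSI values here are random placeholders, not measurements. It also shows why dropping phase helps with unsynchronized devices: any per-entry phase rotation, such as an unknown clock offset, leaves the amplitudes unchanged.

```python
import numpy as np

def csi_amplitude_features(csi: np.ndarray) -> np.ndarray:
    """Flatten the amplitude of one complex CSI snapshot into a feature vector.

    csi: complex array of shape (n_antennas, n_subcarriers).
    The phase is discarded; only |H| per antenna and subcarrier is kept.
    """
    return np.abs(csi).reshape(-1)

rng = np.random.default_rng(0)

def random_csi(n_antennas: int, n_subcarriers: int) -> np.ndarray:
    """Random complex values standing in for a measured CSI snapshot (synthetic)."""
    shape = (n_antennas, n_subcarriers)
    return rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

dataset1 = random_csi(1, 1992)  # 1x1 link, 160 MHz channel
mmfi = random_csi(3, 114)       # 3 antennas x 114 subcarriers
print(csi_amplitude_features(dataset1).shape)  # (1992,)
print(csi_amplitude_features(mmfi).shape)      # (342,)

# Arbitrary phase rotations (e.g. from unsynchronized clocks) change every
# phase but leave the amplitudes, and hence the features, untouched.
phase_noise = np.exp(1j * rng.uniform(0, 2 * np.pi, size=mmfi.shape))
assert np.allclose(csi_amplitude_features(mmfi * phase_noise),
                   csi_amplitude_features(mmfi))
```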

Metrics

They compare against a pixel-prediction baseline with similar architecture and procedure (except for the output), and against CSI2Image (a hybrid GAN). Metrics include RMSE, SSIM (structural fidelity), and FID (perceptual quality), evaluated on full images and on detected persons (using YOLOv3).
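FID summarizes two sets of image features (real and generated) as Gaussians and measures the Fréchet distance between them, ‖μ₁ − μ₂‖² + Tr(Σ₁ + Σ₂ − 2(Σ₁Σ₂)^{1/2}); lower is better. In the standard FID the features are Inception-v3 activations; that feature extractor is not shown in this numpy-only sketch, which also includes RMSE for comparison. The helper names are illustrative.

```python
import numpy as np

def _sqrtm_psd(a: np.ndarray) -> np.ndarray:
    """Matrix square root of a symmetric positive semi-definite matrix."""
    w, v = np.linalg.eigh(a)
    return (v * np.sqrt(np.clip(w, 0.0, None))) @ v.T

def frechet_distance(mu1, sigma1, mu2, sigma2) -> float:
    """Fréchet distance between N(mu1, sigma1) and N(mu2, sigma2).

    Uses Tr((S1 S2)^{1/2}) = Tr((S1^{1/2} S2 S1^{1/2})^{1/2}), which keeps the
    computation on symmetric PSD matrices.
    """
    diff = mu1 - mu2
    s1_half = _sqrtm_psd(sigma1)
    inner = s1_half @ sigma2 @ s1_half
    eig = np.clip(np.linalg.eigvalsh(inner), 0.0, None)
    tr_covmean = np.sqrt(eig).sum()
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_covmean)

def fid_from_features(real_feats: np.ndarray, fake_feats: np.ndarray) -> float:
    """FID-style score from two (n_images, dim) feature matrices."""
    return frechet_distance(real_feats.mean(axis=0), np.cov(real_feats, rowvar=False),
                            fake_feats.mean(axis=0), np.cov(fake_feats, rowvar=False))

def rmse(a: np.ndarray, b: np.ndarray) -> float:
    """Root-mean-square error between two images (pixel-wise)."""
    return float(np.sqrt(np.mean((a - b) ** 2)))
```

The contrast explains the results below: RMSE rewards pixel-exact agreement (which memorization can deliver in a repetitive scene), while FID rewards generated images whose feature statistics resemble real ones.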

- On Dataset 1, LatentCSI clearly outperforms the other methods in FID (perceptual quality) and trains significantly faster thanks to its low-dimensional output.

- On MM-Fi, the baseline excels in RMSE/SSIM (the repetitive scene favors memorization), but LatentCSI again wins in FID, especially in the person-focused evaluation. The hybrid GAN falls behind in both scenarios.

Beyond the numbers, the authors note that LatentCSI outputs are better starting points for further refinement with diffusion (adjusting “strength” and prompts), while the baseline tends to undertrain or produce artifacts as resolution scales up.

Privacy and control: why the “bottleneck” helps

An especially interesting aspect is the tension between utility and privacy. The latent bottleneck prevents extracting micro-details that could identify individuals (e.g., a sharp face or visible text), while still allowing plausible reconstructions and text-guided edits (e.g., “a person in a small room, 4K, realistic”; “anime style drawing in a lab”). In essence, the method preserves the semantic information in the CSI about what is where, while visual details are synthesized under user control.

For sensitive deployments (health, offices, homes), this approach reduces risks by design compared to pipelines that reproduce the original image.

Limitations and realism

LatentCSI assumes the CSI contains enough information to position objects and people accurately and coherently, which depends on the scene, the TX/RX geometry, bandwidth, and SNR. It also requires paired CSI-image data for training (e.g., calibration with a camera), although inference uses only CSI. Additionally, the pretrained LDM contributes *perceptual* knowledge learned from the world (not from the CSI), which, if left uncontrolled, can hallucinate stylistic details; hence the importance of text guidance and of tuning the “strength” parameter to balance fidelity and diversity.

Contributions to the state of the art

- Simplified architecture: one CSI encoder → latent embedding → reused LDM pipeline.

- Efficiency: training in latent space reduces cost and memory and accelerates training epochs compared to pixel prediction.

- Perceptual quality: improved FID and semantic coherence in dynamic scenes.

- Control: text prompts to edit or stylize without explicitly encoding a reference image (unlike a standard img2img pipeline).

- Privacy-by-design: lower chances of recreating sensitive traits; plausible content under explicit control.

How it would feature in real products

- Security/IoT: non-intrusive monitoring of rooms without active cameras; semantic alerts (“person standing by the door”).

- Smart-home/Assistance: fall or presence detection with synthetic visual feedback for operators, without exposing individuals.

- Retail/Logistics: object distribution or activity recognition in corridors without cameras, with controlled visual reconstruction.

- Robotics/Edge: fast semantic maps from radio in environments where vision fails (smoke, low light).

All of these would require initial calibration and legal review: even if the reconstruction is not photographic, it is still a representation of people and spaces that requires consent, notice, and controls.

FAQs

Does it need cameras in production?

No. The camera is only used during training to pair CSI with images and teach the CSI encoder the correct latent embedding. In deployment, it infers only with CSI.

What diffusion model does it use?

It works with a pretrained latent diffusion model (e.g., Stable Diffusion). The CSI encoder is trained to mimic the embedding that the VAE of that LDM would produce, then the denoiser and decoder are used as-is.

Is it “seeing through walls”?

CSI captures the environment’s signature (reflections, multipath). With a suitable setup and sufficient bandwidth, it can detect presence and structures. However, the method does not promise photographic vision through walls; it synthesizes a plausible image consistent with the CSI and the prompt, within clear physical limits.
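One way to see why bandwidth matters: as a standard signal-processing rule of thumb (not a figure from the paper), a channel of bandwidth B resolves propagation delays of roughly 1/B, i.e. path-length differences of about c/B. Multipath components closer together than that blur into each other in the CSI. The snippet applies this approximation to the two channel widths used in the evaluation datasets.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def path_length_resolution(bandwidth_hz: float) -> float:
    """Rule-of-thumb resolvable path-length difference: c / B."""
    return SPEED_OF_LIGHT / bandwidth_hz

for name, bw in [("Dataset 1 (160 MHz)", 160e6), ("MM-Fi (40 MHz)", 40e6)]:
    print(f"{name}: ~{path_length_resolution(bw):.2f} m")
# Dataset 1 (160 MHz): ~1.87 m
# MM-Fi (40 MHz): ~7.49 m
```

Four times the bandwidth gives roughly four times finer separation of reflections, which is one reason wider channels carry more spatial detail.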

What about privacy?

The latent bottleneck makes it difficult to reconstruct sensitive traits. Additionally, text guidance allows anonymizing/stylizing (e.g., cartoon style) or preventing reproduction of faces and text. Still, as it is a representation of people/spaces, it must adhere to regulations, consent, and good practices.

What quality can be expected in real, noisy scenes?

In dynamic, occluded environments, LatentCSI showed better perceptual quality than comparable baselines at a lower training cost. Exact fidelity depends on bandwidth, geometry, SNR, and calibration; adjusting the “strength” and prompts helps balance realism and control.

Credits and support: The work acknowledges funding from JSPS KAKENHI and JST ASPIRE, and the use of the TSUBAME4 supercomputer (Tokyo Institute of Science). Third-party resources mentioned include the MM-Fi dataset and tools such as FeitCSI.