Langfuse is emerging as a key open-source platform for developing, observing, and evaluating applications built on large language models. Its cloud-native approach and its integration with CI/CD workflows make it a strategic tool for any team working with generative AI at scale.

As the use of large language models (LLMs) expands in sectors such as commerce, customer service, medicine, and finance, an observability infrastructure tailored to these systems becomes essential. Langfuse, an open-source platform that came out of Y Combinator's Winter 2023 (W23) batch, positions itself as one of the main enablers for companies looking to understand, optimize, and scale their generative AI applications.

Langfuse is designed to run in both public and private cloud environments and can be deployed with Kubernetes, Terraform, or Docker Compose. It provides complete visibility into interactions with LLMs, along with collaboration tools, automated testing, and detailed traceability.

Deep Observability for AI-Driven Applications

Langfuse addresses a growing need in cloud and AI: structured observability for applications built on language models. Traditional logs are not enough here, because LLM applications require capturing complex contexts, chained actions (such as retrievals, embeddings, or agent decisions), and quality metrics for the generated output.
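To make "structured observability" more concrete, the sketch below models a single request as a tree of nested steps with timings, token counts, and a quality score. The `Trace` and `Observation` classes and all their field names are hypothetical, chosen for illustration; this is not the Langfuse SDK or its data model, only the general shape of the data such a tool captures.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Iterator, Optional


@dataclass
class Observation:
    """One step of a request (hypothetical model, not the Langfuse SDK)."""
    name: str
    kind: str            # e.g. "span", "embedding", "retrieval", "generation"
    start_ms: int
    end_ms: int
    tokens: int = 0      # tokens consumed by this step, if it called a model
    output: Optional[str] = None
    children: list[Observation] = field(default_factory=list)

    def duration_ms(self) -> int:
        return self.end_ms - self.start_ms


@dataclass
class Trace:
    """A whole request: a root observation plus quality scores."""
    name: str
    root: Observation
    scores: dict[str, float] = field(default_factory=dict)


def walk(obs: Observation, depth: int = 0) -> Iterator[tuple[int, Observation]]:
    """Depth-first traversal yielding (depth, observation) pairs."""
    yield depth, obs
    for child in obs.children:
        yield from walk(child, depth + 1)


def total_tokens(trace: Trace) -> int:
    """Sum token usage over every step in the trace."""
    return sum(obs.tokens for _, obs in walk(trace.root))


def render(trace: Trace) -> str:
    """Indented text view of the trace tree with per-step latency."""
    lines = [f"trace {trace.name} scores={trace.scores}"]
    for depth, obs in walk(trace.root):
        lines.append(f"{'  ' * (depth + 1)}{obs.kind}:{obs.name} {obs.duration_ms()}ms")
    return "\n".join(lines)


# Example: a retrieval-augmented answer built from three chained steps.
trace = Trace(
    name="support-chat",
    root=Observation("answer_question", "span", 0, 900, children=[
        Observation("embed_query", "embedding", 0, 50, tokens=12),
        Observation("vector_search", "retrieval", 50, 200),
        Observation("llm_call", "generation", 200, 900, tokens=350,
                    output="You can reset your password from Settings."),
    ]),
    scores={"helpfulness": 0.8},
)
print(render(trace))
print("total tokens:", total_tokens(trace))
```

Keeping each step as a child of the request, rather than as a flat log line, is what lets a viewer show which retrieval or model call was responsible for latency or cost.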

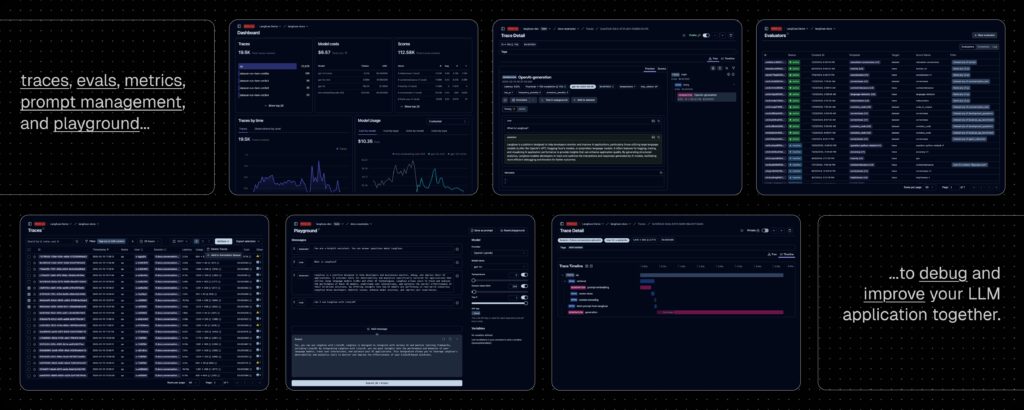

With Langfuse, teams can:

- Record and visualize detailed traces of each model call.

- Inspect the full flow of operations and decisions in real time.

- Iterate and debug from an interactive environment (LLM Playground).

- Version and test prompts collaboratively and securely.

- Run evaluations, whether automated (LLM-as-a-judge), based on human feedback, or through custom pipelines.
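Prompt versioning is easier to picture with a toy registry: each save creates a new immutable version, and a label such as `production` points at exactly one version, so rolling out a prompt means moving the label rather than editing text in place. The `PromptRegistry` class, its methods, and the `str.format`-style `{placeholders}` are hypothetical stand-ins for a managed prompt service; they do not reproduce Langfuse's prompt-management API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PromptVersion:
    version: int
    template: str
    labels: set[str] = field(default_factory=set)


class PromptRegistry:
    """Hypothetical in-memory stand-in for a prompt-management service."""

    def __init__(self) -> None:
        self._prompts: dict[str, list[PromptVersion]] = {}

    def create(self, name: str, template: str, labels: tuple[str, ...] = ()) -> PromptVersion:
        """Save a new immutable version; its labels move off older versions."""
        versions = self._prompts.setdefault(name, [])
        new = PromptVersion(len(versions) + 1, template, set(labels))
        for old in versions:
            old.labels -= new.labels
        versions.append(new)
        return new

    def promote(self, name: str, version: int, label: str = "production") -> None:
        """Point `label` at `version`, removing it from every other version."""
        for pv in self._prompts[name]:
            pv.labels.discard(label)
        self._prompts[name][version - 1].labels.add(label)

    def get(self, name: str, label: str = "production") -> PromptVersion:
        """Return the version currently carrying `label`."""
        for pv in self._prompts[name]:
            if label in pv.labels:
                return pv
        raise KeyError(f"no version of {name!r} is labeled {label!r}")


def compile_prompt(pv: PromptVersion, **variables: str) -> str:
    """Fill the template's {placeholders} with concrete values."""
    return pv.template.format(**variables)


registry = PromptRegistry()
registry.create("summarize", "Summarize this text: {text}", labels=("production",))
registry.create("summarize", "Summarize in {n} bullet points: {text}", labels=("staging",))

live = registry.get("summarize")   # production still points at version 1
print(live.version, compile_prompt(live, text="Quarterly report..."))

registry.promote("summarize", 2)   # roll out version 2 by moving the label
print(registry.get("summarize").version)
```

Because older versions are never overwritten, rolling back is just another `promote` call pointing the label at an earlier version number.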

Full Integration in DevOps and LLMOps Environments

Langfuse’s cloud-native approach allows it to integrate into most modern deployment or monitoring pipelines. Its SDKs for Python and JavaScript/TypeScript, combined with a public API documented through OpenAPI and Postman collections, support use both in local testing and in complex CI/CD environments.
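Because the public API is plain HTTP, a CI job can query it without any SDK. The sketch below only builds the request, without sending it, assuming what Langfuse's API reference describes: HTTP Basic auth with the project's public key as username and secret key as password. The `/api/public/traces` path, the `limit` parameter, and the placeholder keys are assumptions to check against the OpenAPI spec for your Langfuse version; `build_request` is a hypothetical helper.

```python
import base64
import urllib.parse
import urllib.request


def build_request(host: str, public_key: str, secret_key: str,
                  path: str = "/api/public/traces", **params) -> urllib.request.Request:
    """Build (but do not send) an authenticated GET request.

    Assumption: Basic auth with public key as username, secret key as password.
    """
    credentials = f"{public_key}:{secret_key}".encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")
    query = urllib.parse.urlencode(params)
    url = f"{host.rstrip('/')}{path}" + (f"?{query}" if query else "")
    req = urllib.request.Request(url, method="GET")
    req.add_header("Authorization", f"Basic {token}")
    req.add_header("Accept", "application/json")
    return req


# Placeholder keys: real ones come from the project settings, ideally via CI secrets.
req = build_request("https://cloud.langfuse.com",
                    "pk-lf-PLACEHOLDER", "sk-lf-PLACEHOLDER", limit=10)
print(req.get_method(), req.full_url)
# To actually send it: urllib.request.urlopen(req), then json.load() the response.
```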

Langfuse integrates directly with:

- LLM frameworks and SDKs such as LangChain, LlamaIndex, Haystack, DSPy, LiteLLM, and OpenAI.

- Visual builders and chat interfaces such as Flowise, Langflow, and OpenWebUI.

- Models running locally (e.g., Ollama) or through remote services (e.g., Amazon Bedrock, HuggingFace).

- Agent frameworks such as CrewAI, Goose, and AutoGen.

In business contexts, this integration capability translates into complete traceability of deployed model behavior and makes it easier to run regression tests, benchmarks, and performance audits, all while keeping control over the infrastructure.
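Regression testing in CI often reduces to one question: did the new version score worse on the same dataset? The sketch below compares per-item evaluation scores from a baseline and a candidate run and fails when the mean drops by more than a tolerance. The score format (item id mapped to a value in [0, 1]), the 0.02 tolerance, and `regression_gate` itself are assumptions for illustration; in practice the scores might be exported from an evaluation run rather than hard-coded.

```python
from __future__ import annotations

import statistics


def regression_gate(baseline: dict[str, float], candidate: dict[str, float],
                    max_drop: float = 0.02) -> tuple[bool, str]:
    """Compare mean evaluation scores over the same dataset items.

    Fails if the candidate is missing items or its mean score drops by
    more than `max_drop` relative to the baseline.
    """
    missing = sorted(baseline.keys() - candidate.keys())
    if missing:
        return False, f"candidate is missing items: {missing}"
    base_mean = statistics.mean(list(baseline.values()))
    cand_mean = statistics.mean([candidate[item] for item in baseline])
    drop = base_mean - cand_mean
    report = f"baseline={base_mean:.3f} candidate={cand_mean:.3f} drop={drop:+.3f}"
    return drop <= max_drop, report


# Hypothetical scores, e.g. from an LLM-as-a-judge run over a fixed dataset.
baseline = {"q1": 0.90, "q2": 0.80, "q3": 0.70}
candidate = {"q1": 0.90, "q2": 0.75, "q3": 0.72}
ok, report = regression_gate(baseline, candidate)
print("PASS" if ok else "FAIL", report)
# In a CI script, finish with sys.exit(0 if ok else 1) so a regression fails the build.
```

Comparing means only over the baseline's items keeps the check fair when the candidate run includes extra, newly added test cases.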

Flexible Deployment, Guaranteed Privacy

Langfuse can be deployed in minutes locally, on a VM, or on Kubernetes. The Langfuse Cloud version, offered as SaaS, includes a generous free tier that requires no credit card. However, for organizations that handle sensitive data or operate under strict regulatory frameworks, self-hosting remains the preferred route.

On privacy, Langfuse does not share data with third parties, and its telemetry can be disabled entirely (TELEMETRY_ENABLED=false), which helps meet compliance requirements in sectors such as banking, healthcare, or defense.
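A self-hosted deployment can enforce the telemetry setting with a small pre-deploy check. This sketch assumes the configuration lives in a `.env`-style file of `KEY=value` lines and treats a missing `TELEMETRY_ENABLED` as enabled, so the check fails closed. Only the variable name comes from the text above; the file format and the helpers `parse_env` and `telemetry_disabled` are hypothetical.

```python
def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=value lines, skipping blanks and # comments."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def telemetry_disabled(env: dict[str, str]) -> bool:
    """True only if telemetry is explicitly switched off (fails closed)."""
    return env.get("TELEMETRY_ENABLED", "true").strip().lower() == "false"


env_text = """
# self-hosted deployment settings (hypothetical file)
TELEMETRY_ENABLED="false"
"""
env = parse_env(env_text)
print("telemetry disabled:", telemetry_disabled(env))
```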

Real Use Cases and Growing Adoption

Leading projects in the LLM ecosystem, such as Dify, LobeChat, Langflow, MindsDB, and Flowise, use Langfuse as a foundational traceability tool, a sign of its technological maturity and the strength of its community. Companies building chatbots, intelligent assistants, semantic analysis tools, or document automation are already adopting Langfuse to gain control over their applications and scale their operations.

Langfuse also supports responsible AI practices by making it possible to monitor model behavior, collect user feedback, and detect unexpected or biased outputs.

A Key Infrastructure Layer for Enterprise Generative AI

As LLMs become part of the core business of many companies, Langfuse is establishing itself as a critical layer in the observability stack. It not only speeds up development but also improves transparency, reliability, and efficiency in cloud environments.

For organizations operating in multiple regions, Langfuse supports deployments in the EU or the US to meet data sovereignty requirements. For hybrid or edge architectures, its modular design lets it adapt to those topologies as well.
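When data residency decides where traces may be stored, the target host can be selected from configuration instead of being hard-coded. In this sketch the two cloud base URLs are the EU and US Langfuse Cloud endpoints as we understand them from Langfuse's documentation (verify them before use), and `select_host` with its self-hosted override is a hypothetical helper.

```python
from __future__ import annotations

# Assumed Langfuse Cloud base URLs per data region; verify against the docs.
CLOUD_HOSTS = {
    "eu": "https://cloud.langfuse.com",
    "us": "https://us.cloud.langfuse.com",
}


def select_host(region: str, self_hosted_url: str | None = None) -> str:
    """Pick the base URL for a region, letting a self-hosted URL take priority."""
    if self_hosted_url:
        return self_hosted_url.rstrip("/")
    try:
        return CLOUD_HOSTS[region.strip().lower()]
    except KeyError:
        raise ValueError(
            f"unsupported region {region!r}; expected one of {sorted(CLOUD_HOSTS)}"
        ) from None


print(select_host("EU"))
print(select_host("eu", self_hosted_url="https://langfuse.internal.example/"))
```

Failing loudly on an unknown region is deliberate: silently falling back to a default region is exactly the kind of mistake data sovereignty rules are meant to prevent.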

Conclusion

Langfuse represents the natural evolution of traceability systems in the age of generative artificial intelligence. Its modular approach, self-hosted capability, and compatibility with the LLM ecosystem make it an essential platform for engineering and operations teams working with cloud AI.

In a future dominated by conversational AI, traceability is not an option; it’s a necessity. And Langfuse is leading the way.

🔗 More information: https://langfuse.com

🛠️ GitHub Repository: https://github.com/langfuse/langfuse

Source: AI News