The new version of Kioxia’s open-source AiSAQ software lets users balance search performance against storage capacity on SSDs, without relying on DRAM.

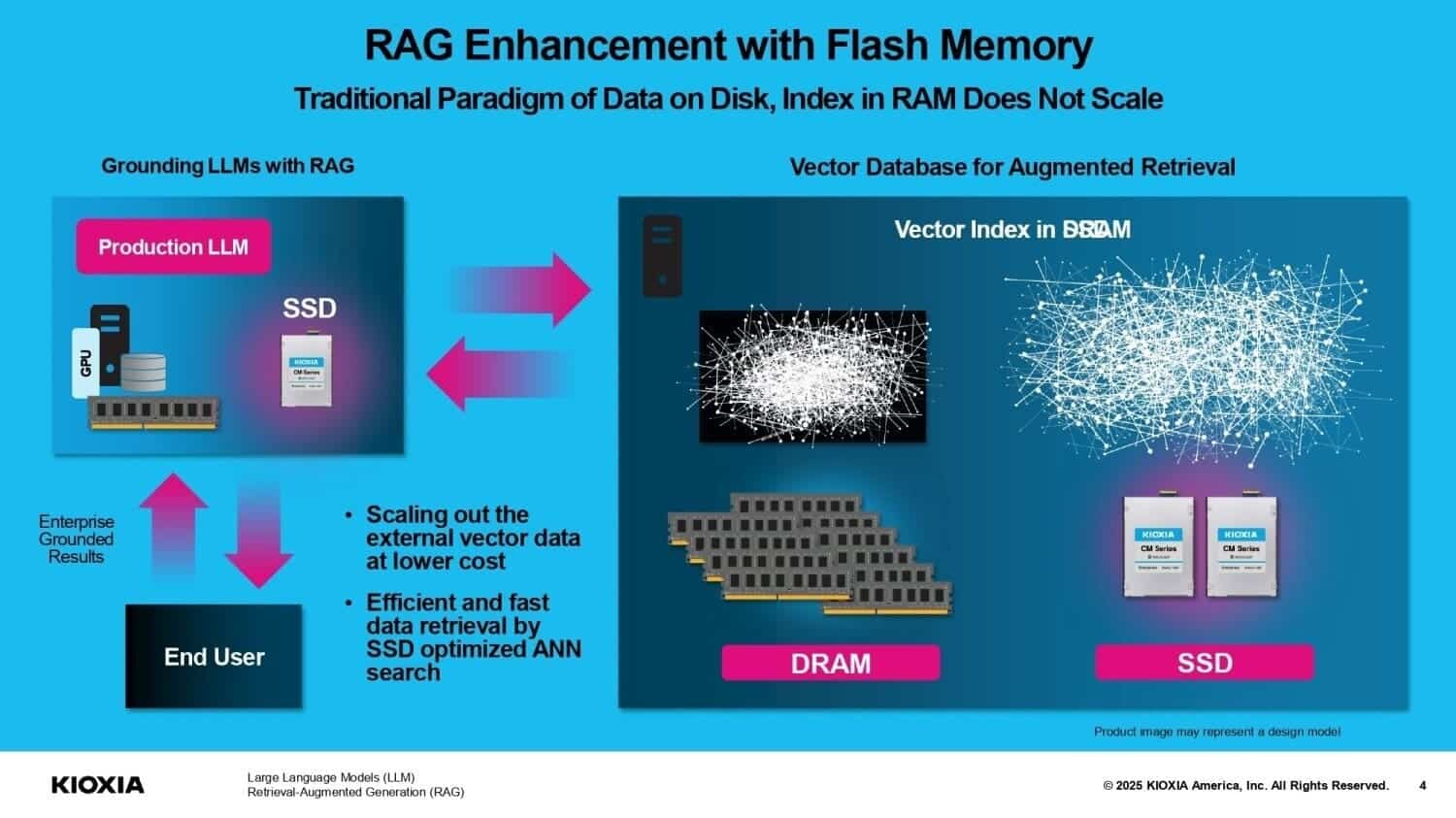

Kioxia Corporation, a global leader in memory solutions, has announced a significant update to its AiSAQ (All-in-Storage ANNS with Product Quantization) software. AiSAQ is designed to improve vector search performance in retrieval-augmented generation (RAG) systems by making the most of SSD capabilities without holding the vector index in DRAM.

The new open-source release introduces configurable controls that let system architects choose the trade-off between the number of vectors that can be stored and search performance (queries per second), tuning the system to each workload's requirements without changing the hardware.

An SSD-first approach to vector searches

Originally presented in January 2025, AiSAQ uses an approximate nearest neighbor search (ANNS) algorithm optimized for SSDs and derived from Microsoft’s DiskANN. Unlike traditional systems that load large vector indexes into DRAM, AiSAQ searches directly from storage, so it scales with SSD capacity instead of being limited by host memory.
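DiskANN-style indexes answer a query by walking a proximity graph: starting from one or more entry nodes, the search repeatedly expands the closest unexplored candidates, and when the graph lives on an SSD every node fetched costs a storage read. The Python sketch below is a toy illustration of that idea under simplifying assumptions, not AiSAQ's or DiskANN's code. The record format, the `read_node` callable (standing in for one SSD read) and the `list_size` and `beam_width` parameters are hypothetical, and distances are computed on full vectors rather than on PQ codes.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical on-"disk" record: (full-precision vector, neighbor IDs).
NodeRecord = Tuple[Sequence[float], List[int]]


def beam_search(
    query: Sequence[float],
    entry_points: List[int],
    read_node: Callable[[int], NodeRecord],
    k: int = 1,
    list_size: int = 8,
    beam_width: int = 2,
) -> Tuple[List[int], int, int]:
    """Toy best-first (beam) search over a proximity graph kept in storage.

    Returns (top-k node IDs, number of node reads, number of search rounds).
    """
    reads = 0
    records: Dict[int, NodeRecord] = {}

    def fetch(node_id: int) -> float:
        # Each call models one storage read of a node record.
        nonlocal reads
        records[node_id] = read_node(node_id)
        reads += 1
        return math.dist(query, records[node_id][0])

    seen = set(entry_points)
    candidates = sorted((fetch(e), e) for e in entry_points)[:list_size]
    expanded = set()
    rounds = 0
    while True:
        # Expand up to `beam_width` of the closest not-yet-expanded candidates.
        batch = [n for _, n in candidates if n not in expanded][:beam_width]
        if not batch:
            break
        rounds += 1
        for node_id in batch:
            expanded.add(node_id)
            for nb in records[node_id][1]:
                if nb not in seen:
                    seen.add(nb)
                    candidates.append((fetch(nb), nb))
        # Keep only the `list_size` best candidates found so far.
        candidates.sort()
        del candidates[list_size:]
    return [n for _, n in candidates[:k]], reads, rounds


if __name__ == "__main__":
    # Five nodes on a line: node i sits at x = i and links to i - 1 and i + 1.
    graph = {i: ([float(i)], [j for j in (i - 1, i + 1) if 0 <= j <= 4]) for i in range(5)}
    print(beam_search([3.9], [0], graph.__getitem__))     # ([4], 5, 5)
    print(beam_search([3.9], [0, 4], graph.__getitem__))  # ([4], 5, 3)
```

On this tiny path graph, starting from both ends reaches the answer in fewer rounds than starting from one end, which gives some intuition for why controls such as entry points and beam width affect search speed.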

“When the SSD capacity is fixed, improving search performance means storing fewer vectors, and storing more vectors reduces performance,” Kioxia representatives explain. The new version lets administrators tune this balance between performance and the volume of vector data, which matters for applications such as RAG, offline semantic search, and recommendation systems.
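The quoted trade-off can be made concrete with back-of-the-envelope arithmetic. The sketch below assumes a simplified DiskANN-like record per node (a neighbor count, the full float32 vector and the neighbor IDs) plus, optionally, the PQ codes of some neighbors stored in-line, with each record padded to whole 4 KiB sectors. All sizes and the helper names `node_bytes` and `vectors_per_ssd` are illustrative assumptions, not AiSAQ's actual on-disk format.

```python
import math

SECTOR_BYTES = 4096  # assumed: each record is padded to whole 4 KiB sectors


def node_bytes(dim: int, degree: int, pq_bytes: int, inline_pq: int,
               elem_bytes: int = 4, id_bytes: int = 4) -> int:
    """Size of one hypothetical node record before padding:
    neighbor count + full vector + neighbor IDs + in-lined PQ codes."""
    return id_bytes + dim * elem_bytes + degree * id_bytes + inline_pq * pq_bytes


def vectors_per_ssd(capacity_bytes: int, record_bytes: int) -> int:
    """How many padded records fit in a fixed capacity."""
    padded = math.ceil(record_bytes / SECTOR_BYTES) * SECTOR_BYTES
    return capacity_bytes // padded


if __name__ == "__main__":
    # Assumed example: 768-dim float32 vectors, 64 neighbors,
    # 96-byte PQ codes, and a 10**12-byte drive.
    for inline in (0, 7, 8, 64):
        rec = node_bytes(768, 64, 96, inline)
        print(f"{inline:>2} in-lined PQ codes: {rec} B/record, "
              f"{vectors_per_ssd(10**12, rec):,} vectors")
```

With these assumed numbers, a record with no in-lined PQ codes is 3,332 bytes, so about 244 million vectors fit in 10^12 bytes; up to 7 in-lined codes still fit in the same sector; 8 push the record into a second sector (about 122 million vectors); and in-lining all 64 gives 9,476 bytes and about 81 million vectors. In a design where neighbors' PQ codes would otherwise need separate reads, each in-lined code saves one, which is the performance side of the trade-off.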

Key innovations of the new AiSAQ-DiskANN

Among the main new features of the software are:

- Scalable search without DRAM: PQ (product quantization) vectors are read from the SSD on demand instead of being held in memory.

- In-line PQ vectors: PQ vectors can be stored inside each index node's record to reduce the number of I/O operations.

- Optimized vector reorganization: reorganizing how vectors are stored minimizes disk accesses during searches.

- Multiple entry points: searches can start from several entry points in the index, reducing the number of iterations.

- Vector beam width: the number of nodes processed per iteration is adjustable, allowing search operations to be parallelized.

- Static and dynamic PQ vector cache: speeds up access to PQ vectors using thread-specific LRU (least recently used) policies.
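The last bullet combines two familiar caching ideas. As a minimal sketch, assuming a hypothetical `PQVectorCache` class and a `read_pq` callable that stands in for an SSD read (neither is AiSAQ's API), a static tier can hold PQ codes loaded once and shared read-only by all threads, while each thread keeps its own small LRU tier so lookups need no locking:

```python
import threading
from collections import OrderedDict
from typing import Callable, Dict


class PQVectorCache:
    """Hypothetical two-tier cache for PQ codes.

    Static tier: codes loaded up front and shared read-only by all threads.
    Dynamic tier: a small LRU cache private to each thread (no locking needed).
    """

    def __init__(self, static: Dict[int, bytes],
                 read_pq: Callable[[int], bytes], dynamic_capacity: int) -> None:
        self._static = dict(static)
        self._read_pq = read_pq          # stands in for an SSD read
        self._capacity = dynamic_capacity
        self._local = threading.local()  # per-thread LRU and miss counter

    def _lru(self) -> "OrderedDict[int, bytes]":
        # Lazily create this thread's LRU on first use.
        if not hasattr(self._local, "lru"):
            self._local.lru = OrderedDict()
            self._local.misses = 0
        return self._local.lru

    def get(self, node_id: int) -> bytes:
        if node_id in self._static:
            return self._static[node_id]
        lru = self._lru()
        if node_id in lru:
            lru.move_to_end(node_id)     # mark as most recently used
            return lru[node_id]
        code = self._read_pq(node_id)    # miss in both tiers: go to storage
        self._local.misses += 1
        lru[node_id] = code
        if len(lru) > self._capacity:
            lru.popitem(last=False)      # evict the least recently used code
        return code

    def misses(self) -> int:
        """Storage reads issued by the calling thread so far."""
        self._lru()
        return self._local.misses


if __name__ == "__main__":
    cache = PQVectorCache({0: b"\x00"}, lambda i: bytes([i]), dynamic_capacity=2)
    for node in (0, 1, 2, 1, 3, 2):
        cache.get(node)
    print(cache.misses())  # 4
```

A private LRU per thread avoids lock contention on every lookup, at the cost of possibly caching the same code in several threads.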

With these improvements, AiSAQ positions itself as a robust and scalable solution for intensive vector search, especially in generative AI environments where storage efficiency and latency are critical.

AiSAQ in the open-source ecosystem

Kioxia has published the software on GitHub as aisaq-diskann, making it easy for the community to use and contribute to. The code is based on Microsoft’s DiskANN algorithm, which is widely used where fast, scalable vector search is required.

On Linux, AiSAQ requires liburing for asynchronous reads, along with libraries such as Intel MKL to build an optimized binary. Windows builds are also supported with Visual Studio 2017 and later.
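liburing is the user-space library for Linux's io_uring interface, which lets a program keep many reads in flight at once, for example the several node reads of one search iteration. The Python sketch below does not use io_uring: as an assumed stand-in, it overlaps blocking `os.pread` calls in a thread pool. The helper `read_blocks` and the 4 KiB `BLOCK` size are hypothetical, and the sketch is Unix-only because it relies on `os.pread`.

```python
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor
from typing import List

BLOCK = 4096  # assumed size of one node record on disk, in bytes


def read_blocks(fd: int, block_ids: List[int], workers: int = 4) -> List[bytes]:
    """Read several fixed-size blocks concurrently; results keep request order.

    Each worker issues a positional read (no shared file offset), so the
    reads can be in flight at the same time.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda b: os.pread(fd, BLOCK, b * BLOCK), block_ids))


if __name__ == "__main__":
    with tempfile.TemporaryFile() as f:
        for i in range(4):
            f.write(bytes([i]) * BLOCK)  # block i is filled with byte value i
        f.flush()
        blocks = read_blocks(f.fileno(), [2, 0, 3])
        print([b[0] for b in blocks])  # [2, 0, 3]
```

Positional reads are used so that concurrent workers never race on a shared file offset; a real io_uring-based reader would instead submit the whole batch to the kernel in one call.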

A step towards scalable and sustainable AI

As generative language models and architectures such as RAG grow rapidly, the volume of vector data has become a bottleneck for many organizations. Kioxia’s SSD-centered architecture moves away from reliance on DRAM and enables systems that are more scalable, sustainable, and cost-effective for the next generation of artificial intelligence applications.

This evolution reinforces Kioxia’s commitment to advancing smart storage solutions and fostering efficient infrastructures for the AI era, where the integration between hardware and software is key to scaling without sacrificing performance.

via: TechPowerUp and Kioxia on GitHub