As artificial intelligence (AI) workloads become more complex, companies are seeking more efficient and scalable hardware architectures to run deep learning models. FuriosaAI, a promising company in AI chip development, has introduced Tensor Contraction Processing (TCP) as an alternative to traditional systolic arrays.

But is TCP truly a revolutionary innovation, or merely an evolution of existing architectures? To answer this question, FuriosaAI engineers Younggeun Choi and Junyoung Park recently explained the differences between TCP and systolic arrays at key events such as the Hot Chips Conference, the AI Hardware Summit, and the PyTorch Conference.

Below, we explore their explanations and how TCP could redefine AI acceleration.

What is a systolic array and what are its limitations?

A systolic array is a grid of processing elements (PEs) that moves data in a structured and synchronous sequence, akin to a heartbeat (hence the name “systolic”). It is widely used for matrix multiplication, a key operation in deep learning, and has been the foundation of many AI accelerators.
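To make the “heartbeat” concrete, the following Python sketch simulates one common systolic variant cycle by cycle: an output-stationary array in which each PE keeps its own running sum while values of A shift one PE to the right and values of B shift one PE down per cycle. The function name and the choice of dataflow are illustrative assumptions, not a description of any particular accelerator.

```python
def systolic_matmul(A, B):
    """Cycle-level model of an output-stationary M x N systolic array computing A @ B."""
    M, K = len(A), len(A[0])
    if len(B) != K:
        raise ValueError("inner dimensions must match")
    N = len(B[0])
    acc = [[0] * N for _ in range(M)]        # partial sum held in each PE
    a_reg = [[None] * N for _ in range(M)]   # A operand latched in each PE
    b_reg = [[None] * N for _ in range(M)]   # B operand latched in each PE
    # Row i of A enters from the left delayed by i cycles; column j of B enters
    # from the top delayed by j cycles, so matching operands meet in PE (i, j).
    for t in range(K + M + N - 2):
        new_a = [[None] * N for _ in range(M)]
        new_b = [[None] * N for _ in range(M)]
        for i in range(M):
            for j in range(N):
                if j == 0:
                    k = t - i
                    new_a[i][j] = A[i][k] if 0 <= k < K else None
                else:
                    new_a[i][j] = a_reg[i][j - 1]   # shift right one PE per cycle
                if i == 0:
                    k = t - j
                    new_b[i][j] = B[k][j] if 0 <= k < K else None
                else:
                    new_b[i][j] = b_reg[i - 1][j]   # shift down one PE per cycle
        a_reg, b_reg = new_a, new_b
        # Every PE holding a valid operand pair performs one multiply-accumulate.
        for i in range(M):
            for j in range(N):
                if a_reg[i][j] is not None and b_reg[i][j] is not None:
                    acc[i][j] += a_reg[i][j] * b_reg[i][j]
    return acc

print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

In this model, operand k reaches PE (i, j) at cycle i + j + k, which is why the inputs must be skewed; a full pass takes K + M + N - 2 cycles.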

When used under optimal conditions, a systolic array provides high computational efficiency and low energy consumption, as data flows predictably and keeps all processing elements busy. However, its rigid structure brings several limitations:

- Fixed size: If a workload’s dimensions are not a multiple of the array’s dimensions, some processing elements sit idle, lowering utilization.

- Predefined data flow pattern: This limits adaptability when processing tensors of different shapes—a common issue in AI inference tasks.

- Risk of inefficiency: If the array is too large, computational cycles are wasted on small matrices; if it is too small, it does not achieve the expected efficiency on large workloads.
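The fixed-size problem can be quantified with a simple model. The sketch below assumes a weight-stationary mapping in which a K x N weight matrix is cut into tiles the size of the array, and it ignores pipeline fill and drain; the 128 x 128 default is a hypothetical array size chosen for illustration.

```python
import math

def array_utilization(k, n, rows=128, cols=128):
    """Fraction of PE slots doing useful work when a K x N weight matrix is
    tiled onto a fixed rows x cols array (fill/drain cycles ignored)."""
    tiles = math.ceil(k / rows) * math.ceil(n / cols)   # partial tiles still occupy the whole array
    return (k * n) / (tiles * rows * cols)

for k, n in [(128, 128), (130, 128), (64, 64), (4096, 4096)]:
    print(k, n, round(array_utilization(k, n), 3))
```

Under this model, a 130 x 128 matrix needs two tiles and keeps only about 51% of the PEs busy, while a 64 x 64 matrix uses just 25% of them.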

This is where FuriosaAI’s TCP architecture aims to improve the situation.

What makes TCP different?

While TCP and systolic arrays share the goal of parallelizing calculations, FuriosaAI introduces key innovations in flexibility and efficiency:

1. Dynamic computing unit configuration

- Unlike the fixed structure of a systolic array, TCP consists of smaller units (“slices”) that can be dynamically reconfigured based on tensor size.

- This allows TCP to maintain high utilization even when workloads have variable tensor sizes.
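The idea of fusing slices on demand can be illustrated with a toy model. In the sketch below, the slice count (64), slice width (32), and fusing policy are hypothetical parameters, not FuriosaAI’s published configuration or scheduler; the point is only that a fine-grained pool wastes less than a single unit of the same total width.

```python
import math

def configure_slices(n, num_slices=64, slice_width=32):
    """Hypothetical model: fuse only as many fixed-width slices as a dimension of size n needs.

    Returns (slices_used, slices_free, utilization_of_used_slices).
    """
    if n <= 0:
        raise ValueError("n must be positive")
    chunks = math.ceil(n / slice_width)   # slice-width pieces of the dimension
    used = min(num_slices, chunks)        # never fuse more slices than there is work
    passes = math.ceil(chunks / used)     # extra passes if n exceeds the fused width
    util = n / (passes * used * slice_width)
    return used, num_slices - used, util

def monolithic_utilization(n, width=64 * 32):
    """The same total width treated as one fixed unit, for comparison."""
    return n / (math.ceil(n / width) * width)

print(configure_slices(40), monolithic_utilization(40))
print(configure_slices(4096), monolithic_utilization(4096))
```

For a dimension of 40, the sliced model fuses 2 slices at 62.5% utilization and leaves 62 slices free for other work, whereas a single 2,048-wide unit would be under 2% utilized.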

2. Greater flexibility in data movement

- In a systolic array, data moves along fixed, predefined paths through the grid, so computational cycles are wasted whenever a tensor’s shape does not fit that flow.

- TCP introduces a fetch network that distributes data to multiple processing units simultaneously, increasing data reuse.

- Instead of treating computation solely as a spatial operation, as systolic arrays do, TCP also uses temporal reuse: data already held on chip is reused across successive cycles to optimize performance.
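The two kinds of reuse can be contrasted by counting buffer reads. The sketch below is a simplified model, not TCP’s actual fetch network: N hypothetical compute units each produce one column of C = A @ B; `broadcast_a` models spatial reuse (one fetch of A[i][k] delivered to all units at once), and `hold_b` models temporal reuse (each unit keeps the B values it has already fetched in local registers for later rows).

```python
def matmul_with_reads(A, B, broadcast_a=False, hold_b=False):
    """Return (C, reads) for C = A @ B under a toy model of operand reuse."""
    M, K, N = len(A), len(A[0]), len(B[0])
    if len(B) != K:
        raise ValueError("inner dimensions must match")
    C = [[0] * N for _ in range(M)]
    local_b = {}   # (k, j) -> value kept in unit j's registers (temporal reuse)
    reads = 0
    for i in range(M):
        for k in range(K):
            if broadcast_a:
                shared_a = A[i][k]
                reads += 1                    # one fetch, broadcast to all N units
            for j in range(N):
                if broadcast_a:
                    a = shared_a
                else:
                    a = A[i][k]
                    reads += 1                # each unit fetches its own copy
                if hold_b and (k, j) in local_b:
                    b = local_b[(k, j)]       # reused from an earlier row, no fetch
                else:
                    b = B[k][j]
                    reads += 1
                    if hold_b:
                        local_b[(k, j)] = b
                C[i][j] += a * b
    return C, reads

ones = [[1] * 4 for _ in range(4)]
for flags in [(False, False), (True, False), (False, True), (True, True)]:
    print(flags, matmul_with_reads(ones, ones, *flags)[1])
```

For 4 x 4 matrices, the model counts 128 reads with no reuse, 80 with either form of reuse alone, and 32 with both.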

3. Native tensor operations

- Traditional NPUs (neural processing units) are optimized for matrix multiplication, requiring software to transform tensor operations into 2D matrices.

- TCP processes tensors directly, eliminating the need for conversion and avoiding efficiency loss.

- This makes new AI models easier to optimize, reducing the engineering effort required to deploy models like Llama 2 or Llama 3.
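The source does not name the transformations it has in mind. A classic example of lowering a tensor operation to a 2D matrix is im2col, which turns a convolution into a matrix product by copying every input patch into its own row. The single-channel, single-filter Python sketch below compares a direct convolution with the im2col route and shows the duplication it introduces; it illustrates the general cost of lowering and is not a claim about how any specific NPU or TCP compiles convolutions.

```python
def conv2d_direct(x, w):
    """'Valid' 2-D cross-correlation (the deep learning convolution) computed on x directly."""
    k = len(w)
    out_h, out_w = len(x) - k + 1, len(x[0]) - k + 1
    return [[sum(x[i + di][j + dj] * w[di][dj] for di in range(k) for dj in range(k))
             for j in range(out_w)]
            for i in range(out_h)]

def im2col(x, k):
    """Lower x to a 2-D matrix: one row per output position, k*k columns (a copy of each patch)."""
    out_h, out_w = len(x) - k + 1, len(x[0]) - k + 1
    return [[x[i + di][j + dj] for di in range(k) for dj in range(k)]
            for i in range(out_h) for j in range(out_w)]

def conv2d_via_matmul(x, w):
    """Same result via im2col followed by a matrix-vector product."""
    k = len(w)
    out_w = len(x[0]) - k + 1
    cols = im2col(x, k)
    w_flat = [v for row in w for v in row]                          # kernel flattened to k*k
    flat = [sum(a * b for a, b in zip(row, w_flat)) for row in cols]
    out = [flat[r:r + out_w] for r in range(0, len(flat), out_w)]  # reshape back to 2-D
    return out, cols

x = [[r * 4 + c for c in range(4)] for r in range(4)]
w = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
out, cols = conv2d_via_matmul(x, w)
print(out == conv2d_direct(x, w), len(cols) * len(cols[0]), "values after im2col vs 16 in the input")
```

For a 4 x 4 input and a 3 x 3 kernel, the lowered matrix holds 36 values versus 16 in the input, and the duplication grows with kernel size.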

4. Increased energy efficiency

- Memory access is a dominant energy cost in AI: moving data between external memory (DRAM) and the chip’s processing units can consume up to 10,000 times more energy than the computation itself.

- TCP maximizes data reuse within internal buffers, drastically reducing costly accesses to external memory.
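The effect of on-chip reuse on DRAM traffic can be estimated with textbook tiling arithmetic. The sketch below assumes square n x n matrices multiplied in tile x tile blocks that fit in on-chip buffers, counts elements moved to and from DRAM, and combines them with per-operation energy costs passed in as parameters; the example costs are hypothetical placeholders, not measurements of RNGD or any other chip.

```python
def dram_traffic(n, tile):
    """Elements moved between DRAM and the chip for C = A @ B (n x n matrices),
    computed in tile x tile blocks held in on-chip buffers."""
    if n % tile:
        raise ValueError("n must be a multiple of tile")
    blocks = n // tile
    # Each of blocks**2 output tiles streams `blocks` tile pairs of A and B.
    reads = blocks ** 3 * 2 * tile * tile   # equals 2 * n**3 / tile
    writes = n * n                          # each element of C is written once
    return reads + writes

def energy_joules(n, tile, e_dram_pj, e_mac_pj):
    """Total energy under a simple two-term model; per-element and per-MAC costs are inputs in pJ."""
    macs = n ** 3
    return (dram_traffic(n, tile) * e_dram_pj + macs * e_mac_pj) * 1e-12

for tile in (1, 32, 256):
    joules = energy_joules(1024, tile, e_dram_pj=100.0, e_mac_pj=1.0)  # hypothetical costs
    print(f"tile={tile:>3}  DRAM elements={dram_traffic(1024, tile):>13,}  energy={joules:.4f} J")
```

Because DRAM reads fall as 2n^3 / tile, growing the on-chip tile from 32 to 256 cuts read traffic by 8x; that is the lever buffer-centric designs pull.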

How does TCP handle dynamic AI workloads better than systolic arrays?

One of the biggest challenges in AI inference is dealing with variable batch sizes and tensor shapes. Traditional systolic arrays struggle with this because:

- They require a static workload structure, reducing efficiency when batch sizes change.

- They are only fully efficient when the workload completely fills the array, which is often not the case in real inference.

TCP overcomes these limitations through the dynamic reconfiguration of its computing units based on tensor shape. For example:

- If a large model is running, TCP allocates more processing units to optimize performance.

- For smaller workloads, TCP divides into multiple independent units, avoiding resource waste.

- Unlike systolic arrays, TCP adapts in real-time to changes in tensor shape.

This makes TCP well suited to the large-scale AI models run by cloud AI providers and large enterprises.
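The behavior described in this section, where a large workload receives more units and small workloads each get their own group, can be sketched as a proportional partitioning policy. `partition_slices` and its largest-remainder rule are hypothetical illustrations; the source does not describe how TCP actually assigns slices at run time.

```python
def partition_slices(work, num_slices):
    """Hypothetical policy: split a pool of slices among concurrent workloads
    in proportion to their work, giving each at least one slice."""
    if any(w <= 0 for w in work):
        raise ValueError("work sizes must be positive")
    if num_slices < len(work):
        raise ValueError("need at least one slice per workload")
    spare = num_slices - len(work)
    total = sum(work)
    shares = [spare * w / total for w in work]
    base = [int(s) for s in shares]          # floor of each proportional share
    alloc = [1 + b for b in base]
    leftover = spare - sum(base)
    # Hand the remaining slices to the largest fractional remainders (largest-remainder method).
    order = sorted(range(len(work)), key=lambda i: shares[i] - base[i], reverse=True)
    for i in order[:leftover]:
        alloc[i] += 1
    return alloc

print(partition_slices([4096], 64))      # [64]
print(partition_slices([1, 1, 1, 1], 8))  # [2, 2, 2, 2]
print(partition_slices([3, 1], 8))        # [6, 2]
```

Under this policy, a single large request takes all 64 slices, while four equal small requests on an 8-slice pool get 2 slices each.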

How does TCP improve AI inference compared to GPUs?

GPUs currently dominate AI acceleration, but they show inefficiencies compared with purpose-built accelerators based on architectures like TCP:

1. Energy consumption

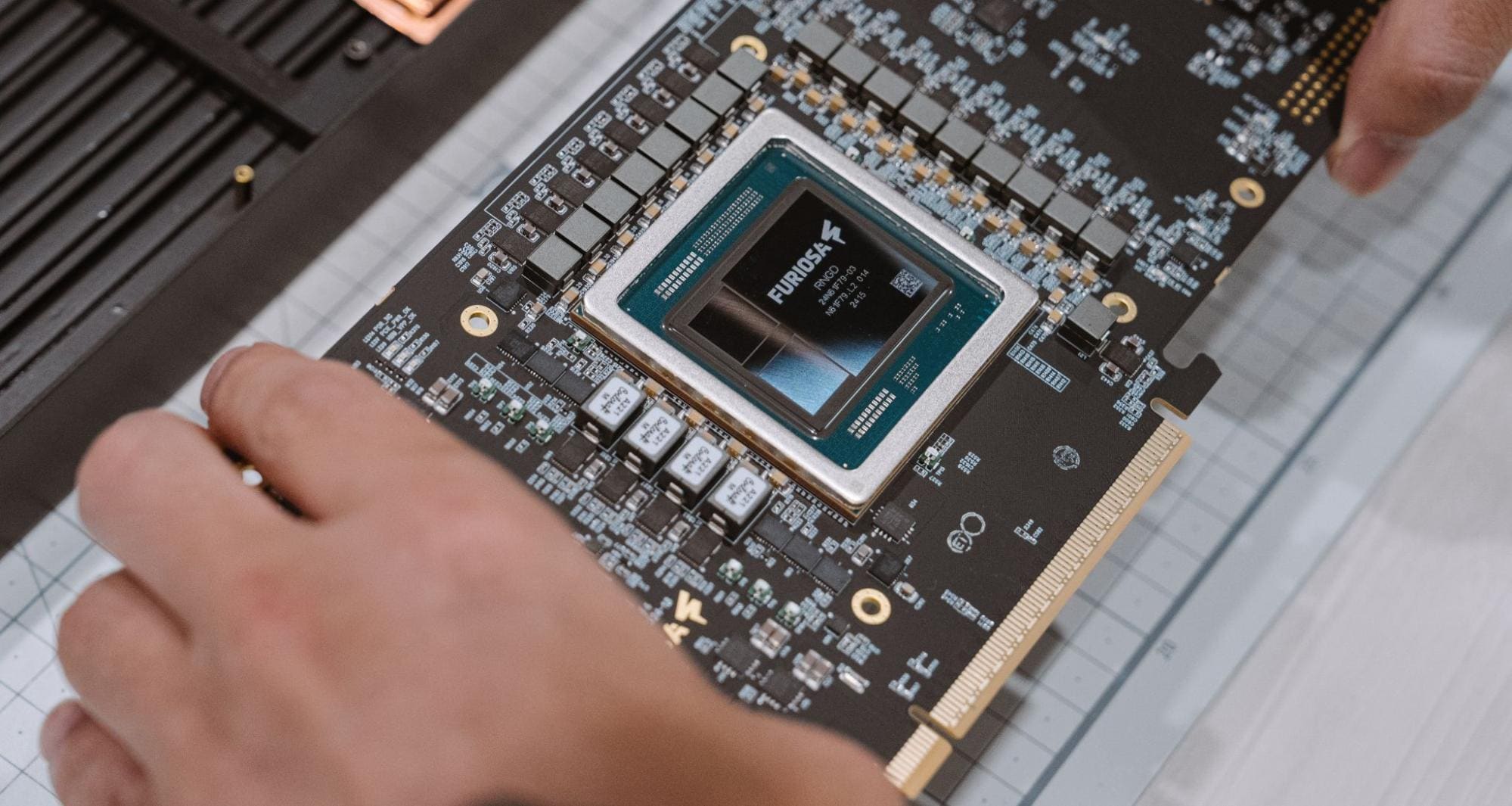

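- High-performance GPUs draw far more power: the Nvidia H100 (SXM version) is rated at up to 700W, while FuriosaAI’s RNGD chip operates at 150W, roughly a 4.7-fold difference in rated power. How much energy each chip uses per inference also depends on its throughput.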
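A rated-power comparison becomes an energy comparison only when both chips deliver the same throughput, because energy per token is average power divided by tokens per second. The sketch below makes that explicit; the wattages and throughputs in the usage line are hypothetical, not benchmark results for the H100 or RNGD.

```python
def energy_per_token_joules(power_w, tokens_per_s):
    """Energy per generated token: average power (W = J/s) divided by throughput."""
    if tokens_per_s <= 0:
        raise ValueError("throughput must be positive")
    return power_w / tokens_per_s

def energy_ratio(power_a_w, tps_a, power_b_w, tps_b):
    """How many times more energy per token device A uses than device B."""
    return energy_per_token_joules(power_a_w, tps_a) / energy_per_token_joules(power_b_w, tps_b)

# Hypothetical devices: a 4x power gap shrinks to about 1.7x in energy per token
# if the higher-power device also delivers 2.4x the throughput.
print(energy_ratio(600, 2400, 150, 1000))
```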

2. Efficient data processing

- GPUs process tensors as 2D matrices, which requires converting multidimensional data. This conversion adds overhead and reduces parallelism.

- TCP maintains tensor structures, eliminating inefficient transformations and simplifying model optimization.
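One way to keep a multidimensional layout without physically rearranging data is stride-based addressing: permuting a tensor’s axes only reorders its shape and strides, and a copy is needed only when something later demands a contiguous layout. The Python sketch below contrasts that zero-copy view with an explicit materialization for a hypothetical (batch, seq, heads, dim) activation permuted to (batch, heads, seq, dim), a common step in attention. The source does not say whether RNGD’s software works this way, and the helper names are hypothetical.

```python
def strides_for(shape):
    """Row-major (C-order) strides, in elements, for a contiguous tensor."""
    strides, step = [], 1
    for dim in reversed(shape):
        strides.append(step)
        step *= dim
    return list(reversed(strides))

def offset(index, strides):
    """Flat buffer position of a multidimensional index."""
    return sum(i * s for i, s in zip(index, strides))

def permuted_view(shape, strides, perm):
    """Reorder axes by permuting shape and strides; no data is copied."""
    return [shape[p] for p in perm], [strides[p] for p in perm]

def materialize(buf, shape, strides):
    """Copy a (possibly permuted) view into a new contiguous buffer, as an explicit layout conversion would."""
    out = []
    def walk(dim, base):
        if dim == len(shape):
            out.append(buf[base])
            return
        for i in range(shape[dim]):
            walk(dim + 1, base + i * strides[dim])
    walk(0, 0)
    return out

shape = [2, 3, 2, 4]                      # (batch, seq, heads, dim), hypothetical sizes
strides = strides_for(shape)              # [24, 8, 4, 1]
buf = list(range(2 * 3 * 2 * 4))          # flat storage
v_shape, v_strides = permuted_view(shape, strides, (0, 2, 1, 3))  # (batch, heads, seq, dim)
copied = materialize(buf, v_shape, v_strides)
b, h, s, d = 1, 1, 2, 3
# A zero-copy read through the view matches the materialized copy.
print(buf[offset((b, h, s, d), v_strides)] == copied[offset((b, h, s, d), strides_for(v_shape))])
```

Reading through the view costs only index arithmetic, whereas `materialize` moves all 48 elements; at model scale, copies like this are part of the conversion overhead described above.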

3. Model deployment and customization

- Optimizing AI models for GPUs requires extensive kernel-level engineering to recover efficiency lost from tensor conversion.

- TCP eliminates this complexity by processing tensors natively, easing AI model deployment and tuning.

The future of AI acceleration: Where does TCP fit in?

The AI hardware sector is evolving rapidly, with a rise in custom chip design to reduce reliance on Nvidia and enhance efficiency. TCP reflects key industry trends:

- The decline of general-purpose AI chips

  - While GPUs initiated the AI revolution, companies are now seeking specialized chips that maximize efficiency for specific workloads.

- The rise of custom silicon for AI

  - Giants like Google, Meta, and Amazon are developing their own AI accelerators to cut costs and improve performance.

  - Reported talks between Meta and FuriosaAI about a possible acquisition suggest a move toward in-house AI chips.

- The step beyond systolic arrays

  - Systolic arrays have dominated dedicated AI accelerators, but their rigid structure makes them a poorer fit for modern models with variable tensor shapes.

  - TCP represents the next evolution, optimizing both performance and energy consumption.

Conclusion: Is TCP the future of AI hardware?

FuriosaAI’s TCP architecture represents a significant advance in AI acceleration, designed to overcome key limitations of systolic arrays and GPUs.

With companies like Meta exploring acquisitions in this field, TCP could play a crucial role in the next generation of AI hardware. It may not completely replace GPUs, but its efficiency and adaptability position it as a key player in modern AI acceleration.

Source: FuriosaAI