Google unveils Ironwood, its seventh-generation TPU, designed from the ground up to run large-scale AI inference with high energy efficiency, extreme performance, and unprecedented scalability.

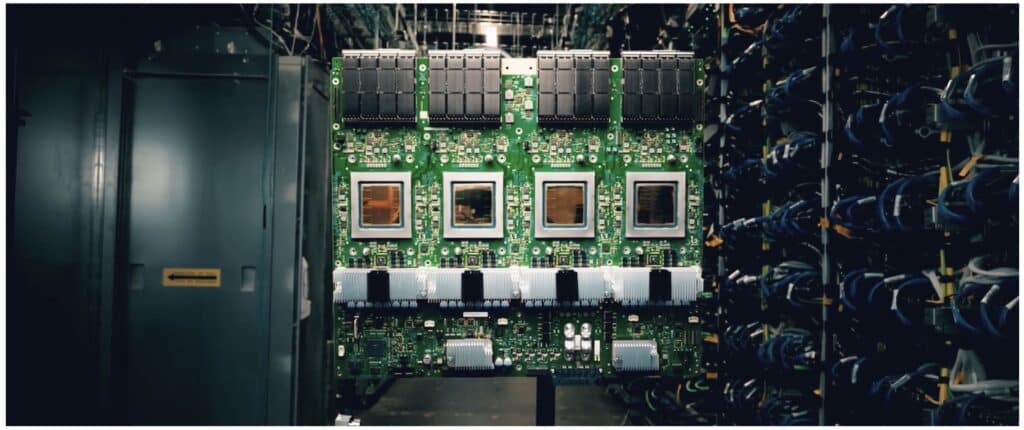

At Google Cloud Next 2025, the company revealed Ironwood, its seventh-generation TPU (Tensor Processing Unit), an architecture tailored specifically to accelerate AI model inference in the cloud. It marks the largest technological leap for these processors since Google announced its first TPU in 2016.

With Ironwood, Google redefines its AI infrastructure to respond to a new paradigm: the shift from models that react to models that think and act proactively. This transition signals the beginning of what the company calls the “age of inference.”

A Historic Leap in Computational Power

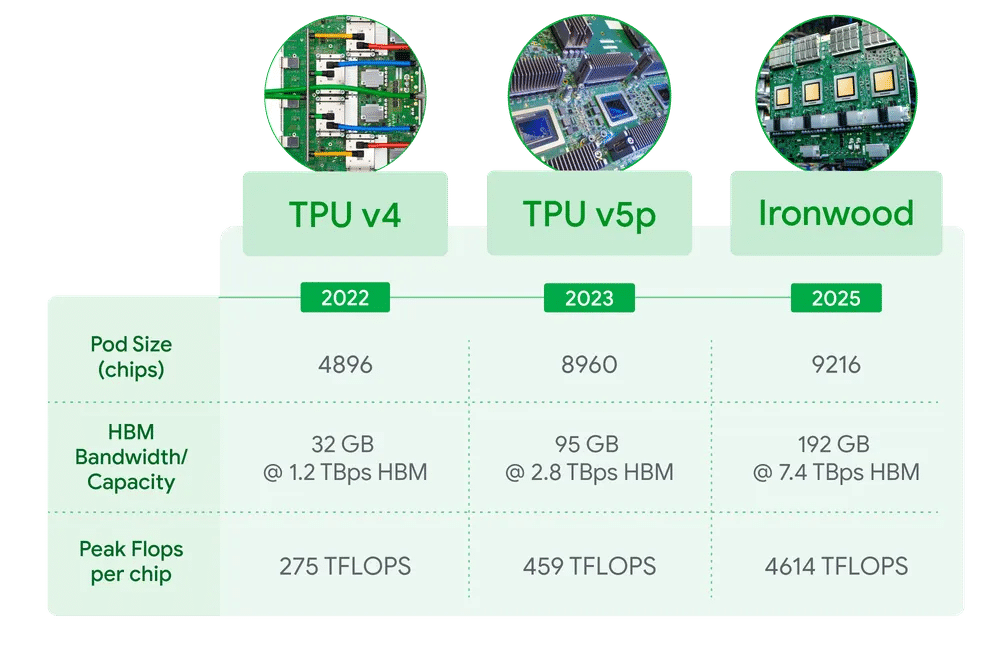

Ironwood scales up to 9,216 chips per pod, for a total of 42.5 exaflops of compute. Google compares this with El Capitan, the world's most powerful supercomputer (1.7 exaflops), putting an Ironwood pod at more than 24 times its capacity, although the comparison sets Ironwood's FP8 peak against El Capitan's FP64 benchmark result. Each chip delivers a peak of 4,614 teraflops (FP8) and carries 192 GB of HBM, six times as much as the previous generation, with 7.2 TB/s of bandwidth, 4.5 times more than its predecessor.
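As a quick sanity check on the headline numbers, the pod figure is simply the per-chip FP8 peak multiplied by the chip count, and the "more than 24 times" claim is that total divided by El Capitan's 1.7 exaflops. The sketch below reproduces both using only the figures quoted above; the helper name `pod_peak_exaflops` is purely illustrative. Keep in mind that El Capitan's figure is an FP64 benchmark result, so the ratio compares peaks at different numerical precisions.

```python
# Figures as quoted in the article (Ironwood peak is FP8).
CHIPS_PER_POD = 9_216
PEAK_TFLOPS_PER_CHIP_FP8 = 4_614
EL_CAPITAN_EXAFLOPS = 1.7  # FP64 benchmark result, as quoted

def pod_peak_exaflops(chips: int, tflops_per_chip: float) -> float:
    """Multiply the per-chip peak by the chip count; 1 exaflop = 1e6 teraflops."""
    return chips * tflops_per_chip / 1e6

pod_ef = pod_peak_exaflops(CHIPS_PER_POD, PEAK_TFLOPS_PER_CHIP_FP8)
ratio = pod_ef / EL_CAPITAN_EXAFLOPS  # mixes FP8 and FP64, so only a rough comparison

print(f"Pod peak: {pod_ef:.1f} exaflops (FP8)")
print(f"Ratio vs El Capitan: {ratio:.1f}x")
```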

Ironwood's capacity is aimed at models such as Gemini 2.5 and AlphaFold, as well as future large language models (LLMs) and Mixture-of-Experts (MoE) models, which require massive parallel processing, very fast memory access, and an internal network that minimizes latency. To that end, Google has implemented a 1.2 Tbps bidirectional Inter-Chip Interconnect (ICI), 50% more than the previous generation, Trillium.
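To see why memory bandwidth matters so much for inference, a common rule of thumb (an assumption here, not a Google figure) is that generating one token at small batch sizes requires streaming every model weight from HBM once, so each decode step takes at least the weight size divided by the bandwidth. The sketch below applies that bandwidth-only bound to the quoted 192 GB and 7.2 TB/s per chip. The 70-billion-parameter FP8 model and the helper names are hypothetical, and the bound ignores KV-cache traffic, compute time, and sharding across chips.

```python
HBM_CAPACITY_GB = 192     # per chip, as quoted
HBM_BANDWIDTH_TB_S = 7.2  # per chip, as quoted

def min_decode_step_ms(params_billion: float, bytes_per_param: float,
                       bandwidth_tb_s: float = HBM_BANDWIDTH_TB_S) -> float:
    """Lower bound on one decode step if every weight is read once from HBM.

    Purely bandwidth-bound: ignores KV-cache reads, compute, and overlap.
    """
    weight_bytes = params_billion * 1e9 * bytes_per_param
    seconds = weight_bytes / (bandwidth_tb_s * 1e12)
    return seconds * 1e3

def fits_on_one_chip(params_billion: float, bytes_per_param: float,
                     capacity_gb: float = HBM_CAPACITY_GB) -> bool:
    """True if the weights alone fit in one chip's HBM (no room reserved for KV cache)."""
    return params_billion * bytes_per_param <= capacity_gb

# Hypothetical 70B-parameter model stored in FP8 (1 byte per parameter).
step_ms = min_decode_step_ms(70, 1.0)
print(f"Fits on one chip: {fits_on_one_chip(70, 1.0)}")
print(f"Bandwidth floor per decode step: {step_ms:.2f} ms "
      f"(about {1000 / step_ms:.0f} tokens/s at batch size 1)")
```

Batching amortizes each weight read across many concurrent requests, which is one reason both HBM capacity and bandwidth matter when serving many users at once.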

Designed for Inference, Not Just Training

While many current AI accelerators are optimized primarily for training, Ironwood was built from the ground up to maximize inference at scale. The result is lower latency, greater energy efficiency, and a better ability to serve models to thousands of users simultaneously in the cloud.

“The era of inference is one where models not only react but also understand, interpret, and act,” says Sundar Pichai, CEO of Google. “Ironwood is our commitment to lead this transition.”

An Architecture Focused on Performance and Efficiency

Ironwood stands out not only for its raw power but also for its thermal and energy efficiency. Thanks to liquid cooling and deeply optimized chip engineering, it delivers twice the performance per watt of Trillium and is nearly 30 times more power-efficient than Google's first Cloud TPU, the TPU v2 from 2018.

At a time when energy availability has become a bottleneck for scaling AI infrastructure, this efficiency gain is a key differentiator for cloud customers who need performance as well as sustainability and cost control.

Two Configurations to Adapt to Every Workload

Ironwood will be available in two main configurations on Google Cloud:

- 256-chip configuration: designed for startups and companies that need advanced performance without deploying hyperscale infrastructure.

- 9,216-chip configuration: tailored for industry leaders, such as research laboratories, LLM providers, or companies that need to train and serve models with trillions of parameters in real time.

Both will be part of Google Cloud's AI Hypercomputer, an integrated architecture of optimized hardware, networking, storage, and software, and developers will be able to scale their workloads with Pathways, the machine learning runtime developed by Google DeepMind.
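The two configurations described above differ mainly in chip count, so a naive way to compare them is to multiply the per-chip figures quoted earlier (4,614 FP8 teraflops, 192 GB of HBM, 7.2 TB/s) by the number of chips. The sketch below does exactly that; the `totals` helper and `ConfigTotals` type are illustrative only, and the results are theoretical peaks that ignore interconnect limits and software overheads.

```python
from dataclasses import dataclass

# Per-chip figures as quoted in the article.
PEAK_TFLOPS_FP8 = 4_614
HBM_GB = 192
HBM_TB_PER_S = 7.2

@dataclass(frozen=True)
class ConfigTotals:
    chips: int
    peak_exaflops: float       # FP8 peak: chips x per-chip peak
    hbm_tb: float              # aggregate HBM capacity (1 TB = 1,000 GB)
    hbm_bandwidth_pb_s: float  # aggregate HBM bandwidth (1 PB/s = 1,000 TB/s)

def totals(chips: int) -> ConfigTotals:
    """Naive aggregate: scales per-chip specs linearly with chip count."""
    return ConfigTotals(
        chips=chips,
        peak_exaflops=chips * PEAK_TFLOPS_FP8 / 1e6,
        hbm_tb=chips * HBM_GB / 1e3,
        hbm_bandwidth_pb_s=chips * HBM_TB_PER_S / 1e3,
    )

for n in (256, 9_216):
    t = totals(n)
    print(f"{t.chips:>5} chips: {t.peak_exaflops:6.2f} EF, "
          f"{t.hbm_tb:8.1f} TB HBM, {t.hbm_bandwidth_pb_s:6.1f} PB/s")
```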

Support for Non-Traditional Workloads

Ironwood is not designed only for LLMs and generative models. It also includes an enhanced SparseCore, a specialized accelerator for the very large embeddings common in recommendation and search systems, which are increasingly used in finance, healthcare, and scientific simulation as well.

This evolution makes Ironwood a flexible solution, able to handle everything from conversational AI to workloads in critical sectors where low latency, consistency, and scalability are vital.
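The "large embeddings" mentioned above are lookup tables with one learned vector per ID (a user, a product, a query term). A typical recommendation request touches only a handful of rows, gathers them, and pools them into a single vector, so the work is dominated by scattered memory reads rather than dense matrix math, which is the kind of access pattern a dedicated unit like SparseCore is meant to speed up. The pure-Python sketch below shows this gather-and-pool ("embedding bag") pattern on a tiny hypothetical table; it illustrates the access pattern only and is not SparseCore's programming interface.

```python
from typing import List, Sequence

Vector = List[float]

def embedding_bag(table: Sequence[Vector], ids: Sequence[int], mode: str = "sum") -> Vector:
    """Gather the rows referenced by `ids` and pool them into one vector.

    Only the referenced rows are read, so the cost is driven by
    random memory accesses rather than arithmetic.
    """
    if not ids:
        raise ValueError("ids must be non-empty")
    dim = len(table[0])
    pooled = [0.0] * dim
    for i in ids:
        row = table[i]  # sparse gather: one random-access read per ID
        for d in range(dim):
            pooled[d] += row[d]
    if mode == "mean":
        pooled = [x / len(ids) for x in pooled]
    elif mode != "sum":
        raise ValueError(f"unsupported mode: {mode}")
    return pooled

# Hypothetical 5-row, 3-dimensional table; production tables can have millions of rows.
table = [[float(r * 10 + c) for c in range(3)] for r in range(5)]
user_history = [1, 3, 3]  # repeated IDs are allowed, as in multi-hot features
print(embedding_bag(table, user_history))
print(embedding_bag(table, user_history, mode="mean"))
```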

Path to the Future: AI-as-a-Service with Google DNA

With Ironwood, Google Cloud reinforces its claim to be the most experienced provider of AI computing: it designs not only the hardware but also the entire software stack, the interconnect networks, and the distributed inference operating system.

“Google is the only hyperscaler with over a decade of experience in delivering AI at planetary scale. Gmail, Google Search, and other services serve billions of people every day with our infrastructure,” explains the Google Cloud team.

With Ironwood, this experience translates into an AI-as-a-Service offering that will allow any company to deploy powerful models without building their own infrastructure. Google takes care of everything: from cooling to scaling, security, and cost optimization.

Conclusion: Ironwood is the Future of Inferential AI

Ironwood is not just another chip. It is the culmination of a decade of leadership in AI computing and a bridge to the next stage of artificial intelligence, where models think, reason, and act.

With significant improvements in power, memory, networking, and efficiency, Ironwood marks a turning point in cloud infrastructure for artificial intelligence. It points to a future in which training and serving models is no longer a barrier but a competitive advantage for those who rely on the cloud as a platform for innovation.

Source: Google blog