Intel has announced its participation in the creation of the Open Platform for Enterprise AI (OPEA), an initiative led by the LF AI & Data Foundation to unify the developer community around the advancement of generative artificial intelligence (GenAI) systems. The project aims to establish an open and interoperable standard for secure and efficient GenAI deployments, starting with retrieval-augmented generation (RAG).

During the Intel Vision 2024 event, Intel CEO Pat Gelsinger highlighted the challenges facing the industry and expressed Intel’s intention to lead the creation of an open industry platform for enterprise AI. OPEA is a significant step in this direction, and Intel has been committed to the project from its early stages.

In the initial phase, Intel plans to publish a technical conceptual framework and release reference implementations for GenAI pipelines, using secure solutions based on Intel® Xeon® processors and Intel® Gaudi® AI accelerators. Intel will also continue to expand infrastructure capacity in the Intel® Tiber™ Developer Cloud for ecosystem development, AI acceleration, and validation of RAG and future pipelines.

The OPEA initiative aims to address the lack of de facto standards that would let companies choose and deploy open, interoperable RAG solutions. The work includes standardizing components such as frameworks, architectural blueprints, and reference solutions that demonstrate performance, interoperability, reliability, and enterprise-grade readiness.

Intel, in collaboration with industry partners, has developed OPEA as a sandbox-level project within the LF AI & Data Foundation. OPEA’s mission is to facilitate the creation of robust, composable GenAI solutions that draw on the best innovation across the ecosystem.

Melissa Evers, Vice President of the Software and Advanced Technology Group and General Manager of Execution Strategy at Intel Corporation, stated that OPEA will address critical points in RAG adoption today and define a platform for developers’ future innovation phases.

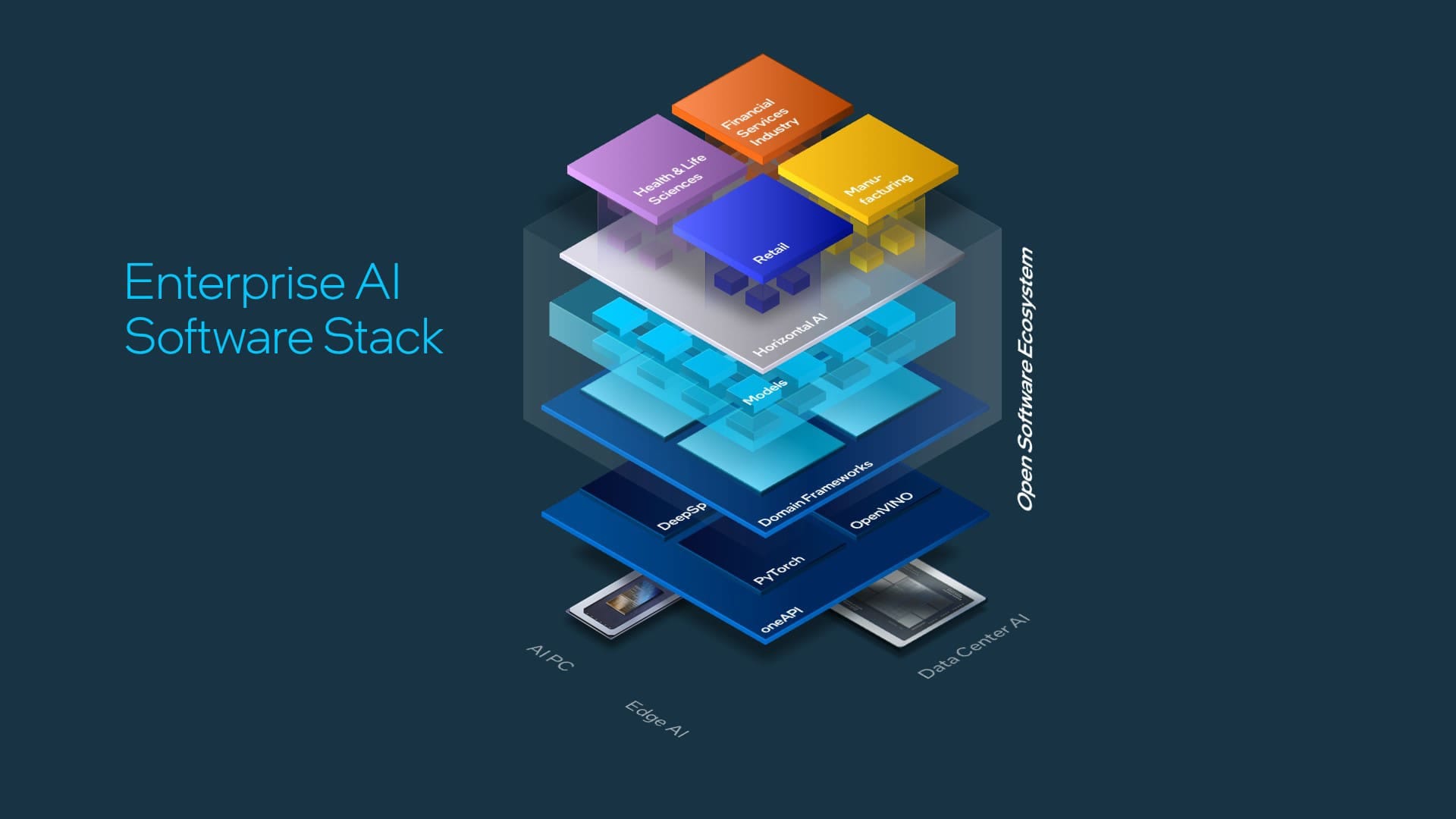

The OPEA framework provides a comprehensive and straightforward view of the GenAI workflow. It covers GenAI models such as large language models and large vision models, model serving, vector and graph data stores, query engines, and memory systems, among other components.
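To make the workflow components above concrete, here is a minimal sketch of a RAG pipeline in plain Python. It does not use OPEA code or any OPEA API: the names `VectorStore`, `embed`, `build_prompt`, and `generate` are hypothetical, the bag-of-words "embedding" stands in for a real embedding model, and `generate` is a placeholder for a call to a model-serving endpoint.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding (assumption): word counts instead of a learned vector."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorStore:
    """Hypothetical in-memory stand-in for a vector data store."""

    def __init__(self):
        self.items = []  # list of (document, embedding) pairs

    def add(self, doc):
        self.items.append((doc, embed(doc)))

    def search(self, query, k=2):
        """Return the k documents most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda it: cosine(q, it[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]


def build_prompt(question, passages):
    """Combine retrieved passages and the question into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {question}"


def generate(prompt):
    """Placeholder for a model-serving call; returns a stub reply."""
    return f"[stub reply to a prompt of {len(prompt)} characters]"


if __name__ == "__main__":
    store = VectorStore()
    store.add("Gaudi accelerators speed up AI training and inference")
    store.add("Vector stores hold embeddings for retrieval")
    store.add("Xeon processors run general purpose workloads")

    question = "Which store holds embeddings?"
    prompt = build_prompt(question, store.search(question, k=1))
    print(prompt)
    print(generate(prompt))
```

Each function maps to one workflow stage (embedding, storage and retrieval, prompt assembly, generation), so any stage can be swapped for a production component without touching the others, which is the kind of composability the framework is aiming for.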

This framework is complemented by an evaluation system that Intel has already made available in the OPEA GitHub repository. The system supports evaluation of performance, reliability, scalability, and resilience, helping to verify that solutions are fit for enterprise use.

With the support of a wide range of partners, including Anyscale, Cloudera, and IBM, OPEA is positioned to advance GenAI implementation by providing a standardized platform for evaluating, developing, and deploying solutions. Intel is committed to collaborating openly with the open-source community to enhance and expand OPEA, accelerating the value of GenAI for enterprises.