The industry is still unprepared for the change.

Data centers are critical infrastructure that underpins the digital economy. They process everything from video calls and Netflix streaming to financial transactions and AI model training. However, the energy demand of new processors and GPUs is reaching unprecedented levels, and traditional air cooling methods are falling short.

In this context, immersion cooling, which until recently was considered an exotic technology reserved for supercomputers or cryptocurrency mining, emerges as the only viable medium-term alternative. But the industry, still tied to outdated paradigms, isn’t ready to adopt it on a large scale.

Air’s Limit: Why Traditional Methods Are No Longer Enough

For decades, data center design has relied on air cooling systems: cold and hot aisles, raised floors, high-powered fans, and sophisticated climate control strategies.

Despite its longevity, this model is reaching its limits:

- Increasing density: AI and HPC racks now routinely reach 50 to 100 kW, heat loads that are impractical to dissipate with air alone.

- More powerful processors: GPUs like NVIDIA Blackwell or AMD MI300 draw roughly 700 to 1,000 watts per chip.

- Regulatory demands: Europe is pushing for energy efficiency targets with PUE (power usage effectiveness) values close to 1.1, which are very difficult to achieve with conventional climate systems.

The result is clear: although air cooling is affordable and well understood, it is no longer sufficient for the new era of intensive computing.
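To give a rough sense of why rack density strains air cooling, the sketch below applies the standard sensible-heat relation (heat load = air density × volumetric flow × specific heat × temperature rise) to the 50 and 100 kW racks mentioned above. The air properties and the 15 K inlet-to-outlet temperature rise are assumptions chosen for illustration, not figures from this article, and real designs depend on many other factors.

```python
# Sensible-heat estimate: P = rho * V * cp * dT, solved for the volumetric airflow V.
AIR_DENSITY_KG_M3 = 1.2      # assumed: air near 20 °C at sea level
AIR_CP_J_PER_KG_K = 1005.0   # assumed: specific heat of air at constant pressure
M3S_TO_CFM = 2118.88         # 1 m^3/s expressed in cubic feet per minute


def required_airflow_m3s(heat_load_w: float, delta_t_k: float) -> float:
    """Airflow (m^3/s) needed to carry heat_load_w with an air temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_load_w / (AIR_DENSITY_KG_M3 * AIR_CP_J_PER_KG_K * delta_t_k)


delta_t = 15.0  # assumed inlet-to-outlet temperature rise, in kelvin
for rack_kw in (50, 100):  # rack densities cited in the text
    flow = required_airflow_m3s(rack_kw * 1000, delta_t)
    print(f"{rack_kw} kW rack: {flow:.2f} m^3/s (~{flow * M3S_TO_CFM:,.0f} CFM)")
```

Under these assumptions, a single 100 kW rack needs about 5.5 m^3/s of air, and the required flow scales linearly with rack power. A smaller temperature rise pushes the figure higher still.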

How Immersion Cooling Works and Its Benefits

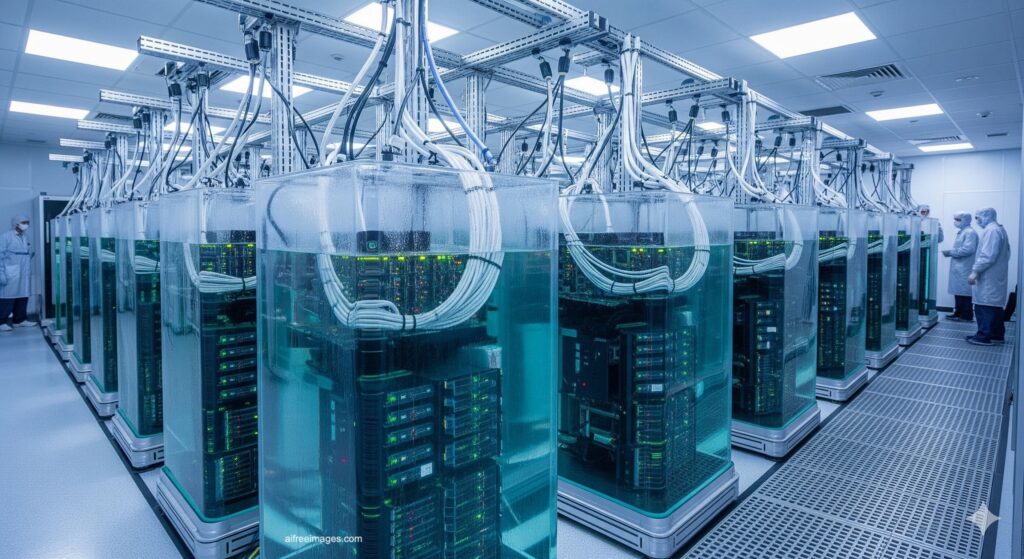

Immersion cooling involves submerging servers in dielectric (electrically non-conductive) fluids that absorb heat directly from the electronic components.

Its advantages include:

- Energy efficiency: PUE values below 1.05 are achievable.

- Higher rack density: supports configurations exceeding 100 kW, essential in AI clusters.

- Less noise and vibration: removing fans eliminates a major source of both, which can extend equipment lifespan.

- Sustainability: reduces water consumption compared to traditional cooling towers and enables the reuse of residual heat in urban networks or industrial processes.
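The PUE figures quoted in this article are easier to interpret with the metric's definition in hand: PUE is total facility power divided by the power delivered to IT equipment, so everything above 1.0 is overhead such as cooling and power distribution. The following minimal sketch compares the two PUE values mentioned in the text. The 1,000 kW IT load is a hypothetical input chosen only to make the arithmetic easy to read.

```python
def pue(total_facility_kw: float, it_load_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_load_kw <= 0:
        raise ValueError("it_load_kw must be positive")
    return total_facility_kw / it_load_kw


def overhead_kw(it_load_kw: float, pue_value: float) -> float:
    """Non-IT power (cooling, power distribution, etc.) implied by a given PUE."""
    if pue_value < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_kw * (pue_value - 1.0)


it_load_kw = 1000.0  # hypothetical IT load, for illustration only
for target in (1.10, 1.05):  # PUE values cited in the text
    print(f"PUE {target:.2f}: {overhead_kw(it_load_kw, target):.0f} kW of non-IT overhead")
```

With these inputs, moving from a PUE of 1.1 to 1.05 halves the overhead, from about 100 kW to about 50 kW per megawatt of IT load.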

Companies like Submer, GRC, and Asperitas have already demonstrated viability in pilot projects. Even giants such as Microsoft, Meta, and Alibaba are conducting large-scale tests.

Why Isn’t It Widespread Yet?

Despite its benefits, immersion cooling currently accounts for less than 1% of global installed capacity.

Several barriers exist:

- Ecosystem inertia: racks, servers, and data centers are designed for air, not liquids.

- High initial costs: the upfront investment can be higher, although operational savings become clear in the medium term.

- Non-standardized fluids: dielectric liquids are expensive, and consensus on durability and recycling is lacking.

- Cultural risk aversion: companies hesitate to submerge critical hardware valued at millions of dollars.

- Limited manufacturer support: major OEMs like Dell, HPE, and Supermicro are just beginning to certify immersion-ready equipment.
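The cost barrier can be framed as a simple payback calculation. The sketch below uses the ranges this article cites in its FAQ (an upfront premium of 20% to 40% over air cooling, recouped in 3 to 5 years) to work out the annual savings that such a payback would imply. The baseline capital cost is a hypothetical placeholder, and the model ignores discounting, financing, and changes in energy prices.

```python
def payback_years(extra_capex: float, annual_savings: float) -> float:
    """Simple (undiscounted) payback period for an upfront cost premium."""
    if annual_savings <= 0:
        raise ValueError("annual_savings must be positive")
    return extra_capex / annual_savings


def required_annual_savings(extra_capex: float, target_years: float) -> float:
    """Annual savings needed to recover extra_capex within target_years."""
    if target_years <= 0:
        raise ValueError("target_years must be positive")
    return extra_capex / target_years


baseline_capex = 10_000_000.0  # hypothetical air-cooled build cost, for illustration only
for premium in (0.20, 0.40):   # premium range cited in the article's FAQ
    extra = baseline_capex * premium
    for years in (3, 5):       # payback range cited in the article's FAQ
        needed = required_annual_savings(extra, years)
        print(f"premium {premium:.0%}, payback {years} y: "
              f"savings of {needed:,.0f} per year required")
```

The same functions can be run in the other direction: `payback_years(3_000_000, 750_000)` returns 4.0, meaning a premium of 3 million is recovered in four years if operations save 750,000 per year.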

An Increasingly Inevitable Future

Physics doesn’t lie: air cannot dissipate the heat generated by future data centers. Therefore, immersion isn’t a passing trend but a technical necessity that will become dominant.

Forecasts suggest the immersion cooling market could grow by more than 20% annually through 2030. However, this pace might be insufficient compared with AI electricity consumption, which could triple or quadruple before the end of the decade.
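A quick compound-growth check shows how the two rates compare. This minimal sketch assumes exactly 20% annual growth and a five-year horizon, which is an assumption since the article does not state a starting year. Market size and electricity consumption are different quantities, so only the multipliers are being compared.

```python
import math


def growth_multiple(annual_rate: float, years: float) -> float:
    """Total multiple reached after compounding annual_rate for the given number of years."""
    return (1.0 + annual_rate) ** years


def years_to_multiple(annual_rate: float, multiple: float) -> float:
    """Years of compounding at annual_rate needed to reach the given multiple."""
    if annual_rate <= 0 or multiple <= 0:
        raise ValueError("annual_rate and multiple must be positive")
    return math.log(multiple) / math.log(1.0 + annual_rate)


rate = 0.20   # annual growth rate cited in the text
horizon = 5   # assumed number of years; the article does not give a start year
print(f"{rate:.0%} per year for {horizon} years -> x{growth_multiple(rate, horizon):.2f}")
for target in (3, 4):  # multiples cited for AI electricity consumption
    print(f"years needed to reach x{target}: {years_to_multiple(rate, target):.1f}")
```

At 20% per year, five years of compounding yields a multiple of roughly 2.5, and it takes about six years to triple and between seven and eight to quadruple.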

This raises a question: will the industry adapt in time to prevent a “cooling crisis”?

What’s Needed to Accelerate Adoption

For immersion cooling to become standard, experts highlight several essential steps:

- Standardization of fluids and equipment, with clear standards to reduce perceived risks.

- Optimized hardware designs, built for immersion from the ground up, rather than retrofitted.

- Training for technicians and operators so they can maintain liquid-cooled infrastructure.

- Regulatory incentives, especially in Europe and the U.S., where climate targets pressure the industry to improve energy efficiency.

Conclusion: The Future Is Already Underwater

Immersion cooling is the inevitable answer to the thermal challenges of AI, high-performance computing, and hyperscale cloud services. The question is no longer if it will be widely adopted but when and at what pace.

What today seems like an exotic technology will, in a few years, become an essential standard for keeping the digital economy running.

Frequently Asked Questions (FAQ) about Immersion Cooling

1. What’s the difference between traditional liquid cooling and immersion?

Conventional liquid cooling uses closed-loop water or glycol circuits that pass through cold plates on CPUs and GPUs. In contrast, immersion submerges entire servers in dielectric fluids, achieving higher efficiency and eliminating fans.

2. How much does implementing immersion cooling cost?

Initial investments can be 20% to 40% higher than for air systems, but savings in electricity, maintenance, and space allow costs to be recouped within 3 to 5 years, depending on data center density.

3. Is it safe for electronic equipment?

Yes. The fluids used are non-conductive and designed not to damage components. In fact, some studies indicate that server lifespan increases thanks to reduced vibration and dust exposure.

4. What is the environmental impact?

Highly positive: it reduces water consumption, improves energy efficiency, and facilitates heat recovery for heating systems or industrial processes.

5. When will it be widely adopted?

Analysts believe a turning point will occur between 2027 and 2030, driven by AI workloads and regulatory pressures.