Hewlett Packard Enterprise Unveils Innovations in Accelerated Computing, Storage, and Observability Optimized for the Validated NVIDIA Enterprise AI Factory Architecture

At Computex 2025, Hewlett Packard Enterprise (HPE) announced a significant expansion of its collaboration with NVIDIA to shape the next generation of enterprise AI factories. The joint offering includes enhancements to storage, servers, private cloud, and management software, all aimed at covering the full AI lifecycle, from data ingestion to training and inference.

“We are building the foundation for companies to leverage intelligence as a new industrial resource, from the data center to the cloud and the edge,” stated Jensen Huang, founder and CEO of NVIDIA.

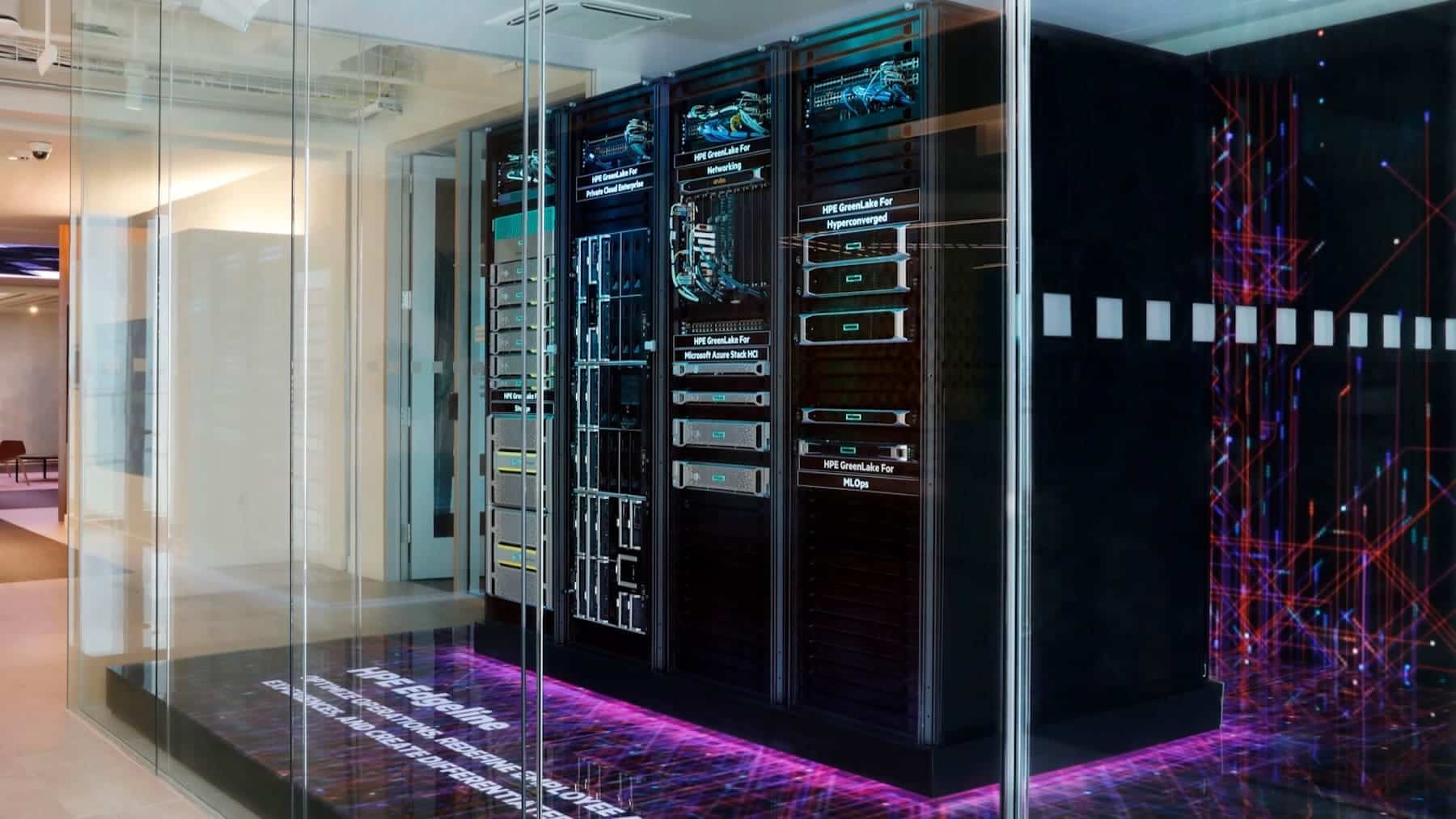

HPE Private Cloud AI: Scalable Private AI with Security and Speed

HPE Private Cloud AI, developed in collaboration with NVIDIA, is a private cloud solution for AI that integrates accelerated computing with NVIDIA tools such as NVIDIA NIM, SDKs, and pre-trained models. Starting in summer 2025, the platform will also support “feature branch” updates of NVIDIA AI Enterprise, allowing developers to try new features in validation environments before moving them to production.

The infrastructure is optimized for generative AI (GenAI) and agentic AI applications and aligns with the NVIDIA Enterprise AI Factory validated design.

New HPE Alletra Storage MP X10000 with SDK for NVIDIA AI Data Platform

HPE also announced an integration between its new storage solution, HPE Alletra Storage MP X10000, and the NVIDIA AI Data Platform. The SDK will provide advanced capabilities such as:

- Vector indexing and metadata enrichment.

- Remote direct memory access (RDMA) transfers between GPUs and storage.

- Modular composition for scaling capacity and performance separately.

This integration streamlines the processing of unstructured data for inference, training, and continuous learning, which is essential in environments where agentic AI needs real-time access to reliable, contextualized data.

HPE ProLiant Compute DL380a Gen12 Server: Benchmark-Validated Power

The HPE ProLiant Compute DL380a Gen12 server, which has led over 50 MLPerf v5.0 benchmark tests, will be available with up to 10 NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs starting June 4. Features include:

- Air-cooled and direct liquid cooling (DLC) options.

- Enhanced security with iLO 7 and post-quantum cryptography readiness.

- Advanced operations management with HPE Compute Ops Management.

This server joins other leading models in the range, such as the HPE ProLiant Compute DL384 Gen12 with NVIDIA GH200 NVL2 and the HPE Cray XD670, which topped 30 inference benchmarks for LLMs and computer vision.

Total Observability with HPE OpsRamp

HPE OpsRamp, HPE's SaaS-based IT operations management software, adds support for the new RTX PRO 6000 Blackwell GPUs. Highlighted features include:

- Monitoring GPU performance and energy consumption.

- Automating responses to critical events (such as overheating).

- Predicting resource needs.

- Managing distributed workloads in hybrid environments.

Through direct integration with NVIDIA networking and accelerated computing technologies such as BlueField, Quantum InfiniBand, and Base Command Manager, HPE offers a comprehensive platform for full-stack AI observability.
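
As an illustration of the kind of automated response to critical events mentioned above, the following is a minimal Python sketch of threshold-based handling of GPU telemetry. It does not use or represent the HPE OpsRamp API; the `GpuSample` type, the `plan_responses` function, and the threshold values are hypothetical and chosen only for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GpuSample:
    """One telemetry reading for a single GPU (hypothetical structure)."""
    gpu_id: str
    temperature_c: float
    power_w: float


# Illustrative thresholds only; real limits depend on the hardware and policy.
TEMP_LIMIT_C = 85.0
POWER_LIMIT_W = 600.0


def plan_responses(samples: List[GpuSample]) -> List[str]:
    """Return one suggested action per GPU that crosses a threshold."""
    actions = []
    for s in samples:
        if s.temperature_c >= TEMP_LIMIT_C:
            # Overheating takes priority over power alerts.
            actions.append(
                f"{s.gpu_id}: throttle workload (temperature {s.temperature_c:.0f} C)"
            )
        elif s.power_w >= POWER_LIMIT_W:
            actions.append(f"{s.gpu_id}: raise power alert ({s.power_w:.0f} W)")
    return actions


if __name__ == "__main__":
    readings = [
        GpuSample("gpu0", 90.0, 400.0),
        GpuSample("gpu1", 60.0, 300.0),
    ]
    for action in plan_responses(readings):
        print(action)
```

A production observability platform would typically combine rules like these with historical trends and forecasting rather than fixed thresholds alone.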

Availability

- HPE Private Cloud AI with support for “feature branch” updates of NVIDIA AI Enterprise will be available in summer 2025.

- The SDK for HPE Alletra Storage MP X10000 will also arrive in summer 2025.

- The HPE ProLiant Compute DL380a Gen12 with RTX PRO 6000 Blackwell Server Edition GPUs can be ordered starting June 4.

- HPE OpsRamp support for the new GPUs will be available at launch.