The race for HBM4 memory—the “gasoline” that fuels AI GPUs—is entering a particularly delicate phase: the final touches before the ultimate validation. In this battleground, the current market leader, SK Hynix, has once again moved strategically with a new batch of improved samples for Nvidia, aiming to close the qualification process as soon as possible within the first quarter. At the same time, Samsung Electronics is emerging as a serious contender to enter Nvidia’s supply chain with its 12-layer HBM4, showing signs that it could be close to starting production for customers within a few weeks.

Behind these headlines lies a less glamorous, yet crucial reality: in stacked memory like HBM, the generational leap isn’t achieved solely with “more performance.” It is gained through a combination of performance, reliability, and manufacturability. This balance explains why SK Hynix keeps revising its final samples, even after delivering Customer Samples (CS) and redesigning circuits following SiP (System-in-Package) tests.

What does “qualifying” HBM4 with Nvidia mean (and why are the revisions repeated)?

Validation with a client like Nvidia isn’t a mere formality; it’s a rigorous filter. HBM memories are stacked, interconnected, and integrated into a complex package where each adjustment—latencies, signal integrity, thermal stability, behavior under load, voltage margins—can impact performance… and above all, performance at scale.

Therefore, a “working” sample doesn’t necessarily mean it’s ready. It can perform well in the lab but fall short when it comes to consistent manufacturing, acceptable yield, and meeting the speed and power targets within the client’s requirements. In this context, the industry expects repeated rounds of optimization: fixing issues identified in package tests (SiP), fine-tuning margins to avoid penalizing yield, and adjusting designs to meet specific client requests.

For SK Hynix, the picture is one of final polishing: the company continues to deliver revised samples (including paid samples) while also submitting an even more refined iteration for qualification testing, with results expected within the first quarter.

Nvidia can’t wait: “Rubin” needs a stable supply ramp

The schedule is driven by Nvidia’s upcoming platform. The company is preparing the transition to “Rubin,” its next generation, and, according to market signals, the goal isn’t just to arrive first but to arrive with volume. This is where SK Hynix plays a key role: although Samsung may be ahead in qualification pace, SK Hynix aims to hold a larger share of the total supply throughout the year thanks to volume contracts it has already signed.

Meanwhile, the sector’s reliance on advanced memory supply chains for AI becomes increasingly evident. As AI infrastructure demand intensifies, HBM suppliers are becoming strategic players, and any bottleneck—be it capacity, validation, or packaging—translates directly into delays and increased costs.

Samsung intensifies: 12 layers and ambitions to join the “Nvidia club”

Samsung, for its part, has stepped up its public statements about HBM4 and its competitiveness, and the market reads this as a sign that it could soon start production for customers if the remaining steps are completed.

Beyond marketing, the move makes strategic sense: in HBM, being part of the winning platform is half the battle. If Nvidia pushes “Rubin” toward production, the reward for the supplier that establishes itself as a second source, or a capable alternative, is significant. The competitive dynamics themselves suggest that even when one supplier leads, customers diversify to reduce risk and increase bargaining power.

Volume, bargaining power, and the price factor

Another sensitive point is price. The market expects HBM4 to cost more than its predecessor—not only due to technical complexity but also because of demand conditions and advanced packaging pressures. Sector estimates place the price of a 12-layer HBM4 above $600, and discussions are already pointing toward price parity among suppliers—an important shift from earlier stages where significant price gaps existed between competitors.

This detail matters for a simple reason: HBM is one of the components most heavily influencing the overall cost of an AI GPU, and consequently, the cost per token, training, or inference in data centers. In other words, this isn’t just a technological race; it’s a battle over the margin throughout the entire supply chain.
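As a rough illustration of that cost weight, the sketch below multiplies the sector estimate quoted above (roughly $600 for a 12-layer HBM4) by a number of stacks per GPU. Treating the figure as a per-stack price, the stack counts, and the function name are all assumptions made for illustration; none of them come from Nvidia or the memory suppliers.

```python
def hbm_cost_per_gpu(price_per_stack_usd: float, stacks_per_gpu: int) -> float:
    """Return the total HBM cost for one GPU package, in USD."""
    if price_per_stack_usd < 0 or stacks_per_gpu < 0:
        raise ValueError("price and stack count must be non-negative")
    return price_per_stack_usd * stacks_per_gpu


# Lower bound taken from the sector estimate quoted in the article,
# assumed here to be a per-stack price.
HBM4_PRICE_FLOOR_USD = 600.0

# Hypothetical stack counts; real configurations are not given in the article.
ASSUMED_STACK_COUNTS = (4, 8, 12)

if __name__ == "__main__":
    for stacks in ASSUMED_STACK_COUNTS:
        cost = hbm_cost_per_gpu(HBM4_PRICE_FLOOR_USD, stacks)
        print(f"{stacks} stacks -> at least ${cost:,.0f} of HBM per GPU")
```

Because the cost scales linearly with the stack count, even a small per-stack price change is multiplied across every GPU in a data center.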

The quiet role of TSMC and the “faster vs more manufacturable” dilemma

The final stretch of optimization often involves difficult decisions. If the customer demands higher speed, the design might be pushed further, but that can strain manufacturing yields. A topic increasingly raised in industry discussions is coordination across the supply chain, including foundries and packaging partners, to maintain a “safety margin” and prevent performance improvements from turning into supply issues.
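One way to see why yield is so sensitive in a stacked part is a deliberately simplified model: assume a stack is good only if every one of its DRAM dies is good, and that die failures are independent. The stack yield is then the per-die yield raised to the number of layers. The per-die yields below are hypothetical, and the model ignores base-die losses, bonding losses, and pre-stack die screening, so it is a sketch rather than a description of any supplier's process.

```python
def stack_yield(die_yield: float, layers: int = 12) -> float:
    """Yield of a stack in which every die must be good (independent failures)."""
    if not 0.0 <= die_yield <= 1.0:
        raise ValueError("die_yield must be between 0 and 1")
    if layers < 1:
        raise ValueError("layers must be at least 1")
    return die_yield ** layers


if __name__ == "__main__":
    # Hypothetical per-die yields, chosen only to show the compounding effect.
    for die_yield in (0.99, 0.97, 0.95):
        print(f"die yield {die_yield:.2f} -> 12-layer stack yield "
              f"{stack_yield(die_yield):.3f}")
```

Under these assumptions, a small drop in per-die yield from a more aggressive speed target compounds across all 12 layers, which is why a “safety margin” matters for volume.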

Ultimately, success will depend not just on delivering the first passing demo sample, but on getting a product that passes qualification, scales in volume, and maintains stability. That’s why, in HBM4, repeated revisions are not signs of weakness but signals that the product has entered the critical phase where everything is decided.

What’s next?

If qualification is achieved within the first quarter, the market will move into a second phase: real ramp-up, with contracts, committed capacity, and a supply chain tasked with accommodating a generation expected to require even more memory.

Meanwhile, Nvidia needs the memory to support the ambitions of “Rubin.” Samsung must demonstrate that its HBM4 isn’t just competitive but supplyable. SK Hynix, on the other hand, aims to maintain its leadership not just through technological prowess but with an equally crucial strength: being the supplier that, when the time comes, can say “yes, and I have volume.”

Frequently Asked Questions

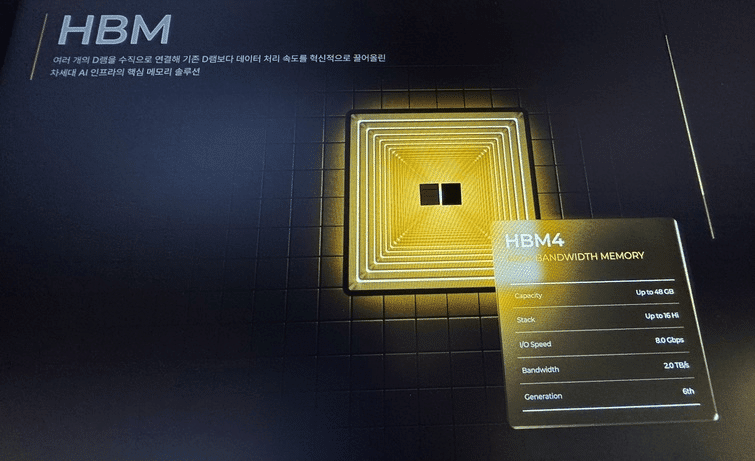

What is HBM4 memory, and why is it so important for AI GPUs?

HBM4 is a generation of high-bandwidth, 3D-stacked memory designed to deliver immense speed and energy efficiency. In AI, it supplies accelerators with data at a rapid pace, preventing bottlenecks that limit actual performance.

What does it mean for Nvidia to “qualify” an HBM4?

It means the memory passes a series of technical and reliability tests within a specific package and platform. It’s not enough for it to just work; it must operate with stability, sufficient margins, and consistency for large-scale production and deployment.

Why are samples repeatedly reviewed before mass production?

Because the final step involves balancing speed, power consumption, thermal stability, and manufacturing yield. Pushing performance can reduce yield, which in turn affects volume and pricing sustainability.

How does the price of HBM4 affect the cost of training or serving AI models?

HBM is a critical and costly component of AI hardware. If its price rises or availability shrinks, the cost of systems increases, impacting the price per training session or inference and directly influencing the economics of deploying models.

via: dealsite.co.kr